DeepLabCut: A Comprehensive Guide to Markerless Pose Estimation in Preclinical Neuroscience and Drug Discovery


Abstract

This article provides a definitive guide to DeepLabCut (DLC), a premier open-source toolbox for markerless animal pose estimation, tailored for neuroscientists and drug development researchers. We explore its core principles as a transfer learning framework that democratizes access to deep-learning-based behavioral analysis. A detailed methodological walkthrough covers experimental design, efficient labeling, network training, and deployment for high-throughput analysis. Critical troubleshooting advice addresses common pitfalls in prediction accuracy, speed, and generalization. Finally, we validate DLC's performance against commercial alternatives and manual scoring, highlighting its reproducibility and transformative potential for quantifying complex, naturalistic behaviors in models of neurological and psychiatric disorders, thereby accelerating translational research.

What is DeepLabCut? Demystifying Markerless Tracking for Behavioral Neuroscience

DeepLabCut (DLC) represents a paradigm shift in behavioral quantification for neuroscience and drug development. Its core philosophy moves beyond traditional marker-based or manual tracking by leveraging deep learning to enable markerless pose estimation from standard video recordings. This allows for the precise, high-throughput analysis of naturalistic animal behaviors, which is critical for modeling psychiatric and neurological diseases, screening pharmacological interventions, and uncovering neural circuit mechanisms.

Core Philosophical Tenets of DLC

DLC is built on several foundational principles that distinguish it from other tools:

- Accessibility through Transfer Learning: DLC democratizes deep learning by building on convolutional neural network (CNN) backbones (e.g., ResNet, EfficientNet) pre-trained on large, general-purpose image datasets (ImageNet). Through transfer learning, these networks can be fine-tuned with a small set of user-labeled frames (typically 100-200) from a new animal and still reach high accuracy. This removes the need for vast, species-specific datasets.

- Generalization and Robustness: The framework aims to generalize across subjects and experimental setups, and, with some additional training, across related species, provided the visual features are reasonably consistent. When the labeled frames span the relevant variability, robustness to changes in lighting, posture, and partial occlusion is a key advantage for long-term or complex behavioral assays.

- Open-Source, Modular Ecosystem: As an open-source project, DLC fosters reproducibility, customization, and community-driven development. Its modular Python-based API allows researchers to integrate it into larger pipelines for neural data alignment, complex behavioral analysis, and closed-loop stimulation.

- From Keypoints to Behavioral Phenotypes: The ultimate goal is not just to generate (x, y) coordinates. DLC positions itself as the first, crucial step in a pipeline that transforms raw video into quantifiable, interpretable behavioral phenotypes. These phenotypes then serve as the behavioral readout against which neural activity is correlated or perturbed.

Technical Advantages & Comparative Performance

The advantages of DLC are best illustrated by comparing it to traditional methods and highlighting key performance metrics from recent studies.

Table 1: Comparative Analysis of Behavioral Tracking Methods

| Method | Required Animal Preparation | Typical Throughput | Labor Intensity | Scalability to Groups | Natural Behavior Disruption |
|---|---|---|---|---|---|
| Manual Scoring | None | Very Low (real-time) | Extremely High | Low | None |
| Physical Markers | Dyes, implants | Medium | Medium (setup) | Low-Medium | High |
| Traditional CV (Background Subtraction) | None | High | Low (post-processing) | High | None |
| DeepLabCut (Markerless DLC) | None | Very High | Low (after training) | Very High | None |

Table 2: Quantitative Performance Benchmarks of DLC in Recent Studies

| Study Focus (Year) | Key Species | Training Frames Used | Reported Accuracy (Mean Pixel Error) | Key Advantage Demonstrated |
|---|---|---|---|---|
| Social Behavior Analysis (2023) | Mice (group of 4) | ~200 per mouse | < 5 px (HD video) | Robust identity tracking in dense, occluded settings. |
| Pharmacological Screening (2022) | Zebrafish larvae | 150 | ~2 px (approx. 0.5% body length) | High sensitivity to subtle drug-induced locomotor changes. |
| Neural Correlation - Freely Moving (2024) | Rat | 100 | 3.8 px | Millisecond-accurate alignment with wireless neural recordings. |
| Cross-Species Generalization (2023) | From Mouse to Rat | 50 (fine-tuning) | < 8 px | Effective transfer learning across related species. |

Experimental Protocol: Implementing DLC for a Drug Screening Study

This protocol outlines a typical workflow for assessing drug effects on rodent behavior.

A. Video Acquisition:

- Setup: Use a standardized arena (e.g., open field, elevated plus maze) with consistent, diffuse overhead lighting. Use cameras recording at 30-100 fps (the upper end for fast movements), placed orthogonally to the arena plane. Keep the background free of visual clutter.

- Recording: Record vehicle-control and drug-treated animals (e.g., following administration of a novel psychoactive compound or a classic anxiolytic like diazepam). Each session should be 10-30 minutes. Save videos in a lossless or high-quality compressed format (e.g., .avi, .mp4 with H.264).

B. DeepLabCut Workflow:

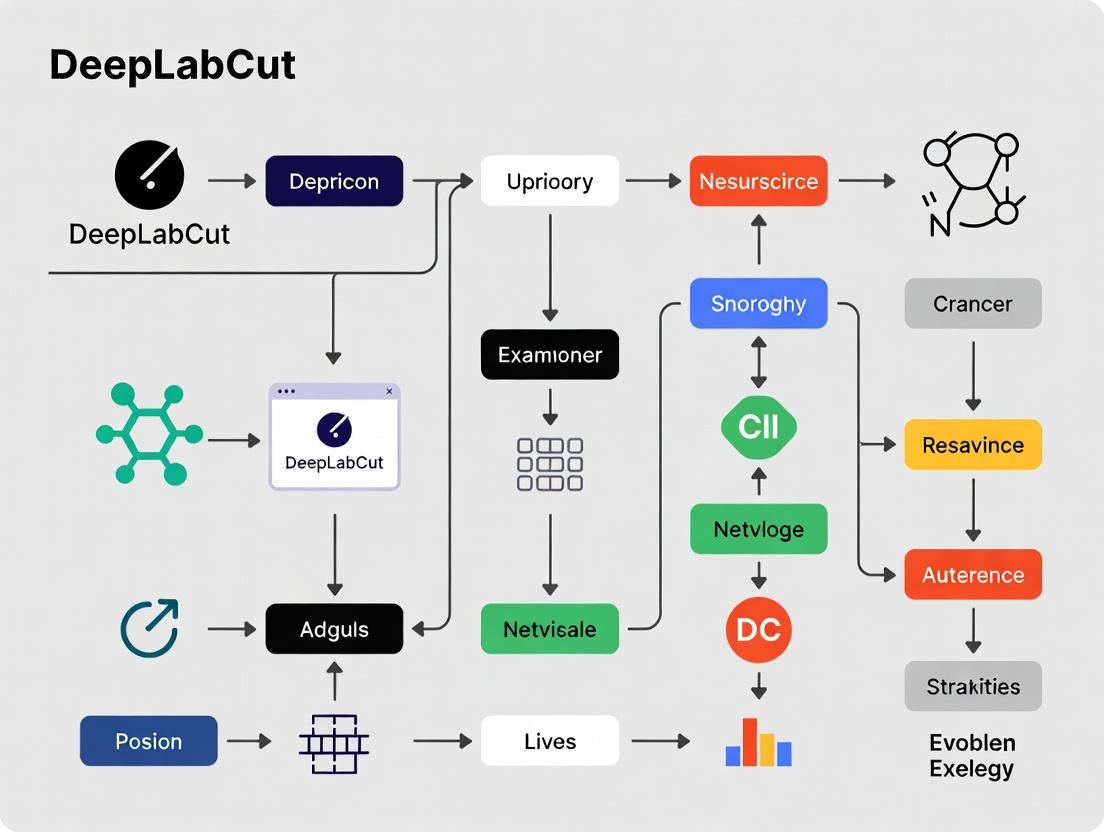

Diagram Title: Standard DeepLabCut Experimental Workflow

C. Detailed Steps:

- Frame Extraction: Use DLC's GUI or API to extract frames from a representative subset of videos across all experimental groups. Select ~200 frames that maximize pose and viewpoint diversity.

- Labeling: Manually annotate key body parts (e.g., snout, ears, tail base, paws) on all extracted frames using the DLC GUI.

- Training: Create a training dataset and configure the network (choose a backbone; ResNet-50 is the default). Initiate training, which typically runs for tens to hundreds of thousands of iterations until the loss plateaus; this can be done on a local CUDA-capable GPU or on cloud services such as Google Colab.

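- Evaluation: Use the built-in evaluation tools to measure performance on the held-out labeled frames (the test split). DLC reports train and test error as the mean Euclidean distance, in pixels, between predicted and manual labels, optionally restricted to predictions above a likelihood cutoff. Create a labeled video from a session not used for training as a visual check, and refine training with more frames if necessary.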

- Analysis: Process all experimental videos with the trained network to obtain time-series data of keypoint locations and confidence scores.
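The detailed steps above correspond to a short sequence of calls in DeepLabCut's Python API. The sketch below assumes a DLC 2.x-style interface (create_new_project, extract_frames, label_frames, create_training_dataset, train_network, evaluate_network, analyze_videos, filterpredictions, create_labeled_video); keyword arguments vary between releases, so check them against the documentation for the installed version. The wrapper function, project name, and video paths are hypothetical, and the optional dlc argument exists only so the call sequence can be dry-run without DLC installed.

```python
from typing import Sequence


def run_dlc_workflow(
    project: str,
    experimenter: str,
    videos: Sequence[str],
    dlc=None,
    label_interactively: bool = True,
) -> str:
    """Run the standard DLC steps in order and return the project config path.

    `dlc` defaults to the real `deeplabcut` package; passing a stand-in object
    lets the call order be dry-run (a hypothetical convenience, not part of DLC).
    """
    if dlc is None:
        import deeplabcut as dlc  # imported lazily so dry runs need no install

    videos = list(videos)
    # 1) Create the project; DLC returns the path to config.yaml.
    config = dlc.create_new_project(project, experimenter, videos, copy_videos=False)
    # 2) Frame extraction: k-means on downsampled frames favors pose diversity.
    dlc.extract_frames(config, mode="automatic", algo="kmeans", userfeedback=False)
    # 3) Manual labeling opens the GUI; skip it when running headless.
    if label_interactively:
        dlc.label_frames(config)
    # 4) Build the training set with a ResNet-50 backbone, then train.
    dlc.create_training_dataset(config, net_type="resnet_50")
    dlc.train_network(config, shuffle=1)
    # 5) Train/test pixel error on the held-out labeled frames.
    dlc.evaluate_network(config, plotting=True)
    # 6) Inference on all experimental videos, smoothing, and QC videos.
    dlc.analyze_videos(config, videos, videotype=".mp4", save_as_csv=True)
    dlc.filterpredictions(config, videos, filtertype="median")
    dlc.create_labeled_video(config, videos, filtered=True)
    return config
```

In practice many labs run each step separately so they can inspect intermediate outputs (for example, the evaluation plots) before analyzing the full video set.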

D. Downstream Behavioral Phenotyping:

- Preprocessing: Filter predictions based on confidence (e.g., discard points with likelihood < 0.9). Smooth trajectories using a median or Savitzky-Golay filter.

- Feature Extraction: Calculate behavioral metrics.

- Kinematics: Velocity, acceleration, movement bouts.

- Posture: Body elongation, spine curvature.

- Arena-Based: Time in center (anxiety), distance traveled (locomotion).

- Social: Inter-animal distance (if multiple animals).

- Statistical Modeling: Apply appropriate statistical tests (e.g., t-tests, ANOVA, linear mixed models) to compare features between drug and vehicle groups, identifying significant behavioral shifts.
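The preprocessing and arena-based features above need only NumPy. This sketch assumes a single tracked point (e.g., a body-center keypoint) loaded as x, y, and likelihood arrays in pixels, a known pixel-to-centimeter scale, and a square arena with one corner at the origin whose center zone is the inner 50% of each side; the function names, thresholds, and zone definition are illustrative assumptions. A running median stands in for the smoothing step, and gaps left by low-confidence points are filled by linear interpolation.

```python
import numpy as np


def clean_track(x, y, likelihood, p_cutoff=0.9, kernel=5):
    """Mask low-confidence points, interpolate the gaps, then median-filter."""
    xy = np.column_stack([x, y]).astype(float)
    xy[np.asarray(likelihood) < p_cutoff] = np.nan
    t = np.arange(len(xy))
    for d in range(2):
        ok = ~np.isnan(xy[:, d])
        if ok.sum() < 2:
            raise ValueError("too few confident points to interpolate")
        xy[:, d] = np.interp(t, t[ok], xy[ok, d])
    # Running median with edge padding; an odd kernel keeps output aligned.
    half = kernel // 2
    padded = np.pad(xy, ((half, half), (0, 0)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, kernel, axis=0)
    return np.median(windows, axis=-1)


def arena_features(xy_px, fps, px_per_cm, arena_cm, center_frac=0.5):
    """Distance traveled (cm), mean speed (cm/s), and time in the center zone (s)."""
    xy_cm = xy_px / px_per_cm
    step = np.linalg.norm(np.diff(xy_cm, axis=0), axis=1)
    # Center zone: square of side center_frac * arena_cm, arena corner at (0, 0).
    lo = arena_cm * (1 - center_frac) / 2
    hi = arena_cm - lo
    in_center = np.all((xy_cm >= lo) & (xy_cm <= hi), axis=1)
    return {
        "distance_cm": float(step.sum()),
        "mean_speed_cm_s": float(step.mean() * fps) if len(step) else 0.0,
        "time_in_center_s": float(in_center.sum() / fps),
    }
```

The per-animal feature dictionaries can then be collected into a table for the group comparisons described in the Statistical Modeling step.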

Signaling Pathway Analysis via Behavioral Deconstruction

DLC data can be used to infer the modulation of neural pathways by drugs or genetic manipulations. The following diagram models how a drug might alter behavior through a specific neural pathway, with each behavioral component quantifiable by DLC-derived features.

Diagram Title: From Drug Target to DLC-Measured Behavioral Phenotype

The Scientist's Toolkit: Essential Research Reagents & Solutions

| Item / Solution | Function in DLC-Centric Research | Example Product / Specification |
|---|---|---|
| High-Speed Camera | Captures fast, nuanced movements for accurate pose estimation. | Basler acA2040-120um (120 fps, global shutter) |
| Behavioral Arena | Provides standardized context for reproducible behavioral assays. | Customizable open-field, Med Associates, Noldus EthoVision arenas |
| Dedicated GPU Workstation | Accelerates DLC model training and video analysis. | NVIDIA RTX 4090/3090 with 24GB+ VRAM, CUDA/cuDNN installed |
| Data Annotation Tool | Core interface for creating training datasets. | DeepLabCut GUI (native), or alternative: SLEAP GUI |
| Behavioral Analysis Suite | For transforming DLC keypoints into interpretable metrics. | DLC-Analyzer, B-SOiD, MARS, Simple Behavioral Analysis (SimBA) |
| Neural Data Acquisition System | To synchronize and correlate DLC pose data with neural activity. | SpikeGadgets Trodes, Intan RHD recording system, Neuropixels |
| Synchronization Hardware | Precisely aligns video frames with neural timestamps. | Arduino-based TTL pulse generator, Neuralynx Sync Box |
| Animal Model | Genetically defined or disease-model subjects for hypothesis testing. | C57BL/6J mice, Long-Evans rats, transgenic lines (e.g., DAT-Cre) |
| Pharmacological Agents | To perturb systems and measure behavioral output via DLC. | Diazepam (anxiolytic), MK-801 (NMDA antagonist), Clozapine (atypical antipsychotic) |

This whitepaper details how transfer learning, a core pillar of modern machine learning, bridges human pose estimation and animal behavior quantification, fundamentally advancing neuroscience research. Within the framework of DeepLabCut (DLC), an open-source toolbox for markerless pose estimation, transfer learning lets researchers start from networks pre-trained on large, general-purpose image datasets (ImageNet by default) and adapt them into accurate, efficient, data-lean models for novel animal species and experimental paradigms. This capability is central to a broader thesis: that DLC democratizes and scales high-throughput, quantitative behavioral phenotyping, transforming hypothesis testing in basic neuroscience and drug development.

Technical Foundation: How Transfer Learning Works in Pose Estimation

Modern pose estimation networks built on CNN backbones (e.g., ResNet, EfficientNet, HRNet) comprise two parts: a backbone (feature extractor) and a head (task-specific output layers). The backbone is pre-trained on millions of general images (e.g., ImageNet), from which it learns hierarchical features (edges, textures, shapes, parts).

Diagram 1: Transfer Learning Workflow for Animal Pose

In transfer learning for DLC:

- Initialization: A pre-trained backbone is loaded. In DLC this is ImageNet weights by default, or a pose model (e.g., one trained on human pose data) from the Model Zoo.

- Adaptation: The head is replaced with new layers predicting animal-specific keypoints.

- Fine-tuning: The entire network (or later layers) is trained on a small, labeled set of animal frames. The pre-learned generic features are adapted to the new domain with far less data.

Quantitative Evidence: Efficiency Gains from Transfer Learning

The power of transfer learning is quantified by the drastic reduction in required labeled training data and training time while achieving high accuracy.

Table 1: Impact of Transfer Learning on Model Performance in DLC

| Experiment Subject | Training Data (No Transfer) | Training Data (With Transfer) | Performance Metric (MAP)* | Training Time Reduction | Source/Key Study |
|---|---|---|---|---|---|
| Mouse (Laboratory) | ~1000 labeled frames | ~200 labeled frames | >0.95 (vs. ~0.85 without transfer) | ~70% | Mathis et al., 2018; Nath et al., 2019 |
| Fruit Fly (Drosophila) | ~500 labeled frames | ~50 labeled frames | >0.90 | ~80% | Pereira et al., 2019, 2022 |
| Zebrafish (Larva) | ~800 labeled frames | ~150 labeled frames | >0.92 | ~65% | Kane et al., 2020 |
| Rat (Social Behavior) | ~1500 labeled frames | ~300 labeled frames | >0.89 | ~60% | Lauer et al., 2022 |

*Mean Average Precision (MAP): A standard metric for keypoint detection accuracy (range 0-1, higher is better).

Detailed Experimental Protocols

Protocol A: Establishing a New DLC Project with Transfer Learning

- Video Acquisition: Record high-quality, high-resolution videos of the animal under appropriate lighting.

- Frame Extraction: Extract a diverse, representative set of frames (~50-200) covering the full behavioral repertoire and camera views.

- Labeling: Manually annotate keypoints (body parts) on the extracted frames using the DLC GUI.

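- Network Configuration: Define the new keypoint names and number under bodyparts in the project's config.yaml. To start from specific pre-trained weights (e.g., a ResNet-50 trained on COCO), set init_weights: path/to/pretrained/network in the pose_cfg.yaml that DLC generates for each training shuffle.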

- Training: Execute training. The process will fine-tune the pre-trained weights on the new animal labels.

- Evaluation: Use the built-in evaluation tools to compute test error and create labeled videos to visually assess performance.
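The frame-extraction step in this protocol (and DLC's k-means extraction mode) rests on a simple idea: cluster downsampled frames by appearance and take one frame per cluster, so the labeled set spans distinct postures rather than many near-duplicates. The sketch below is an illustrative NumPy re-implementation, not DLC's code: it uses farthest-point initialization, picks the frame nearest each centroid (DLC instead samples within clusters), and assumes grayscale frames already loaded into memory.

```python
import numpy as np


def select_diverse_frames(frames, n_select, downsample=4, n_iter=30, seed=0):
    """Return sorted indices of n_select visually diverse frames.

    frames: array of shape (n_frames, height, width), grayscale; n_select <= n_frames.
    """
    small = frames[:, ::downsample, ::downsample].reshape(len(frames), -1).astype(float)
    small -= small.mean(axis=0)  # center so clustering focuses on frame-to-frame variation
    # Farthest-point initialization: spread the starting centroids apart.
    rng = np.random.default_rng(seed)
    centers = [small[rng.integers(len(small))]]
    for _ in range(1, n_select):
        d2 = np.min([((small - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(small[d2.argmax()])
    centers = np.array(centers)
    # Lloyd iterations: assign to nearest centroid, then recompute centroids.
    for _ in range(n_iter):
        d2 = ((small[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for k in range(n_select):
            members = small[labels == k]
            if len(members):
                centers[k] = members.mean(axis=0)
    # One representative per cluster: the unused frame closest to its centroid.
    d2 = ((small[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    picks = set()
    for k in range(n_select):
        for i in np.argsort(d2[:, k]).tolist():
            if i not in picks:
                picks.add(i)
                break
    return sorted(picks)
```

The pairwise-distance arrays grow with frames × clusters × pixels, so for long videos one would subsample frames first or downsample more aggressively.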

Protocol B: Benchmarking Transfer vs. Scratch Training (Cited in Table 1)

- Dataset Creation: For a single species (e.g., mouse), create a fully labeled dataset of 1000 frames.

- Split Data: Divide into training (80%) and test (20%) sets.

- Model Training - Scratch: Train a model from randomly initialized weights on nested subsets of the 800-frame training split (e.g., 800, 400, 200, and 100 frames).

- Model Training - Transfer: Train a model initialized with human-pose weights using the same subsets.

- Evaluation: For each condition, evaluate the model on the held-out test set using Mean Average Precision (MAP) and Root Mean Square Error (RMSE).

- Analysis: Plot training curves (loss vs. iterations) and final accuracy (MAP) vs. training set size for both conditions.
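The split-and-subset design in Protocol B can be made reproducible with a fixed random seed. In this sketch (one way to organize the benchmark, not a DLC utility; the function names are hypothetical), the test set is fixed once and the training subsets are nested, so each smaller subset is contained in the larger ones and accuracy differences reflect training-set size rather than which frames happened to be drawn. RMSE is computed over Euclidean keypoint errors.

```python
import numpy as np


def make_benchmark_splits(n_frames, test_frac=0.2, subset_sizes=(800, 400, 200, 100), seed=42):
    """Return (test_idx, {size: train_idx}) with nested training subsets."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_frames)
    n_test = int(round(test_frac * n_frames))
    test_idx = np.sort(perm[:n_test])
    train_pool = perm[n_test:]  # already shuffled, so prefixes are random subsets
    subsets = {}
    for size in sorted(subset_sizes, reverse=True):
        if size > len(train_pool):
            raise ValueError(f"subset of {size} exceeds training pool of {len(train_pool)}")
        subsets[size] = np.sort(train_pool[:size])  # prefixes are nested by construction
    return test_idx, subsets


def rmse(pred, truth):
    """Root mean square Euclidean error; arrays of shape (..., 2) in pixels."""
    return float(np.sqrt(np.mean(np.sum((pred - truth) ** 2, axis=-1))))
```

The same test indices are reused for both the scratch and transfer conditions, which keeps the comparison paired.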

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for DLC-based Behavioral Neuroscience

| Item / Solution | Function & Rationale |
|---|---|
| DeepLabCut Software Suite | Core open-source platform for creating, training, and deploying markerless pose estimation models. Provides GUI and API for full workflow. |
| Pre-trained Model Zoo (DLC Model Zoo) | Repository of published, pre-trained models for various species. Provides starting weights for fine-tuning with few labeled frames, models that can be applied directly when species and camera view match, and baselines for benchmarking. |
| High-Speed Camera (>60 fps) | Captures rapid motion (e.g., rodent grooming, fly wing beats) without motion blur, essential for fine-grained behavioral analysis. |
| Controlled Illumination (IR or Visible LED) | Ensures consistent video quality. IR illumination allows for nighttime observation in nocturnal animals without behavioral disruption. |
| Behavioral Arena (Standardized) | Provides a consistent context for video recording. Enables comparison across labs and drug trials. |
| GPU Workstation (NVIDIA, CUDA-enabled) | Accelerates model training (from days to hours) and video analysis via parallel processing. |
| Data Annotation Tools (DLC GUI, COCO Annotator) | Facilitates efficient, multi-user labeling of training image frames. |
| Downstream Analysis Suite (SimBA, B-SOiD, MARS) | Open-source tools for converting pose tracks into behavioral classifiers (e.g., chase, freeze, rearing) and ethograms. |

Signaling Pathway & Analysis Workflow

The following diagram maps the logical pathway from raw video to behavioral insight, highlighting where transfer learning integrates.

Diagram 2: From Video to Insight with Transfer Learning

Transfer learning is the engine that makes DeepLabCut a scalable, generalizable solution for animal behavior neuroscience. By drastically reducing the data and computational burden, it allows researchers to rapidly deploy accurate pose estimation across diverse species and settings. This accelerates the core thesis of quantitative behavior as a robust readout for circuit neuroscience and psychopharmacology, enabling high-throughput screening in drug development and revealing previously inaccessible nuances of natural behavior.

DeepLabCut (DLC) is an open-source toolbox for markerless pose estimation of animals. Within neuroscience and drug development research, it enables quantitative analysis of behavior as a readout for neural function, disease models, and therapeutic efficacy. This technical guide details its ecosystem, which is central to a thesis on scalable, precise behavioral phenotyping.

Core Components & Python Backend Architecture

Foundational Libraries & Dependencies

The DLC backend is a Python-centric stack built on deep learning frameworks.

Table 1: Core Python Backend Dependencies

| Package | Version Range (Typical) | Primary Function in DLC |
|---|---|---|
| TensorFlow | 2.x (≥2.4) or 1.15 | Core deep learning framework for model training/inference in DLC 2.x. |
| PyTorch (DLC 3.0+) | ≥1.9 | Engine introduced in DLC 3.0; offers flexibility and performance. |
| NumPy & SciPy | Latest stable | Numerical operations, data filtering, and interpolation. |
| OpenCV (cv2) | ≥4.1 | Video I/O, image processing, and augmentation. |
| Pandas | Latest stable | Handling labeled data, configuration, and results (CSV/HDF). |
| Matplotlib & Seaborn | Latest stable | Plotting trajectories, loss curves, and statistics. |
| MoviePy / imageio | Latest stable | Video manipulation and frame extraction. |
| Spyder / Jupyter | N/A | Common interactive development environments for prototyping. |

Workflow & Data Pipeline

The standard workflow involves: 1) Project creation, 2) Data labeling, 3) Model training, 4) Video analysis, and 5) Post-processing.

Diagram Title: DLC Core Analysis Workflow

Supporting Tools: DLC GUI & Model Zoo

DeepLabCut GUI

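The GUI provides an accessible interface for non-programmers and wraps core API functions for project management, labeling, training, and analysis. Earlier 2.x releases were built with wxPython; since DLC 2.3 the GUI is Qt-based, with frame labeling handled by the napari-deeplabcut plugin. It is launched with python -m deeplabcut.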

Key Features:

- Project Management: Create/load projects, configure body parts, and manage video lists.

- Labeling Tool: Manually label extracted frames to generate ground truth data.

- Training Control: Launch and monitor network training sessions.

- Video Processing: Queue videos for pose estimation with a trained model.

- Result Visualization: Create labeled videos and plot trajectories.

Model Zoo

The Model Zoo is a community-driven repository of pre-trained models. It accelerates research by allowing scientists to fine-tune models on their specific animals and settings, reducing labeling and computational costs.

Table 2: Representative Models in the DLC Model Zoo

| Model Name | Base Architecture | Typical Application | Reported Performance (Pixel Error)* |
|---|---|---|---|
| dlc-models/rat-reaching | ResNet-50 | Rat forelimb kinematics | ~5-8 pixels |
| dlc-models/mouse-social | EfficientNet-b0 | Mouse social interaction | ~4-7 pixels |
| dlc-models/zebrafish-larvae | MobileNet-v2 | Zebrafish larval locomotion | ~3-5 pixels |
| dlc-models/fly-walk | ResNet-101 | Drosophila leg tracking | ~2-4 pixels |
| dlc-models/marmoset-face | ResNet-50 | Marmoset facial expressions | ~6-10 pixels |

*Performance is video resolution and context-dependent. Errors are typical for within-lab transfer learning.

Experimental Protocol: Benchmarking a Pre-trained Model

This protocol details how to evaluate and fine-tune a Model Zoo model for a new laboratory setting.

Materials & Reagent Solutions

Table 3: Research Reagent & Tool Solutions for DLC Experimentation

| Item | Function/Description | Example Vendor/Product |
|---|---|---|
| Experimental Animal | Subject for behavioral phenotyping. | C57BL/6J mouse, Long-Evans rat, etc. |
| High-Speed Camera | Video acquisition at sufficient fps for behavior. | Basler acA series, FLIR Blackfly S, GoPro Hero. |
| Consistent Lighting | Eliminates shadows, ensures consistent pixel values. | LED panels with diffusers (e.g., Phlox). |
| Behavioral Arena | Standardized environment for recording. | Open field, plus maze, operant chamber. |
| DLC-Compatible Workstation | GPU-equipped computer for training/analysis. | NVIDIA GPU (RTX 3080/4090 or Quadro), 32GB+ RAM. |
| Data Storage Solution | High-throughput storage for large video files. | NAS (Synology/QNAP) or large-capacity SSDs. |
| Annotation Tool | For creating ground truth data. | DLC GUI, Labelbox, or COCO Annotator. |

Step-by-Step Methodology

Acquisition & Pre-processing:

- Record behavior videos (e.g., .mp4, .avi) at 30-100 fps, ensuring consistent lighting and minimal background clutter.

- Trim videos to relevant epochs. Convert all videos to a consistent format (e.g., .mp4 with the H.264 codec) using ffmpeg or MoviePy.
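Trimming and re-encoding can be scripted around the ffmpeg command-line tool. The helper below only builds the argument list; its name and defaults are illustrative assumptions. It uses standard ffmpeg options: -ss and -t to select the epoch, libx264 with a constant rate factor (CRF) for a high-quality H.264 encode, yuv420p for broad decoder compatibility, and -an to drop audio.

```python
from typing import List, Optional


def ffmpeg_trim_h264(src: str, dst: str, start_s: float = 0.0,
                     duration_s: Optional[float] = None, crf: int = 18) -> List[str]:
    """Build an ffmpeg command that trims `src` and re-encodes it as H.264 .mp4."""
    # -ss before -i seeks in the input; -y overwrites an existing output file.
    cmd = ["ffmpeg", "-y", "-ss", f"{start_s:.3f}", "-i", src]
    if duration_s is not None:
        cmd += ["-t", f"{duration_s:.3f}"]  # length of the kept epoch, in seconds
    # Lower CRF means higher quality and larger files; ~18 is often near visually lossless.
    cmd += ["-c:v", "libx264", "-crf", str(crf), "-pix_fmt", "yuv420p", "-an", dst]
    return cmd
```

With ffmpeg on the PATH, the list can be executed with subprocess.run(cmd, check=True), which raises if the encode fails.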

Model Selection & Installation:

- From the DLC Model Zoo (https://github.com/DeepLabCut/DeepLabCut-ModelZoo), select a model pre-trained on a similar species/body part.
- Download the model configuration (config.yaml) and checkpoint files.

Project Creation & Data Labeling:

- Create a new DLC project using the downloaded model's config as a template.

- Extract frames from your videos (typically 20-100 frames per video, randomly sampled).

- Use the DLC GUI to manually correct labels on the extracted frames. This creates a tailored training set.

Fine-Tuning Training:

- Configure the config.yaml to point to your new labeled data.
- Initiate training. Use a lower learning rate (e.g., 0.0001) than for training from scratch to fine-tune the pre-trained weights.
- Train until the train/test error plateaus (typically 50-200k iterations). Monitor loss plots.
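In DLC's TensorFlow engine, the learning-rate schedule is stored in the training pose_cfg.yaml as a multi_step list of [learning_rate, until_iteration] pairs. The sketch below shows one way to derive a gentler fine-tuning schedule from such a list and to check a logged loss curve for a plateau; the helper names, scaling factor, window, and tolerance are illustrative assumptions rather than DLC defaults, and newer PyTorch-based releases configure schedules differently.

```python
import numpy as np


def scale_schedule(multi_step, lr_factor=0.1, max_iter=None):
    """Scale each [learning_rate, until_iteration] step and optionally truncate."""
    out = []
    for lr, until in multi_step:
        if max_iter is not None and until >= max_iter:
            out.append([lr * lr_factor, max_iter])  # final step ends at max_iter
            break
        out.append([lr * lr_factor, until])
    return out


def lr_at(multi_step, iteration):
    """Learning rate in effect at a given iteration (the last step persists)."""
    for lr, until in multi_step:
        if iteration < until:
            return lr
    return multi_step[-1][0]


def has_plateaued(losses, window=5, rel_tol=0.01):
    """True if the last window's mean loss improved by < rel_tol over the previous window."""
    losses = np.asarray(losses, dtype=float)
    if len(losses) < 2 * window:
        return False
    prev, last = losses[-2 * window:-window].mean(), losses[-window:].mean()
    return (prev - last) / prev < rel_tol
```

Comparing window means rather than single values makes the plateau check less sensitive to the iteration-to-iteration noise typical of training loss.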

Evaluation & Analysis:

- Use the model to analyze a held-out video.

- Evaluate accuracy by manually labeling a few frames from the held-out set and calculating the Root Mean Square Error (RMSE) between manual and predicted points.

- Use DLC's analyze_videos and create_labeled_video functions to generate outputs.
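The accuracy check above, like the train/test errors DLC reports, comes down to Euclidean distances between predicted and manually placed keypoints. This minimal NumPy sketch assumes arrays of shape (frames, keypoints, 2) with NaN for unlabeled points; the optional likelihood mask mirrors the idea of excluding low-confidence predictions, with 0.6 used only as an example threshold. It reports both the mean Euclidean error and the RMSE, which weights large misses more heavily.

```python
import numpy as np


def keypoint_errors(pred_xy, true_xy, likelihood=None, p_cutoff=0.6):
    """Per-point Euclidean error in pixels; NaN where unlabeled or below p_cutoff."""
    err = np.linalg.norm(pred_xy - true_xy, axis=-1)  # shape (frames, keypoints)
    if likelihood is not None:
        err = np.where(np.asarray(likelihood) >= p_cutoff, err, np.nan)
    return err


def summarize(err):
    """Mean error and RMSE over all valid points, plus per-keypoint mean error."""
    valid = err[~np.isnan(err)]
    counts = np.sum(~np.isnan(err), axis=0)
    sums = np.nansum(err, axis=0)
    per_kp = np.divide(sums, counts, out=np.full(sums.shape, np.nan), where=counts > 0)
    return {
        "mean_px": float(valid.mean()),
        "rmse_px": float(np.sqrt(np.mean(valid ** 2))),
        "per_keypoint_px": per_kp,
    }
```

Per-keypoint errors are worth inspecting separately: small, frequently occluded parts such as paws often dominate the overall error.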

Post-processing & Kinematics:

- Filter pose data (e.g., using Savitzky-Golay filter or ARIMA model within DLC).

- Calculate derived metrics: velocity, acceleration, joint angles, distance between animals, etc.
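A Savitzky-Golay filter fits a low-order polynomial in a sliding window, and the same fit yields both a smoothed position and its derivatives, which is convenient for velocity and acceleration. The NumPy sketch below builds the filter weights by least squares (scipy.signal.savgol_filter is the usual library route); the window length, polynomial order, and edge padding are illustrative choices.

```python
import math

import numpy as np


def savgol_coeffs(window, polyorder, deriv=0):
    """Least-squares weights that evaluate the deriv-th derivative at the window center."""
    if window % 2 == 0 or window <= polyorder:
        raise ValueError("window must be odd and larger than polyorder")
    half = window // 2
    t = np.arange(-half, half + 1)
    A = np.vander(t, polyorder + 1, increasing=True)  # columns: t**0 ... t**polyorder
    # Row `deriv` of the pseudo-inverse gives the fitted coefficient of t**deriv.
    return np.linalg.pinv(A)[deriv] * math.factorial(deriv)


def savgol(signal, window=7, polyorder=2, deriv=0, fps=1.0):
    """Smooth (deriv=0) or differentiate a 1-D trace; derivatives scaled to per-second units."""
    half = window // 2
    padded = np.pad(np.asarray(signal, dtype=float), half, mode="edge")
    coeffs = savgol_coeffs(window, polyorder, deriv)
    return np.correlate(padded, coeffs, mode="valid") * fps ** deriv
```

Applied per coordinate to a pixel trace recorded at a known frame rate, deriv=1 gives velocity in pixels per second and deriv=2 gives acceleration; dividing by the pixel-to-centimeter scale converts to physical units. Values within half a window of each end are affected by the edge padding.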

Technical Implementation: Key Signaling & Data Pathways

The system's data flow from video input to scientific insight involves several processing stages.

Diagram Title: DLC Data Processing Pathway

Quantitative Performance Benchmarks

Performance is measured by train/test error (in pixels) and inference speed (frames per second, FPS).

Table 4: DLC 2.3 Performance Benchmarks (Typical Desktop GPU)

| Task / Model | Training Iterations | Train Error (pixels) | Test Error (pixels) | Inference Speed (FPS)* |
|---|---|---|---|---|
| Mouse Pose (ResNet-50) | 200,000 | 2.1 | 4.7 | 45-60 |
| Rat Gait (EfficientNet-b3) | 150,000 | 3.5 | 6.2 | 35-50 |
| Human Hand (ResNet-101) | 500,000 | 1.8 | 3.5 | 25-40 |
| Transfer Learning (from Zoo) | 50,000 | 4.2 | 7.8 | 45-60 |

*FPS measured on NVIDIA RTX 3080, 1920x1080 video. Speed varies with resolution and model size.

The Essential DLC Ecosystem—its robust Python backend, accessible GUI, and collaborative Model Zoo—provides a comprehensive, scalable platform for quantitative behavioral neuroscience. Its modularity supports everything from exploratory pilot studies to high-throughput drug screening pipelines, making it a cornerstone technology for modern research linking neural mechanisms to behavior.

This whitepaper details core applications of DeepLabCut (DLC), an open-source toolbox for markerless pose estimation, within animal behavior neuroscience. Framed within a broader thesis on DLC's transformative role, we explore its technical implementation for quantifying social interactions, gait dynamics, and its integration with unsupervised learning for behavior discovery—methodologies critical for researchers and drug development professionals seeking high-throughput, objective phenotypic analysis.

Quantifying Social Interaction

Social behavior in rodents (e.g., mice, rats) is a key phenotype in models of neuropsychiatric disorders (e.g., autism, schizophrenia). DLC enables precise, continuous tracking of multiple animals' body parts, moving beyond simple proximity measures.

Experimental Protocol: Resident-Intruder Assay with DLC

- Animals & Housing: House experimental male C57BL/6J mice (residents) singly for >2 weeks. Age-matched male intruders are group-housed.

- Hardware Setup: Use a standard home cage (e.g., Tecniplast) placed in a controlled light/sound environment. Record from a top-down view at 30 fps with a high-definition USB camera (e.g., Basler acA1920-155um).

- DLC Workflow:

- Frame Labeling: Extract ~500-1000 frames from multiple videos. Manually label keypoints for each animal: nose, ears, neck, base of tail, and all four paws.

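- Network Training: Train a ResNet-50- or ResNet-101-based network with a single shuffle. DLC's default TensorFlow schedule runs up to 1,030,000 iterations, but training can usually be stopped earlier once the loss plateaus. Set TrainingFraction to 0.9 in config.yaml for a 90/10 train-test split (the default is 0.95).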

- Inference & Tracking: Analyze novel videos. Use DLC's multi-animal mode (maDLC), which links per-frame detections into tracklets and stitches them into full tracks, or other post-hoc identity tracking algorithms to maintain individual identities across frames.

- Derived Metrics:

- Nose-to-Anogenital Sniffing: Distance between resident nose and intruder anogenital region < 2 cm.

- Following: Resident orientation and path alignment behind intruder.

- Approach/Retreat Dynamics: Velocity vectors relative to the other animal.

- Postural Classification: Use tracked keypoints to classify sub-behaviors (e.g., upright postures, mounting).

Table 1: Quantitative Social Metrics Derived from DLC Tracking

| Metric | Definition | Typical Baseline Value (C57BL/6J Mice) | Significance in Drug Screening |
|---|---|---|---|
| Social Investigation Time | Time nose-to-nose/nose-to-anogenital distance < 2 cm | 100-150 sec in a 10-min session | Reduced in ASD models; sensitive to prosocial drugs (e.g., oxytocin). |
| Chasing Duration | Time resident follows intruder with velocity > 20 cm/s & heading alignment < 30° | 10-30 sec in a 10-min session | Modulated by aggression/mania models; increased by psychostimulants. |
| Inter-Animal Distance | Mean centroid distance between animals | 15-25 cm in neutral exploration | Increased by anxiogenic compounds; decreased in social preference. |
| Contact Bout Frequency | Number of discrete physical contact initiations | 20-40 bouts in a 10-min session | Measures sociability and engagement. |
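The metrics in Table 1 reduce to distances, speeds, and angles computed frame by frame from both animals' keypoints. This sketch assumes identity-consistent tracks already converted to centimeters, uses the thresholds quoted in the table (2 cm for investigation; 20 cm/s and 30° for chasing), and makes several illustrative assumptions: the tail-base keypoint stands in for the anogenital region, the body centroid is the nose/tail-base midpoint, "heading alignment" is the angle between the resident's neck-to-nose vector and the direction to the intruder, and contact bouts are counted as runs of investigation frames.

```python
import numpy as np


def bouts(mask):
    """Number of contiguous True runs in a boolean sequence."""
    m = np.asarray(mask, dtype=int)
    return int(np.sum(np.diff(np.concatenate([[0], m])) == 1))


def social_metrics(res, intr, fps, contact_cm=2.0, chase_speed=20.0, chase_deg=30.0):
    """res/intr: dicts of (T, 2) arrays in cm with keys 'nose', 'neck', 'tailbase'."""
    nose_nose = np.linalg.norm(res["nose"] - intr["nose"], axis=1)
    nose_ano = np.linalg.norm(res["nose"] - intr["tailbase"], axis=1)
    investigating = (nose_nose < contact_cm) | (nose_ano < contact_cm)

    res_c = (res["nose"] + res["tailbase"]) / 2  # crude body centroids
    intr_c = (intr["nose"] + intr["tailbase"]) / 2
    centroid_dist = np.linalg.norm(res_c - intr_c, axis=1)

    # Resident speed (cm/s) and angle between its heading and the direction to the intruder.
    speed = np.linalg.norm(np.gradient(res_c, axis=0), axis=1) * fps
    heading = res["nose"] - res["neck"]
    to_intr = intr_c - res_c
    cos = np.sum(heading * to_intr, axis=1) / (
        np.linalg.norm(heading, axis=1) * np.linalg.norm(to_intr, axis=1) + 1e-9)
    angle = np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))
    chasing = (speed > chase_speed) & (angle < chase_deg)

    return {
        "investigation_s": float(investigating.sum() / fps),
        "chasing_s": float(chasing.sum() / fps),
        "mean_distance_cm": float(centroid_dist.mean()),
        "contact_bouts": bouts(investigating),
    }
```

In real data, brief one- or two-frame dropouts can split a single interaction into several bouts, so a minimum bout duration or gap-merging rule is usually added before counting.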

Title: DLC Workflow for Social Behavior Analysis

High-Precision Gait Analysis

Gait impairments are hallmarks of neurodegenerative (e.g., Parkinson's, ALS) and neuropsychiatric disorders. DLC provides a scalable alternative to force plates or pressure mats for detailed kinematic analysis.

Experimental Protocol: Treadmill or Overground Locomotion

- Apparatus: Use a motorized treadmill with a transparent belt (e.g., Noldus Treadmill) or a narrow, enclosed runway (e.g., 100cm L x 10cm W x 20cm H) to enforce straight-line walking.

- Recording: Synchronize a high-speed camera (≥ 100 fps, e.g., Phantom Miro) for lateral (side) view with the treadmill encoder or use a bottom-up view through the transparent belt.

- DLC Labeling: Label keypoints: iliac crest, hip, knee, ankle, metatarsophalangeal (MTP) joint, and digit tip for each limb. Include the snout and tail base for body axis.

- Analysis Pipeline:

- Stride Segmentation: Identify successive paw-strike events from the vertical position of the MTP joint.

- Kinematic Calculation: Compute angles (e.g., knee flexion/extension), joint trajectories, and limb coordination.

- Temporal-Spatial Parameters: Calculate stride length, stance/swing phase duration, and cadence from the tracked positions and frame rate.
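Stride segmentation and the temporal-spatial parameters above can be prototyped from a single limb's MTP trajectory in a lateral view. This sketch makes several assumptions to tune per setup: image y grows downward, so ground level is estimated as a high percentile of the MTP y-coordinate; the paw counts as in stance whenever it is within a small height tolerance of that level; and paw strikes are swing-to-stance transitions. Stride length is taken as horizontal paw displacement between strikes, which suits overground walking; on a treadmill it would instead be belt speed multiplied by stride duration.

```python
import numpy as np


def gait_parameters(mtp_x, mtp_y, fps, px_per_cm, height_tol_px=3.0):
    """Stride length (cm), stance %, and cadence (strides/s) from one limb's MTP track."""
    x = np.asarray(mtp_x, dtype=float)
    y = np.asarray(mtp_y, dtype=float)
    ground = np.percentile(y, 95)  # image y grows downward: ground = largest y values
    stance = (ground - y) <= height_tol_px
    # Paw strikes = frames where the paw switches from swing to stance.
    strikes = np.flatnonzero(np.diff(stance.astype(int)) == 1) + 1
    if len(strikes) < 2:
        raise ValueError("need at least two paw strikes")
    stride_frames = np.diff(strikes)
    stride_len_cm = np.abs(np.diff(x[strikes])) / px_per_cm
    # Stance % per stride: fraction of frames in stance between consecutive strikes.
    stance_pct = [100.0 * stance[a:b].mean() for a, b in zip(strikes[:-1], strikes[1:])]
    return {
        "stride_length_cm": stride_len_cm,
        "stance_pct": np.array(stance_pct),
        "cadence_hz": float(fps / stride_frames.mean()),
    }
```

Keypoint jitter near the ground threshold can create spurious strikes, so smoothing the trace first and requiring a minimum stance duration are common refinements.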

Table 2: Gait Parameters Quantified via DLC

| Parameter | Calculation Method | Neurological Model Correlation |
|---|---|---|
| Stride Length | Distance between successive paw strikes of the same limb. | Reduced in Parkinsonian models (6MPD-treated mice: ~4 cm vs control ~6 cm). |
| Stance Phase % | (Stance duration / Stride duration) * 100. | Increased in ataxic models (e.g., SCA mice: ~75% vs control ~60%). |
| Base of Support | Mean lateral distance between left and right hindlimb paw strikes. | Widened in ALS models (SOD1 mice). |
| Joint Angle Range | Max-min of knee/ankle angle during a stride cycle. | Reduced amplitude in models of spasticity. |
| Inter-Limb Coupling | Phase relationship between forelimb and hindlimb cycles. | Disrupted in spinal cord injury models. |
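Two of the Table 2 parameters need a little geometry. A joint angle is the angle at the middle keypoint of three (e.g., hip, knee, ankle), and base of support is the lateral separation of the left and right hind paws at their strikes. The NumPy sketch below assumes paw-strike frame indices are already available from a stride-segmentation step and, for base of support, a bottom-up view of straight-line walking in which one image coordinate is lateral position; the function names are illustrative.

```python
import numpy as np


def joint_angle_deg(proximal, joint, distal):
    """Angle (degrees) at `joint` between the segments to `proximal` and `distal`; arrays (T, 2)."""
    u = proximal - joint
    v = distal - joint
    cos = np.sum(u * v, axis=1) / (np.linalg.norm(u, axis=1) * np.linalg.norm(v, axis=1))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


def angle_range_per_stride(angle, strikes):
    """Max minus min joint angle within each stride (between consecutive strikes)."""
    return np.array([angle[a:b].max() - angle[a:b].min()
                     for a, b in zip(strikes[:-1], strikes[1:])])


def base_of_support_cm(left_lat, right_lat, left_strikes, right_strikes, px_per_cm):
    """Distance between mean lateral positions of left and right hind paws at their strikes."""
    left = np.mean(np.asarray(left_lat, dtype=float)[left_strikes])
    right = np.mean(np.asarray(right_lat, dtype=float)[right_strikes])
    return float(abs(left - right) / px_per_cm)
```

Because the angle is computed from 2D projections, lateral-view knee and ankle angles are only faithful when the limb moves roughly parallel to the image plane.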

Title: Gait Analysis Pipeline with DLC

Unsupervised Behavior Discovery

The integration of DLC with unsupervised machine learning (ML) moves beyond pre-defined behaviors to discover naturalistic, ethologically relevant action sequences.

Protocol: Pose to Behavior Embedding

- Pose Feature Engineering: From DLC tracks (x,y coordinates, likelihood), compute features per time window (e.g., 100ms): body part velocities, accelerations, distances between points, angular speeds, and postural eigenvalues.

- Dimensionality Reduction: Use Uniform Manifold Approximation and Projection (UMAP) or t-SNE to embed high-dimensional features into 2-3 dimensions.

- State Clustering: Apply clustering algorithms (e.g., HDBSCAN, k-means) to the embedded space to identify discrete postural "states."

- Markov Modeling: Use a Hidden Markov Model (HMM) or autoregressive HMM to model transitions between states, defining discrete "behavioral syllables."

- Sequence Analysis: Identify recurrent sequences of syllables as "motifs" or "super-syllables" representing complex behaviors (e.g., "stretch-attend" risk assessment).
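The pipeline above can be sketched end to end with NumPy-only stand-ins: windowed kinematic and inter-keypoint distance features, PCA in place of UMAP or t-SNE (which need extra packages), plain k-means in place of HDBSCAN, and an empirical first-order transition matrix in place of a fitted HMM. These substitutions are for illustration only; tools such as B-SOiD and VAME implement the full methods, and the function names here are hypothetical.

```python
import numpy as np


def window_features(xy, fps, window_s=0.1):
    """Per-window features from keypoints xy of shape (T, K, 2).

    Features: mean speed of each keypoint and mean pairwise keypoint distances.
    """
    T, K, _ = xy.shape
    w = max(1, int(round(window_s * fps)))  # frames per window
    n_win = T // w
    speed = np.linalg.norm(np.diff(xy, axis=0), axis=2) * fps  # (T-1, K)
    speed = np.vstack([speed[:1], speed])  # repeat first row to keep T frames
    iu = np.triu_indices(K, k=1)
    pair = np.linalg.norm(xy[:, :, None, :] - xy[:, None, :, :], axis=3)[:, iu[0], iu[1]]
    per_frame = np.hstack([speed, pair])  # (T, K + K*(K-1)/2)
    return per_frame[: n_win * w].reshape(n_win, w, -1).mean(axis=1)


def pca_embed(X, n_components=2):
    """Linear stand-in for UMAP/t-SNE: project standardized features onto top PCs."""
    Xc = (X - X.mean(axis=0)) / (X.std(axis=0) + 1e-9)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


def kmeans(Z, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; stand-in for HDBSCAN or other clustering."""
    rng = np.random.default_rng(seed)
    centers = Z[rng.choice(len(Z), size=k, replace=False)].copy()
    labels = np.zeros(len(Z), dtype=int)
    for _ in range(n_iter):
        labels = np.argmin(((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2), axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = Z[labels == j].mean(axis=0)
    return labels


def transition_matrix(labels, k):
    """Empirical first-order transition probabilities between consecutive states."""
    M = np.zeros((k, k))
    for a, b in zip(labels[:-1], labels[1:]):
        M[a, b] += 1
    rows = M.sum(axis=1, keepdims=True)
    return np.divide(M, rows, out=np.zeros_like(M), where=rows > 0)
```

Recurrent high-probability paths through the transition matrix are a first, crude view of the "motifs" described above; an HMM additionally models state durations and observation noise.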

Table 3: Supervised and Unsupervised Tools for Behavior Analysis from DLC Poses

| Tool/Method | Input | Output | Typical Use Case |
|---|---|---|---|
| SimBA | DLC coordinates + labels | Classifier for user-defined behaviors (supervised) | Scalable analysis of specific, known behaviors across cohorts. |
| VAME | DLC coordinates | Temporal segmentation into behavior motifs | Discovery of recurrent, patterned behavior sequences. |
| B-SOiD | DLC coordinates | Clustering of posture into identifiable units | Identification of novel, non-intuitive behavioral categories. |
| MotionMapper | DLC-derived wavelet features | 2D embedding & behavioral maps | Visualization of continuous behavioral repertoire. |

Title: Unsupervised Behavior Discovery Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for DLC-Based Behavior Neuroscience

| Item | Supplier Examples | Function in DLC Workflow |
|---|---|---|
| High-Speed CMOS Camera | Basler, FLIR, Phantom | Captures high-frame-rate video for precise gait analysis and fast movement. |
| Near-Infrared (IR) Lighting & Camera | Point Grey, Edmund Optics | Enables recording during dark/active phases without visible light disruption. |
| Motorized Treadmill | Noldus, Columbus Instruments | Provides controlled, consistent locomotion for gait kinematics. |
| Social Test Arena (e.g., open field with walls) | Med Associates, San Diego Instruments | Standardized environment for resident-intruder or three-chamber assays. |
| DeepLabCut Software Suite | Mackenzie Mathis and Alexander Mathis labs (EPFL) | Core open-source platform for markerless pose estimation. |
| Powerful GPU Workstation | NVIDIA (RTX series) | Accelerates DLC neural network training and video analysis. |
| Behavior Annotation Software (BORIS, ELAN) | Open-source | For generating ground-truth labels to validate DLC-based classifiers. |
| Python Data Science Stack (NumPy, SciPy, pandas) | Open-source | Essential for custom analysis scripts processing DLC output data. |

Within the field of animal behavior neuroscience and related drug development, markerless pose estimation has become a cornerstone technology. This whitepaper, framed within the broader thesis of DeepLabCut's (DLC) role in democratizing advanced behavioral analysis, provides a technical comparison of the current competitive landscape. We evaluate DLC against prominent open-source frameworks (SLEAP, Anipose) and commercial solutions, focusing on technical capabilities, experimental applicability, and quantitative performance.

Quantitative Landscape Comparison

The following tables summarize key quantitative and feature-based comparisons based on recent benchmarks and software documentation.

Table 1: Core Software Characteristics & Capabilities

| Feature | DeepLabCut (DLC) | SLEAP | Anipose | Commercial Solutions (e.g., Noldus EthoVision XT, Viewpoint) |

|---|---|---|---|---|

| Primary Model Architecture | ResNet/ EfficientNet + Deconv. | UNet + Part Affinity Fields | DeepLabCut + 3D Triangulation | Proprietary, often not disclosed |

| Licensing & Cost | Open-source (LGPL-3.0) | Open-source (BSD-style license) | Open-source (BSD-style license) | Commercial, high annual license fees |

| Key Technical Strength | Strong 2D tracking, active learning (DLC 2.x), broad community | Multi-animal tracking, GPU-accelerated inference, user-friendly GUI | Streamlined multi-camera 3D calibration & triangulation | Integrated hardware/software suites, dedicated technical support |

| Typical Workflow Speed (FPS, 1080p)* | 20-40 FPS (on GPU) | 50-100 FPS (on GPU) | ~10-30 FPS (depends on 2D backend) | Highly optimized, often real-time |

| Multi-animal Tracking | Yes (with maDLC) | Yes (native, strong suit) | Limited, via 2D backends | Yes (often limited to predefined species/contexts) |

| 3D Pose Estimation | Yes (requires separate camera calibration & triangulation) | Yes (via sleap-3d add-on) | Yes (native, streamlined workflow) | Common in high-end packages |

| Active Learning Support | Yes (native, via GUI) | Limited | No | No |

*Throughput depends on hardware, model complexity, and video resolution.

Table 2: Recent Benchmark Performance (Mouselight Dataset Excerpt)

| Metric | DeepLabCut | SLEAP | Anipose (via DLC backend) | Notes |

|---|---|---|---|---|

| Mean RMSE (pixels) | 5.2 | 4.8 | N/A | Lower is better. SLEAP shows slight edge in 2D precision. |

| OKS@0.5 (AP) | 0.89 | 0.91 | N/A | Object Keypoint Similarity Average Precision. Higher is better. |

| Multi-animal ID Switches | 12 per 1000 frames | 3 per 1000 frames | N/A | SLEAP demonstrates superior identity persistence. |

| 3D Reprojection Error (mm) | 1.8 (with calibration) | 2.1 (with sleap-3d) | 1.5 | Anipose's optimized pipeline yields lowest 3D error. |

| Training Time (hrs, 1k frames) | ~2.5 | ~1.5 | ~2.5 (for 2D model) | SLEAP's training is generally faster. |

Data synthesized from Pereira et al., Nat Methods 2022 (SLEAP), Nath et al., Nat Protoc 2019 (DLC), and project GitHub repositories. Actual performance is task-dependent.

Detailed Experimental Protocols

Protocol 1: Benchmarking 2D Pose Estimation Accuracy (for DLC, SLEAP, Anipose)

- Objective: Quantify the root-mean-square error (RMSE) of keypoint predictions on a held-out test set with manually annotated ground truth.

- Materials: Curated video dataset (e.g., Mouselight Benchmark Suite), GPU workstation, software installations.

- Procedure:

- Data Preparation: Split dataset into training (70%), validation (15%), and test (15%) sets. Ensure consistent annotations across all tools.

- Model Training: For each framework, train a standard model (e.g., DLC: ResNet-50; SLEAP: single-instance UNet) on the identical training set. Use default optimization parameters initially.

- Inference: Run prediction on the held-out test set videos. Export predicted keypoint coordinates.

- Analysis: Calculate RMSE between predicted and ground truth coordinates for each keypoint, averaged across all frames and keypoints. Use Object Keypoint Similarity (OKS) for a scale-invariant measure.
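The Analysis step can be sketched in a few lines of NumPy. This is a minimal sketch, not the evaluation code of any of the three tools: it assumes predictions and ground truth have already been aligned into arrays of shape (frames, keypoints, 2) with NaN for unlabeled points. For OKS, the per-keypoint falloff constants (`sigmas`) and the animal area are assumptions you must set for your dataset; the formula follows the COCO keypoint definition.

```python
import numpy as np


def keypoint_rmse(pred, gt):
    """Per-keypoint and overall RMSE in pixels.

    pred, gt: arrays of shape (n_frames, n_keypoints, 2); NaN marks unlabeled points.
    """
    sq_dist = np.sum((pred - gt) ** 2, axis=-1)          # squared Euclidean error, (frames, keypoints)
    per_keypoint = np.sqrt(np.nanmean(sq_dist, axis=0))  # RMSE over frames for each keypoint
    return per_keypoint, float(np.nanmean(per_keypoint))


def object_keypoint_similarity(pred, gt, area, sigmas):
    """COCO-style OKS for a single animal in a single frame.

    pred, gt: (n_keypoints, 2); area: animal area in px^2 (the scale term);
    sigmas: per-keypoint falloff constants (dataset-specific assumption).
    """
    labeled = ~np.isnan(gt).any(axis=-1)
    d2 = np.sum((pred - gt) ** 2, axis=-1)
    k2 = (2.0 * np.asarray(sigmas, dtype=float)) ** 2
    e = d2 / (2.0 * area * k2 + np.finfo(float).eps)
    return float(np.mean(np.exp(-e[labeled]))) if labeled.any() else float("nan")
```

Because `nanmean` skips frames where a prediction is missing, report the fraction of detected keypoints alongside RMSE so that dropped predictions are not hidden.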

Protocol 2: Multi-Camera 3D Reconstruction Workflow (DLC vs. Anipose)

- Objective: Reconstruct 3D animal pose from synchronized 2D video feeds.

- Materials: 2+ synchronized cameras, calibration charuco/checkerboard, calibration software/script.

- DLC-centric Workflow:

- Calibration: Record a checkerboard or ChArUco board moved throughout the volume. Use DLC's `calibrate_cameras` (checkerboard-based; DLC's built-in 3D module supports two cameras) or OpenCV's `calibrateCamera` and `stereoCalibrate` to obtain intrinsic and extrinsic camera parameters.

- 2D Tracking: Train a separate DLC network or use a pre-trained one to obtain 2D keypoints from each camera view.

- Triangulation: Use DLC's `triangulate` function or a custom script (e.g., direct linear transform) to reconstruct 3D points from 2D correspondences and the camera calibration.

- Anipose-centric Workflow:

- Calibration: Run Anipose's `calibrate` command on videos of the calibration board. It automates parameter estimation and outlier rejection.

- 2D Tracking: Use Anipose's pipeline to run a supported 2D pose estimator (DLC or SLEAP) on all videos.

- 3D Reconstruction: Run Anipose's `triangulate` command, which handles matching 2D points across cameras, filtering implausible 3D reconstructions, and smoothing the final 3D trajectories.
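Both workflows end in the same geometric step. The sketch below is a textbook linear (DLT) triangulation in NumPy, assuming you already have a 3x4 projection matrix per camera (intrinsics times extrinsics) and undistorted 2D keypoints. It is not DLC's or Anipose's internal code, and Anipose additionally applies robust and temporal constraints that this sketch omits. `project` and `reprojection_error` are illustrative helpers; the error they report is in pixels.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X (3,) with a 3x4 camera matrix P; returns pixel (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(proj_mats, points_2d):
    """Linear DLT triangulation of one point seen in >= 2 calibrated, undistorted views."""
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Each view gives two linear constraints on the homogeneous point X:
        # u * (P[2] @ X) - P[0] @ X = 0 and v * (P[2] @ X) - P[1] @ X = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.asarray(rows)
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]  # right singular vector with the smallest singular value
    return X[:3] / X[3]


def reprojection_error(proj_mats, points_2d, X):
    """Mean pixel distance between observed 2D points and the reprojected 3D point."""
    return float(np.mean([np.linalg.norm(project(P, X) - np.asarray(uv))
                          for P, uv in zip(proj_mats, points_2d)]))
```

A high reprojection error for a given frame usually signals a 2D tracking error in one of the views rather than a calibration problem, which is why filtering before or during triangulation matters.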

Protocol 3: Evaluating Multi-Animal Tracking Performance

- Objective: Measure identity preservation accuracy in social housing experiments.

- Materials: Video of interacting animals (≥2), ground truth tracks with identities.

- Procedure:

- Model Training: Train multi-animal models in DLC (maDLC) and SLEAP (native) using animal identity as part of the training labels.

- Tracking: Process a long, challenging video sequence with frequent animal interactions and occlusions.

- Metric Calculation: Use metrics like ID switches (count of identity assignment errors), MOTA (Multi-Object Tracking Accuracy), and HOTA (Higher Order Tracking Accuracy) to benchmark performance against manual ground truth.
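For the ID-switch metric, a simplified count can be computed once predicted tracks are matched to ground truth frame by frame. The sketch below assumes one (x, y) centroid per animal per frame, matches them with the Hungarian algorithm from SciPy, and counts a switch whenever a ground-truth animal is matched to a different predicted track than in its previous matched frame. The 50-pixel gating distance is an arbitrary assumption. Full MOTA and HOTA scores are better computed with an established multi-object-tracking evaluation package.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_frame(gt_xy, pred_xy, max_dist=50.0):
    """Match ground-truth animals to predicted tracks in one frame.

    gt_xy, pred_xy: dicts mapping an ID to an (x, y) centroid in pixels.
    Returns {gt_id: track_id} for optimal pairs closer than max_dist.
    """
    if not gt_xy or not pred_xy:
        return {}
    g_ids, p_ids = list(gt_xy), list(pred_xy)
    g = np.array([gt_xy[i] for i in g_ids], dtype=float)
    p = np.array([pred_xy[i] for i in p_ids], dtype=float)
    cost = np.linalg.norm(g[:, None, :] - p[None, :, :], axis=-1)  # pairwise distances
    rows, cols = linear_sum_assignment(cost)                        # Hungarian matching
    return {g_ids[r]: p_ids[c] for r, c in zip(rows, cols) if cost[r, c] <= max_dist}


def count_id_switches(gt_frames, pred_frames, max_dist=50.0):
    """Count events where a ground-truth animal is matched to a different track than before."""
    last_track = {}
    switches = 0
    for gt_xy, pred_xy in zip(gt_frames, pred_frames):
        for gt_id, track_id in match_frame(gt_xy, pred_xy, max_dist).items():
            if gt_id in last_track and last_track[gt_id] != track_id:
                switches += 1
            last_track[gt_id] = track_id
    return switches
```

Dividing the count by the number of frames and multiplying by 1000 gives the "per 1000 frames" figure used in Table 2.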

Visualized Workflows & Relationships

Figure: Competitive Tool Landscape: From Video to 3D Pose

Figure: Experimental Decision Workflow for 3D Pose Estimation

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Reagents & Materials for Pose Estimation Experiments

| Item | Function/Description | Example Brand/Type |

|---|---|---|

| High-Speed Cameras | Capture fast animal movements with short exposures to limit motion blur. Essential for gait analysis. | FLIR Blackfly S, Basler acA, or affordable global shutter alternatives (e.g., Raspberry Pi Global Shutter Camera). |

| Infrared (IR) Illumination & Filters | Enables nighttime behavioral tracking or eliminates visual cues for optogenetics experiments. | 850nm or 940nm LED arrays with matching IR-pass filters on cameras. |

| Calibration Charuco Board | Provides a hybrid checkerboard/ArUco marker pattern for robust, sub-pixel camera calibration. | Custom printed on rigid substrate or purchased from scientific imaging suppliers. |

| Synchronization Hardware | Ensures frame-accurate alignment of video streams from multiple cameras for 3D reconstruction. | Arduino-based trigger, National Instruments DAQ, or commercial genlock cameras. |

| GPU Workstation | Accelerates model training (days→hours) and real-time inference. Critical for iterative labeling. | NVIDIA RTX series with ≥8GB VRAM (e.g., RTX 4070/4080, or A-series for labs). |

| Behavioral Arena | Standardized experimental enclosure. Often includes controlled lighting, textures, and modular walls. | Custom acrylic or plastic, may integrate with touch screens or operant chambers. |

| Data Annotation Software | Creates ground truth data for model training and validation. | DLC's labeling GUI, SLEAP's sleap-label, or general-purpose annotation tools (e.g., CVAT). |

| High-Performance Storage | Stores large volumes of high-resolution video data (TB-scale). Requires fast read/write for processing. | NAS (Network Attached Storage) with RAID configuration or direct-attached SSD arrays. |

From Video to Data: A Step-by-Step DeepLabCut Pipeline for Robust Behavioral Phenotyping

This guide serves as the foundational technical document for a broader thesis on employing DeepLabCut (DLC) for robust, reproducible animal behavior neuroscience research. The accuracy of downstream pose estimation and behavioral quantification is wholly dependent on the quality of the initial video data. This section provides a current, in-depth technical protocol for camera setup, lighting, and video formatting to ensure optimal DLC performance.

Camera Selection & Configuration

The choice of camera is dictated by the behavioral paradigm, animal size, and required temporal resolution.

Key Specifications & Quantitative Data

Table 1: Camera Specification Comparison for Common Behavioral Paradigms

| Behavior Paradigm | Recommended Resolution | Minimum Frame Rate (Hz) | Sensor Type Consideration | Lens Type |

|---|---|---|---|---|

| Open Field, Home Cage | 1080p (1920x1080) to 4K | 30 | Global Shutter (preferred) | Wide-angle (fixed focal) |

| Rotarod, Grip Strength | 720p (1280x720) to 1080p | 60-100 | Global Shutter | Standard or Macro |

| Social Interaction | 1080p to 4K | 30-60 | Global Shutter | Wide-angle |

| Ultrasonic Vocalization (USV) Sync | 1080p | 100+ (for jaw/mouth movement) | Global Shutter | Standard |

| Paw Gait Analysis (Underneath) | 720p to 1080p | 150-500 | Global Shutter (mandatory) | Telecentric (minimize distortion) |

Experimental Protocol: Camera Calibration & Validation

- Spatial Calibration: Place a checkerboard pattern (e.g., 8x6 squares, 5mm each) within the arena. Record a brief video where the pattern is moved to different locations and orientations. Use OpenCV's `cv2.calibrateCamera` function or the DLC calibration toolbox to compute the intrinsic camera matrix and lens distortion coefficients, and apply these to all subsequent videos. OpenCV's corner detection counts inner corners, so an 8x6-square board is specified as a 7x5 pattern.

- Temporal Validation: For multi-camera synchronization, record an LED timer or a rapidly blinking LED visible to all cameras. Post-hoc analysis of the precise frame of each flash across cameras allows for sub-frame alignment of video streams.

- Resolution-Frame Rate Trade-off Test: Before the main experiment, record a short trial of the animal at the intended resolution and frame rate. Verify that the fastest body part movement (e.g., paw during a reach) does not displace more than ~5-10 pixels between consecutive frames to ensure trackability.
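The temporal-validation and trade-off checks reduce to simple array operations. This is a minimal NumPy sketch, assuming you have already measured, for each camera, the mean intensity of a small region around the LED in every frame, and have a pixel trajectory of the fastest body part from the pilot recording. The midpoint threshold is an assumption, and the sketch recovers whole-frame offsets only; sub-frame alignment would additionally require modeling the flash's intensity rise.

```python
import numpy as np


def led_onset_frames(roi_means, threshold=None):
    """Frame indices at which an LED switches on.

    roi_means: per-frame mean intensity inside a small region around the LED.
    threshold: on/off boundary; defaults to the midpoint of the observed range.
    """
    x = np.asarray(roi_means, dtype=float)
    if threshold is None:
        threshold = (x.min() + x.max()) / 2.0
    on = x > threshold
    return np.flatnonzero(on[1:] & ~on[:-1]) + 1  # off -> on transitions


def camera_offsets(onsets_per_camera):
    """Whole-frame offset of each camera relative to camera 0, from the first flash."""
    ref = onsets_per_camera[0][0]
    return [int(onsets[0] - ref) for onsets in onsets_per_camera]


def max_step_px(xy):
    """Largest frame-to-frame displacement (pixels) of a body-part trajectory (n_frames, 2)."""
    steps = np.linalg.norm(np.diff(np.asarray(xy, dtype=float), axis=0), axis=1)
    return float(np.nanmax(steps))
```

If `max_step_px` on the pilot clip exceeds roughly 5-10 pixels, raise the frame rate (or trade away some resolution) before the main experiment.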

Lighting: The Critical, Often Overlooked, Variable

Consistent, high-contrast lighting is more important than ultra-high resolution for DLC.

Best Practices & Protocols

- Protocol for Diffuse, Shadow-Minimized Lighting: Use LED light panels equipped with diffusers. Position lights at a 45-degree angle to the arena floor from at least two opposing sides to fill in shadows. Never use a single, direct overhead point source.

- Protocol for Eliminating Flicker: Set the camera exposure time to an integer multiple of the lights' flicker period, which is half the AC period (e.g., 1/100 s for 50 Hz mains, 1/120 s for 60 Hz mains). Use DC-powered LED lights, not AC-dimmed bulbs. Verify by recording a stationary scene and checking for periodic brightness fluctuations in pixel intensity.

- Contrast Enhancement Protocol: For light-colored animals (e.g., white mice), use a non-reflective, dark-colored arena floor and vice versa. Infrared (IR) lighting for nocturnal animals must be even and produce no visible "hot spots."

Video Format & Acquisition Standards

Table 2: Recommended Video Format Specifications for DeepLabCut

| Parameter | Recommended Setting | Rationale & Technical Note |

|---|---|---|

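| Container/Codec | .mp4 with H.264 or .avi with MJPEG | H.264 offers strong compression but uses motion-compensated inter-frame prediction, so use a high-quality setting to avoid artifacts on fast-moving body parts; MJPEG compresses each frame independently (still lossy) and creates larger files. |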

| Pixel Format | Grayscale (8-bit) | Reduces file size and is usually sufficient for DLC, unless color is needed to distinguish animals or body parts. |

| Bit Depth | 8-bit | Standard for consumer/prosumer cameras; provides 256 intensity levels. |

| Acquisition Drive | SSD (Internal or fast external) | Must sustain high write speeds for high-frame-rate or multi-camera recording. |

| Naming Convention | YYMMDD_ExperimentID_AnimalID_Camera#_Trial#.mp4 | Ensures automatic sorting and prevents ambiguity in large datasets. |

Protocol for Video Pre-processing Check:

- Load a sample video using `cv2.VideoCapture` in Python or similar.

- Extract frame statistics: mean pixel intensity per frame. Plot this over time to detect lighting drift or flicker.

- Check for compression artifacts by examining single frames for blockiness in areas of movement.

- Confirm that the frame rate stored in the file (`cv2.CAP_PROP_FPS`) matches the setting from the acquisition software, and that the number of decoded frames matches the recording duration.
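The intensity and frame-rate checks can be scripted. This sketch assumes OpenCV (`cv2`) is installed for reading the video; `intensity_report` is a hypothetical helper that fits a linear trend (drift) and reports the strongest periodic component left after removing it. Because the camera samples far below typical flicker frequencies, mains flicker appears aliased: 100 Hz flicker sampled at 30 fps shows up at 10 Hz.

```python
import numpy as np


def frame_mean_intensity(video_path, max_frames=None):
    """Mean pixel intensity of each frame plus the FPS stored in the file metadata."""
    import cv2  # imported lazily so intensity_report() below works without OpenCV

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"Cannot open {video_path}")
    fps_metadata = cap.get(cv2.CAP_PROP_FPS)
    means = []
    while max_frames is None or len(means) < max_frames:
        ok, frame = cap.read()
        if not ok:
            break
        means.append(float(frame.mean()))
    cap.release()
    return np.array(means), fps_metadata


def intensity_report(means, fps):
    """Linear drift (intensity units per second) and dominant residual fluctuation (Hz)."""
    means = np.asarray(means, dtype=float)
    t = np.arange(len(means)) / fps
    slope, intercept = np.polyfit(t, means, 1)
    residual = means - (slope * t + intercept)
    spectrum = np.abs(np.fft.rfft(residual))
    freqs = np.fft.rfftfreq(len(residual), d=1.0 / fps)
    peak_hz = freqs[1:][np.argmax(spectrum[1:])]  # skip the 0 Hz bin
    return {"drift_per_s": float(slope), "peak_hz": float(peak_hz),
            "residual_sd": float(residual.std())}
```

For example, call `frame_mean_intensity("trial01.mp4")` (a placeholder file name), pass the result to `intensity_report`, and compare `len(means) / fps` with the known recording duration as a dropped-frame check.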

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for High-Quality Behavioral Videography

| Item | Function & Rationale |

|---|---|

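| Global Shutter CMOS Camera | Exposes all pixels simultaneously, eliminating rolling-shutter distortion (skewed body parts) during fast movement; combine with short exposure times to limit motion blur. Critical for gait analysis. |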

| IR-Pass Filter (850nm) | Blocks visible light, allowing for simultaneous visible-spectrum experiments and IR tracking in dark phases. |

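| Telecentric Lens | Provides orthographic projection; object size remains constant regardless of distance from the lens. Useful for metric 2D measurements (e.g., gait from below); standard multi-camera triangulation instead assumes perspective (pinhole) lenses. |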

| Diffused LED Panels | Provides even, shadow-free illumination, maximizing contrast and minimizing pixel value variance. |

| Synchronization Pulse Generator | Sends a TTL pulse to all cameras and data acquisition systems (neural, physiological) for frame-accurate temporal alignment. |

| Calibration Charuco Board | Combines checkerboard and ArUco markers for robust, sub-pixel accurate camera calibration and distortion correction. |

| High-Write-Speed SSD | Prevents dropped frames during high-speed or multi-camera recording by maintaining sustained write throughput. |

| Non-Reflective Arena Material (e.g., matte acrylic, felt) | Minimizes specular highlights and reflections that confuse pose estimation algorithms. |

Experimental Workflow Visualization

Figure: Workflow for Optimizing Video Acquisition for DeepLabCut

Acquisition Pathway: From Photons to Reliable Keypoints

Figure: Data Acquisition Pathway for Optimal DLC Performance

Within the broader thesis on implementing DeepLabCut (DLC) for high-throughput, quantitative analysis of animal behavior in neuroscience and drug discovery, Stage 2 is the critical foundational step. This phase transforms a raw video dataset into a structured, machine-readable project by defining the ethological or biomechanical model of interest (body parts) and strategically selecting frames for human annotation. The precision of this stage directly dictates the performance, generalizability, and biological relevance of the resulting pose estimation network.

Defining the Anatomical and Behavioral Model: Body Parts

The selection of body parts (or "keypoints") is not merely anatomical but functional, directly derived from the experimental hypothesis. In behavioral neuroscience and pharmacotherapy development, these points must capture the relevant kinematic and postural features.

Core Principles for Keypoint Selection

- Relevance to Behavioral Phenotype: Keypoints must operationalize the behavior of interest (e.g., distances between snout and object for sociability, joint angles for gait analysis in pain models).

- Invariance and Consistency: Points should be reliably identifiable across all animals, sessions, and treatments, even with varying coat colors or lighting.

- Information Density: A minimal set that maximally describes posture. Redundant points increase annotation burden without improving model performance.

- Hierarchical Organization: Grouping related body parts (e.g., forelimb: shoulder, elbow, wrist) aids in network interpretation and error analysis.

Quantitative Guidelines from Literature

Recent benchmarking studies provide empirical guidance on keypoint selection.

Table 1: Impact of Keypoint Number on DLC Model Performance

| Study (Year) | Model Variant | # Keypoints | # Training Frames | Resulting Pixel Error (Mean ± SD) | Inference Speed (FPS) | Key Recommendation |

|---|---|---|---|---|---|---|

| Mathis et al. (2020) | ResNet-50 | 4 | 200 | 3.2 ± 1.1 | 210 | Sufficient for basic limb tracking. |

| Lauer et al. (2022) | EfficientNet-B0 | 12 | 500 | 5.8 ± 2.3 | 180 | Optimal for full-body rodent pose. |

| Pereira et al. (2022) | ResNet-101 | 20 | 1000 | 7.1 ± 3.5* | 45 | High complexity; error increases without proportional training data. |

*Error increase attributed to self-occlusion in dense clusters.

Experimental Protocol 1: Systematic Body Part Definition

- Hypothesis Mapping: List all quantitative measures required (e.g., velocity of snout, flexion angle of knee).

- Kinematic Chain Drafting: Draft a skeleton connecting proposed keypoints. Validate that all measures can be derived.

- Pilot Video Review: Inspect a subset of videos for occlusions, lighting variance, and animal orientation. Refine keypoints for consistency.

- Final Configuration: Document the `config.yaml` entries, including body part names, skeleton links, and coloring scheme.
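Steps 1 and 2 can be checked mechanically before any labeling starts. In the sketch below, the body-part names, skeleton links, and measures are hypothetical placeholders for a gait study; the two lists mirror the `bodyparts` and `skeleton` entries of a DLC `config.yaml`. `joint_angle_deg` shows how a planned measure (e.g., knee flexion) is derived from three keypoints.

```python
import numpy as np

# Hypothetical draft for a gait study; mirrors `bodyparts` and `skeleton` in config.yaml.
BODYPARTS = ["snout", "left_ear", "right_ear", "hip", "knee", "ankle", "tailbase"]
SKELETON = [["snout", "left_ear"], ["snout", "right_ear"], ["hip", "knee"],
            ["knee", "ankle"], ["hip", "tailbase"]]
# Each planned measure lists the keypoints it needs (step 1, hypothesis mapping).
MEASURES = {"snout_velocity": ["snout"], "knee_flexion_angle": ["hip", "knee", "ankle"]}


def missing_keypoints(bodyparts, measures):
    """Measures that cannot be derived because a required keypoint is not defined."""
    defined = set(bodyparts)
    return {m: [k for k in kps if k not in defined]
            for m, kps in measures.items() if not set(kps) <= defined}


def invalid_links(bodyparts, skeleton):
    """Skeleton links that reference undefined body parts."""
    defined = set(bodyparts)
    return [link for link in skeleton if not set(link) <= defined]


def joint_angle_deg(a, b, c):
    """Angle at keypoint b (degrees) formed by segments b->a and b->c."""
    v1 = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    v2 = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))
```

Both validators should return empty results before the keypoint list is frozen; adding a body part after labeling has started means revisiting every labeled frame.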

Extracting Training Frames: A Strategic Sampling Protocol

The goal is to select a set of frames that maximally represents the variance in the entire dataset, ensuring model robustness.

Sampling Methodologies

DLC offers multiple algorithms for frame extraction, each with distinct advantages.

Table 2: Frame Extraction Method Comparison

| Method | Algorithm Description | Best Use Case | Potential Pitfall |

|---|---|---|---|

| Uniform | Evenly samples frames across video(s). | Initial exploration, highly stereotyped behaviors. | Misses rare but critical behavioral states. |

| k-means | Downsamples frames, clusters them by pixel appearance with k-means, and selects frames from different clusters. | Capturing diverse postures and appearances. | May undersample transient dynamics between postures; computationally intensive for long videos. |

| Manual Selection | Researcher hand-picks frames. | Targeted sampling of specific, low-frequency events (e.g., seizures, social interactions). | Introduces selection bias; not reproducible. |

Quantitative Sampling Strategy

The required number of training frames is a function of keypoint complexity, desired accuracy, and dataset variance.

Experimental Protocol 2: Optimized k-means Frame Extraction

- Input Preparation: Include videos from all experimental groups and conditions (e.g., control vs. drug-treated).

- Parameter Setting: In the DLC GUI or script, specify the target number of frames (`numframes2pick` in `config.yaml`, applied per video; e.g., 500-1000 in total from a multi-video set). Adjust the `crop` parameters if using a consistent region of interest.

- Feature Extraction: DLC downsamples each frame and applies k-means clustering to the downsampled images.

- Frame Selection: DLC picks one frame from each cluster, so the selection spans visually distinct postures. These are saved as individual PNG files in the project's `labeled-data` folder.

- Validation: Manually scroll through the selected frames to confirm they capture the full range of animal poses, orientations, lighting, and any experimental apparatus.
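To illustrate what k-means frame selection does, here is a self-contained NumPy sketch. It is not DLC's implementation: it block-averages grayscale frames, runs plain Lloyd's k-means with farthest-point initialization, and returns one random frame per cluster. It holds all frames in memory, so in practice you would subsample long videos first.

```python
import numpy as np


def downsample(frames, factor=4):
    """Block-average (n, h, w) grayscale frames by `factor` and flatten to (n, features)."""
    n, h, w = frames.shape
    h2, w2 = h // factor, w // factor
    x = frames[:, :h2 * factor, :w2 * factor].astype(float)
    x = x.reshape(n, h2, factor, w2, factor).mean(axis=(2, 4))
    return x.reshape(n, -1) / 255.0


def kmeans(X, k, n_iter=100, seed=0):
    """Plain Lloyd's k-means with farthest-point initialization; returns cluster labels."""
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d = np.min(((X[:, None, :] - np.array(centers)[None]) ** 2).sum(-1), axis=1)
        centers.append(X[np.argmax(d)])  # next center: point farthest from existing ones
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((X[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def pick_frames(frames, n_pick, factor=4, seed=0):
    """Cluster frames by appearance and return one random frame index per cluster."""
    labels = kmeans(downsample(frames, factor), n_pick, seed=seed)
    rng = np.random.default_rng(seed)
    return sorted(int(rng.choice(np.flatnonzero(labels == j)))
                  for j in range(n_pick) if np.any(labels == j))
```

Clustering on appearance is what lets rare postures win a cluster of their own, whereas uniform sampling would allocate frames in proportion to how often each posture occurs.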

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for DLC Project Creation & Labeling

| Item | Function | Example/Specification |

|---|---|---|

| High-Speed Camera | Captures motion with minimal blur for precise keypoint localization. | >100 FPS for rodent gait; global shutter recommended. |

| Consistent Lighting | Eliminates shifting shadows and ensures consistent appearance. | IR illumination for nocturnal animals; diffuse LED panels. |

| Ethological Apparatus | Standardized environment for behavioral tasks. | Open field, elevated plus maze, rotarod. |

| Video Annotation Software | Interface for human labeling of extracted training frames. | DeepLabCut's labeling GUI, COCO Annotator. |

| Computational Workspace | Environment for running DLC and managing data. | Jupyter Notebooks, Python 3.8+, GPU with CUDA support. |

| Data Management Platform | Stores and versions raw videos, config files, and labeled data. | Hierarchical folder structure, cloud storage (AWS S3), DVC (Data Version Control). |

Visual Workflow

Figure: DLC Stage 2 Workflow

Figure: Keypoint & Frame Selection Logic

Within the broader thesis of employing DeepLabCut (DLC) for animal behavior neuroscience research, the manual annotation stage is a critical bottleneck. This stage determines the quality of the ground truth data used to train the pose estimation model, directly impacting downstream analyses of neural correlates and behavioral pharmacology. This guide details strategies to optimize this process for efficiency and accuracy.

Foundational Principles and Quantitative Benchmarks

Effective labeling is predicated on two pillars: inter-rater reliability and labeling efficiency. The table below summarizes key quantitative benchmarks from recent literature for establishing annotation quality control.

Table 1: Key Metrics for Annotation Quality and Efficiency

| Metric | Target Benchmark | Measurement Method | Impact on DLC Model |

|---|---|---|---|

| Inter-Rater Reliability (IRR) | ICC(2,1) > 0.99 | Intraclass Correlation Coefficient (Two-way random, absolute agreement) | High IRR ensures consistent ground truth, reducing model confusion. |

| Mean Pixel Error (MPE) | < 5px (for typical 500x500 frame) | Average distance between annotators' labels for the same point. | Lower MPE leads to lower training error and higher model precision. |

| Frames Labeled per Hour | 50-200 (task-dependent) | Count of fully annotated frames per annotator hour. | Determines project timeline; can be optimized with workflow tools. |

| Intra-Rater (Test-Retest) Error | < 2.5px | Average distance between labels from the same annotator on a repeated frame. | Measures intra-rater reliability; critical for dataset cohesion. |

Detailed Experimental Protocol for Establishing Annotation Standards

Protocol: Calibration and Reliability Assessment for Annotation Team

- Selection of Calibration Frame Set: Randomly select 50-100 representative frames from the full video corpus, encompassing the full range of animal poses, lighting conditions, and occlusion scenarios expected in the study.

- Independent Annotation: All annotators on the team independently label the entire calibration set using the defined DLC project configuration (body parts, labeling order).

- Statistical Analysis: Calculate Inter-Rater Reliability (ICC) and Mean Pixel Error (MPE) for each body part across all annotators using the calibration set labels.

- Discrepancy Resolution & Guideline Refinement: Hold a consensus meeting to review frames with the highest disagreement. Establish explicit, written rules for edge cases (e.g., occluded limb location, top-of-head vs. ear base).

- Re-test: Annotators re-label a subset (20%) of the calibration frames after guideline refinement. Re-calculate metrics to confirm improvement.

- Approval: Annotators achieving benchmark metrics (ICC>0.99, MPE<5px) proceed to label the full dataset. Periodic re-checks (every 500 frames) are mandated to prevent "labeling drift."
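The Statistical Analysis step can be computed as follows. This sketch assumes labels from all annotators are stacked into one array. `icc_2_1` implements the Shrout and Fleiss ICC(2,1) formula (two-way random effects, absolute agreement, single rater) for one coordinate of one body part at a time, with frames as targets, and `mean_pixel_error` averages the pairwise inter-annotator distance per body part.

```python
from itertools import combinations

import numpy as np


def icc_2_1(Y):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    Y: (n_targets, k_raters) array, e.g. the x-coordinate of one body part
    on each calibration frame (rows) as labeled by each annotator (columns).
    """
    Y = np.asarray(Y, dtype=float)
    n, k = Y.shape
    grand = Y.mean()
    ss_rows = k * np.sum((Y.mean(axis=1) - grand) ** 2)  # between-frame variation
    ss_cols = n * np.sum((Y.mean(axis=0) - grand) ** 2)  # systematic annotator offsets
    ss_err = np.sum((Y - grand) ** 2) - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return float((msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n))


def mean_pixel_error(labels):
    """Mean pairwise inter-annotator distance per body part.

    labels: (n_annotators, n_frames, n_keypoints, 2) array of (x, y) in pixels.
    """
    dists = [np.linalg.norm(labels[i] - labels[j], axis=-1)
             for i, j in combinations(range(len(labels)), 2)]
    return np.nanmean(np.stack(dists), axis=(0, 1))
```

Because the absolute-agreement ICC penalizes constant offsets between annotators, a rater who consistently clicks one pixel to the side lowers the ICC even when their labels correlate perfectly with everyone else's.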

Optimized Workflow for Manual Annotation

The following diagram outlines the systematic workflow for efficient and accurate manual annotation within a DLC project, incorporating quality control checkpoints.

Figure: DLC Manual Annotation Quality Assurance Workflow

The Scientist's Toolkit: Key Reagent Solutions for Behavioral Annotation

Table 2: Essential Research Reagents & Tools for DLC Annotation

| Item | Function in Annotation Process | Example/Note |

|---|---|---|

| High-Contrast Animal Markers | Creates artificial, high-contrast keypoints for benchmarking DLC or simplifying initial labeling. | Non-toxic, water-resistant fur dyes (e.g., Nyanzol-D) or small reflective markers for high-speed tracking. |

| Standardized Illumination | Provides consistent lighting to minimize video artifact variability, simplifying label definition. | Infrared (IR) LED arrays for dark-phase rodent studies; diffused white light for consistent color. |

| DLC-Compatible Annotation GUI | The primary software interface for efficient manual clicking and frame navigation. | DeepLabCut's labeling GUI (native) or SLEAP's labeling GUI. Efficiency hinges on keyboard shortcuts. |

| Ergonomic Input Devices | Reduces annotator fatigue and improves precision during long labeling sessions. | Gaming-grade mouse with adjustable DPI, graphic tablet (e.g., Wacom), or ergonomic chair. |

| Computational Hardware | Enables smooth display of high-resolution, high-frame-rate video during labeling. | GPU (for rapid frame loading), high-resolution monitor, and fast SSD storage for video files. |

| Data Management Scripts | Automates file organization, label aggregation, and initial quality checks. | Custom Python scripts to shuffle/extract frames, collate .csv files from multiple annotators, and compute initial MPE. |

Advanced Strategies for Complex Behaviors

For complex behavioral paradigms (e.g., social interaction, drug-induced locomotor changes), a tiered labeling approach is recommended. The following diagram illustrates the logical decision process for applying advanced labeling strategies to different experimental scenarios.

Figure: Decision Logic for Advanced Labeling Strategies

Protocol: Sparse Labeling with Temporal Propagation

- Frame Extraction: Instead of labeling every frame, extract frames at a lower frequency (e.g., every 5th or 10th frame) using DLC's `extract_frames` with uniform sampling or a custom temporal sampler.

- Annotation: Manually label only this sparse set of frames with high precision.

- Initial Training: Train a preliminary DLC model on this sparse set.

- Prediction & Interpolation: Use this preliminary model to generate predictions for all unlabeled frames in the video with DLC's `analyze_videos` and `create_labeled_video` functions.

- Correction & Refinement: Manually correct the model's predictions on a new, smaller set of outlier frames (identified by low prediction likelihood). Add these corrected frames to the training set.

- Full Training: Iterate or proceed to train the final model on the enriched dataset. This protocol can reduce manual labeling effort by 60-80% for long videos with smooth motion.
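The sampling and outlier-selection steps reduce to index bookkeeping. The helpers below are hypothetical, not DLC functions: `sparse_indices` implements the every-Nth-frame sampler, and `low_confidence_frames` ranks frames by their lowest keypoint likelihood (taken from the preliminary model's predictions) and returns the least confident ones for correction. The 0.6 threshold is an example value.

```python
import numpy as np


def sparse_indices(n_frames, step=10, offset=0):
    """Every `step`-th frame index, starting at `offset` (e.g., step=5 or 10)."""
    return np.arange(offset, n_frames, step)


def low_confidence_frames(likelihood, threshold=0.6, max_frames=20, exclude=()):
    """Frames to send back for manual correction, least confident first.

    likelihood: (n_frames, n_keypoints) array of per-keypoint prediction likelihoods.
    A frame qualifies if its worst keypoint falls below `threshold`; frames listed in
    `exclude` (e.g., already labeled) are skipped.
    """
    worst = np.asarray(likelihood, dtype=float).min(axis=1)
    candidates = np.flatnonzero(worst < threshold)
    candidates = np.setdiff1d(candidates, np.asarray(exclude, dtype=int))
    order = np.argsort(worst[candidates], kind="stable")
    return candidates[order][:max_frames]
```

Passing the sparse indices as `exclude` keeps the correction round focused on frames the annotators have not yet seen.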

This guide details the critical model training stage within a comprehensive thesis on employing DeepLabCut (DLC) for robust markerless pose estimation in animal behavior neuroscience and preclinical drug development.

Network Architecture & Hyperparameter Configuration

By default, DeepLabCut uses a ResNet backbone (typically ResNet-50 or ResNet-101) for feature extraction, followed by transposed convolutional layers that upsample the features into a heatmap (score map) for each keypoint.
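To make the heatmap output concrete, the sketch below converts per-keypoint score maps back to image coordinates by taking each map's peak. It assumes an output stride of 8 (check your model's configuration) and places each peak at the center of its image patch; DLC additionally predicts sub-cell location-refinement offsets, which this sketch omits.

```python
import numpy as np


def decode_heatmaps(heatmaps, stride=8):
    """Convert per-keypoint score maps to image coordinates.

    heatmaps: (h, w, n_keypoints) network output at 1/stride of the input resolution.
    Returns an (n_keypoints, 3) array of x, y (pixels) and the peak score.
    """
    h, w, n_kp = heatmaps.shape
    flat = heatmaps.reshape(h * w, n_kp)
    idx = flat.argmax(axis=0)                   # peak location per keypoint
    rows, cols = np.unravel_index(idx, (h, w))
    x = cols * stride + 0.5 * stride            # map cell index to the center of its image patch
    y = rows * stride + 0.5 * stride
    score = flat[idx, np.arange(n_kp)]
    return np.stack([x, y, score], axis=1).astype(float)
```

Without refinement offsets, localization is quantized to the stride, which is why the upsampling layers and offset prediction matter for sub-pixel accuracy.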

Table 1: Standard vs. Optimized Network Parameters for Rodent Behavioral Analysis

| Parameter | DLC Standard Default | Recommended for Complex Behavior (e.g., Social Interaction) | Recommended for High-Throughput Screening | Function & Rationale |

|---|---|---|---|---|

| Backbone | ResNet-50 | ResNet-101 | EfficientNet-B3 | Deeper networks (ResNet-101) capture finer features; EfficientNet offers accuracy-efficiency trade-off. |

| Global Learning Rate | 0.0005 | 0.0001 (with decay) | 0.001 | Lower rates stabilize training on variable behavioral data; higher rates can accelerate convergence in controlled setups. |

| Batch Size | 8 | 4 - 8 | 16 - 32 | Smaller batches may generalize better for heterogeneous poses; larger batches suit consistent, high-volume data. |

| Optimizer | Adam | AdamW | SGD with Nesterov | AdamW decouples weight decay, improving generalization. SGD with momentum can generalize well but usually needs a tuned learning-rate schedule. |

| Weight Decay | Not Explicitly Set | 0.01 | 0.0005 | Regularizes network to prevent overfitting to specific animals or environmental artifacts. |

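| Training Iterations (steps) | Variable (~200k steps) | 500k - 1M steps | 200k - 400k steps | Complex behaviors require more iterations to learn pose variance from drug effects or social dynamics. |

Note: default values differ between DeepLabCut versions and backends (TensorFlow in 2.x, PyTorch in 3.x); confirm them against the training configuration file generated for your project.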

Protocol 1: Hyperparameter Optimization via Grid Search

- Define a search space for 2-3 key parameters (e.g., learning rate: [0.001, 0.0005, 0.0001], batch size: [4, 8, 16]).

- Hold out a fixed validation dataset from the labeled frames.

- Train multiple DLC models in parallel, each with a unique parameter combination, for a fixed number of iterations (e.g., 50k).

- Evaluate each model on the validation set using the Root Mean Square Error (RMSE) in pixels.

- Select the parameter set yielding the lowest validation RMSE for full-scale training.
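The protocol above maps onto a simple loop. In this sketch, `train_and_evaluate` is a hypothetical callable you supply (for example, a wrapper that writes the settings into the training configuration, trains for the fixed number of iterations, and returns validation RMSE); the grid values are the ones listed in the protocol.

```python
from itertools import product


def grid_search(train_and_evaluate, learning_rates, batch_sizes, iterations=50_000):
    """Evaluate every (learning rate, batch size) pair and return the lowest-RMSE setting.

    train_and_evaluate: user-supplied (hypothetical) callable that trains one model
    with the given settings and returns its RMSE in pixels on the fixed validation set.
    """
    results = []
    for lr, batch_size in product(learning_rates, batch_sizes):
        rmse = train_and_evaluate(lr=lr, batch_size=batch_size, iterations=iterations)
        results.append({"lr": lr, "batch_size": batch_size, "val_rmse": float(rmse)})
    best = min(results, key=lambda r: r["val_rmse"])
    return best, results
```

With three values per parameter this is nine short training runs; keeping the validation frames fixed across runs is what makes their RMSE values comparable.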

Data Augmentation Strategies for Behavioral Robustness

Augmentation is vital to simulate biological variance and prevent overfitting to lab-specific conditions.

Table 2: Augmentation Pipeline for Preclinical Research

| Augmentation Type | Technical Parameters | Neuroscience/Pharmacology Rationale |

|---|---|---|

| Spatial: Affine Transformations | Rotation: ± 30°; Scaling: 0.7-1.3; Shear: ± 10° | Mimics variable animal orientation and distance to camera in open field or home cage. |

| Spatial: Elastic Deformations | Alpha: 50-150 px; Sigma: 5-8 px | Simulates natural body fluidity and non-rigid deformations during grooming or rearing. |

| Photometric: Color Jitter | Brightness: ± 30%; Contrast: ± 30%; Saturation: ± 30% | Accounts for differences in lighting across experimental rigs, times of day, or drug administration setups. |

| Photometric: Motion Blur | Kernel Size: 3x3 to 7x7 | Simulates blur from rapid movements (e.g., head twitches, seizures) so the network still localizes keypoints in blurred frames. |

| Contextual: CutOut / Random Erasing | Max Patch Area: 10-20% of image | Forces model to rely on multiple body parts, improving robustness if a keypoint is occluded by a feeder, toy, or conspecific. |
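The key rule when augmenting pose data is that spatial transforms must be applied identically to the image and its keypoint labels, whereas photometric changes and CutOut leave labels untouched. DLC applies augmentation internally through its training configuration; the standalone NumPy sketch below only illustrates the affine, color-jitter, and CutOut rows of the table using their parameter ranges (elastic deformation and motion blur are omitted). All function names are illustrative.

```python
import numpy as np


def affine_matrix(center, angle_deg, scale):
    """2x3 rotation+scaling about `center`; same layout as OpenCV's getRotationMatrix2D."""
    a = scale * np.cos(np.radians(angle_deg))
    b = scale * np.sin(np.radians(angle_deg))
    cx, cy = center
    return np.array([[a, b, (1 - a) * cx - b * cy],
                     [-b, a, b * cx + (1 - a) * cy]])


def transform_keypoints(M, keypoints):
    """Apply the same 2x3 affine matrix used to warp the image to (n, 2) keypoints."""
    return keypoints @ M[:, :2].T + M[:, 2]


def random_spatial(rng, image_shape, max_rot=30.0, scale_range=(0.7, 1.3)):
    """Sample a rotation (±max_rot degrees) and scale about the image center."""
    h, w = image_shape[:2]
    return affine_matrix((w / 2, h / 2), rng.uniform(-max_rot, max_rot), rng.uniform(*scale_range))


def photometric_jitter(img, rng, brightness=0.3, contrast=0.3):
    """Random brightness/contrast change (±30% by default) on a float image in [0, 255]."""
    c = 1 + rng.uniform(-contrast, contrast)
    b = 1 + rng.uniform(-brightness, brightness)
    mean = img.mean()
    return np.clip(((img - mean) * c + mean) * b, 0, 255)


def cutout(img, rng, max_area=0.2):
    """Zero a random rectangle covering at most `max_area` of the image (labels unchanged)."""
    h, w = img.shape[:2]
    side = np.sqrt(rng.uniform(0, max_area))
    ph, pw = max(1, int(h * side)), max(1, int(w * side))
    y0, x0 = rng.integers(0, h - ph + 1), rng.integers(0, w - pw + 1)
    out = img.copy()
    out[y0:y0 + ph, x0:x0 + pw] = 0
    return out
```

To warp the image itself, pass the same matrix to OpenCV, e.g. `cv2.warpAffine(image, M, (width, height))`, so that image and labels stay aligned.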

Protocol 2: Implementing Progressive Augmentation

- Initial Training: Begin with moderate augmentation (e.g., rotation ±20°, mild color jitter). Train for the first 30% of total iterations.

- Intensification: Gradually increase augmentation strength (e.g., rotation to ±30°, add motion blur). Train for the next 50% of iterations.

- Fine-tuning: Reduce augmentation to initial levels or disable photometric changes for the final 20% of iterations. This allows the network to fine-tune on data closer to the original distribution.
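If your training loop lets you change augmentation settings during training, the three phases can be expressed as a schedule. This is a hypothetical helper (not a DLC option) that returns settings based on the protocol's example values as a function of the current step.

```python
def augmentation_schedule(step, total_steps, base_rotation=20.0, max_rotation=30.0):
    """Augmentation settings for the three-phase protocol (30% / 50% / 20% of training)."""
    frac = step / total_steps
    if frac < 0.3:  # phase 1: moderate augmentation
        return {"rotation_deg": base_rotation, "motion_blur": False, "photometric": True}
    if frac < 0.8:  # phase 2: ramp rotation up linearly and add motion blur
        ramp = (frac - 0.3) / 0.5
        rotation = base_rotation + ramp * (max_rotation - base_rotation)
        return {"rotation_deg": rotation, "motion_blur": True, "photometric": True}
    # phase 3: back to initial spatial levels, photometric changes disabled
    return {"rotation_deg": base_rotation, "motion_blur": False, "photometric": False}
```

A linear ramp avoids an abrupt jump in task difficulty at the phase boundary; a step change would also follow the protocol's wording.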

Iterative Refinement and Active Learning

The DLC framework emphasizes an iterative training and refinement cycle to correct labeling errors and improve model performance.

Protocol 3: The Refinement Loop

- Initial Training: Train a network on the initially labeled dataset (Dataset 1).

- Evaluation: Analyze the model on a novel video (not used in training) using DLC's `analyze_videos` and `create_labeled_video` functions.

- Extraction of Outlier Frames: Use DLC's `extract_outlier_frames` function. It applies a statistical approach (based on network prediction confidence and consistency across frames) to identify frames where the model is most uncertain.

- Labeling & Refinement: Manually correct the labels on these extracted outlier frames in the DLC GUI.

- Merging & Retraining: Merge the newly corrected frames with Dataset 1 to create Dataset 2. Re-train the network from its pre-trained state on this expanded, corrected dataset.

- Convergence Check: Repeat steps 2-5 until model performance (e.g., RMSE, percent correct tracks) plateaus on a held-out test set. Typically, 1-3 refinement cycles yield significant gains.
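The DLC calls behind this loop can be scripted. The sketch below passes the `deeplabcut` module in as an argument so the call sequence can be tested without a GPU; the function names are DLC's public API, but arguments beyond the config path and video list are left at their defaults, and the manual correction step (`refine_labels`, which opens the labeling GUI) is run between the two helpers. Continuing training from the previous snapshot, rather than from ImageNet weights, requires editing the training configuration and is not shown.

```python
def propose_outliers(dlc, config_path, videos):
    """Steps 2-3: analyze novel videos, render them, and extract outlier frames."""
    dlc.analyze_videos(config_path, videos)
    dlc.create_labeled_video(config_path, videos)
    dlc.extract_outlier_frames(config_path, videos)


def retrain_after_refinement(dlc, config_path):
    """Step 5, run after correcting labels with dlc.refine_labels(config_path) (step 4)."""
    dlc.merge_datasets(config_path)
    dlc.create_training_dataset(config_path)
    dlc.train_network(config_path)
    dlc.evaluate_network(config_path)


# Usage (paths are placeholders):
#   import deeplabcut
#   propose_outliers(deeplabcut, "/path/to/config.yaml", ["/path/to/novel_video.mp4"])
#   deeplabcut.refine_labels("/path/to/config.yaml")  # correct labels in the GUI
#   retrain_after_refinement(deeplabcut, "/path/to/config.yaml")
```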

Figure: DLC Iterative Refinement Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for DLC-Based Behavioral Experiments

| Item/Reagent | Function in DLC Experiment | Specification Notes |

|---|---|---|

| High-Speed Camera | Captures fast motor sequences (e.g., gait, tremors). | ≥ 100 fps; global shutter preferred to avoid rolling-shutter distortion. |

| Controlled Infrared (IR) Lighting | Enables consistent tracking in dark-cycle or dark-adapted behavioral tasks. | 850nm or 940nm LEDs; uniform illumination to minimize shadows. |

| Multi-Animal Housing Arena | Generates data for social interaction studies. | Sized for species; contrasting background (e.g., white for black mice). |

| Calibration Grid/Board | Corrects for lens distortion, ensures metric measurements (e.g., distance traveled). | Checkerboard or grid of known spacing. |

| DLC-Compatible GPU Workstation | Accelerates model training and video analysis. | NVIDIA GPU (≥8GB VRAM); CUDA and cuDNN installed. |

| Behavioral Annotation Software (BORIS, JAABA) | Used for generating ground-truth event labels (e.g., "rearing", "grooming") to correlate with DLC pose data. | Enables multi-modal behavioral analysis. |

| Data Sanity Check Toolkits | Validates pose estimates before analysis. | Custom scripts to plot trajectory smoothness, bone length consistency over time. |
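One such sanity check is a bone-length consistency test. For two keypoints joined by an approximately rigid segment (e.g., `left_ear` and `right_ear` in a top-down view), the distance between them should stay near its session median, and frames that deviate strongly usually indicate a mislabeled keypoint. The function name and the 25% tolerance below are hypothetical choices, not DLC defaults.

```python
import numpy as np


def bone_length_outliers(p1, p2, rel_tol=0.25):
    """Flag frames where a nominally rigid segment changes length.

    p1, p2  : (n_frames, 2) x, y coordinates of the two keypoints
    rel_tol : allowed fractional deviation from the session median (assumed value)
    Returns (lengths, mask); mask is True for suspect frames. NaN frames are never flagged.
    """
    lengths = np.linalg.norm(np.asarray(p1, float) - np.asarray(p2, float), axis=1)
    median = np.nanmedian(lengths)
    with np.errstate(invalid="ignore"):  # NaN comparisons evaluate to False
        mask = np.abs(lengths - median) > rel_tol * median
    return lengths, mask
```

Plotting `lengths` over time alongside `mask` gives a quick visual check of where tracking breaks down.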

This whitepaper details Stage 5 of a comprehensive thesis on implementing DeepLabCut (DLC) for robust animal pose estimation in behavioral neuroscience and psychopharmacology. Following network training, this stage transforms raw 2D/3D coordinate outputs into biologically meaningful, analysis-ready data. It addresses the critical post-processing pipeline involving video analysis, trajectory filtering for noise reduction, and the generation of publication-quality visualizations, which are essential for hypothesis testing in research and drug development.

Core Video Analysis with DeepLabCut

Following pose estimation on new videos, DLC outputs pose data in structured formats (e.g., .h5 files). The analysis phase extracts kinematic and behavioral metrics.

Key Analysis Outputs:

- Kinematic Variables: Speed, velocity, acceleration, distance traveled, angular changes.

- Event Detection: Identification of discrete behaviors (e.g., rearing, grooming, freezing) based on body part configurations and movement.

- Interaction Metrics: Proximity, contact duration, and coordinated movement between animals or with objects.
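Event detection from movement often reduces to finding sustained runs of a per-frame condition. A minimal sketch for freezing, assuming a speed trace in cm/s: the helper name `detect_bouts`, the speed threshold, and the 1 s minimum duration are hypothetical values that would need validation against manual scoring.

```python
import numpy as np


def detect_bouts(mask, fps, min_dur_s=1.0):
    """Return (start, end) frame indices (end exclusive) of runs where mask is True
    that last at least min_dur_s seconds."""
    # Pad with False so every run has both a rising and a falling edge
    m = np.concatenate([[False], np.asarray(mask, bool), [False]])
    edges = np.flatnonzero(np.diff(m.astype(int)))
    starts, ends = edges[::2], edges[1::2]
    keep = (ends - starts) >= int(round(min_dur_s * fps))
    return list(zip(starts[keep].tolist(), ends[keep].tolist()))
```

For example, `detect_bouts(speed_cm_s < 1.0, fps=30)` would list candidate freezing bouts, where `speed_cm_s` is a per-frame speed array and the 1.0 cm/s threshold is an assumed value.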

Experimental Protocol: Extracting Kinematic Metrics from DLC Output

- Data Loading: Load the DLC-generated .h5 file (containing coordinates and likelihoods) into a Python environment using pandas.

- Data Structuring: Reshape the multi-index DataFrame to have columns for each body part's x, y, and likelihood.

- Likelihood Thresholding: Filter coordinates based on a likelihood threshold (e.g., 0.95). Coordinates below the threshold are set to NaN.

- Pixel-to-Real-World Conversion: Apply a linear transformation using a known scale (e.g., pixels/cm) derived from calibration.

- Smoothing: Interpolate the NaN gaps left by thresholding, then apply a low-pass Butterworth filter (e.g., 10 Hz cutoff) to the x and y time series to reduce high-frequency noise. Interpolation must come first because NaNs propagate through the filter.

- Metric Calculation: Compute derivatives. For speed (centroid movement), with frame interval $\Delta t = 1/\text{fps}$:

  $$d_t = \sqrt{(x_t - x_{t-1})^2 + (y_t - y_{t-1})^2}, \qquad v_t = \frac{d_t}{\Delta t}$$

- Temporal Binning: Aggregate calculated metrics (mean, max) into biologically relevant time bins (e.g., 1-minute bins for a 10-minute open field test).
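The core steps of this protocol (thresholding, unit conversion, gap filling, Butterworth smoothing, speed) can be sketched with pandas and SciPy. This assumes a single-animal DLC output whose columns follow DLC's three-level (scorer, bodyparts, coords) MultiIndex, as returned by `pd.read_hdf`; the function name `dlc_speed`, the filter order, and the default values are assumptions, not DLC settings.

```python
import numpy as np
import pandas as pd
from scipy.signal import butter, filtfilt


def dlc_speed(df, bodypart, fps, px_per_cm, p_cutoff=0.95, cutoff_hz=10.0, order=4):
    """Speed (cm/s) of one body part from a single-animal DLC DataFrame.

    Assumes DLC's (scorer, bodyparts, coords) column MultiIndex.
    cutoff_hz must be below fps / 2 (the Nyquist frequency).
    """
    scorer = df.columns.get_level_values(0)[0]
    bp = df[scorer][bodypart]                          # columns: x, y, likelihood
    xy = bp[["x", "y"]].copy()
    xy.loc[bp["likelihood"] < p_cutoff, :] = np.nan    # likelihood thresholding
    xy = xy / px_per_cm                                # pixels -> cm
    # Fill NaN gaps by linear interpolation: filtfilt cannot handle NaNs
    xy = xy.interpolate(limit_direction="both")
    b, a = butter(order, cutoff_hz, btype="low", fs=fps)  # low-pass Butterworth
    x = filtfilt(b, a, xy["x"].to_numpy())             # zero-phase (forward-backward)
    y = filtfilt(b, a, xy["y"].to_numpy())
    displacement = np.sqrt(np.diff(x) ** 2 + np.diff(y) ** 2)  # cm per frame
    return displacement * fps                          # divide by frame interval 1/fps
```

Typical use would be `speed = dlc_speed(pd.read_hdf(path_to_h5), "centroid", fps=30, px_per_cm=px_per_cm)`, where `path_to_h5` and `px_per_cm` come from your own project; 1-minute means then follow from `pd.Series(speed).groupby(np.arange(len(speed)) // (60 * 30)).mean()`.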

Table 1: Representative Kinematic Data from a Mouse Open Field Test (5-min trial)

| Metric | Mean ± SEM | Unit | Relevance in Drug Studies |
|---|---|---|---|
| Total Distance Traveled | 3520 ± 210 | cm | General locomotor activity |
| Average Speed (Movement Bouts) | 12.5 ± 0.8 | cm/s | Motor coordination & vigor |
| Time Spent in Center Zone | 58.3 ± 7.2 | s | Anxiety-like behavior |
| Rearing Events | 42 ± 5 | count | Exploratory drive |
| Grooming Duration | 85 ± 12 | s | Stereotypic/self-directed behavior |

Trajectory Filtering with Kalman and Related Filters

Raw trajectories contain noise from estimation errors and occlusions. Filtering is essential for accurate velocity/acceleration calculation and 3D reconstruction.

Kalman Filter Theory

The Kalman Filter (KF) is a recursive estimator that predicts an object's state (position, velocity) and corrects the prediction with each new measurement. It is the optimal (minimum mean-squared-error) estimator for linear systems with Gaussian noise. For animal tracking, a constant-velocity model is often appropriate.

State Vector: x = [pos_x, pos_y, vel_x, vel_y]^T

Measurement: z = [measured_pos_x, measured_pos_y]^T

The KF operates in a Predict-Update cycle, optimally balancing the previous state estimate with the new, noisy measurement from DLC.
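For reference, the standard predict-update equations for this constant-velocity model are given below, with frame interval $\Delta t$, state covariance $P$, process noise covariance $Q$, and measurement noise covariance $R$. During occlusions, only the predict line is applied.

$$
\begin{aligned}
F &= \begin{bmatrix} 1 & 0 & \Delta t & 0 \\ 0 & 1 & 0 & \Delta t \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}, \qquad
H = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \end{bmatrix} \\
\text{Predict:}\quad \hat{x}_{k|k-1} &= F\,\hat{x}_{k-1|k-1}, \qquad P_{k|k-1} = F\,P_{k-1|k-1}\,F^\top + Q \\
\text{Update:}\quad K_k &= P_{k|k-1} H^\top \left(H\,P_{k|k-1} H^\top + R\right)^{-1} \\
\hat{x}_{k|k} &= \hat{x}_{k|k-1} + K_k\left(z_k - H\,\hat{x}_{k|k-1}\right), \qquad P_{k|k} = (I - K_k H)\,P_{k|k-1}
\end{aligned}
$$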

Implementation Protocol: Kalman Filtering for 2D DLC Trajectories

Materials: DLC output coordinates, Python with pykalman or filterpy library.

Initialize Filter Parameters:

- `state_transition_matrix`: Defines the constant velocity model.

- `observation_matrix`: Maps the state (position and velocity) to the measurement (position only).

- `process_noise_cov`: Uncertainty in the model's predictions (tunable).

- `observation_noise_cov`: Measurement error variance, estimated from DLC's likelihood or p-cutoff.

Filter Application:

- Iterate through each frame's measured coordinates.

- Run the `predict()` and `update()` steps.

- Store the filtered state estimates. For offline analysis, a backward Rauch-Tung-Striebel pass (e.g., pykalman's `smooth()` or filterpy's `rts_smoother()`) converts these into smoothed estimates that also use information from later frames.

Handle Missing Data (Occlusions):

- For frames where likelihood is below threshold (NaN), run only the `predict()` step without `update()`.

- This uses the model to extrapolate the trajectory during short occlusions.


Validation: Visually and quantitatively compare raw vs. filtered trajectories. Calculate the reduction in implausible, high-frequency jitter.
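A minimal NumPy sketch of this protocol, written without filterpy or pykalman so that each step is visible. It is a forward filter only, with no smoothing pass. The process-noise model (discrete white-noise acceleration), the initial covariance, the default `q` and `r`, and the function name `kalman_cv_2d` are all assumptions to be tuned or replaced for a given dataset.

```python
import numpy as np


def kalman_cv_2d(z, dt, q=1e3, r=4.0):
    """Forward constant-velocity Kalman filter for one 2D keypoint.

    z  : (n, 2) measured x, y in pixels; NaN rows = low-likelihood frames
    dt : frame interval in seconds (1 / fps)
    q  : white-noise acceleration variance, (px/s^2)^2 (tuning assumption)
    r  : measurement noise variance, px^2 (tuning assumption)
    Returns (n, 4) filtered states [x, y, vx, vy]; assumes at least one valid frame,
    and rows before the first valid frame stay NaN.
    """
    z = np.asarray(z, dtype=float)
    F = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)    # constant-velocity transition
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)    # observe position only
    g = np.array([[dt**4 / 4, dt**3 / 2],
                  [dt**3 / 2, dt**2]])
    Q = q * np.kron(g, np.eye(2))                # white-noise acceleration, [x, y, vx, vy] order
    R = r * np.eye(2)
    valid = ~np.isnan(z).any(axis=1)
    first = int(np.argmax(valid))                # first frame with a measurement
    x = np.array([z[first, 0], z[first, 1], 0.0, 0.0])
    P = np.diag([r, r, 1e4, 1e4])                # velocity initially very uncertain
    out = np.full((len(z), 4), np.nan)
    for k in range(first, len(z)):
        x = F @ x                                # predict
        P = F @ P @ F.T + Q
        if valid[k]:                             # update only when DLC gave a measurement
            innov = z[k] - H @ x
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
            x = x + K @ innov
            P = (np.eye(4) - K @ H) @ P
        out[k] = x                               # occluded frames keep the prediction
    return out
```

Larger `q` lets the filter follow abrupt accelerations (faster but noisier), while larger `r` trusts DLC less; both are worth checking against the raw-versus-filtered comparison in the Validation step.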

Table 2: Comparison of Trajectory Filtering Algorithms

| Filter Type | Best For | Key Assumptions | Computational Cost | Implementation Complexity |
|---|---|---|---|---|
| Kalman Filter (KF) | Linear dynamics, Gaussian noise. Real-time. | Linear state transitions, Gaussian errors. | Low | Medium |
| Extended Kalman Filter (EKF) | Mildly non-linear systems (e.g., 3D rotation). | Locally linearizable system. | Medium | High |
| Unscented Kalman Filter (UKF) | Highly non-linear dynamics (e.g., rapid turns). | Gaussian state distribution. | Medium-High | High |
| Savitzky-Golay Filter | Offline smoothing of already-cleaned trajectories. | No explicit dynamical model. | Very Low | Low |
| Alpha-Beta (-Gamma) Filter | Simple, constant velocity/acceleration models. | Fixed gains, simplistic model. | Very Low | Low |

Output Visualization for Scientific Communication

Effective visualization communicates complex behavioral data intuitively.

Key Visualization Types:

- Pose Overlays: Superimpose skeleton or keypoints on original video frames.

- Trajectory Plots: 2D path plots, optionally colored by speed or time.

- Kinematic Time Series: Plots of speed, distance, or angle over the session.

- Heatmaps: 2D density plots of animal occupancy or specific body part location.

- Ethograms: Strip charts depicting the temporal sequence of classified behaviors.
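A minimal Matplotlib sketch of two of these views: a trajectory colored by speed and an occupancy heatmap. It assumes positions already converted to cm inside a 40 cm × 40 cm arena; the helper names, the 2 cm bin size, and the colormaps are arbitrary choices. Image coordinates have y pointing down, so these plots appear vertically flipped relative to the video unless the y-axis is inverted.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")                  # headless backend; omit in notebooks
import matplotlib.pyplot as plt


def occupancy_map(x, y, fps, arena_cm=(40.0, 40.0), bin_cm=2.0):
    """Seconds spent in each spatial bin; frames with NaN positions are skipped."""
    ok = ~(np.isnan(x) | np.isnan(y))
    x_edges = np.arange(0.0, arena_cm[0] + bin_cm, bin_cm)
    y_edges = np.arange(0.0, arena_cm[1] + bin_cm, bin_cm)
    counts, x_edges, y_edges = np.histogram2d(x[ok], y[ok], bins=[x_edges, y_edges])
    return counts / fps, x_edges, y_edges


def plot_trajectory_and_heatmap(x, y, speed, fps, arena_cm=(40.0, 40.0)):
    """Two-panel figure: speed-colored path and occupancy heatmap (positions in cm)."""
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4.5))
    # speed has len(x) - 1 samples, so color each point by the step that reached it
    sc = ax1.scatter(x[1:], y[1:], c=speed, s=2, cmap="viridis")
    fig.colorbar(sc, ax=ax1, label="Speed (cm/s)")
    ax1.set(xlim=(0, arena_cm[0]), ylim=(0, arena_cm[1]), aspect="equal",
            xlabel="x (cm)", ylabel="y (cm)", title="Trajectory")
    occ, xe, ye = occupancy_map(x, y, fps, arena_cm)
    # histogram2d indexes [x_bin, y_bin]; transpose so image rows map to y
    im = ax2.imshow(occ.T, origin="lower", cmap="magma",
                    extent=(xe[0], xe[-1], ye[0], ye[-1]))
    fig.colorbar(im, ax=ax2, label="Time (s)")
    ax2.set(xlabel="x (cm)", ylabel="y (cm)", title="Occupancy")
    return fig
```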

Visual Workflows and Pathways

Figure: DLC Stage 5 Post-Processing Workflow

Figure: Kalman Filter Predict-Update Cycle

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools & Reagents for DLC-Based Behavioral Analysis

| Item | Function in Analysis/Deployment | Example Product/Software |
|---|---|---|
| DeepLabCut (Core Software) | Open-source toolbox for markerless pose estimation; provides the initial coordinate data for Stage 5. | DeepLabCut 2.3+ |
| High-Speed Camera | Captures high-resolution, high-frame-rate video with short exposures to minimize motion blur, which is crucial for accurate trajectory filtering. | Basler acA2040-120um, FLIR Blackfly S |
| Calibration Object | Provides spatial scale (pixels/cm) and corrects lens distortion for accurate metric calculation. | Checkerboard (as used by DLC's camera calibration) or ChArUco board |
| Python Scientific Stack | Core programming environment for implementing filtering algorithms and creating custom analyses. | Python 3.8+, NumPy, SciPy, Pandas, Matplotlib |
| Filtering Library | Provides optimized implementations of Kalman filters and related algorithms. | filterpy, pykalman |
| Behavioral Arena (Standardized) | Provides a controlled, replicable environment for video acquisition; essential for cross-study comparison. | Open Field, Elevated Plus Maze (clearly marked zones) |
| Video Annotation Tool | Labels ground-truth events (e.g., grooming start/end) to validate automated kinematic metrics. | BORIS, ELAN |
| Statistical Analysis Software | Performs final hypothesis testing on filtered and visualized behavioral metrics. | GraphPad Prism, R (lme4, emmeans) |

The quantification of naturalistic, socially complex behaviors is a central challenge in animal behavior neuroscience and psychopharmacology. DeepLabCut (DLC), a deep learning-based markerless pose estimation toolbox, has become a cornerstone for this work. This whitepaper explores its advanced applications—multi-animal tracking, 3D reconstruction via multiple cameras, and real-time analysis—which are critical for studying dyadic or group interactions, volumetric motion analysis, and closed-loop experimental paradigms in drug development and systems neuroscience.

Multi-Animal Tracking with DeepLabCut

Core Methodology

Multi-animal tracking in DLC is typically achieved through the maDLC pipeline. The process involves:

- Project Creation: A multi-animal project is initialized, defining all individuals (e.g., `animal1`, `animal2`) and keypoints.

- Annotation: For each frame in the training set, all keypoints on all animals are labeled. Identity is maintained during this process.

- Training: A neural network (e.g., ResNet-50/101 with deconvolution layers) is trained to detect all keypoints and assign them to individual instances using a graph-based association method.

- Inference & Tracking: The model predicts keypoints across the video. A tracking step then links detections over time into tracklets and full tracks that maintain individual identity, often using motion prediction and visual features.

Key Experimental Protocol (Social Interaction Assay)

Objective: Quantify social proximity and directed behaviors between two mice in an open field during a novel compound test.

Protocol:

- Animals: Two age- and weight-matched C57BL/6J mice, habituated to handling.

- Apparatus: A square open-field arena (40 cm × 40 cm), lit uniformly from above. One top-down, high-speed camera (100 fps) is used.

- DLC Workflow:

  - Create an maDLC project with labels `nose`, `left_ear`, `right_ear`, `centroid`, and `tailbase` for each animal.

  - Extract 500 frames from various pilot videos. Annotate all keypoints for both animals in these frames.

  - Train the network for 1.03M iterations, until train/test error plateaus.

  - Analyze novel test videos: run inference, then refine tracks using tracklet-based tracking with a motion model.


- Analysis: Compute derived metrics: inter-animal distance (nose-to-nose), time spent in social zone (<5 cm), and velocity.
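These derived metrics can be computed directly from the tracked coordinates. A minimal NumPy sketch, assuming identity-consistent tracks already converted to cm; the helper name `social_metrics` and the dictionary keys are hypothetical, while the 5 cm zone follows the protocol.

```python
import numpy as np


def social_metrics(nose_a, nose_b, centroid_a, centroid_b, fps, zone_cm=5.0):
    """Dyadic metrics from identity-consistent tracks in cm.

    All position inputs are (n_frames, 2) arrays; NaN marks untracked frames.
    """
    nose_a, nose_b = np.asarray(nose_a, float), np.asarray(nose_b, float)
    dist = np.linalg.norm(nose_a - nose_b, axis=1)             # nose-to-nose distance (cm)
    valid = ~np.isnan(dist)
    time_in_zone = np.count_nonzero(dist[valid] < zone_cm) / fps  # seconds
    # Per-animal speed from frame-to-frame centroid displacement (cm/s)
    speed_a = np.linalg.norm(np.diff(np.asarray(centroid_a, float), axis=0), axis=1) * fps
    speed_b = np.linalg.norm(np.diff(np.asarray(centroid_b, float), axis=0), axis=1) * fps
    return {
        "nose_distance_cm": dist,
        "time_in_social_zone_s": time_in_zone,
        "mean_speed_a_cm_s": float(np.nanmean(speed_a)),
        "mean_speed_b_cm_s": float(np.nanmean(speed_b)),
    }
```

Identity swaps corrupt every metric here, so tracks should be checked (or corrected) before this step.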

Table 1: Performance Metrics of maDLC vs. Manual Scoring

| Metric | maDLC (Mean ± SD) | Manual Scoring | Notes |
|---|---|---|---|
| Detection Accuracy (PCK@0.2) | 98.5% ± 0.7% | 100% (gold standard) | Percentage of Correct Keypoints at a 20% body-length threshold |
| Identity Swap Rate | 0.12 swaps/min | 0 swaps/min | Lower is better; depends on occlusion frequency |
| Processing Speed | 25 fps (on NVIDIA RTX 3080) | ~2 fps (human) | For 1024 × 1024 resolution video |
| Inter-animal Distance Error | 1.2 ± 0.8 mm | N/A | Critical for social proximity analysis |

Table 2: Key Reagent Solutions for Social Behavior Assays

| Item | Function | Example Vendor/Product |
|---|---|---|
| DeepLabCut (maDLC) | Open-source software for multi-animal pose estimation. | GitHub: DeepLabCut |
| High-Speed Camera | Captures fast, nuanced social movements (e.g., sniffing, chasing). | Basler acA2040-120um |
| EthoVision XT | Commercial alternative/validation tool for tracking and behavior analysis. | Noldus Information Technology |
| Custom Python Scripts | For calculating derived social metrics from DLC output. | (In-house development) |
| Test Compound | Novel therapeutic agent (e.g., OXTR agonist) for modulating social behavior. | Tocris Bioscience (example) |

3D Pose Estimation with Multiple Cameras

Core Methodology

3D reconstruction requires synchronizing video streams from multiple cameras (typically 2-4) with known positions.

- Camera Calibration: Record a calibration video of a checkerboard pattern moved throughout the volume. Use DLC's `calibrate_cameras` function to compute intrinsic (focal length, distortion) and extrinsic (position, rotation) parameters for each camera.

- 2D Pose Estimation: Run DLC (single- or multi-animal) on each synchronized video from all cameras.

- Triangulation: Use the calibration parameters and the corresponding 2D keypoints from at least two camera views to compute the 3D (x, y, z) coordinate for each keypoint in each frame via direct linear transform (DLT) or bundle adjustment.
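The triangulation step can be illustrated with linear DLT for a single keypoint. This is a minimal NumPy sketch, not DLC's internal implementation. It assumes distortion-corrected pixel coordinates and known 3×4 projection matrices $P = K[R \mid t]$ from calibration, and the function name `triangulate_dlt` is hypothetical.

```python
import numpy as np


def triangulate_dlt(proj_mats, points_2d):
    """Linear (DLT) triangulation of one keypoint from two or more calibrated views.

    proj_mats : sequence of 3x4 projection matrices P = K [R | t]
    points_2d : sequence of undistorted (u, v) pixel coordinates, one per view
    Returns the 3D point in the calibration (world) frame.
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Each view gives two linear constraints on the homogeneous point X:
        # u * (P[2] . X) - P[0] . X = 0 and v * (P[2] . X) - P[1] . X = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]                      # least-squares null vector of A
    return X[:3] / X[3]             # dehomogenize
```

With noisy detections, adding views or weighting rows by DLC likelihood can improve the estimate; bundle adjustment refines it further by minimizing reprojection error.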

Key Experimental Protocol (Volumetric Gait Analysis)

Objective: Assess the 3D kinematics of a rat's gait in a large arena before and after a neuropathic injury model.

Protocol:

- Animals: Adult Long-Evans rats.