DeepLabCut Complete Guide 2024: Mastering Project Setup, Analysis, and Validation for Biomedical Research

This comprehensive guide provides researchers, scientists, and drug development professionals with a complete workflow for creating, managing, and validating DeepLabCut projects.

Abstract

This comprehensive guide provides researchers, scientists, and drug development professionals with a complete workflow for creating, managing, and validating DeepLabCut projects. It moves from foundational concepts and step-by-step project creation to advanced model training, multi-animal tracking, and behavioral analysis, and it addresses common pitfalls, performance optimization, and GPU acceleration. It culminates in rigorous validation protocols, statistical analysis of pose data, and comparisons with commercial alternatives, equipping users to implement robust, reproducible markerless pose estimation for preclinical studies and translational research.

What is DeepLabCut? A Primer for Researchers on Markerless Pose Estimation

DeepLabCut (DLC) is an open-source toolkit that enables robust markerless pose estimation of user-defined body parts across species. Within the broader thesis of DeepLabCut project creation and management research, the core concept represents a paradigm shift: leveraging transfer learning from computer vision (specifically, human pose estimation models like DeeperCut) to solve the problem of quantifying animal behavior without the need for specialized hardware or invasive markers. This technical guide details the underlying architecture, data requirements, and validation protocols essential for rigorous behavioral phenotyping in research and drug development.

Core Technical Architecture: Adapting Human Pose Networks

The foundational innovation of DLC is the application of a pre-trained deep neural network (ResNet, MobileNet, or EfficientNet) to animal pose estimation via transfer learning. A small set of user-labeled frames "fine-tunes" the last convolutional blocks of the network.

Table 1: Core DLC Network Backbone Comparison (Performance Summary)

| Backbone Model | Typical mAP (on benchmark datasets) | Relative Inference Speed | Recommended Use Case |

|---|---|---|---|

| ResNet-50 | High (~92-95%) | Medium | Standard lab conditions, high accuracy priority. |

| ResNet-101 | Very High (~94-96%) | Slow | Complex behaviors, multi-animal scenarios. |

| MobileNetV2 | Good (~85-90%) | Very Fast | Real-time applications, resource-limited hardware. |

| EfficientNet-B0 | High (~91-94%) | Fast | Optimal balance of speed and accuracy. |

mAP: mean Average Precision for keypoint detection.

Experimental Protocol: Network Training & Fine-tuning

- Frame Extraction & Labeling: Extract 100-200 frames from your video corpus using k-means clustering to ensure diversity. Manually label user-defined body parts (e.g., snout, left_forepaw, tail_base) using the DLC GUI.

- Configuration & Initialization: Define the project (`config.yaml`), specifying the skeleton, body parts, and the pre-trained network backbone. Initialize the model using weights from ImageNet and DeeperCut.

- Fine-tuning: Train the network for a set number of iterations (typically 103,000). Data augmentation (rotation, scaling, cropping) is applied automatically to prevent overfitting.

- Evaluation: The model is evaluated on a held-out portion (~5-20%) of labeled frames. The primary metric is the test error (in pixels), representing the mean distance between predicted and true labels.
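
The test error described in the evaluation step can be illustrated with a minimal sketch. It assumes predictions and labels are available as plain lists of (x, y) tuples; the function name `mean_pixel_error` and the sample coordinates are hypothetical and are not part of the DeepLabCut API.

```python
import math


def mean_pixel_error(predicted, ground_truth):
    """Mean Euclidean distance (px) between predicted and labeled keypoints.

    Both arguments are lists of (x, y) tuples in the same order.
    Keypoints whose ground-truth entry is None (not labeled) are skipped.
    """
    distances = [
        math.dist(p, g)
        for p, g in zip(predicted, ground_truth)
        if g is not None
    ]
    if not distances:
        raise ValueError("no labeled keypoints to compare")
    return sum(distances) / len(distances)


# Hypothetical held-out labels and predictions for three keypoints;
# the third keypoint was not labeled, so it does not count.
truth = [(100.0, 50.0), (120.0, 80.0), None]
pred = [(103.0, 54.0), (120.0, 80.0), (10.0, 10.0)]
test_error = mean_pixel_error(pred, truth)
print(f"test error: {test_error:.2f} px")
```

In practice the same quantity would be computed separately for training and held-out frames so the two errors can be compared.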

From Pixels to Behavioral Metrics: The Analysis Pipeline

Pose estimation outputs (X, Y coordinates and likelihood for each body part per frame) are the raw data for quantification.

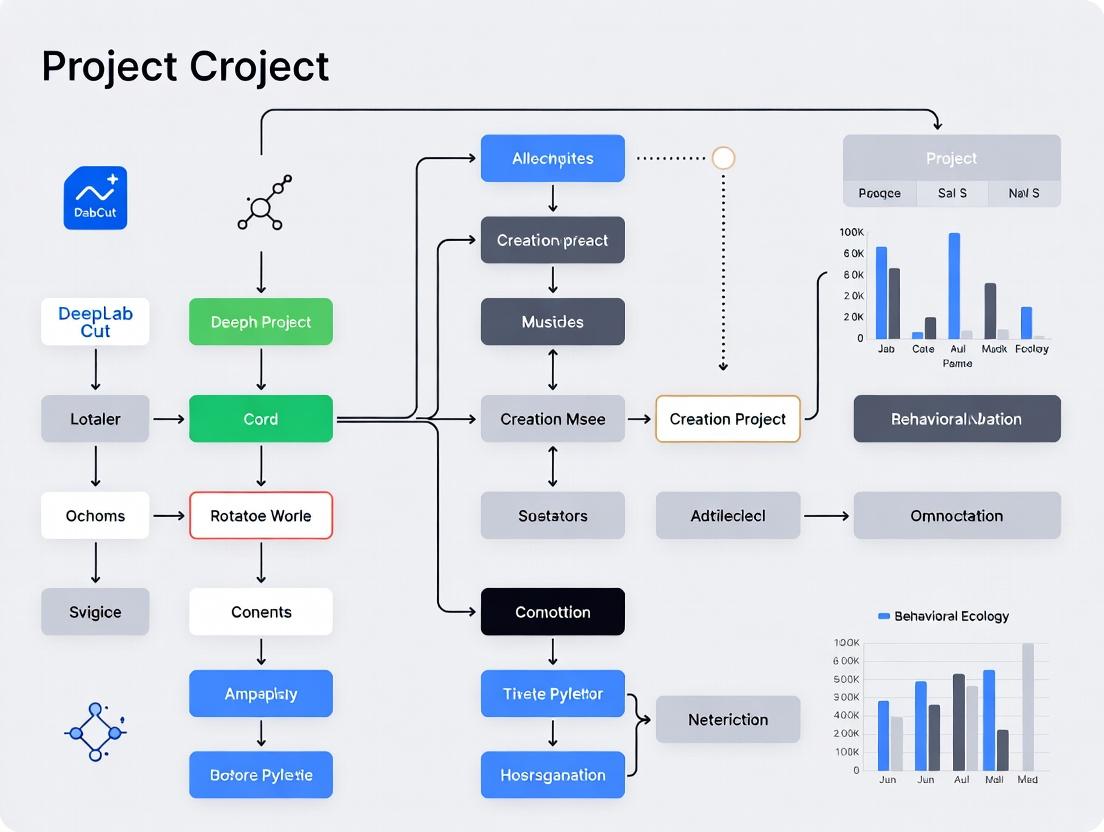

Workflow diagram: DeepLabCut Behavioral Quantification Workflow

Experimental Protocol: Trajectory Post-Processing & Feature Extraction

- Filtering: Apply a median filter or Savitzky-Golay filter to smooth trajectories. Use pandas or DLC's `filterpredictions` function.

- Imputation: For low-likelihood predictions (p<0.6), interpolate using linear or spline methods.

- Kinematic Feature Extraction:

  - Speed/Distance: Calculate Euclidean distance between successive points for a body part centroid.

  - Angles: Compute joint angles (e.g., elbow, knee) from three related body parts.

  - Distances: Measure distances between body parts (e.g., nose-to-object).

- Ethograms: Use supervised (e.g., Random Forest) or unsupervised (e.g., PCA-then-clustering) methods to classify behavioral states (e.g., rearing, grooming) from kinematic features.
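
The imputation and kinematic steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: coordinates and likelihoods are simple per-frame lists, the 0.6 threshold comes from the protocol, and all function names and sample values are hypothetical rather than part of DLC.

```python
import math


def interpolate_low_confidence(values, likelihoods, threshold=0.6):
    """Linearly interpolate samples whose likelihood is below the threshold.

    Low-confidence samples at the start or end of the trace are filled
    with the nearest confident value.
    """
    confident = [i for i, p in enumerate(likelihoods) if p >= threshold]
    if not confident:
        raise ValueError("no confident samples to interpolate from")
    filled = list(values)
    for i, p in enumerate(likelihoods):
        if p >= threshold:
            continue
        before = [j for j in confident if j < i]
        after = [j for j in confident if j > i]
        if not before:
            filled[i] = values[after[0]]
        elif not after:
            filled[i] = values[before[-1]]
        else:
            a, b = before[-1], after[0]
            weight = (i - a) / (b - a)
            filled[i] = values[a] + weight * (values[b] - values[a])
    return filled


def speeds(xs, ys, fps):
    """Speed (px/s) between successive frames from Euclidean distances."""
    return [
        math.hypot(xs[i + 1] - xs[i], ys[i + 1] - ys[i]) * fps
        for i in range(len(xs) - 1)
    ]


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b-a and b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("zero-length segment")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


# Hypothetical trace: frame 1 is a low-likelihood outlier.
x = interpolate_low_confidence([0.0, 99.0, 2.0, 3.0], [0.9, 0.1, 0.9, 0.9])
y = [0.0, 0.0, 0.0, 0.0]
print(x, speeds(x, y, fps=10), joint_angle((1, 0), (0, 0), (0, 1)))
```

For real datasets a vectorized version with NumPy or pandas would be the more usual choice; the list-based form is used here only to keep the logic visible.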

Validation: Essential for Scientific Rigor

A core tenet of the thesis is that robust project management requires rigorous validation.

Table 2: Key Validation Experiments & Metrics

| Validation Type | Protocol | Key Quantitative Metric | Acceptance Threshold |

|---|---|---|---|

| Train-Test Error | Compare model error on training vs. held-out test frames. | Test Error (px) | Test error < training error + tolerance. Indicates no overfitting. |

| Inter-Observer Reliability | Have multiple human labelers annotate the same frames. | Pearson's r / ICC | r or ICC > 0.99 for high reliability. |

| Marker-Based Comparison | Compare DLC estimates to traditional markers (e.g., reflective dots). | Mean Absolute Error (MAE) | MAE < 5px (or relevant real-world unit, e.g., 2mm). |

| Downstream Analysis | Compare a known experimental effect using DLC vs. manual scoring. | Statistical Power (Effect size, p-value) | DLC should detect the effect with equal or greater statistical power. |
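
Two of the metrics in Table 2, mean absolute error and Pearson's r, can be computed as in the sketch below. The coordinate values and the `mm_per_px` calibration factor are hypothetical placeholders; a real factor would come from camera calibration.

```python
import math


def mean_absolute_error(a, b):
    """Mean absolute difference between two equally long sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pearson_r(a, b):
    """Pearson correlation coefficient between two sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical x-coordinates (px) of one keypoint from two labelers.
labeler_a = [10.0, 20.0, 30.0, 40.0]
labeler_b = [11.0, 19.0, 31.0, 39.0]

mae_px = mean_absolute_error(labeler_a, labeler_b)
mm_per_px = 0.4  # assumed calibration factor, for illustration only
mae_mm = mae_px * mm_per_px
r = pearson_r(labeler_a, labeler_b)
print(f"MAE = {mae_px:.2f} px ({mae_mm:.2f} mm), r = {r:.4f}")
```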

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Tools for a DLC Project

| Item / Solution | Function / Purpose |

|---|---|

| High-Speed Camera | Captures motion with sufficient temporal resolution (e.g., 50-1000 fps) to avoid motion blur. |

| Consistent Lighting Setup | Ensures uniform illumination, minimizing shadows and contrast changes that degrade model performance. |

| Calibration Object (Checkerboard) | For camera calibration; corrects lens distortion and enables conversion from pixels to real-world units (mm/cm). |

| DLC-Compatible GPU (NVIDIA) | Accelerates model training and inference. An RTX 3070 or better is recommended for efficient workflow. |

| Data Curation Software (DLC GUI, FrameExtractor) | Tools for extracting diverse training frames and manually labeling body parts. |

| Post-Processing Suite (NumPy, SciPy, pandas) | Libraries for smoothing, filtering, and analyzing pose estimation data in Python. |

| Behavioral Annotation Software (BORIS, SimBA) | Enables labeling of behavioral events for training supervised classifiers on top of DLC output. |

The core concept of DeepLabCut—transferring computer vision to behavioral neuroscience and pharmacology—demands meticulous project management. From network selection and training to rigorous validation and kinematic analysis, each step must be documented and optimized. For drug development professionals, this pipeline offers an objective, high-throughput method to quantify behavioral phenotypes, locomotor effects, and drug responses with unprecedented detail, transforming subjective observations into quantifiable, statistically robust data.

This whitepaper explores three pivotal application domains for markerless pose estimation via DeepLabCut (DLC), contextualized within a broader research thesis on scalable, reproducible DLC project management. Effective management of model training, dataset versioning, and inference pipeline orchestration is critical for deriving quantitative, translational insights in these fields.

Neuroscience: Circuit Dynamics and Behavior

DLC enables high-throughput, precise quantification of naturalistic and evoked behaviors, linking neural activity (e.g., from calcium imaging or electrophysiology) to kinematic variables.

Key Quantitative Insights

Table 1: DLC-Driven Behavioral Metrics in Rodent Models

| Behavioral Paradigm | Key DLC-Extracted Metric | Typical Baseline Value (Mouse) | Neural Correlate | Impact of DLC Automation |

|---|---|---|---|---|

| Open Field Test | Locomotion Speed (cm/s) | 5-10 cm/s | Striatal DA release | Throughput increased 10x vs. manual scoring |

| Social Interaction | Nose-to-Nose Distance (mm) | <20 mm for interaction | Prefrontal cortex BLA activity | Inter-observer reliability >0.95 (Cohen's Kappa) |

| Fear Conditioning | Freezing Bout Duration (s) | 10-30 s bouts post-tone | Amygdala → PAG pathway | Enables sub-second bout detection, >99% accuracy |

| Rotarod | Body Center Sway (pixels/frame) | 2-5 px/frame at mid-speed | Cerebellar Purkinje cell spiking | Allows continuous performance gradient vs. binary fall latency |

Experimental Protocol: Integrating DLC with In Vivo Electrophysiology

Aim: To correlate striatal neuron spiking with forelimb kinematics during a skilled reaching task.

Materials:

- Animal: Adult C57BL/6J mouse, implanted with a chronic driveable microelectrode array targeting the dorsolateral striatum.

- Behavioral Setup: Plexiglass chamber with a narrow slit for reaching, high-speed camera (250 fps), pellet dispenser.

- Software: DLC (for pose estimation), spike sorting software (e.g., Kilosort), custom MATLAB/Python scripts for synchronization.

Methodology:

- Task Training: Habituate mouse to chamber, then shape to reach for food pellets. Train until success rate >60% for 3 consecutive days.

- DLC Model Creation: a. Labeling: Extract 500 frames from multiple sessions/animals. Label keypoints: paw_dorsum, paw_center, digits, wrist, elbow, shoulder. b. Training: Use ResNet-50 backbone; train for 750k iterations. Evaluate on a held-out video; ensure test error <5 pixels (or ~2mm).

- Synchronized Data Acquisition: a. Send a TTL pulse from the neural acquisition system to an LED in the camera view at session start. b. Record behavior (250 fps video) and neural data (30 kHz) simultaneously for 20 trials/session.

- Kinematic Feature Extraction: a. Use DLC to infer keypoints on all videos. b. Calculate: reach velocity (paw_dorsum), trajectory smoothness (jerk), and grip aperture (distance between digits).

- Neural Correlation Analysis: a. Align neural spikes to reach onset. b. Use generalized linear models (GLMs) to predict firing rate from kinematic features (e.g., velocity, position).
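
The synchronization and alignment steps can be sketched as follows, assuming a single sync pulse at session start, the 250 fps and 30 kHz rates from the protocol, and no clock drift between the camera and the acquisition system. The function names and sample numbers are hypothetical.

```python
def frame_to_sample(frame_idx, led_frame, ttl_sample, fps=250.0, fs=30000.0):
    """Map a video frame index to the nearest neural sample index.

    led_frame:  frame in which the sync LED first lights up
    ttl_sample: neural sample at which the TTL pulse was recorded
    """
    seconds_since_sync = (frame_idx - led_frame) / fps
    return ttl_sample + round(seconds_since_sync * fs)


def spikes_around_event(spike_samples, event_sample, window_s=(-0.5, 1.0), fs=30000.0):
    """Spike times in seconds relative to the event, kept if inside the window."""
    start, stop = window_s
    relative = [(s - event_sample) / fs for s in spike_samples]
    return [t for t in relative if start <= t <= stop]


# Hypothetical session: LED visible at frame 10, TTL logged at sample 3000,
# reach onset detected by DLC at frame 260 (one second after the sync).
onset_sample = frame_to_sample(260, led_frame=10, ttl_sample=3000)
aligned = spikes_around_event([30000, 33300, 70000], onset_sample)
print(onset_sample, aligned)
```

For long sessions, a second pulse at session end would allow a drift correction; that refinement is not shown here.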

Diagram 1: Workflow for Neural & Kinematic Data Integration.

Pharmacology: High-Throughput Phenotypic Screening

DLC facilitates objective, granular measurement of drug effects on behavior, moving beyond categorical scores to continuous, multivariate phenotypes.

Key Quantitative Insights

Table 2: Pharmacological Effects Quantified by DLC in Preclinical Models

| Drug Class | Model Organism | Behavioral Assay | Primary DLC Metric (Change from Vehicle) | Typical Effect Size (Cohen's d) |

|---|---|---|---|---|

| SSRI (e.g., Fluoxetine) | Mouse | Tail Suspension Test | Immobility posture variance | d = 1.2 (↓ variance) |

| Psychostimulant (e.g., Amphetamine) | Rat | Open Field | Spatial entropy / path complexity | d = 2.0 (↑ complexity) |

| Analgesic (e.g., Morphine) | Mouse | Von Frey (static) & Gait | Weight-bearing asymmetry & gait duty cycle | d = 1.8 (↓ asymmetry) |

| Anxiolytic (e.g., Diazepam) | Zebrafish | Novel Tank Dive | Time in top zone & descent angle | d = 1.5 (↑ top time) |

Experimental Protocol: Screening for Motor Side Effects

Aim: To quantitatively assess extrapyramidal side effects (EPS) of novel antipsychotic candidates using gait analysis.

Materials:

- Animals: Groups of n=12 male Sprague-Dawley rats per drug condition.

- Drugs: Test compound, haloperidol (positive control), vehicle.

- Setup: Enclosed corridor with transparent walls, floor-mounted high-speed camera (500 fps), mirror at 45° for side view.

- Software: DLC, DeepGraphPipe for gait cycle analysis.

Methodology:

- DLC Model Refinement: a. Use a pre-trained model on rodent gait. Fine-tune with 200 frames from the specific setup, labeling: snout, left/right hind/fore paw, tail_base, iliac_crest.

- Dosing & Recording: a. Administer drug (s.c. or p.o.) 60 min pre-test. Place rat in corridor, record 3 uninterrupted gait cycles (~10s) at 500 fps. Repeat for all animals.

- Gait Parameter Extraction: a. Infer keypoints via DLC. Use algorithms to identify stance/swing phases for each limb. b. Calculate: stride length, swing speed, base of support (BOS), and inter-limb coordination (phase lags).

- Statistical Analysis: a. Perform one-way ANOVA across groups (vehicle, haloperidol, test compound) for each gait parameter. b. Use principal component analysis (PCA) on all kinematic features to derive a composite "EPS score."
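
One simple way to identify stance and swing phases is to threshold paw speed. The sketch below assumes that approach, which the protocol does not specify; the threshold, function names, and sample trace are hypothetical.

```python
def stance_mask(paw_speed, threshold):
    """True for frames where the paw is treated as in stance (speed below threshold)."""
    return [s < threshold for s in paw_speed]


def duty_cycle(mask):
    """Fraction of frames spent in stance."""
    return sum(mask) / len(mask)


def stance_onsets(mask):
    """Frame indices at which a swing-to-stance transition occurs."""
    return [i for i in range(1, len(mask)) if mask[i] and not mask[i - 1]]


def stride_lengths(paw_x, onsets):
    """Distance along the corridor between successive stance onsets."""
    return [abs(paw_x[b] - paw_x[a]) for a, b in zip(onsets, onsets[1:])]


# Hypothetical per-frame paw speed and corridor position (arbitrary units).
speed = [0, 0, 5, 5, 0, 0, 5, 5, 0, 0]
paw_x = [0, 0, 10, 20, 20, 20, 30, 40, 40, 40]

mask = stance_mask(speed, threshold=1)
onsets = stance_onsets(mask)
print(duty_cycle(mask), onsets, stride_lengths(paw_x, onsets))
```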

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Reagents for DLC-Enhanced Pharmacology Studies

| Item | Function/Description | Example Product/Catalog |

|---|---|---|

| Fluorescent Fur Markers | Non-invasive, high-contrast labeling for multi-animal tracking. | BioGems FluoroMark NIR Dyes |

| Calibration Grid | For spatial calibration (px to cm) and correcting lens distortion. | Thorlabs 3D Camera Calibration Target |

| Synchronization Hardware | Generates TTL pulses to sync video with other data streams (EEG, force plate). | National Instruments USB-6008 DAQ |

| EthoVision Integration Module | Allows import of DLC coordinates for advanced analysis in established platforms. | Noldus EthoVision XT DLC Bridge |

| High-Performance GPU Workstation | Local training of large DLC models (≥ ResNet-101) on sensitive data. | NVIDIA RTX A6000, 48GB VRAM |

Preclinical Models: Translational Validation

DLC provides objective, continuous biomarkers of disease progression and treatment efficacy in neurological and psychiatric disorder models.

Key Quantitative Insights

Table 4: DLC Biomarkers in Neurodegenerative & Neuropsychiatric Models

| Disease Model | Genetic/Lesion | Traditional Readout | DLC-Derived Digital Biomarker | Correlation with Histopathology (r) |

|---|---|---|---|---|

| Parkinson's (PD) | 6-OHDA striatal lesion | Apomorphine-induced rotations | Gait symmetry index & stride length variability | r = -0.89 with striatal TH+ neurons |

| Huntington's (HD) | Q175 knock-in mouse | Latency to fall (rotarod) | Paw clasping probability during grooming | r = -0.92 with striatal volume (MRI) |

| Autism Spectrum (ASD) | Shank3 knockout mouse | Three-chamber sociability | Ultrasonic vocalization (USV) rate during proximity | r = 0.85 with prefrontal synapse count |

| ALS | SOD1(G93A) mouse | Survival, weight loss | Hindlimb splay angle during tail suspension | r = 0.94 with motor neuron loss |

Experimental Protocol: Longitudinal Assessment in a PD Model

Aim: To track progressive motor deficits and levodopa response in the 6-OHDA mouse model.

Materials:

- Animals: C57BL/6 mice, unilateral 6-OHDA injection into medial forebrain bundle.

- Drug: L-DOPA/benserazide (25/12.5 mg/kg, i.p.).

- Setup: Home-cage-like arena with clear walls, ceiling-mounted camera (100 fps), soft flooring.

- Software: DLC, SLEAP (for multi-animal tracking if co-housed), custom R scripts for longitudinal analysis.

Methodology:

- Baseline & Post-Lesion Recording: a. Record 30-minute exploratory behavior pre-surgery (baseline) and at weekly intervals post-lesion for 6 weeks.

- Acute Drug Challenge (Week 6): a. Record pre-injection behavior (30 min), administer L-DOPA, record post-injection behavior (60 min).

- Multi-Animal DLC Analysis: a. Train a DLC model with identity labels (Mouse1nose, Mouse2nose, etc.) if animals are co-housed. b. Extract keypoints: snout, left/right_fore/hind_paw, tail_base.

- Digital Biomarker Calculation: a. Laterality Index: (Contralateral - Ipsilateral paw use)/(Total paw use) during rearing. b. Bradykinesia Score: Median movement speed of forepaws during ambulation. c. Dyskinesia Score: Quantify abnormal, repetitive limb movements post-L-DOPA using frequency analysis of paw trajectories.

- Longitudinal Modeling: a. Use linear mixed-effects models to analyze biomarker progression over weeks, with animal ID as a random effect.
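
The laterality index and bradykinesia score defined above reduce to short calculations. This sketch assumes paw-use events have already been counted and forepaw speeds already extracted; the function names and numbers are hypothetical.

```python
import statistics


def laterality_index(contralateral, ipsilateral):
    """(Contralateral - Ipsilateral) / Total paw use; None if no paw use was seen."""
    total = contralateral + ipsilateral
    if total == 0:
        return None
    return (contralateral - ipsilateral) / total


def bradykinesia_score(forepaw_speeds):
    """Median forepaw movement speed during ambulation."""
    return statistics.median(forepaw_speeds)


# Hypothetical counts of wall contacts during rearing and speeds in cm/s.
li = laterality_index(contralateral=5, ipsilateral=15)
brady = bradykinesia_score([2.0, 4.0, 10.0])
print(li, brady)
```

An index of -1 indicates exclusive use of the ipsilateral paw, 0 indicates symmetric use, and +1 indicates exclusive use of the contralateral paw.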

Diagram 2: Preclinical PD Model Assessment Pipeline.

The integration of DeepLabCut into neuroscience, pharmacology, and preclinical model validation generates high-dimensional, quantitative behavioral data that demands rigorous project management. The broader thesis on DLC project creation must address critical pillars: 1) Version Control for training datasets and model configurations, 2) Automated Pipelines for scalable inference and feature extraction, and 3) Standardized Metadata to ensure reproducibility across labs and translational stages. Mastering this management framework is essential for transforming raw pose tracks into robust, actionable biological insights.

This guide provides a comprehensive technical framework for establishing a reproducible computational environment essential for DeepLabCut (DLC) project creation and management research. Within the broader thesis context, a robust and standardized setup is the foundational pillar for ensuring the validity, reproducibility, and scalability of behavioral analysis experiments in neuroscience and drug development. This document details the system prerequisites, software installation protocols, and environment configuration required for DLC, a premier tool for markerless pose estimation.

System Requirements & Compatibility

Successful deployment of DeepLabCut requires consideration of hardware and base software compatibility. The following tables summarize the minimum and recommended specifications.

Table 1: Minimum System Requirements for DLC

| Component | Specification | Rationale |

|---|---|---|

| Operating System | Ubuntu 18.04+, Windows 10/11, or macOS 10.14+ | Core compatibility with required libraries and drivers. |

| CPU | 64-bit processor (Intel i5 or AMD equivalent) | Handles data management and preprocessing tasks. |

| RAM | 8 GB | Minimum for managing training datasets and models. |

| Storage | 10 GB free space | For OS, software, and initial project files. |

| GPU | (Optional) NVIDIA GPU with Compute Capability ≥ 3.5 | Enables GPU acceleration for training and inference. |

Table 2: Recommended System Requirements for Optimal Performance

| Component | Specification | Rationale |

|---|---|---|

| Operating System | Ubuntu 20.04 LTS or Windows 11 | Best-supported environments with long-term stability. |

| CPU | Intel i7/AMD Ryzen 7 or higher (≥8 cores) | Faster data augmentation and video processing. |

| RAM | 32 GB or higher | Essential for large batch sizes and high-resolution video. |

| Storage | SSD with ≥ 50 GB free space | High-speed I/O for video reading and checkpoint saving. |

| GPU | NVIDIA GPU with 8+ GB VRAM (e.g., RTX 3070/3080, A-series) | Critical for reducing training time from days to hours. CUDA Compute Capability ≥ 7.5. |

Table 3: Software Dependency Matrix

| Software | Recommended Version | Purpose | Mandatory |

|---|---|---|---|

| Python | 3.8, 3.9 | Core programming language. | Yes |

| CUDA (GPU users) | 11.2, 11.8 | NVIDIA parallel computing platform. | For GPU |

| cuDNN (GPU users) | 8.1, 8.9 | GPU-accelerated library for deep neural networks. | For GPU |

| FFmpeg | Latest | Video handling and processing. | Yes |

| Graphviz | Latest | For visualizing model architectures. | Optional |

Experimental Protocol: Environment Setup

This protocol details the step-by-step methodology for creating an isolated, functional DLC environment, a critical experiment in any computational thesis research pipeline.

Protocol 3.1: Base Installation of Miniconda and Python

Objective: To install the Miniconda package manager, which facilitates the creation of isolated Python environments.

Materials:

- A computer meeting the specifications in Table 1 or 2.

- Stable internet connection.

Methodology:

- Download: Navigate to the official Miniconda website. Download the installer for your operating system and architecture (64-bit). The recommended version uses Python 3.9.

- Execute Installer:

  - Windows: Run the `.exe` installer. Select "Install for: Just Me" and check "Add Miniconda3 to my PATH environment variable."

  - macOS/Linux: Open a terminal. Run `bash Miniconda3-latest-MacOSX-x86_64.sh` (or the Linux equivalent). Follow prompts, agreeing to the license and allowing initialization.

- Verification: Open a new terminal (Anaconda Prompt on Windows) and execute `conda --version` and `python --version`. Successful installation returns version numbers for both.

Protocol 3.2: Creation and Activation of the DLC Conda Environment

Objective: To construct a dedicated Conda environment with a specific Python version to prevent dependency conflicts.

Methodology:

- Create a new environment named `dlc` (or similar) with Python 3.9: `conda create -n dlc python=3.9`

- Activate the new environment: `conda activate dlc`

- The terminal prompt should change to indicate that `(dlc)` is active.

Protocol 3.3: Installation of DeepLabCut and Core Dependencies

Objective: To install the DeepLabCut package and its essential dependencies within the isolated environment.

Methodology:

- Ensure the `dlc` environment is active.

- Update core packages (for example, `pip install --upgrade pip`).

- Install DeepLabCut from PyPI. The standard version is installed via `pip install deeplabcut`.

- For the latest alpha/beta releases with new features, researchers may use `pip install deeplabcut --pre`.

- Install system utilities such as FFmpeg (for example, `conda install -c conda-forge ffmpeg`).

Protocol 3.4 (For GPU Users): CUDA and cuDNN Configuration

Objective: To configure the environment for GPU-accelerated deep learning, drastically reducing model training time.

Methodology:

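- Verify GPU: Ensure an NVIDIA GPU is installed. Check Compute Capability compatibility.

- Install CUDA/cuDNN via Conda (Recommended): This method avoids system-wide installs. Within the `dlc` environment, install versions matching Table 3 (for example, `conda install -c conda-forge cudatoolkit=11.2 cudnn=8.1`).

- Configure Environment Variables (Linux/macOS): Ensure the system uses the Conda-installed libraries by adding the environment's library directory to the library search path in your `~/.bashrc` or `~/.zshrc`.

- Verification: In a Python shell within the `dlc` environment, list the GPUs visible to the deep learning backend. A non-empty list confirms GPU recognition.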
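
The GPU verification step can be sketched as below, assuming the TensorFlow backend. The wrapper function `visible_gpus` is a hypothetical convenience; the underlying call is TensorFlow's `tf.config.list_physical_devices`.

```python
def visible_gpus():
    """Return the names of GPUs visible to TensorFlow.

    Returns an empty list when no GPU is visible or TensorFlow is not installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = visible_gpus()
print(gpus if gpus else "No GPU visible to TensorFlow")
```

An empty result on a machine with an NVIDIA GPU usually points to a driver, CUDA, or cuDNN version mismatch rather than a hardware problem.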

Verification and Initialization Experiment

Objective: To validate the installation and perform the initial steps of a DLC project as per the thesis research workflow.

Protocol:

- Activate the `dlc` environment.

- Launch Python or Jupyter Notebook.

- Execute test imports of DeepLabCut and its deep learning backend.

- Create a Test Project (Conceptual): The core function for initiating thesis research is `deeplabcut.create_new_project`.
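
A minimal sketch of the import check and project creation follows. The helper names, project name, and experimenter name are hypothetical; `deeplabcut.create_new_project` is the library call, and it is wrapped in a function here so that the import check can run even where DeepLabCut is not installed.

```python
import importlib.util


def available(modules):
    """Map each top-level module name to True if it can be found in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


def create_test_project(working_directory, video_paths):
    """Create a DLC project and return the path to its config.yaml."""
    import deeplabcut  # imported lazily so the check above works without DLC

    return deeplabcut.create_new_project(
        "TestProject",   # hypothetical project name
        "ResearcherX",   # hypothetical experimenter name
        video_paths,     # list of paths to video files
        working_directory=working_directory,
        copy_videos=True,
    )


status = available(["deeplabcut", "tensorflow", "json"])
print(status)
```

If `status["deeplabcut"]` is `True`, calling `create_test_project` with a working directory and a list of video paths should create the project folder and return the location of `config.yaml`.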

Visualizations

Diagram: DLC Environment Setup Workflow

Diagram: Thesis Research Context and Phases

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Reagents for DLC Research

| Item/Software | Function in Experiment | Specification/Notes |

|---|---|---|

| Conda Environment (`dlc`) | Isolated chemical vessel. Prevents dependency "reagent" conflicts between projects. | Must be created with Python 3.8 or 3.9. |

| DeepLabCut (PyPI Package) | Primary assay kit. Provides all core functions for pose estimation. | Install via pip. Track version for reproducibility. |

| TensorFlow / PyTorch Backend | Engine for neural network operations. The "reactor" for model training. | GPU version requires CUDA/cuDNN. DLC uses TF by default. |

| FFmpeg | Video processing tool. Handles "sample" (video) decoding, cropping, and format conversion. | Install via Conda-Forge. Essential for data ingestion. |

| Jupyter Notebook / Lab | Electronic lab notebook. Enables interactive, documented analysis and visualization. | Install in dlc env: pip install jupyter. |

| NVIDIA GPU Drivers & CUDA Toolkit | Catalytic accelerator. Drastically reduces "reaction" (training) time via parallel processing. | Mandatory for high-throughput research. Use Conda install. |

| Labeling Tool (DLC GUI) | Manual annotation instrument. Used for creating ground-truth training data. | Launched via deeplabcut.label_frames(). |

| Video Recording System | Sample acquisition apparatus. Source of raw behavioral data. | Should produce well-lit, high-resolution, stable video. |

Within the broader thesis on DeepLabCut (DLC) project creation and management, a foundational understanding of the core directory structure is paramount. This technical guide dissects the anatomy of a DLC project, focusing on the three pivotal components: the config.yaml file, the videos directory, and the labeled-data folder. For researchers, scientists, and drug development professionals, mastering these elements is critical for ensuring reproducible, scalable, and robust markerless pose estimation experiments, which are increasingly vital in preclinical behavioral phenotyping and translational research.

The config.yaml File: The Project Blueprint

The config.yaml (YAML Ain't Markup Language) file is the central configuration hub that defines all project parameters. It is generated during project creation and must be edited prior to initiating workflows.

Core Configuration Parameters

Below is a summary of the essential quantitative and string parameters that must be defined.

Table 1: Mandatory Configuration Parameters in config.yaml

| Parameter | Data Type | Default/Example | Function & Impact |

|---|---|---|---|

| `Task` | String | 'Reaching' | Project name; influences folder naming. |

| `scorer` | String | 'ResearcherX' | Human labeler/network ID for tracking. |

| `date` | String | '2024-01-15' | Date of project creation. |

| `bodyparts` | List | ['paw', 'finger1', 'finger2'] | Ordered list of body parts to track. |

| `skeleton` | List of Lists | [['paw','finger1'], ['paw','finger2']] | Defines connections for visualization. |

| `NumFrames` | Integer | 20 | Number of frames to extract/label from all videos. |

| `iteration` | Integer | 0 | Training iteration index (increments automatically). |

| `TrainingFraction` | List | [0.95] | Fraction of data for training set; remainder is test. |

Editing Protocol

- Locate the File: Navigate to the project directory (e.g., `MyReachingProject-2024-01-15`).

- Open with a Text Editor: Use a plain text editor (e.g., VS Code, Notepad++). Avoid word processors.

- Edit Key Fields: Modify `bodyparts`, `skeleton`, and `NumFrames` to match experimental design.

- Save the File: Ensure the YAML structure (indentation, colons) is preserved.
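
After editing, the configuration can be sanity-checked before any workflow is started. The sketch below assumes the file has already been parsed into a dictionary (for example with a YAML library) and uses the parameter names from Table 1; `validate_config` is a hypothetical helper, not a DLC function.

```python
def validate_config(cfg):
    """Return a list of problems found in a parsed config.yaml dictionary."""
    problems = []
    bodyparts = cfg.get("bodyparts", [])
    if not bodyparts:
        problems.append("bodyparts is empty")
    if len(set(bodyparts)) != len(bodyparts):
        problems.append("bodyparts contains duplicates")
    # Every skeleton link must connect body parts that are actually tracked.
    for link in cfg.get("skeleton", []):
        for name in link:
            if name not in bodyparts:
                problems.append(f"skeleton refers to unknown bodypart {name!r}")
    for fraction in cfg.get("TrainingFraction", []):
        if not 0 < fraction < 1:
            problems.append(f"TrainingFraction {fraction} is not between 0 and 1")
    if cfg.get("NumFrames", 0) <= 0:
        problems.append("NumFrames must be a positive integer")
    return problems


# Example values taken from Table 1.
config = {
    "Task": "Reaching",
    "scorer": "ResearcherX",
    "bodyparts": ["paw", "finger1", "finger2"],
    "skeleton": [["paw", "finger1"], ["paw", "finger2"]],
    "NumFrames": 20,
    "TrainingFraction": [0.95],
}
print(validate_config(config) or "config looks consistent")
```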

The videos Directory: Raw Input Repository

This directory contains the original video files for analysis. Proper organization is crucial for batch processing.

Video Specifications & Preparation Protocol

Experimental Protocol: Video Acquisition & Pre-processing

- Format: Use widely compatible formats (e.g., `.mp4`, `.avi`, `.mov`). DeepLabCut typically expects videos with a constant frame rate.

- Resolution & Frame Rate: Record at the highest resolution and frame rate feasible for your hardware. Common ranges are 640x480 to 1920x1080 pixels at 30-100 fps. Higher values increase spatial/temporal precision but demand more computational resources.

- Placement: Copy or symlink all videos for a project into the `videos` folder. DLC will reference paths relative to this directory.

- Pre-processing (Optional): For large datasets or standardized analysis, videos may be cropped, concatenated, or deinterlaced using tools like FFmpeg before being placed in the directory.
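
The optional pre-processing step can be scripted. The sketch below only builds an FFmpeg command that crops a video and, optionally, resamples it to a fixed output frame rate; the helper name and file names are hypothetical, and the command is returned rather than executed.

```python
def ffmpeg_crop_command(src, dst, width, height, x, y, fps=None):
    """Build an FFmpeg command that crops a video to width x height at offset (x, y).

    If fps is given, the output is written at that constant frame rate.
    """
    cmd = ["ffmpeg", "-i", str(src), "-vf", f"crop={width}:{height}:{x}:{y}"]
    if fps is not None:
        cmd += ["-r", str(fps)]
    cmd.append(str(dst))
    return cmd


# Hypothetical file names; crop a 640x480 region starting at pixel (100, 50).
command = ffmpeg_crop_command("raw_session.mp4", "videos/session_cropped.mp4",
                              640, 480, 100, 50, fps=30)
print(" ".join(command))
```

To run the command, pass the list to `subprocess.run(command, check=True)` on a system where FFmpeg is installed.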

The labeled-data Folder: Ground Truth Storage

This folder contains subdirectories for each video used in the training dataset. Each subdirectory holds the extracted frames and human-annotated data.

Structure and Contents

Each subfolder (e.g., labeled-data/video1name/) contains:

- `CollectedData_[Scorer].h5`: The key file storing the (x, y) coordinates of all labeled bodyparts across extracted frames.

- `CollectedData_[Scorer].csv`: A human-readable version of the `.h5` data.

- `img[number].png`: The individual frames extracted from the video for manual labeling.

- `machine_results_file.h5`: (Generated later) Contains network predictions on the labeled frames.
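
The human-readable CSV can be inspected without any DLC tooling. The sketch below assumes a layout with three header rows (scorer, bodyparts, coords) followed by one row per frame, with the image path in the first column and empty cells for unlabeled points; the exact layout may differ between DLC versions, and the sample content is hypothetical.

```python
import csv
import io


def read_labels(csv_text):
    """Parse label CSV text into {frame_path: {bodypart: {"x": ..., "y": ...}}}.

    Empty cells (unlabeled points) are stored as None.
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    bodyparts, coords = rows[1][1:], rows[2][1:]
    labels = {}
    for row in rows[3:]:
        points = {}
        for part, coord, value in zip(bodyparts, coords, row[1:]):
            points.setdefault(part, {})[coord] = float(value) if value else None
        labels[row[0]] = points
    return labels


# Hypothetical file content with two frames and two body parts.
sample = """scorer,ResearcherX,ResearcherX,ResearcherX,ResearcherX
bodyparts,paw,paw,finger1,finger1
coords,x,y,x,y
labeled-data/video1name/img0001.png,101.5,220.0,110.2,230.7
labeled-data/video1name/img0002.png,103.0,221.5,,
"""
labels = read_labels(sample)
print(labels["labeled-data/video1name/img0002.png"])
```

For the `.h5` file, pandas is the usual route, since the data are stored as a DataFrame.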

Labeling Protocol

- Frame Extraction: Run `deeplabcut.extract_frames(config_path)` to select frames from videos, either automatically or manually.

- Manual Annotation: Run `deeplabcut.label_frames(config_path)` to open the GUI. Click to place each bodypart on every extracted frame.

- Quality Check: Run `deeplabcut.check_labels(config_path)` to visualize annotations and correct any outliers.

- Create Training Dataset: Run `deeplabcut.create_training_dataset(config_path)` to generate the final, shuffled dataset for the network. This creates the `training-datasets` folder.
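
The four labeling-stage calls can be chained in a small driver. In this sketch the DLC module is passed in as an argument so the sequence can be dry-run with a stand-in object; `run_labeling_steps` and the placeholder config path are hypothetical, while the four function names are those listed in the protocol.

```python
from types import SimpleNamespace

LABELING_STEPS = (
    "extract_frames",
    "label_frames",
    "check_labels",
    "create_training_dataset",
)


def run_labeling_steps(config_path, dlc):
    """Call each labeling-stage function on a module-like object, in order."""
    for step in LABELING_STEPS:
        getattr(dlc, step)(config_path)
    return list(LABELING_STEPS)


# Dry run with a stand-in object that only records which calls were made.
calls = []
fake_dlc = SimpleNamespace(**{
    name: (lambda path, name=name: calls.append((name, path)))
    for name in LABELING_STEPS
})
run_labeling_steps("path/to/config.yaml", fake_dlc)
print(calls)
```

With DeepLabCut installed, the real module can be passed instead (`import deeplabcut; run_labeling_steps(config_path, deeplabcut)`). Note that frame labeling opens a GUI and requires manual work, so a fully unattended run is not realistic for that step.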

Integrated Workflow & Relationship

The interaction between these three components forms the backbone of the DLC project lifecycle.

Diagram Title: DLC Core Component Dataflow

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagents & Computational Tools for DLC Projects

| Item | Category | Function in DLC Context |

|---|---|---|

| High-Speed Camera | Hardware | Captures high-frame-rate video essential for resolving rapid movements (e.g., rodent gait, reaching). |

| Consistent Lighting System | Hardware | Ensures uniform illumination, reducing video noise and improving model generalization. |

| Animal Housing & Behavioral Arena | Wetware/Equipment | Standardized environment for generating reproducible behavioral video data. |

| FFmpeg | Software | Open-source tool for video format conversion, cropping, and pre-processing. |

| CUDA-enabled GPU (e.g., NVIDIA RTX) | Hardware | Accelerates deep network training and video analysis by orders of magnitude. |

| TensorFlow/PyTorch | Software | Backend deep learning frameworks on which DeepLabCut is built. |

| Jupyter Notebooks | Software | Interactive environment for running DLC pipelines and analyzing results. |

| Pandas & NumPy | Software | Python libraries used extensively by DLC for managing coordinate data and numerical operations. |

| Labeling GUI (DLC built-in) | Software | Interface for efficient, precise manual annotation of body parts on extracted frames. |

This guide provides a technical framework for a critical decision in the DeepLabCut (DLC) project pipeline: whether to train a pose estimation model from random initialization or to fine-tune a pre-trained model. This choice significantly impacts project timelines, computational resource expenditure, and final model performance, particularly in specialized biomedical and pharmacological research settings. The decision is contextualized within the broader research thesis on optimizing DLC project creation and management for robust, reproducible scientific outcomes.

Quantitative Comparison: Training from Scratch vs. Fine-tuning

The following table summarizes key quantitative findings from recent literature and benchmark studies relevant to markerless pose estimation in laboratory animals.

Table 1: Comparative Analysis of Training Approaches for Pose Estimation

| Metric | Training from Scratch | Leveraging Pre-trained Models |

|---|---|---|

| Typical Training Data Required | 1000s of labeled frames across diverse poses & animals. | 100-500 carefully selected labeled frames per new viewpoint/animal. |

| Time to Convergence (GPU hrs) | 50-150 hours (varies by network size). | 5-25 hours for fine-tuning. |

| Mean Pixel Error (MPE) on held-out test set | High initial error, converges to baseline (~5-10 px) with sufficient data. | Lower initial error, often achieves lower final MPE (~3-7 px) with less data. |

| Risk of Overfitting | High with limited or homogeneous training data. | Reduced, as model starts with general feature representations. |

| Generalization to Novel Conditions | Poor if training data is not exhaustive. | Generally better; pre-trained features are more robust to minor appearance changes. |

| Computational Cost (CO2e) | High (approx. 2-4x higher than fine-tuning). | Lower, due to reduced training time. |

| Suitability for Novel Species/Apparatus | Necessary if no related pre-trained model exists. | Highly efficient if a model trained on a related species (e.g., mouse→rat) exists. |

Experimental Protocols for Model Training

Protocol for Training a DeepLabCut Model from Scratch

Objective: To create a de novo pose estimation network for a novel experimental subject with no available pre-trained weights.

- Data Curation: Collect a large, diverse video dataset (N>5 animals). Extract frames to cover the full behavioral repertoire and variance in appearance.

- Labeling: Manually annotate a substantial training set (recommended: 500-1000 frames initially). Use multiple labelers and consensus labeling if possible.

- Configuration: In the DLC config file, set init_weights: 'scratch'. Choose a base architecture (e.g., ResNet-50, EfficientNet).

- Training: Execute deeplabcut.train_network(...) with a low initial learning rate (e.g., 0.001). Use early stopping based on validation loss.

- Evaluation: Use deeplabcut.evaluate_network(...) to compute test error and visualize predictions. Iteratively label more frames from "hard" examples identified by the network.
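The early-stopping rule mentioned in the Training step can be sketched independently of DeepLabCut. The helper below is a minimal illustration, assuming validation losses are recorded at regular intervals; the name should_stop and the patience and min_delta parameters are hypothetical and are not part of the DLC API.

```python
def should_stop(val_losses, patience=3, min_delta=0.0):
    """Return True when the most recent `patience` validation losses
    did not improve on the earlier best by more than `min_delta`."""
    if len(val_losses) <= patience:
        return False  # not enough history to judge
    best_before = min(val_losses[:-patience])
    recent_best = min(val_losses[-patience:])
    return recent_best > best_before - min_delta


# Usage: call after each validation pass and stop training when it returns True.
history = [1.0, 0.8, 0.7, 0.75, 0.74, 0.73]  # illustrative values only
print(should_stop(history))
```

A larger patience tolerates noisier loss curves at the cost of extra training time.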

Protocol for Fine-tuning a Pre-trained DeepLabCut Model

Objective: To adapt an existing, high-performing model to a new but related task (e.g., new laboratory strain, slightly different camera angle).

- Model Selection: Identify the most relevant pre-trained model from the DLC Model Zoo (e.g., DLC_DLC_resnet50_mouse_shoulder_Jul21 for rodent forelimb work).

- Data Curation & Labeling: Collect a smaller, targeted video dataset. Label a focused set of frames (200-500) that capture the difference from the pre-trained model's domain.

- Configuration: In the DLC config file, set init_weights: /path/to/pre-trained/model. Optionally "freeze" early layers (keep_trainable_layers: 0-10) to retain general features.

- Training: Execute training with a very low learning rate (e.g., 1e-5) to allow gentle adaptation. Monitor for catastrophic forgetting.

- Evaluation: Evaluate on the new test set. Compare performance to the base model's performance on its original task to ensure robustness is maintained.

Visualizing the Decision Workflow and Training Processes

Diagram 1: DLC Project Training Path Decision Tree

Diagram 2: High-Level Model Training & Transfer Workflow

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Research Toolkit for DeepLabCut Project Creation

| Item / Solution | Function / Purpose | Example/Note |

|---|---|---|

| High-Speed Camera | Captures fine-grained motion for accurate labeling and training. | Cameras with ≥ 100 fps; global shutter preferred (e.g., FLIR, Basler). |

| Consistent Lighting System | Minimizes appearance variance, a major confound for neural networks. | LED panels with diffusers for even, shadow-free illumination. |

| Animal Handling & Housing | Standardizes animal state (stress, circadian rhythm) affecting behavior. | IVC cages, standardized enrichment, handling protocols. |

| Video Annotation Software | Creates ground truth data for training and evaluation. | DeepLabCut's GUI, SLEAP, or custom labeling tools. |

| Computational Hardware (GPU) | Accelerates model training by orders of magnitude. | NVIDIA GPUs (RTX 4090, A100) with ≥ 12GB VRAM. |

| Pre-trained Model Zoo | Provides starting points for transfer learning, saving time and data. | DeepLabCut Model Zoo, Tierpsy, OpenMonkeyStudio. |

| Data Augmentation Pipeline | Artificially expands training data, improving model robustness. | Built into DLC: rotation, scaling, lighting jitter, motion blur. |

| Behavioral Arena & Apparatus | Standardized experimental environment for reproducible data collection. | Open-field boxes, rotarod, elevated plus maze with consistent dimensions. |

| Model Evaluation Suite | Quantifies model performance to guide iterative improvement. | Tools for calculating RMSE, p-cutoff analysis, loss plots. |

Step-by-Step Project Creation: From Video Data to Trained Model

This guide constitutes the foundational stage of a comprehensive research thesis on standardized, reproducible project creation and management using DeepLabCut (DLC). Effective behavioral analysis in neuroscience and drug development hinges on rigorous initial setup. Project initialization and configuration are critical, yet often overlooked, determinants of downstream analytical validity and inter-laboratory reproducibility. This whitepaper provides an in-depth technical protocol for establishing a robust DLC project framework, contextualized within best practices for scientific computation and data management.

Core Quantitative Metrics for Project Initialization

The initial phase involves decisions with quantitative implications for training efficiency and model accuracy. Based on current benchmarking studies (2023-2024), the following parameters are paramount.

Table 1: Critical Initial Configuration Parameters and Their Impact

| Parameter | Typical Range | Recommended Starting Value (for Novel Project) | Impact on Training & Inference | Justification |

|---|---|---|---|---|

| Number of Labeled Frames (Total) | 100 - 1000+ | 200 - 500 | Directly correlates with model robustness; diminishing returns after ~500-800 high-quality frames. | Balances labeling effort with performance; sufficient for initial network generalization. |

| Extraction Interval (for labeling) | 1 - 100 frames | 5 - 20 | Higher intervals increase frame diversity but may miss subtle postures. | Ensures coverage of diverse behavioral states while managing dataset size. |

| Training Iterations (max_iters) | 50,000 - 1,000,000+ | 200,000 - 500,000 | Prevents underfitting (too low) and overfitting (too high). | Default networks (ResNet-50) often converge in this range. |

| Number of Training/Test Set Splits | 1 - 10+ | 5 | Provides robust estimate of model performance variance. | Standard for cross-validation in machine learning; yields mean ± std. dev. for evaluation metrics. |

| Image Size (cropped in config) | Height x Width (pixels) | Network default (e.g., 400, 400) | Larger sizes retain detail but increase compute/memory cost. | Defaults are optimized for pretrained backbone networks. |

Experimental Protocol: Project Creation and Configuration

Methodology for Stage 1

Objective: To programmatically create a new DeepLabCut project and customize its configuration file (config.yaml) for a specific experimental paradigm in behavioral pharmacology.

Materials & Software:

- DeepLabCut version 2.3.8 or later (installed in a Conda environment).

- A collection of raw video files (.mp4, .avi) representing the behavior of interest.

- Python 3.7+ interpreter and terminal.

Procedure:

- Environment Activation and Video Inventory: Activate the Conda environment in which DeepLabCut is installed. Create a spreadsheet listing all video files, including metadata (e.g., subject ID, treatment group, date, frame rate). This is crucial for reproducible project management.

- Project Creation via API: Create the project from Python with deeplabcut.create_new_project, replacing placeholders with your project details.
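A minimal sketch of the project-creation call is given here. It assumes the videos sit in a single folder; the helper names collect_videos and create_project, the folder paths, and the experimenter name are placeholders. deeplabcut.create_new_project is the DLC call itself and returns the path to the new config.yaml.

```python
from pathlib import Path

VIDEO_SUFFIXES = {".mp4", ".avi"}


def collect_videos(video_dir):
    """Return sorted paths of all .mp4/.avi files directly inside video_dir."""
    return sorted(
        str(p) for p in Path(video_dir).iterdir()
        if p.suffix.lower() in VIDEO_SUFFIXES
    )


def create_project(video_dir, working_dir,
                   project="Pharmacology_OpenField",
                   experimenter="Experimenter"):
    """Create a DLC project from the videos in video_dir; returns the config path."""
    import deeplabcut  # imported lazily so this module loads without DLC installed

    return deeplabcut.create_new_project(
        project,
        experimenter,
        collect_videos(video_dir),
        working_directory=str(working_dir),
        copy_videos=True,  # copy rather than link, so the project is self-contained
    )


# Example (placeholder paths):
# config_path = create_project("/data/openfield/videos", "/data/dlc_projects")
```

Keeping the returned config path in a variable avoids retyping the date-stamped folder name in later steps.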

This generates a project directory named Pharmacology_OpenField-Experimenter-YYYY-MM-DD/.

- Locate and Customize the Configuration File: Navigate to the project directory. The primary configuration file is named config.yaml. Open it in a structured text editor (e.g., VS Code, Sublime Text). Do not use standard word processors.

- Critical Customizations (config.yaml):

  - bodyparts: Define an ordered list of anatomical keypoints. Order is critical and must be consistent.

  - skeleton: Define connections between bodyparts for visualization. Does not affect training.

  - project_path: Verify this points to the absolute path of your project folder.

  - video_sets: This dictionary is automatically populated. Verify paths are correct.

  - numframes2pick: Set the total number of frames to be initially extracted for labeling (e.g., 200).

  - date & scorer: These are auto-populated; do not edit manually.

- Configuration Validation: After editing, it is advisable to load the config in Python to check for integrity.
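One way to perform such an integrity check is sketched below. It assumes the configuration has already been parsed into a dictionary, for example with deeplabcut.auxiliaryfunctions.read_config(config_path) or yaml.safe_load. The function validate_config and the specific rules it applies are illustrative assumptions, not a DLC feature.

```python
def validate_config(cfg):
    """Return a list of human-readable problems found in a parsed config dict."""
    problems = []

    bodyparts = cfg.get("bodyparts") or []
    if not bodyparts:
        problems.append("bodyparts is empty")
    if len(set(bodyparts)) != len(bodyparts):
        problems.append("bodyparts contains duplicates")

    # Every skeleton link must refer to defined bodyparts.
    for link in cfg.get("skeleton") or []:
        for name in link:
            if name not in bodyparts:
                problems.append(f"skeleton references unknown bodypart: {name}")

    n_frames = cfg.get("numframes2pick")
    if not isinstance(n_frames, int) or n_frames <= 0:
        problems.append("numframes2pick must be a positive integer")

    if not cfg.get("video_sets"):
        problems.append("video_sets is empty")

    return problems


# Usage: an empty list means no problems were detected by these checks.
example = {
    "bodyparts": ["snout", "tailbase"],
    "skeleton": [["snout", "tailbase"]],
    "numframes2pick": 200,
    "video_sets": {"/data/v1.mp4": {"crop": "0, 640, 0, 480"}},
}
print(validate_config(example))
```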

Visualizing the Initialization Workflow

Diagram 1: DeepLabCut Project Initialization and Configuration Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Materials and Software for DLC Project Initialization

| Item | Category | Function & Rationale |

|---|---|---|

| DeepLabCut (v2.3.8+) | Core Software | Open-source toolbox for markerless pose estimation based on transfer learning with deep neural networks. |

| Anaconda/Miniconda | Environment Manager | Creates isolated Python environments to manage dependencies and ensure project reproducibility across systems. |

| High-Quality Video Data | Primary Input | Raw behavioral videos (min. 30 fps, consistent lighting, high contrast between animal and background). Data quality sets the ceiling on model performance. |

| Text Editor (VS Code/Sublime) | Configuration Tool | For editing YAML configuration files without introducing hidden formatting characters that cause parsing errors. |

| Metadata Spreadsheet | Documentation | Tracks video origin, experimental conditions (e.g., drug dose, time post-administration), and subject information. Essential for analysis grouping. |

| Project Directory Template | Organizational Schema | Pre-defined folder hierarchy (e.g., videos/, labeled-data/, training-datasets/, dlc-models/) enforced by DLC, ensuring consistent data organization. |

| Computational Resource (GPU) | Hardware | A CUDA-compatible NVIDIA GPU significantly accelerates neural network training, reducing time from days to hours. |

Within the broader thesis on DeepLabCut (DLC) project lifecycle optimization for preclinical research, Stage 2 is a critical computational bottleneck. This technical guide details methodologies for the efficient extraction, selection, and management of video frames, which directly impacts downstream pose estimation accuracy and model training efficiency in behavioral pharmacology and neurodegenerative disease research.

Efficient frame management sits between raw video acquisition (Stage 1) and network training (Stage 3). For drug development professionals, systematic sampling ensures that extracted frames statistically represent the full behavioral repertoire across treatment groups, control conditions, and temporal phases of drug response, minimizing annotation labor while maximizing model generalizability.

Core Quantitative Performance Metrics

Current state-of-the-art tools and strategies are evaluated against the following benchmarks, crucial for high-throughput analysis in industrial labs.

Table 1: Frame Extraction Tool Performance Comparison (2024)

| Tool / Library | Extraction Rate (fps) | CPU Load (%) | Memory Use per 1min 1080p (MB) | Supported Formats | Key Advantage |

|---|---|---|---|---|---|

| FFmpeg (hwaccel) | 980 | 15-30 | ~120 | .mp4, .avi, .mov | Hardware acceleration |

| OpenCV (cv2.VideoCapture) | 150 | 60-80 | ~450 | All major | Integration simplicity |

| DALI (NVIDIA) | 2200 | 10-25 | ~180 | .mp4, .h264 | GPU pipeline, optimal for DLC |

| PyAV | 750 | 40-60 | ~200 | All major | Pure Python, robust |

| Decord (Amazon) | 650 | 30-50 | ~150 | .mp4 | Designed for ML |

Table 2: Frame Selection Strategy Impact on DLC Model Performance

| Selection Strategy | % of Original Frames Used | Final Model RMSE (pixels) | Training Time (hrs) | Annotation Labor (hrs) |

|---|---|---|---|---|

| Uniform Random | 0.5% | 8.2 | 12.5 | 8.0 |

| K-means Clustering (on optical flow) | 0.5% | 6.7 | 11.8 | 8.0 |

| Adaptive (motion-based) | 0.8% | 5.9 | 14.2 | 12.8 |

| Full Video (baseline) | 100% | 5.8 | 48.0 | 160.0 |

| Time-window Stratified | 1.0% | 7.1 | 13.5 | 10.5 |

Experimental Protocols for Frame Sampling

Protocol 3.1: K-means Clustering for Postural Diversity Sampling

Objective: Select a maximally informative subset of frames representing the variance in animal posture.

- Pre-processing: Extract frames at 1 fps from all experimental videos using FFmpeg (ffmpeg -i input.mp4 -vf fps=1 frame_%04d.png).

- Feature Computation: Use a pre-trained CNN (e.g., ResNet-18, with final layer removed) to generate a 512-dimensional feature vector for each frame, capturing high-level visual features.

- Dimensionality Reduction: Apply PCA to reduce features to 50 dimensions, preserving >95% variance.

- Clustering: Perform K-means clustering on the reduced feature space. The number of clusters k is determined by the elbow method, typically targeting 0.5-1% of total frames.

- Frame Selection: From each cluster, randomly select n/k frames, where n is the desired total number of frames for labeling.
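The dimensionality reduction, clustering, and selection steps of Protocol 3.1 can be sketched with NumPy alone. This is a simplified stand-in for a scikit-learn or FAISS pipeline. It assumes the per-frame feature vectors have already been computed, and it takes k as an argument rather than deriving it with the elbow method. All function names are hypothetical.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project mean-centered features onto their top principal components."""
    centered = features - features.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm; returns a cluster label for every point."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members):  # leave an empty cluster's center unchanged
                centers[j] = members.mean(axis=0)
    return labels


def select_frames(features, n_frames, k, n_components=50, seed=0):
    """Pick roughly n_frames / k random frame indices from each cluster."""
    rng = np.random.default_rng(seed)
    reduced = pca_reduce(features, min(n_components, *features.shape))
    labels = kmeans(reduced, k, seed=seed)
    per_cluster = max(1, n_frames // k)
    chosen = []
    for j in range(k):
        members = np.flatnonzero(labels == j)
        if len(members) == 0:
            continue
        take = min(per_cluster, len(members))
        chosen.extend(rng.choice(members, size=take, replace=False).tolist())
    return sorted(chosen)
```

The pairwise distance array grows with frames × clusters × dimensions, so very large frame sets would need a batched or GPU implementation.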

Protocol 3.2: Adaptive, Motion-Triggered Extraction

Objective: Oversample periods of high activity for detailed kinematic analysis in motor studies.

- Motion Calculation: Use OpenCV to compute the absolute frame difference (sum of pixel differences) between consecutive frames at native video FPS.

- Thresholding: Apply a dynamic threshold (median absolute deviation) to identify high-motion intervals.

- Window Extraction: For each triggered high-motion event, extract frames at the native rate for a 500ms window before and after the peak.

- Background Sampling: Interleave low-motion frames at 0.1 fps to ensure static postures are represented.
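A compact NumPy sketch of Protocol 3.2 follows. It assumes the video has already been decoded into a grayscale array of shape (frames, height, width), for example with OpenCV. It also simplifies the protocol by opening a window around every above-threshold frame rather than around the peak of each event. Function names and the n_mads parameter are hypothetical.

```python
import numpy as np


def motion_signal(frames):
    """Sum of absolute pixel differences between consecutive frames."""
    diffs = np.abs(np.diff(frames.astype(np.float32), axis=0))
    return diffs.reshape(len(diffs), -1).sum(axis=1)


def high_motion_mask(signal, n_mads=3.0):
    """Flag entries exceeding median + n_mads * median absolute deviation."""
    median = np.median(signal)
    mad = np.median(np.abs(signal - median))
    return signal > median + n_mads * mad


def frames_to_extract(signal, fps, window_s=0.5, background_fps=0.1, n_mads=3.0):
    """Indices of frames to keep: windows around motion plus sparse background."""
    n_frames = len(signal) + 1
    half = int(round(window_s * fps))
    keep = np.zeros(n_frames, dtype=bool)
    for idx in np.flatnonzero(high_motion_mask(signal, n_mads)):
        frame = idx + 1  # signal[i] measures the change from frame i to i + 1
        keep[max(0, frame - half): frame + half + 1] = True
    step = max(1, int(round(fps / background_fps)))
    keep[::step] = True  # background sampling for static postures
    return np.flatnonzero(keep)
```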

Protocol 3.3: Stratified Sampling by Experimental Condition

Objective: Ensure balanced representation for multi-condition drug studies.

- Metadata Association: Log each video with metadata: Animal_ID, Treatment, Dose, Time_Post_Injection.

- Quota Assignment: Determine the target number of frames per condition (e.g., 200 frames per treatment group).

- Condition-Specific Extraction: Execute uniform or clustering-based sampling (Protocol 3.1) within each metadata-defined subgroup independently.

- Pooling: Aggregate selected frames into the final training set, ensuring no demographic or treatment bias.
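The quota-based sampling in Protocol 3.3 can be illustrated with the standard library. The sketch assumes each candidate frame is a dictionary carrying its metadata. The field names follow the protocol, while the function stratified_sample is hypothetical. It uses uniform sampling within each group; clustering-based selection could be substituted at that point.

```python
import random
from collections import defaultdict


def stratified_sample(frames, key, quota, seed=0):
    """Sample up to `quota` frames from each group defined by frames[i][key]."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for frame in frames:
        groups[frame[key]].append(frame)

    selected = []
    for condition in sorted(groups):  # fixed order keeps the result reproducible
        members = groups[condition]
        selected.extend(rng.sample(members, min(quota, len(members))))
    return selected


# Usage with illustrative metadata records:
candidates = [
    {"frame": f"v{v}_f{i}", "Treatment": treatment}
    for v, treatment in enumerate(["vehicle", "drug_low", "drug_high"])
    for i in range(10)
]
subset = stratified_sample(candidates, key="Treatment", quota=4)
print(len(subset))
```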

Visualization of Workflows

Title: DLC Stage 2 Frame Management Workflow

Title: K-means Frame Selection Pipeline

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Efficient Video Frame Management

| Item / Solution | Function in Protocol | Example Product / Library | Key Consideration for Drug Research |

|---|---|---|---|

| High-Speed Video Storage | Raw video hosting for batch processing | NAS (QNAP TS-1640), AWS S3 Glacier | Must comply with FDA 21 CFR Part 11 for data integrity. |

| Hardware Video Decoder | Accelerates frame extraction | NVIDIA NVDEC, Intel Quick Sync Video | Reduces pre-processing time in high-throughput behavioral screens. |

| Feature Extraction Model | Provides vector representations for clustering | PyTorch Torchvision ResNet-18 | Pre-trained on ImageNet; sufficient for posture feature distillation. |

| Clustering Library | Executes K-means or DBSCAN on frame features | scikit-learn, FAISS (for GPU) | FAISS enables clustering over millions of frames from large cohorts. |

| Metadata Database | Links video files to experimental conditions | SQLite, LabKey Server | Critical for stratified sampling by treatment group and dose. |

| Frame Curation GUI | Manual review and pruning of selected frames | DeepLabCut's Frame Extractor GUI, Custom Tkinter apps | Allows PI oversight to exclude artifact frames (e.g., animal not in view). |

| Version Control for Frames | Tracks selected frame sets across model iterations | DVC (Data Version Control), Git LFS | Ensures reproducibility of which frames were used to train a published model. |

Within the context of a DeepLabCut (DLC) project lifecycle, Stage 3—the labeling of experimental image or video frames—is a critical determinant of final model performance. This phase bridges the gap between raw data collection and neural network training. For researchers, scientists, and drug development professionals utilizing DLC for behavioral phenotyping or kinematic analysis in preclinical studies, a rigorous, reproducible labeling strategy is paramount. This guide details methodologies for manual labeling, orchestrating multi-annotator workflows, and optimizing use of the DLC labeling graphical user interface (GUI) to ensure high-quality training datasets.

Manual Labeling: Protocol and Precision

Manual labeling involves a single annotator identifying and marking keypoints across a curated set of training frames. The protocol demands consistency and attention to anatomical or procedural ground truth.

Experimental Protocol for Manual Labeling:

- Frame Extraction: Using the DLC function extract_frames, select a representative subset of video data (typically 100-1000 frames). Ensure coverage of all behavioral states, viewpoints, and lighting conditions present in the full dataset.

- Labeling Interface Initialization: Launch the DLC labeling GUI via label_frames. Load the configuration file and the extracted frames.

- Keypoint Identification: For each frame, systematically click on the precise pixel location of each defined body part. Adhere to a consistent order (e.g., nose, left ear, right ear, ... base of tail).

- Zoom & Pan: Use the mouse wheel and right-click drag to zoom and navigate for sub-pixel accuracy, especially for small or occluded keypoints.

- Saving: Save labels frequently. DLC creates a .csv file and a .h5 file containing the (x, y) coordinates of each labeled keypoint.

Multi-Annotator Workflows for Ground Truth Consensus

For high-stakes research, employing multiple annotators reduces individual bias and provides a measure of label reliability. The standard methodology involves computing the inter-annotator agreement.

Experimental Protocol for Multi-Annotator Labeling:

- Annotation Team Training: Standardize the labeling criteria among annotators using a written protocol with example images.

- Parallel Labeling: Have k annotators (where k ≥ 2) label the same set of n frames independently.

- Data Aggregation: Collect the k separate label files for the common frame set.

- Agreement Analysis: Calculate the consensus. A common metric is the Mean Inter-Annotator Distance (MIAD). For each keypoint j and frame i, compute the Euclidean distance between the coordinates provided by each pair of annotators, then average across all pairs and frames.

Quantitative Data on Inter-Annotator Agreement:

Table 1: Example Inter-Annotator Agreement Metrics (Synthetic Data)

| Keypoint | Mean Inter-Annotator Distance (pixels) | Standard Deviation (pixels) | Consensus Confidence Score |

|---|---|---|---|

| Snout | 2.1 | 0.8 | 0.98 |

| Left Forepaw | 5.7 | 2.3 | 0.85 |

| Tail Base | 3.4 | 1.5 | 0.94 |

| Average (All Keypoints) | 3.8 | 1.9 | 0.91 |

- Consolidation: Use the DLC function comparevideolabelings to visualize disagreements. The final training labels can be created by taking the median coordinate from all annotators for each keypoint, or by selecting the labels from the most reliable annotator as defined by the lowest average deviation from the group median.
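The agreement metric and the median-based consolidation described in this protocol can be computed directly with NumPy. The sketch assumes all annotators' labels have been stacked into one array of shape (annotators, frames, keypoints, 2), with NaN marking keypoints an annotator skipped. The function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def mean_inter_annotator_distance(labels):
    """Per-keypoint MIAD: Euclidean distance averaged over annotator pairs and frames.

    labels has shape (annotators, frames, keypoints, 2).
    """
    pair_dists = [
        np.linalg.norm(labels[a] - labels[b], axis=-1)  # (frames, keypoints)
        for a, b in combinations(range(len(labels)), 2)
    ]
    return np.nanmean(np.stack(pair_dists), axis=(0, 1))


def median_consensus(labels):
    """Consensus labels: per-coordinate median across annotators."""
    return np.nanmedian(labels, axis=0)


# Usage: two annotators, one frame, one keypoint.
labels = np.array([[[[0.0, 0.0]]], [[[3.0, 4.0]]]])
print(mean_inter_annotator_distance(labels))  # [5.]
print(median_consensus(labels))               # [[[1.5 2. ]]]
```

With only two annotators the median equals the mean; the median's robustness to a single outlying annotator only takes effect from three annotators upward.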

Multi-Annotator Consensus Workflow

Labeling GUI Tips for Efficiency and Accuracy

The DLC GUI is the primary tool for this stage. Mastery of its features drastically improves throughput and label quality.

Key GUI Functions and Shortcuts:

- Multi-frame Labeling: Use J and K to move to the next/previous frame while keeping the same keypoint selected. This enables rapid labeling of a single body part across consecutive frames.

- Keypoint Navigation: Use the number keys 1, 2, 3, etc., to jump to a specific keypoint label within the current frame.

- Label Correction: Right-click on a plotted keypoint to delete it. Middle-click to zoom to the full image.

- Display Toggles: Use F to toggle the display of keypoint labels and G to toggle the grid, reducing visual clutter.

- Efficiency Tip: For highly reproducible postures, label one keypoint completely across all frames before moving to the next, leveraging muscle memory.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for DeepLabCut Labeling and Validation

| Item | Function in Labeling Workflow |

|---|---|

| High-Resolution Camera | Captures source video with sufficient spatial resolution to distinguish keypoints of interest (e.g., individual toe joints). |

| Consistent Lighting System | Eliminates shadows and variance in appearance, ensuring consistent keypoint visibility across sessions. |

| Animal Coat Markers (Non-toxic) | Optional. Provides visual contrast on animals with uniform fur, easing identification of occluded limbs. |

| Dedicated GPU Workstation | Accelerates the subsequent DLC model training but also provides smooth GUI performance during frame extraction and label visualization. |

| Annotation Protocol Document | Critical for multi-annotator workflows. Defines the exact anatomical landmark for each keypoint with reference images. |

| Data Storage Solution (NAS/SSD) | High-speed storage is required for rapid loading of thousands of high-resolution frames during labeling. |

Signaling Pathway: From Raw Data to Trained Model

The labeling stage is a pivotal component in the overall signaling pathway that transforms experimental observation into a quantitative analytical model.

DLC Project Pipeline with Labeling Stage

Within the context of a DeepLabCut (DLC) project for behavioral analysis in preclinical drug development, Stage 4 is pivotal. It bridges the gap between labeled data and a deployable pose estimation model. This stage involves the systematic creation of a robust training dataset and the strategic configuration of a neural network backbone (e.g., ResNet, EfficientNet). The quality of this stage directly impacts the model's accuracy, generalizability, and utility for high-stakes applications like quantifying drug-induced behavioral phenotypes.

Curating and Augmenting the Training Dataset

The training dataset is constructed from the annotated frames generated in Stage 3. Its composition is critical for model performance.

Dataset Splitting Strategy

A standard split ensures unbiased evaluation. The following table summarizes a typical distribution:

Table 1: Standard Dataset Split for DeepLabCut Model Training

| Split Name | Percentage of Data | Primary Function |

|---|---|---|

| Training Set | 80-95% | Used to directly update the neural network's weights via backpropagation. |

| Test Set | 5-20% | Used for final, unbiased evaluation of the model's performance after all training is complete. Never used during training or validation. |

| Validation Set | (Often taken from Training) | Used during training to monitor for overfitting and to tune hyperparameters (e.g., learning rate schedules). |

Protocol: The split is typically performed randomly at the video level (not the frame level) to prevent data leakage. For a project with 10 annotated videos, 8 might be used for training/validation, and 2 held out as the exclusive test set. From the 8 training videos, 20% of the extracted frames are often automatically held out as a validation set during DLC's training process.
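A video-level split of the kind described in this protocol can be sketched as follows. The function split_by_video and the frame-to-video mapping are hypothetical; the point is that whole videos, not individual frames, are assigned to the test set.

```python
import random


def split_by_video(frame_to_video, test_fraction=0.2, seed=0):
    """Split frames into train/test so that no video contributes to both sets."""
    videos = sorted(set(frame_to_video.values()))
    random.Random(seed).shuffle(videos)
    n_test = max(1, round(len(videos) * test_fraction))
    test_videos = set(videos[:n_test])

    train = [f for f, v in frame_to_video.items() if v not in test_videos]
    test = [f for f, v in frame_to_video.items() if v in test_videos]
    return train, test


# Usage: 10 videos with 3 labeled frames each.
mapping = {f"vid{v}_img{i}.png": f"vid{v}" for v in range(10) for i in range(3)}
train_frames, test_frames = split_by_video(mapping)
print(len(train_frames), len(test_frames))  # 24 6
```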

Data Augmentation Protocols

Augmentation artificially expands the training dataset by applying label-preserving transformations, improving model robustness to variability.

Table 2: Common Data Augmentation Parameters in DeepLabCut

| Augmentation Type | Typical Parameter Range | Purpose |

|---|---|---|

| Rotation | ± 25 degrees | Invariance to camera tilt or animal orientation. |

| Translation (X, Y) | ± 0.2 (fraction of frame size) | Invariance to animal position within the frame. |

| Scaling | 0.8x - 1.2x | Invariance to distance from camera. |

| Shearing | ± 0.1 (shear angle in radians) | Simulates perspective changes. |

| Color Jitter (Brightness, Contrast, Saturation, Hue) | Varies by channel | Robustness to lighting condition changes. |

| Motion Blur | Probability: 0.0 - 0.5 | Robustness to fast movement, a key factor in behavioral studies. |

| Cutout / Occlusion | Probability: 0.0 - 0.5 | Forces network to rely on multiple context cues, critical for handling partial occlusion. |

Experimental Protocol: Augmentations are applied stochastically on-the-fly during training. A standard DLC configuration might apply a random combination of rotation (±25°), translation (±0.2), and scaling (0.8-1.2) to every training image in each epoch. The specific pipeline is defined in the pose_cfg.yaml configuration file.
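To make "label-preserving" concrete, the sketch below samples rotation, scaling, and translation parameters within the ranges quoted above and applies them to keypoint coordinates. It covers only the coordinate side of the transformation: in a real pipeline the same affine map is applied to the image, which DLC's augmentation code handles internally. The function names are hypothetical.

```python
import numpy as np


def random_affine(rng, max_rot_deg=25.0, scale_range=(0.8, 1.2), max_shift=0.2):
    """Sample a rotation angle (radians), a scale, and a fractional (x, y) shift."""
    angle = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    scale = rng.uniform(*scale_range)
    shift = rng.uniform(-max_shift, max_shift, size=2)
    return angle, scale, shift


def transform_keypoints(keypoints, angle, scale, shift, image_size):
    """Rotate and scale (x, y) keypoints about the image center, then translate.

    keypoints: array of shape (n, 2); image_size: (width, height) in pixels.
    """
    size = np.asarray(image_size, dtype=float)
    center = size / 2.0
    rot = np.array([[np.cos(angle), -np.sin(angle)],
                    [np.sin(angle), np.cos(angle)]])
    return (keypoints - center) @ rot.T * scale + center + shift * size


# Usage: draw fresh parameters for every training sample.
rng = np.random.default_rng(0)
points = np.array([[210.0, 200.0], [190.0, 180.0]])
augmented = transform_keypoints(points, *random_affine(rng), image_size=(400, 400))
```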

Title: Workflow for On-the-Fly Data Augmentation in Training

Configuring the Neural Network Backbone

DLC leverages pre-trained neural networks via transfer learning. The backbone (e.g., ResNet, EfficientNet) extracts visual features which are then used by deconvolutional layers to predict keypoint heatmaps.

Backbone Comparison for Behavioral Analysis

Choosing a backbone involves a trade-off between speed, accuracy, and computational cost.

Table 3: Comparison of Common Backbones in DeepLabCut for Behavioral Research

| Backbone Architecture | Typical Depth | Key Strengths | Considerations for Drug Development |

|---|---|---|---|

| ResNet-50 | 50 layers | Excellent balance of accuracy and speed; widely benchmarked; highly stable. | Default choice for most assays. Sufficient for tracking 5-20 bodyparts in standard rodent setups. |

| ResNet-101 | 101 layers | Higher accuracy than ResNet-50 due to increased depth and capacity. | Useful for complex poses or many bodyparts (e.g., full mouse paw digits). Increases training/inference time. |

| ResNet-152 | 152 layers | Maximum representational capacity in the ResNet family. | Diminishing returns on accuracy vs. compute. Rarely needed unless data is extremely complex. |

| EfficientNet-B0 | Compound scaled | State-of-the-art efficiency; achieves comparable accuracy to ResNet-50 with fewer parameters. | Ideal for high-throughput screening or real-time applications. May require careful hyperparameter tuning. |

| EfficientNet-B3/B4 | Compound scaled | Higher accuracy than B0, still more efficient than comparable ResNets. | Good choice when accuracy is paramount but GPU memory is constrained. |

| MobileNetV2 | 53 layers | Extremely fast and lightweight. | Accuracy trade-off is significant. Best for proof-of-concept or deployment on edge devices. |

Transfer Learning and Hyperparameter Configuration

The pre-trained backbone is fine-tuned on the annotated animal pose data. Key hyperparameters govern this process.

Experimental Protocol: Network Training Configuration

- Initialization: Load weights from a network pre-trained on ImageNet. Replace the final classification layer with deconvolutional layers for heatmap prediction.

- Training Schedule: Use a multi-step learning rate decay.

  - Initial Learning Rate: 0.001 (1e-3)

  - Decay Steps: Defined by total iterations (e.g., reduce by factor of 10 at 50% and 75% of training).

- Optimizer: Typically Adam or SGD with momentum.

- Batch Size: Maximize based on available GPU memory (e.g., 8, 16, 32). Larger batches provide more stable gradient estimates.

- Iterations: Train until the loss on the validation set plateaus (e.g., 200,000 to 1,000,000 iterations for large projects).

- Loss Function: Mean Squared Error (MSE) over the predicted heatmaps and target Gaussian maps.
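The learning-rate schedule and loss described in this protocol can be written out in a few lines of NumPy. This is an illustrative sketch of the protocol as stated, not DLC's internal implementation. The function names, the sigma of the Gaussian target, and the heatmap size are assumptions.

```python
import numpy as np


def multistep_lr(iteration, total_iters, base_lr=1e-3,
                 milestones=(0.5, 0.75), factor=0.1):
    """Learning rate reduced by `factor` at each fractional milestone of training."""
    n_decays = sum(iteration >= m * total_iters for m in milestones)
    return base_lr * factor ** n_decays


def gaussian_heatmap(height, width, x, y, sigma=2.0):
    """Target heatmap: a 2D Gaussian bump centered on the keypoint (x, y)."""
    ys, xs = np.mgrid[0:height, 0:width]
    return np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))


def mse_loss(predicted, target):
    """Mean squared error between predicted and target heatmaps."""
    return float(np.mean((predicted - target) ** 2))


# Usage: learning rate early, mid, and late in a 200,000-iteration run.
for it in (0, 120_000, 160_000):
    print(it, multistep_lr(it, total_iters=200_000))
```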

Title: Transfer Learning Architecture for Pose Estimation in DeepLabCut

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for DeepLabCut Model Training & Evaluation

| Item / Solution | Function in Stage 4 |

|---|---|

| High-Performance GPU (NVIDIA RTX A6000, V100, or consumer-grade RTX 4090/3090) | Accelerates the computationally intensive neural network training and evaluation process. VRAM (≥ 8GB) determines feasible batch size and model complexity. |

| DeepLabCut Software Environment (Python, TensorFlow/PyTorch, DLC GUI/API) | The core software platform providing the infrastructure for dataset management, network configuration, training, and evaluation. |

| Curated & Annotated Image Dataset (from Stage 3) | The fundamental reagent for model training. Quality and diversity directly determine the model's upper performance limit. |

| Configuration File (pose_cfg.yaml) | The "protocol" document specifying all training parameters: backbone choice, augmentation settings, learning rate, loss function, and iteration count. |

| Validation & Test Video Scenes | Held-out data used as a bioassay to quantify the model's generalization performance and ensure it is not overfitted to the training set. |

| Evaluation Metrics Scripts (e.g., for RMSE, Precision, Train/Test Error plots) | Tools to quantitatively measure model performance, comparable to an assay readout. Critical for benchmarking and publication. |

Within the broader thesis on DeepLabCut (DLC) project lifecycle management, Stage 5 represents the critical operational phase where computational models are forged. This stage translates annotated data into a functional pose estimation tool, demanding rigorous management of iterative optimization, state persistence, and performance tracking. This guide details the protocols and considerations for researchers, particularly in biomedical and pharmacological contexts, where reproducibility and quantitative rigor are paramount.

Iterations: The Engine of Optimization

Model training in DLC is an iterative optimization process that minimizes a loss function, adjusting network parameters to improve prediction accuracy.

Core Iteration Dynamics

The standard DLC pipeline, built upon architectures like ResNet or MobileNet, utilizes stochastic gradient descent (SGD) or Adam optimizers. Each iteration involves a forward pass (prediction) and a backward pass (gradient calculation and weight update) on a batch of data.

Key Quantitative Parameters:

| Parameter | Typical Range / Value (ResNet-50 based network) | Function & Impact |

|---|---|---|

| Batch Size | 1 - 16 (limited by GPU VRAM) | Number of samples processed per iteration. Smaller sizes can regularize but increase noise. |

| Total Iterations | 100,000 - 1,000,000+ | Total optimization steps. Dependent on network size, dataset complexity, and desired convergence. |

| Learning Rate | 0.001 - 0.00001 | Step size for weight updates. Often scheduled to decay over time for stable convergence. |

| Shuffle Iteration | Every 1,000 - 5,000 iterations | Re-randomizes training/validation split to prevent overfitting to a static validation set. |

Experimental Protocol: Setting Up Iterations

- Configuration: In the config.yaml file, set the iteration variable to 0. Define save_iters (checkpoint frequency) and display_iters (loss logging frequency).

- Network Selection: Choose a base network (e.g., resnet_50) balancing speed and accuracy. Deeper networks require more iterations.

- Initialization: Start training from scratch (init_weights: random) or using pre-trained weights (init_weights: pretrained) for transfer learning, which reduces required iterations.

- Launch Command: Execute training via deeplabcut.train_network(config_path).

Checkpoints: Safeguarding Progress and Enabling Analysis

Checkpoints are snapshots of the model's state at a specific iteration, crucial for resilience, evaluation, and deployment.

Checkpoint System Overview:

| Checkpoint Type | Contents | Primary Use Case |

|---|---|---|

| Regular Checkpoint | Model weights, optimizer state, iteration number. | Resuming interrupted training; Analyzing intermediate models. |

| Evaluation Checkpoint | "Best" model weights based on validation loss. | Final model for deployment; Benchmarking performance. |

Experimental Protocol: Managing Checkpoints

- Frequency Setting: In config.yaml, set save_iters: 50000. For long trainings, save every 50k-100k iterations.

- Resuming Training: If interrupted, set init_weights to the path of the last checkpoint file (e.g., ./dlc-models/iteration-0/projectJan01-trainset95shuffle1/train/snapshot-500000) and restart training. It will auto-resume from that iteration.

- Model Evaluation: Use deeplabcut.evaluate_network(config_path, Shuffles=[1]) on specific checkpoint iterations to compare performance metrics (e.g., Mean Average Error) across training stages.
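Locating the most recent checkpoint for the Resuming Training step can be automated. The sketch below assumes checkpoint files in the train folder are named snapshot-<iteration> with optional extensions, as in the example path above; the function latest_snapshot is hypothetical.

```python
import re
from pathlib import Path


def latest_snapshot(train_dir):
    """Return (iteration, path prefix) of the highest-numbered snapshot, or None."""
    best = None
    for path in Path(train_dir).glob("snapshot-*"):
        match = re.match(r"snapshot-(\d+)", path.name)
        if not match:
            continue
        iteration = int(match.group(1))
        if best is None or iteration > best[0]:
            # Keep the extension-free prefix, the form used for init_weights above.
            best = (iteration, str(path.parent / f"snapshot-{iteration}"))
    return best


# Example (placeholder path):
# print(latest_snapshot("./dlc-models/iteration-0/<model-folder>/train"))
```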

Monitoring Loss: The Primary Diagnostic

The loss function quantifies the discrepancy between predicted and true keypoint locations. Monitoring training and validation loss is essential for diagnosing model behavior.

Loss Metrics and Interpretation

| Loss Curve Trend | Interpretation | Potential Action |

|---|---|---|

| Training & Validation Loss Decrease Steadily | Model is learning effectively. | Continue training. |

| Training Loss Decreases, Validation Loss Plateaus/Increases | Overfitting to training data. | Increase augmentation, apply stronger regularization, reduce network capacity, or collect more diverse training data. |

| Loss Stagnates Early | Learning rate may be too low or network architecture insufficient. | Increase learning rate or consider a more powerful base network. |

| Loss is Volatile | Learning rate may be too high or batch size too small. | Decrease learning rate or increase batch size if possible. |
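
The four trends in the table can be turned into a rough automated first check. The sketch below assumes training and validation losses are available as equal-length sequences sampled at the same iterations. The function names, the returned labels, and all three thresholds are hypothetical and would need tuning for each project; the result is a prompt for inspection, not a verdict.

```python
from statistics import mean
from typing import Sequence


def _half_to_half_change(values: Sequence[float]) -> float:
    """Relative change from the mean of the first half to the mean of the second half."""
    half = len(values) // 2
    first, second = mean(values[:half]), mean(values[half:])
    return (second - first) / first


def diagnose_loss(train: Sequence[float], val: Sequence[float],
                  flat_tol: float = 0.05, jump_tol: float = 0.10,
                  jump_frac: float = 0.30) -> str:
    """Map a pair of loss curves onto one of the four trends in the table."""
    if len(train) != len(val) or len(train) < 4:
        raise ValueError("need two equal-length curves with at least 4 points")
    if min(train) <= 0 or min(val) <= 0:
        raise ValueError("losses must be positive")

    # Volatile: a large share of steps push the training loss up by more than jump_tol.
    jumps = sum(1 for a, b in zip(train, train[1:]) if b > a * (1 + jump_tol))
    if jumps / (len(train) - 1) > jump_frac:
        return "volatile"

    if _half_to_half_change(train) > -flat_tol:
        return "stagnating"   # training loss barely moves
    if _half_to_half_change(val) > -flat_tol:
        return "overfitting"  # training improves, validation does not
    return "learning"         # both curves decrease
```

Comparing half-to-half means instead of single end points makes the check less sensitive to one noisy log entry.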

Experimental Protocol: Active Loss Monitoring & Analysis

- Real-time Plotting: DLC automatically generates plots in the model directory (`train/logs`). Monitor these during training.

- Quantitative Validation: After training, use `deeplabcut.plot_training_results(config_path, Shuffles=[1])` to generate a comprehensive plot of loss vs. iteration and accuracy metrics.

- Cross-validation Analysis: For robust thesis-level research, employ k-fold cross-validation. Manually partition data into k subsets, train k models, and aggregate loss/error metrics to report mean ± SEM, ensuring findings are not dependent on a single data split.
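
The manual k-fold partition and the mean ± SEM summary can be sketched in plain Python. This is an illustration under stated assumptions: frames are identified by integer index, each fold's test error comes from a separately trained model, and both function names are hypothetical.

```python
import random
from math import sqrt
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def k_fold_indices(n_items: int, k: int,
                   seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Split range(n_items) into k (train, test) index pairs with disjoint test folds."""
    if not 2 <= k <= n_items:
        raise ValueError("k must be between 2 and n_items")
    order = list(range(n_items))
    random.Random(seed).shuffle(order)       # reproducible shuffle
    folds = [order[i::k] for i in range(k)]  # k folds of near-equal size
    splits = []
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((sorted(train), sorted(test)))
    return splits


def mean_and_sem(values: Sequence[float]) -> Tuple[float, float]:
    """Return the mean and the standard error of the mean (sample SD / sqrt(n))."""
    return mean(values), stdev(values) / sqrt(len(values))
```

After training one model per split, passing the k per-fold test errors to `mean_and_sem` gives the two numbers to report.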

Visualizing the Training Management Workflow

Title: DeepLabCut Training, Checkpoint, and Loss Monitoring Workflow

The Scientist's Toolkit: Research Reagent Solutions for DLC Training

| Item / Solution | Function in Experiment | Technical Notes |

|---|---|---|

| Labeled Training Dataset | The foundational reagent. Provides ground truth for supervised learning. | Must be diverse, representative, and extensively augmented (rotation, scaling, lighting). |

| Pre-trained CNN Weights (e.g., ImageNet) | Enables transfer learning, drastically reducing required iterations and data. | Standard in DLC. Initializes feature extractors with general image recognition priors. |

| NVIDIA GPU with CUDA Support | Accelerates matrix operations during training, making iterative optimization feasible. | A modern GPU (e.g., RTX 3090/4090, A100) is essential for timely experimentation. |

| DeepLabCut `config.yaml` File | The experimental protocol document. Specifies all hyperparameters and paths. | Must be version-controlled. Key to exact reproducibility of training runs. |

| TensorFlow / PyTorch Framework | The underlying computational engine for defining and optimizing neural networks. | DLC 2.x is built on TensorFlow. Provides automatic differentiation for backpropagation. |

| Checkpoint Files (`.index`, `.data-00000-of-00001`, `.meta`) | Persistent storage of model state. Allow for pausing, resuming, and auditing training. | Regularly archived to prevent data loss. The "best" checkpoint is used for final analysis. |

| Loss Log File (e.g., `train/logs.csv`) | Time-series data of training and validation loss. Primary diagnostic for model convergence. | Should be parsed and analyzed programmatically for objective stopping decisions. |

| Evaluation Suite (`deeplabcut.evaluate_network`) | Quantifies model performance using metrics like Mean Average Error (pixels). | Provides objective, quantitative evidence of model accuracy for research publications. |

Within the broader research framework of DeepLabCut (DLC) project lifecycle management, Stage 6 represents the critical validation phase. This stage determines whether a trained pose estimation model is scientifically reliable for downstream analysis in behavioral pharmacology, neurobiology, and preclinical drug development. Rigorous evaluation, encompassing both quantitative loss metrics and qualitative video assessment, is paramount to ensure that extracted kinematic data are valid for statistical inference and hypothesis testing.

Analyzing the Loss Plot: Interpretation and Diagnostic Criteria

The loss plot is the primary quantitative diagnostic tool for training convergence. It visualizes the model's error (predicted vs. true labels) over iterations for both training and validation datasets.

Key Metrics from a Standard DLC Training Output:

Table 1: Quantitative Benchmarks for Interpreting Loss Plots

| Metric | Target Range/Shape | Interpretation & Implication |

|---|---|---|

| Final Training Loss | Typically in the 0.001-0.01 range or lower (varies by project) | Absolute error magnitude. Lower is better, but must be evaluated with validation loss. |

| Final Validation Loss | Should be within ~10-20% of Training Loss | Direct measure of model generalizability. A large gap indicates overfitting. |

| Loss Curve Convergence | Smooth, asymptotic decrease to a plateau | Indicates stable and complete learning. |

| Training-Validation Gap | Small, parallel curves at convergence | Ideal scenario, suggesting excellent generalization. |

| Plateau Duration | Last 10-20% of iterations show minimal change | Suggests training can be terminated. |

Experimental Protocol for Loss Plot Analysis:

- Generate Plot: Using DLC's `deeplabcut.evaluate_network` function, plot losses over iterations from the `scorer` folder.

- Visual Inspection: Check for smooth, asymptotic descent of both curves. Sharp spikes may indicate unstable learning or poor data.

- Quantitative Comparison: Calculate the ratio of Validation Loss to Training Loss at the final iteration. A ratio >1.2 often signals overfitting.

- Diagnose Anomalies:

  - Overfitting (Validation loss >> Training loss): Reduce model capacity (e.g., `net_type='resnet_50'` instead of `101`), increase data augmentation, or add more labeled frames.

  - Underfitting (Both losses high): Increase model capacity, train for more iterations, or check labeling accuracy.

  - High Variance (Curves noisy): Increase batch size or normalize pixel intensities in videos.
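
The quantitative comparison and the plateau criterion from Table 1 can be expressed as two small checks. The sketch below takes the 1.2 ratio and the 20% tail from the text. The function names and the 2% tolerance used to define "minimal change" are hypothetical.

```python
from typing import Sequence


def validation_to_training_ratio(train: Sequence[float],
                                 val: Sequence[float]) -> float:
    """Ratio of validation loss to training loss at the final logged iteration."""
    if train[-1] <= 0:
        raise ValueError("final training loss must be positive")
    return val[-1] / train[-1]


def looks_overfit(train: Sequence[float], val: Sequence[float],
                  threshold: float = 1.2) -> bool:
    """True if the final validation/training ratio exceeds the threshold."""
    return validation_to_training_ratio(train, val) > threshold


def has_plateaued(losses: Sequence[float], tail_fraction: float = 0.2,
                  max_rel_change: float = 0.02) -> bool:
    """True if the loss moved by at most max_rel_change over the final tail of the log."""
    tail_len = max(2, int(len(losses) * tail_fraction))
    tail = losses[-tail_len:]
    return abs(tail[-1] - tail[0]) <= max_rel_change * abs(tail[0])
```

Both checks look only at logged values, so their resolution is bounded by how often the loss is written out during training.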

Diagram Title: Loss Plot Analysis Decision Workflow

Evaluating Videos: Qualitative and Quantitative Assessment

Quantitative loss must be validated by qualitative assessment on held-out videos. This ensures the model performs reliably in diverse, real-world scenarios.

Experimental Protocol for Video Evaluation:

- Create a Novel Video Set: Compile 2-3 representative videos not used in training or validation. These should cover the full behavioral repertoire and experimental conditions.

- Run Pose Estimation: Use `deeplabcut.analyze_videos` to process the novel videos.

- Generate Labeled Videos: Use `deeplabcut.create_labeled_video` to visualize predictions.

- Systematic Scoring:

  - Frame-by-Frame Inspection: Manually scroll through a random sample of frames (≥ 50) to check for gross errors (e.g., limb swaps, predictions drifting to background).

  - Trajectory Smoothness: Observe the plotted trajectories for physical plausibility (no large, discontinuous jumps).

- Quantitative Error Estimation (Optional but Recommended): Manually label a small subset (e.g., 100 frames) from the novel video. Use `deeplabcut.evaluate_network` on this new data to compute a true test error.
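
Parts of this protocol lend themselves to scripting. The sketch below wraps the two DeepLabCut calls named above and adds two helpers for the scoring step. It assumes a working DeepLabCut installation for `analyze_and_label`. The helper names, the fixed random seed, and the `max_step_px` threshold are hypothetical, and a sensible jump threshold depends on frame rate, camera resolution, and the animal's speed.

```python
import random
from math import hypot
from typing import List, Sequence


def analyze_and_label(config_path: str, videos: List[str]) -> None:
    """Run pose estimation, then render labeled videos for visual inspection."""
    import deeplabcut  # imported lazily; requires a DeepLabCut installation

    deeplabcut.analyze_videos(config_path, videos, save_as_csv=True)
    deeplabcut.create_labeled_video(config_path, videos)


def sample_frames_for_review(n_frames: int, n_samples: int = 50,
                             seed: int = 0) -> List[int]:
    """Pick a reproducible random sample of frame indices to inspect by eye."""
    n_samples = min(n_samples, n_frames)
    return sorted(random.Random(seed).sample(range(n_frames), n_samples))


def find_jumps(xs: Sequence[float], ys: Sequence[float],
               max_step_px: float) -> List[int]:
    """Return frame indices where a keypoint moved more than max_step_px in one frame."""
    return [
        i for i in range(1, len(xs))
        if hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) > max_step_px
    ]
```

Frames flagged by `find_jumps` are good candidates to add to the manual inspection sample, since discontinuities often coincide with limb swaps or occlusions.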

Table 2: Video Evaluation Checklist & Acceptance Criteria

| Evaluation Dimension | Acceptance Criteria | Tool/Method |

|---|---|---|

| Labeling Accuracy | >95% of body parts correctly located per frame in sampled frames. | Visual inspection of labeled videos. |

| Limb Swap Incidence | Rare (<1% of frames) or absent for keypoints. | Visual inspection, especially during crossing events. |

| Trajectory Plausibility | Paths are smooth, continuous, and biologically possible. | Observation of tracked paths in labeled video. |

| Robustness to Occlusion | Predictions remain stable during brief occlusions (e.g., by cage wall). | Inspect frames where animal contacts environment. |

| Generalization | Consistent performance across different animals, lighting, or sessions. | Evaluate multiple held-out videos. |

The Scientist's Toolkit: Research Reagent Solutions for DLC Evaluation

Table 3: Essential Toolkit for DLC Performance Evaluation

| Item | Function/Explanation |

|---|---|

| DeepLabCut (v2.3+) | Core open-source software platform for markerless pose estimation. |

| Labeled Training Dataset | The curated set of extracted frames and human-annotated keypoints used for model training. |

| Held-Out Video Corpus | A set of novel, unlabeled videos representing experimental variability, used for final evaluation. |

| GPU-Accelerated Workstation | Essential for efficient training and rapid video analysis (e.g., NVIDIA RTX series). |

| Video Annotation Tool (DLC GUI) | Integrated graphical interface for rapid manual labeling of evaluation frames if needed. |

| Statistical Software (Python/R) | For calculating derived metrics (e.g., velocity, distance) from evaluated pose data for downstream analysis. |

| Project Management Log | A detailed record of model parameters, training iterations, and evaluation results for reproducibility. |

Diagram Title: Stage 6 Evaluation to Model Decision Flow

Within the comprehensive framework of a DeepLabCut project for behavioral analysis in biomedical research, Stage 7 represents the critical juncture where trained models are deployed for pose estimation on novel data. This stage transforms raw video inputs into quantitative, time-series data, generating H5 and CSV files that serve as the foundational dataset for downstream kinematic and behavioral analysis. For researchers in neuroscience and drug development, rigorous execution of this phase is paramount for ensuring reproducible, high-fidelity measurements of animal or human pose, which can be correlated with experimental interventions.

Core Inference Process: From Video to Coordinates

The inference pipeline utilizes the optimized neural network (typically a ResNet-50 or EfficientNet backbone with a deconvolutional head) saved during training. The process involves loading the model, configuring the inference environment, and processing video frames to predict keypoint locations with associated confidence values.

Key Technical Steps:

- Environment Configuration: Inference is run using TensorFlow or PyTorch, depending on the DeepLabCut version. GPU acceleration is strongly recommended.

- Video Preprocessing: Each video is divided into frames. Frames may be cropped or scaled based on the project configuration to match the network's expected input dimensions.

- Forward Pass: Each frame is passed through the network, producing heatmaps (probability distributions) for each defined body part.

- Prediction Extraction: The (x, y) coordinates for each keypoint are extracted from the heatmaps, typically by locating the pixel with the maximum probability.

- Confidence Scoring: A value between 0 and 1 is assigned per keypoint per frame, derived from the heatmap intensity.
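
The prediction-extraction and confidence-scoring steps can be illustrated on a toy heatmap. This is a simplified sketch, not DeepLabCut's actual implementation: it takes a single-body-part heatmap as nested lists, uses the peak value as the confidence, and maps the peak back to image pixels with an assumed output stride of 8. Real pipelines typically add a learned sub-pixel refinement that is omitted here, and both function names are hypothetical.

```python
from typing import Sequence, Tuple


def heatmap_peak(heatmap: Sequence[Sequence[float]]) -> Tuple[int, int, float]:
    """Return (x, y, confidence) for the highest-scoring cell of one heatmap."""
    best_x, best_y, best_p = 0, 0, float("-inf")
    for y, row in enumerate(heatmap):
        for x, p in enumerate(row):
            if p > best_p:
                best_x, best_y, best_p = x, y, p
    return best_x, best_y, best_p


def to_image_coords(x: int, y: int, stride: float = 8.0) -> Tuple[float, float]:
    """Map heatmap cell indices to approximate pixel coordinates at the cell centre."""
    return x * stride + stride / 2, y * stride + stride / 2
```

For a 3x3 heatmap whose centre cell holds the largest value, `heatmap_peak` returns the centre indices together with that value, and `to_image_coords` places the prediction in the middle of the corresponding 8x8 pixel block.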

Quantitative Performance Metrics Table

The following table summarizes common evaluation metrics for pose estimation models, relevant for assessing inference quality before full analysis.

| Metric | Description | Typical Target Value (DLC Projects) | Relevance to Inference Output |

|---|---|---|---|

| Train Error (px) | Mean pixel distance between labeled and predicted points on training set. | < 5-10 px | Indicates model learning capacity. |

| Test Error (px) | Mean pixel distance on the held-out test set. | < 10-15 px | Primary indicator of generalizability. |

| Mean Average Precision (mAP) | Object Keypoint Similarity (OKS)-based metric for multi-keypoint detection. | > 0.8 (varies by keypoint size) | Holistic model performance measure. |

| Inference Speed (FPS) | Frames processed per second on target hardware. | > 30-100 FPS (GPU-dependent) | Determines practical throughput for large-scale studies. |

| Confidence Score (p) | Per-keypoint likelihood. Analysis-specific thresholding required. | p > 0.6 for reliable points | Used to filter low-confidence predictions in downstream analysis. |
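
Two of the metrics in the table reduce to a few lines of arithmetic. The sketch below computes a mean pixel error from paired coordinates and masks predictions at the p > 0.6 cutoff quoted above. It assumes coordinates are already loaded as (x, y) tuples; the function names are hypothetical, and the cutoff should be chosen per analysis.

```python
from math import hypot
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def mean_pixel_error(predicted: Sequence[Point], labeled: Sequence[Point]) -> float:
    """Mean Euclidean distance in pixels between predicted and labeled keypoints."""
    if len(predicted) != len(labeled) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(hypot(px - lx, py - ly)
                for (px, py), (lx, ly) in zip(predicted, labeled))
    return total / len(predicted)


def mask_low_confidence(points: Sequence[Point], likelihoods: Sequence[float],
                        p_cutoff: float = 0.6) -> List[Optional[Point]]:
    """Replace predictions whose likelihood is not above p_cutoff with None."""
    return [pt if p > p_cutoff else None for pt, p in zip(points, likelihoods)]
```

Masking with `None` rather than dropping entries keeps the frame index aligned, which matters when velocities or other time derivatives are computed afterwards.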

Detailed Experimental Protocol for Running Inference

Protocol: Batch Inference on Novel Video Data Using DeepLabCut

Materials: Trained DeepLabCut model (model.pb or .pt file), associated project configuration file (config.yaml), novel video files, high-performance computing environment with GPU.

Methodology:

- Initialization: Launch a Python environment with DeepLabCut installed. Import necessary modules (`deeplabcut`).

- Path Configuration: Update the