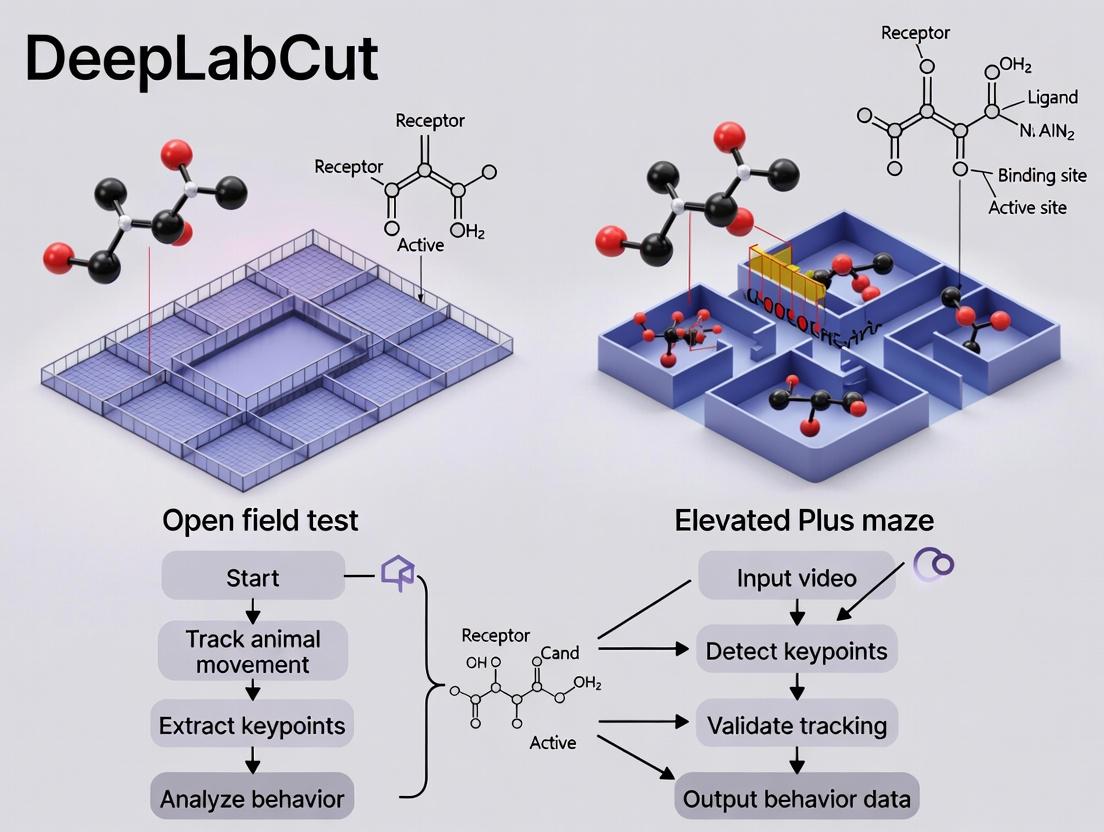

DeepLabCut for Behavioral Neuroscience: A Complete Guide to Automated Analysis of Open Field and Elevated Plus Maze Tests


Abstract

This comprehensive guide explores the application of DeepLabCut, an open-source deep learning framework, for automated pose estimation and behavioral analysis in two fundamental rodent anxiety tests: the Open Field Test (OFT) and the Elevated Plus Maze (EPM). Aimed at researchers and drug development scientists, the article first establishes the foundational principles of markerless tracking and its advantages over traditional methods. It then provides a detailed, step-by-step methodology for implementing DeepLabCut, from project setup and data labeling to network training and trajectory analysis. The guide addresses common troubleshooting challenges and optimization strategies for real-world laboratory conditions. Finally, it critically validates DeepLabCut's performance against established manual scoring and commercial software, discussing its impact on data reproducibility, throughput, and the discovery of novel behavioral biomarkers in preclinical psychopharmacology research.

Why DeepLabCut? Revolutionizing Rodent Behavioral Analysis with Markerless Tracking

This application note details the evolution of behavioral phenotyping methodologies, framed within the context of a broader thesis on implementing DeepLabCut (DLC) for automated, markerless pose estimation in classic rodent behavioral assays: the Open Field Test (OFT) and the Elevated Plus Maze (EPM). We provide updated protocols and data comparisons to guide researchers in transitioning from manual to machine learning-based analysis.

Comparative Data: Manual vs. Automated Phenotyping

Table 1: Performance Comparison of Scoring Methods in OFT & EPM

| Metric | Manual Scoring | Traditional Computer Vision (e.g., Thresholding) | DeepLabCut-Based ML |
|---|---|---|---|
| Time per 10-min trial | 30-45 min | 5-10 min | 2-5 min (post-model training) |
| Inter-rater Reliability (IRR) | 0.70-0.85 (Cohen's Kappa) | N/A | >0.95 (vs. ground truth) |
| Keypoint Tracking Accuracy | N/A | Low in poor lighting/clutter | ~97% (pixel error <5) |
| Assay Throughput | Low | Medium | High |
| Measurable Parameters | Limited (~5-10) | Moderate (10-15) | Extensive (50+, including kinematics) |
| Susceptibility to Subject Coat Color | Low | High | Low (with proper training) |

Table 2: Sample Phenotyping Data from DLC-Augmented Assays (Representative Values)

| Behavioral Parameter | OFT (Control Group Mean ± SEM) | EPM (Control Group Mean ± SEM) | Primary Inference |
|---|---|---|---|
| Total Distance Travelled (cm) | 2500 ± 150 | 800 ± 75 | General locomotor activity |
| Time in Center/Open Arms (s) | 120 ± 20 | 180 ± 25 | Anxiety-like behavior |
| Rearing Count | 35 ± 5 | N/A | Exploratory drive |
| Head-Dipping Count (EPM) | N/A | 12 ± 3 | Risk-assessment behavior |
| Grooming Duration (s) | 90 ± 15 | N/A | Self-directed behavior / stress |
| Average Velocity (cm/s) | 4.2 ± 0.3 | 2.1 ± 0.2 | Movement dynamics |

Detailed Experimental Protocols

Protocol 1: Establishing a DeepLabCut Workflow for OFT and EPM

Objective: To create and deploy a DLC model for automated behavioral scoring.

- Video Acquisition:
  - Record rodent behavior (e.g., C57BL/6J mouse) in a standard OFT (40 cm x 40 cm) or EPM apparatus.
  - Use consistent, diffuse lighting to avoid shadows. Place the camera directly overhead.
  - Frame Rate: 30 fps. Resolution: 1080p (1920x1080) minimum.
  - Save videos in a lossless or high-quality compressed format (e.g., .avi, or .mp4 with high bitrate).

- DLC Project Creation & Labeling:
  - Install DeepLabCut (>=2.3.0) in a Python environment.
  - Create a new project for each assay. Define key body parts: `snout`, `left_ear`, `right_ear`, `center_back`, `tail_base`.
  - Extract ~100-200 frames from your video corpus across various conditions and animals.
  - Manually label the defined keypoints on each extracted frame to create the ground truth training dataset.

- Model Training:
  - Use a pre-trained ResNet-50 or MobileNet-v2 as the base network.
  - Train the network for 103,000 iterations, evaluating loss plots (train and test) to avoid overfitting.
  - Success Criterion: Train error plateaus and test error is within 2-5 pixels.

- Video Analysis & Pose Estimation:
  - Analyze new videos using the trained model (`deeplabcut.analyze_videos`).
  - Filter predicted poses using `deeplabcut.filterpredictions` (e.g., median filter with window length 5).

- Behavioral Feature Extraction:
  - Use DLC output (`.h5` files) to calculate metrics.
  - For OFT: Compute `time_in_center`, `total_distance`, `rear_count` (snout velocity/position threshold).
  - For EPM: Compute `time_in_open_arms`, `entries_per_arm`, `head_dips` (snout position relative to maze edge).
  - Use custom scripts (Python/R) for statistical analysis.
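As a rough illustration of the feature-extraction step, the sketch below computes total distance and mean velocity from one keypoint's trajectory. It is a minimal sketch under stated assumptions: the trajectory is given as a plain list of (x, y) pixel positions rather than read from a DLC `.h5` file, and the function names, the `cm_per_px` scale, and the toy values are hypothetical.

```python
import math

def total_distance_cm(xy_px, cm_per_px):
    """Sum of frame-to-frame displacements of one keypoint, in cm."""
    dist_px = 0.0
    for (x0, y0), (x1, y1) in zip(xy_px, xy_px[1:]):
        dist_px += math.hypot(x1 - x0, y1 - y0)
    return dist_px * cm_per_px

def mean_velocity_cm_s(xy_px, cm_per_px, fps):
    """Average speed over the trial: path length divided by elapsed time."""
    duration_s = (len(xy_px) - 1) / fps
    return total_distance_cm(xy_px, cm_per_px) / duration_s

# Toy trajectory in pixels (illustrative values, not real data):
# a 5-pixel step followed by a 10-pixel step.
track = [(0.0, 0.0), (3.0, 4.0), (9.0, 12.0)]
distance = total_distance_cm(track, cm_per_px=0.1)
velocity = mean_velocity_cm_s(track, cm_per_px=0.1, fps=30)
print(f"distance = {distance:.2f} cm, mean velocity = {velocity:.2f} cm/s")
```

In practice the trajectory would usually be smoothed first, since frame-to-frame jitter in the predicted keypoint inflates the summed path length.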

Protocol 2: Validation Against Manual Scoring

Objective: To validate the DLC-derived metrics against traditional human scoring.

- Blinded Manual Scoring: Have 2-3 trained experimenters manually score a randomly selected subset of videos (n=20) for core parameters (time in zone, entries).

- Automated Scoring: Run the same videos through the validated DLC pipeline.

- Statistical Agreement Analysis:

- Calculate Intraclass Correlation Coefficient (ICC) or Pearson's r between manual and DLC scores for continuous data.

- Perform Bland-Altman analysis to assess bias between methods.

- Acceptance Threshold: ICC > 0.90 indicates excellent agreement, allowing replacement of manual scoring.
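The agreement analysis can be sketched in a few lines. The example below computes Pearson's r and the Bland-Altman bias with 95% limits of agreement; it does not compute the ICC, which is normally obtained from a statistics package. The function names and the paired scores are hypothetical placeholders, not measured data.

```python
import math
import statistics

def pearson_r(a, b):
    """Pearson correlation between two equally long score lists."""
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def bland_altman(manual, automated):
    """Return (bias, lower limit, upper limit) of automated minus manual."""
    diffs = [auto - man for man, auto in zip(manual, automated)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Placeholder time-in-zone scores in seconds (illustrative only).
manual_s = [100.0, 120.0, 90.0, 150.0, 110.0]
dlc_s = [102.0, 121.0, 93.0, 151.0, 113.0]

r = pearson_r(manual_s, dlc_s)
bias, lower, upper = bland_altman(manual_s, dlc_s)
print(f"r = {r:.3f}, bias = {bias:.2f} s, limits = [{lower:.2f}, {upper:.2f}] s")
```

A high correlation alone does not rule out a systematic offset between methods, which is why the bias and limits of agreement are reported alongside it.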

Visual Workflows

Title: DLC Workflow for Behavioral Analysis

Title: From Keypoints to Behavioral Metrics

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for ML-Driven Behavioral Phenotyping

| Item | Function & Specification | Example/Notes |
|---|---|---|
| Behavioral Apparatus | Standardized testing environment. OFT: 40x40cm open arena. EPM: Two open & two closed arms, elevated ~50cm. | Clever Sys Inc., Med Associates, custom-built. |
| High-Speed Camera | High-resolution video capture for precise movement tracking. Min: 1080p @ 30fps. | Logitech Brio, Basler ace, or similar USB/network cameras. |
| Diffuse Lighting System | Provides consistent, shadow-free illumination crucial for computer vision. | LED panels with diffusers, IR lighting for dark phase. |
| DeepLabCut Software | Open-source toolbox for markerless pose estimation via deep learning. | Install via pip/conda. Requires GPU (NVIDIA recommended) for efficient training. |
| Labeling Interface (DLC GUI) | Graphical tool for creating ground truth data by manually annotating animal body parts. | Integrated within DeepLabCut. |
| Compute Hardware | Accelerates model training. A dedicated GPU drastically reduces training time. | NVIDIA GPU (GTX 1080 Ti or higher) with CUDA/cuDNN support. |
| Data Analysis Suite | Software for statistical analysis and visualization of extracted behavioral features. | Python (Pandas, NumPy, SciPy), R, commercial options (EthoVision XT). |
| Animal Cohort | Genetically or pharmacologically defined experimental and control groups. | Common: C57BL/6J mice, Sprague-Dawley rats. N ≥ 10/group for robust stats. |

This application note details the core principles and protocols for implementing DeepLabCut (DLC), an open-source toolbox for markerless pose estimation based on transfer learning with deep neural networks. Framed within a broader thesis on behavioral neuroscience, this document focuses on deploying DLC for the automated analysis of rodent behavior in standard pharmacological assays, specifically the Open Field Test (OFT) and the Elevated Plus Maze (EPM). The precise quantification of posture and movement afforded by DLC enables researchers and drug development professionals to extract high-dimensional, unbiased ethological data, surpassing traditional manual scoring.

Core Technical Principles

DeepLabCut's power stems from adapting the feature detectors of DeeperCut, a state-of-the-art human pose estimation method, to animals, using backbone networks such as ResNet and MobileNetV2 that were pre-trained for image classification. The core workflow involves:

- Frame Selection: Extracting representative frames from videos.

- Labeling: Manually annotating body parts (keypoints) on these frames.

- Training: Using these labels to fine-tune a pre-trained neural network.

- Evaluation: Assessing the network's prediction accuracy on held-out data.

- Analysis: Analyzing new videos to generate pose estimation data for downstream behavioral analysis.

Key principles include transfer learning (leveraging features learned on large image datasets like ImageNet), data augmentation (artificially expanding the training set via rotations, cropping, etc.), and iterative refinement (extracting poorly predicted frames, correcting their labels, and retraining) for improved prediction confidence.

Application in OFT and EPM: Key Metrics & Protocols

The application of DLC transforms traditional manual scoring into automated, quantitative phenotyping. Below are core measurable outputs for OFT and EPM.

Table 1: Key Behavioral Metrics Quantified by DeepLabCut

| Assay | Primary Metric (DLC-Derived) | Description & Pharmacological Relevance | Typical Baseline Values (Mouse, C57BL/6J)* |
|---|---|---|---|
| Open Field Test | Total Distance Traveled | Sum of centroid movement. Measures general locomotor activity. Sensitive to stimulants/sedatives. | 2000-4000 cm / 10 min |
| Open Field Test | Time in Center Zone | Duration spent in defined central area. Measures anxiety-like behavior (thigmotaxis). Increased by anxiolytics. | 15-30% of session |
| Open Field Test | Rearing Frequency | Count of upright postures (from snout/keypoint tracking). Measures exploratory drive. | 20-50 events / 10 min |
| Elevated Plus Maze | % Open Arm Time | (Time in Open Arms / Total Time) * 100. Gold standard for anxiety-like behavior. Increased by anxiolytics. | 10-25% of session |
| Elevated Plus Maze | Open Arm Entries | Number of entries into open arms. Often combined with time. | 3-8 entries / 5 min |
| Elevated Plus Maze | Risk Assessment Postures | Quantified stretch-attend postures (via pixel/sculpt analysis). Ethologically relevant measure of conflict. | Protocol dependent |

*Values are approximate and highly dependent on specific experimental setup, animal strain, and habituation.

Experimental Protocol 1: Video Acquisition for DLC Analysis

Aim: To record high-quality, consistent video for optimal pose estimation in OFT and EPM.

Materials: Rodent OFT/EPM apparatus, high-contrast background, uniform lighting, high-resolution camera (≥1080p, 30 fps), tripod, video acquisition software.

Procedure:

- Setup: Position camera directly above OFT (for top-down view) or at a slight angle for EPM to capture all arms. Ensure uniform, shadow-free illumination.

- Background: Use a solid, non-reflective background color (e.g., white or black) that contrasts with the animal's fur.

- Calibration: Place a ruler or object of known scale in the arena floor and record it to enable pixel-to-cm conversion later.

- Recording: Start recording. Introduce animal to the center of the OFT or the central platform of the EPM.

- Acquisition: Record for standard test duration (e.g., 10 min for OFT, 5 min for EPM). Maintain consistent room lighting and noise levels.

- File Management: Save videos in a lossless or high-quality compressed format (e.g., .avi, .mp4 with H.264 codec). Name files systematically (e.g., `Drug_Dose_AnimalID_Date.avi`).
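The calibration step reduces to a single scale factor. The sketch below derives it from two pixel positions marked on the recorded ruler; the function names and the example coordinates are hypothetical, and it assumes an overhead camera with negligible lens distortion so that one factor applies across the arena floor.

```python
import math

def cm_per_pixel(p1_px, p2_px, known_length_cm):
    """Scale factor from two reference points a known distance apart."""
    pixel_length = math.dist(p1_px, p2_px)
    if pixel_length == 0:
        raise ValueError("Reference points must be distinct")
    return known_length_cm / pixel_length

def to_cm(point_px, scale):
    """Convert one (x, y) pixel coordinate to centimetres."""
    return (point_px[0] * scale, point_px[1] * scale)

# Example: the two ends of a 40 cm ruler, clicked in one video frame.
scale = cm_per_pixel((100, 200), (500, 200), known_length_cm=40.0)
print(f"{scale:.3f} cm per pixel")
print(to_cm((250, 300), scale))
```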

Experimental Protocol 2: DLC Workflow for Behavioral Analysis

Aim: To train a DeepLabCut network and analyze videos for OFT/EPM.

Materials: Computer with GPU (recommended), DeepLabCut software (via Anaconda), labeled training datasets, recorded behavioral videos.

Procedure:

- Project Creation: Create a new DLC project specifying the assay (OFT/EPM) and defining the body parts (keypoints: snout, ears, centroid, tail base, paws for OFT; plus trunk for EPM).

- Frame Extraction & Labeling: Extract frames from multiple videos to capture diverse poses and lighting. Manually label all keypoints on ~100-200 frames using the DLC GUI.

- Network Training: Create a training dataset and configure the neural network parameters (e.g., using ResNet-50). Train the network for ~50,000-200,000 iterations until the loss plateaus.

- Network Evaluation: Use the built-in evaluation tools to analyze the network's performance on a held-out "test" set. Scrutinize frames with low confidence and refine training if necessary.

- Video Analysis: Process all experimental videos through the trained network to obtain pose estimation data (X,Y coordinates and confidence per keypoint per frame).

- Post-Processing: Filter predictions based on confidence, correct rare outliers, and calculate derived metrics (Table 1) using custom scripts or DLC's analysis functions.

- Statistical Analysis: Export data for statistical comparison between treatment groups (e.g., using ANOVA or t-tests).
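The training-to-analysis portion of this procedure can be sketched as one function. The calls below follow the DeepLabCut 2.x (TensorFlow-based) Python API; newer releases with a PyTorch backend configure training length differently, so treat the `maxiters` argument as an assumption tied to 2.x. The wrapper name `run_dlc_pipeline`, its default of 100,000 iterations, and the example paths are hypothetical. Frame extraction and labeling are interactive and are assumed to be complete before the function is called.

```python
def run_dlc_pipeline(config_path, videos, maxiters=100_000, dlc=None):
    """Train, evaluate, analyze and filter for an already labeled project.

    `dlc` lets a caller pass the module in explicitly; by default the
    installed deeplabcut package is imported when the function is called.
    """
    if dlc is None:
        import deeplabcut as dlc

    # Build the train/test split from the labeled frames.
    dlc.create_training_dataset(config_path)
    # Fine-tune the pre-trained network (iteration count is a placeholder).
    dlc.train_network(config_path, maxiters=maxiters)
    # Report train/test pixel error and save labeled evaluation images.
    dlc.evaluate_network(config_path, plotting=True)
    # Predict keypoints for every video; also write CSV next to the .h5 output.
    dlc.analyze_videos(config_path, videos, save_as_csv=True)
    # Smooth the predictions with a median filter.
    dlc.filterpredictions(config_path, videos, filtertype="median", windowlength=5)

# Example call (paths are placeholders):
# run_dlc_pipeline("/path/to/project/config.yaml", ["/path/to/videos/OFT_M01.mp4"])
```

Keeping the module as an optional argument is only a convenience for dry runs and testing; calling the `deeplabcut` functions directly in a notebook is equally valid.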

Title: DeepLabCut Workflow for Behavioral Analysis

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions & Materials

| Item | Function in DLC Workflow | Example/Notes |
|---|---|---|
| DeepLabCut Software | Core open-source platform for creating, training, and evaluating pose estimation models. | Install via Anaconda. Versions 2.x+ offer improved features. |
| GPU-Accelerated Workstation | Drastically reduces time required for network training and video analysis. | NVIDIA GPU with CUDA support (e.g., RTX 3090/4090). |
| High-Resolution Camera | Captures clear video with sufficient detail for accurate keypoint detection. | USB 3.0 or GigE camera with global shutter (e.g., Basler, FLIR). |
| Behavioral Apparatus | Standardized testing environment for OFT and EPM assays. | Commercially available or custom-built with consistent dimensions. |
| High-Contrast Bedding/Background | Maximizes contrast between animal and environment, improving model accuracy. | Use white bedding for dark-furred mice, and vice versa. |
| Video Conversion Software | Converts proprietary camera formats to DLC-compatible files (e.g., .mp4, .avi). | FFmpeg (open-source) or commercial tools. |
| Data Analysis Suite | For statistical analysis and visualization of DLC-derived metrics. | Python (Pandas, NumPy, Seaborn) or R (ggplot2). |
| Labeling Tool (Integrated in DLC) | GUI for manual annotation of body parts on training image frames. | DLC's built-in GUI is the standard. |

Title: DeepLabCut's Transfer Learning Principle

Within the broader thesis on employing DeepLabCut (DLC) for automated, markerless pose estimation in rodent behavioral neuroscience, precise operational definitions of key anxiety-related metrics are paramount. This document provides detailed application notes and protocols for quantifying anxiety-like behavior in the Open Field Test (OFT) and Elevated Plus Maze (EPM), two cornerstone assays. By standardizing these definitions, DLC-based analysis pipelines can generate reproducible, high-throughput data for researchers and drug development professionals.

Core Anxiety-Related Metrics and Quantitative Summaries

The following metrics are derived from the animal's positional tracking data (typically the centroid or base-of-tail point) generated by DLC.

Table 1: Key Anxiety-Related Metrics in OFT and EPM

| Test | Primary Metric | Definition | Interpretation (Increased Value Indicates...) | Typical Baseline Ranges (C57BL/6J Mouse) |
|---|---|---|---|---|
| Open Field Test (OFT) | Center Time (%) | (Time spent in center zone / Total session time) * 100 | ↓ Anxiety-like behavior | 10-25% (in a 40cm center zone of a 100cm arena) |
| Open Field Test (OFT) | Center Distance (%) | (Distance traveled in center zone / Total distance traveled) * 100 | ↓ Anxiety-like behavior | 15-30% |
| Open Field Test (OFT) | Total Distance (m) | Total path length traveled in the entire arena. | General locomotor activity | 15-30 m (10-min test) |
| Elevated Plus Maze (EPM) | Open Arm Time (%) | (Time spent in open arms / Total time on all arms) * 100 | ↓ Anxiety-like behavior | 20-40% |
| Elevated Plus Maze (EPM) | Open Arm Entries (%) | (Entries into open arms / Total entries into all arms) * 100 | ↓ Anxiety-like behavior | 30-50% |
| Elevated Plus Maze (EPM) | Total Arm Entries | Sum of entries into all arms (open + closed). | General locomotor activity | 10-25 entries (5-min test) |

Detailed Experimental Protocols

Protocol 1: Open Field Test (OFT) for Mice

Objective: To assess anxiety-like behavior (center avoidance) and general locomotor activity.

Materials: Open field arena (e.g., 100 x 100 cm), white LED illumination (~300 lux at center), video camera mounted overhead, computer with DLC and analysis software (e.g., Bonsai, EthoVision, custom Python scripts).

Procedure:

- Habituation: Transport animals to the testing room at least 1 hour prior to testing.

- Setup: Clean the arena thoroughly with 70% ethanol between subjects. Ensure consistent, diffuse lighting.

- Testing: Gently place the mouse in the center of the arena. Start video recording immediately.

- Session: Allow free exploration for 10 minutes.

- Termination: Return the mouse to its home cage.

- Analysis: Use DLC to track the animal's position. Define a virtual center zone (e.g., 40 x 40 cm for a 100 cm arena). Calculate metrics from Table 1.
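The virtual center zone and the two center metrics can be sketched as follows. This is a minimal sketch that assumes the tracked point has already been converted to centimetres with the origin at one arena corner, and that a movement step counts as center travel only when both of its endpoints lie inside the zone. The function names and the toy track are hypothetical.

```python
import math

def in_center(point_cm, arena_cm=100.0, center_cm=40.0):
    """True if the point lies inside the centered square zone."""
    lo = (arena_cm - center_cm) / 2
    hi = lo + center_cm
    x, y = point_cm
    return lo <= x <= hi and lo <= y <= hi

def center_metrics(track_cm, arena_cm=100.0, center_cm=40.0):
    """Return (center time %, center distance %) for one trajectory."""
    flags = [in_center(p, arena_cm, center_cm) for p in track_cm]
    center_time_pct = 100.0 * sum(flags) / len(flags)

    total = 0.0
    center = 0.0
    for p0, p1, f0, f1 in zip(track_cm, track_cm[1:], flags, flags[1:]):
        step = math.dist(p0, p1)
        total += step
        if f0 and f1:  # step taken entirely within the center zone
            center += step
    center_dist_pct = 100.0 * center / total if total else 0.0
    return center_time_pct, center_dist_pct

# Toy track: the animal walks from the wall toward the middle of the arena.
track = [(10, 50), (20, 50), (30, 50), (40, 50), (50, 50)]
time_pct, dist_pct = center_metrics(track)
print(f"center time = {time_pct:.1f}%, center distance = {dist_pct:.1f}%")
```

Because every frame contributes equally, the time percentage assumes a constant frame rate with no dropped frames.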

Protocol 2: Elevated Plus Maze (EPM) for Mice

Objective: To assess anxiety-like behavior based on the conflict between exploring novel, open spaces and the innate aversion to elevated, open areas.

Materials: Elevated plus maze (open arms: 30 x 5 cm; closed arms: 30 x 5 cm with 15-20 cm high walls; elevation: 50-70 cm), dim red or white light (<50 lux on open arms), video camera, computer with DLC.

Procedure:

- Habituation: As per OFT.

- Setup: Clean maze with 70% ethanol. Ensure arms are level and lighting is even.

- Testing: Place the mouse in the central platform (10 x 10 cm), facing an open arm. Start recording.

- Session: Allow free exploration for 5 minutes.

- Termination: Return the mouse to its home cage.

- Analysis: Use DLC to track the animal's position. Define virtual zones for open arms, closed arms, and center. An "entry" is defined as the center point of the animal crossing into an arm. Calculate metrics from Table 1.
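One way to implement the zone logic is sketched below. The geometry is an assumption made for illustration: coordinates are in centimetres with the origin at the middle of the central platform, open arms run along the x-axis, closed arms along the y-axis, and the platform is the 10 x 10 cm square around the origin. The function names and the toy track are hypothetical. An entry is counted whenever the tracked center point moves into an arm zone from any other zone.

```python
def classify_zone(x_cm, y_cm, half_center=5.0):
    """Assign one tracked point to a maze zone (assumed axis-aligned maze)."""
    if abs(x_cm) <= half_center and abs(y_cm) <= half_center:
        return "center"
    if abs(y_cm) <= half_center:
        return "open"      # assumed: open arms along the x-axis
    if abs(x_cm) <= half_center:
        return "closed"    # assumed: closed arms along the y-axis
    return "off_maze"      # tracking error or point outside the maze

def epm_metrics(track_cm):
    """Return (open arm time %, entry counts, open arm entries %)."""
    zones = [classify_zone(x, y) for x, y in track_cm]
    open_frames = zones.count("open")
    arm_frames = open_frames + zones.count("closed")

    entries = {"open": 0, "closed": 0}
    for prev, cur in zip(zones, zones[1:]):
        if cur in entries and cur != prev:
            entries[cur] += 1

    total_entries = entries["open"] + entries["closed"]
    open_time_pct = 100.0 * open_frames / arm_frames if arm_frames else 0.0
    open_entries_pct = 100.0 * entries["open"] / total_entries if total_entries else 0.0
    return open_time_pct, entries, open_entries_pct

# Toy track: center -> open arm -> center -> closed arm -> center -> open arm.
track = [(0, 0), (8, 0), (12, 0), (0, 0), (0, 9), (0, 0), (-10, 0)]
open_time_pct, entries, open_entries_pct = epm_metrics(track)
print(open_time_pct, entries, round(open_entries_pct, 1))
```

A point that jitters across a zone boundary will inflate the entry count, so real pipelines often require a minimum dwell time or a short hysteresis margin before registering an entry.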

Visualization of DLC-Based Workflow for Anxiety Phenotyping

DLC Workflow for Anxiety Tests

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Research Reagent Solutions for Behavioral Phenotyping

| Item | Function/Brief Explanation |
|---|---|
| DeepLabCut (DLC) | Open-source software for markerless pose estimation via deep learning. Converts video into time-series coordinate data for keypoints (e.g., nose, tail base). |
| High-Resolution USB/Network Camera | Captures high-frame-rate video for precise tracking. Global shutter is preferred to reduce motion blur. |
| Behavioral Arena (OFT & EPM) | Standardized apparatuses. OFT: Large, open, often white acrylic box. EPM: Plus-shaped maze elevated above ground with two open and two enclosed arms. |
| Ethanol (70%) | Standard cleaning agent to remove olfactory cues between animal trials, preventing interference. |
| Video Recording/Analysis Software (e.g., Bonsai, EthoVision) | Used to acquire video streams or analyze DLC output to compute behavioral metrics based on virtual zones. |
| Anxiolytic Control (e.g., Diazepam) | Benzodiazepine positive control used to validate assay sensitivity (should increase open arm/center exploration). |
| Anxiogenic Control (e.g., FG-7142) | Benzodiazepine-site partial inverse agonist used as an anxiogenic reference compound (should decrease open arm/center exploration). |
| Data Analysis Environment (Python/R) | For implementing custom scripts to process DLC output, calculate advanced metrics, and perform statistics. |

1. Introduction: Framing within a DLC Thesis for OFT & EPM

This document details application notes and protocols for using DeepLabCut (DLC)-based pose estimation to quantify rodent behavior in the Open Field Test (OFT) and Elevated Plus Maze (EPM). The broader thesis posits that DLC overcomes critical limitations of traditional manual scoring and basic video tracking by providing an objective, high-throughput framework for extracting rich, high-dimensional behavioral data. This shift enables more sensitive and reproducible phenotyping in neuropsychiatric and pharmacological research.

2. Comparative Advantages: Quantitative Summary

Table 1: Method Comparison for OFT/EPM Analysis

| Metric | Traditional Manual Scoring | Traditional Automated Tracking (Threshold-Based) | DeepLabCut-Based Pose Estimation |
|---|---|---|---|
| Objectivity | Low (Inter-rater variability ~15-25%) | Medium (Sensitive to lighting, contrast) | High (Algorithm-defined, consistent) |
| Throughput | Low (5-10 min/video for basic measures) | High (Batch processing possible) | Very High (Batch processing of deep features) |
| Primary Data | Discrete counts, latencies, durations. | Centroid XY, basic movement, time-in-zone. | Full-body pose (X,Y for 8-12+ body parts), dynamics. |
| Rich Data Extraction | Limited to predefined acts. | Limited to centroid-derived metrics. | High (Gait, posture, micro-movements, risk-assessment dynamics) |
| Sensitivity to Drug Effects | Moderate, coarse. | Moderate for locomotion. | High, can detect subtle kinematic changes. |

3. Application Notes & Key Protocols

3.1. Protocol: Implementing DLC for OFT/EPM from Data Acquisition to Analysis

A. Experimental Setup & Video Acquisition:

- Use a consistent, well-lit arena with a high-contrast, uniform background (e.g., white for dark-furred rodents).

- Mount camera(s) orthogonally to the plane of the maze. For EPM, ensure both open and closed arms are fully visible.

- Record videos at a minimum of 30 fps, with consistent resolution (e.g., 1920x1080). Save in a lossless or high-quality compressed format (e.g., .mp4 with H.264).

- Calibration: Place a ruler or object of known dimension in the maze plane at the start/end of sessions for pixel-to-cm conversion.

B. DeepLabCut Workflow:

- Frame Selection: Extract frames (~100-200) from a subset of videos representing the full behavioral repertoire and varying animal positions.

- Labeling: Manually label key body parts (e.g., snout, ears, center-back, tail-base, tail-tip, all four paws) on the extracted frames using DLC's GUI.

- Training: Create a training dataset (80% of labeled frames). Train a neural network (e.g., ResNet-50) until the loss plateaus (typically 200k-500k iterations). Validate on the remaining 20%.

- Video Analysis: Use the trained model to analyze all experimental videos, generating output files (HDF5 by default, with optional CSV export) that contain X,Y coordinates and confidence for each body part per frame.

C. Post-Processing & Derived Metrics:

- Filtering: Apply a median filter or ARIMA model to smooth trajectories. Use confidence thresholds (e.g., 0.9) to filter low-likelihood points.

- Core OFT Metrics: Calculate from the centroid (center-back point):

- Total Distance Travelled (cm)

- Velocity (cm/s)

- Time in Center Zone (vs. periphery)

- Number of Center Entries

- Core EPM Metrics: Calculate from the snout point:

- % Time in Open Arms

- Open Arm Entries

- Total Arm Entries (measure of general activity)

- Rich Kinematic & Postural Metrics (DLC-Specific):

- Risk Assessment (EPM): Stretch-attend postures quantified by distance between snout and tail-base, or snout proximity to open arm entry while hind-paws are in closed arm.

- Gait Analysis (OFT): Stride length, stance width, from paw trajectories.

- Postural Compaction (Anxiety): Variance in area of polygon defined by all body points.

- Micro-movements: Velocity of individual body parts (e.g., head-scanning).
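Several of the post-processing steps and postural metrics above reduce to short geometric calculations. The sketch below shows confidence masking, the snout to tail-base distance used as a stretch-attend proxy, and the area of the polygon spanned by body points via the shoelace formula. All function names are hypothetical, the 0.9 threshold is the example value from the text, and the polygon vertices are assumed to be supplied in order around the body outline.

```python
import math

def mask_low_confidence(points, threshold=0.9):
    """Replace (x, y, likelihood) triples below the threshold with None."""
    return [(x, y) if p >= threshold else None for x, y, p in points]

def body_length(snout, tail_base):
    """Snout to tail-base distance; larger values suggest a stretched posture."""
    return math.dist(snout, tail_base)

def polygon_area(vertices):
    """Shoelace formula; vertices must be ordered around the outline."""
    area2 = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]
        area2 += x0 * y1 - x1 * y0
    return abs(area2) / 2.0

# Illustrative values only.
masked = mask_low_confidence([(1.0, 2.0, 0.95), (3.0, 4.0, 0.50)])
length = body_length((0.0, 0.0), (3.0, 4.0))
area = polygon_area([(0, 0), (4, 0), (4, 3), (0, 3)])
print(masked, length, area)
```

Postural compaction as described above would then be the variance of `polygon_area` across frames, computed after masked frames are dropped or interpolated.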

3.2. Protocol: Validating DLC Against Traditional Measures for Pharmacological Studies

- Objective: Correlate novel DLC-derived metrics with established manual scores to validate sensitivity.

- Method:

- Administer an anxiolytic (e.g., diazepam, 1 mg/kg i.p.) or anxiogenic (e.g., FG-7142, 5 mg/kg i.p.) to rodent cohorts (n=8-12/group).

- Conduct OFT and EPM 30 minutes post-injection.

- Blinded Manual Scoring: A trained rater scores videos for traditional measures (time in center/open arms).

- DLC Analysis: Process the same videos through the established DLC pipeline.

- Statistical Correlation: Perform Pearson/Spearman correlation between manual scores and DLC-derived metrics (both traditional zone-based and novel kinematic).

- Expected Outcome: High correlation (>0.85) for zone-based metrics, demonstrating convergent validity. Novel DLC metrics (e.g., postural compaction) may show larger effect sizes for drug treatment, revealing enhanced sensitivity.
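To compare effect sizes between a traditional metric and a novel DLC-derived one, a standardized measure such as Cohen's d can be computed per metric. The sketch below uses the pooled-standard-deviation form; the function name and the group values are made-up placeholders for illustration, not experimental results.

```python
import math
import statistics

def cohens_d(group_a, group_b):
    """Cohen's d (group_a minus group_b) using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.fmean(group_a) - statistics.fmean(group_b)) / pooled_sd

# Placeholder % open-arm time values for two small groups.
vehicle = [10.0, 12.0, 14.0]
diazepam = [16.0, 18.0, 20.0]
d = cohens_d(diazepam, vehicle)
print(f"Cohen's d = {d:.2f}")
```

Running the same function on each metric for the same animals gives directly comparable numbers, which is what the sensitivity claim in the expected outcome rests on.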

4. Visualization: Experimental Workflow & Data Extraction Logic

Diagram Title: DLC Analysis Workflow for OFT & EPM from Video to Metrics

5. The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for DLC-based OFT/EPM Studies

| Item | Function & Rationale |
|---|---|
| DeepLabCut Software (v2.3+) | Open-source pose estimation toolbox. Core platform for model training and analysis. |
| GPU Workstation (NVIDIA) | Accelerates neural network training and video analysis, reducing processing time from days to hours. |
| High-Resolution USB/Network Camera | Provides clear, consistent video input. Global shutter cameras reduce motion blur. |
| Standard OFT & EPM Arenas | Consistent physical testing environments. Opt for white arenas for dark-furred rodents to aid contrast. |
| Video Conversion Software (e.g., FFmpeg) | Standardizes video formats (to .mp4 or .avi) for reliable processing in DLC pipeline. |
| Python Data Stack (NumPy, pandas, SciPy) | For custom post-processing, filtering of DLC outputs, and calculation of derived metrics. |
| Statistical Software (R, PRISM, Python/statsmodels) | For advanced analysis of high-dimensional behavioral data, including multivariate statistics. |
| Behavioral Annotation Software (BORIS, EthoVision XT) | Optional. For creating ground-truth labeled datasets to validate DLC-classified complex behaviors. |

This document outlines the essential prerequisites and setup protocols for employing DeepLabCut (DLC) for markerless pose estimation in preclinical behavioral neuroscience, specifically within the context of a thesis investigating rodent behavior in the Open Field Test (OFT) and Elevated Plus Maze (EPM). These paradigms are critical for assessing anxiety-like behaviors, locomotor activity, and the efficacy of pharmacological interventions in drug development. Robust hardware, software, and data collection practices are fundamental to generating reliable, reproducible data for downstream analysis.

Hardware Prerequisites

Optimal hardware ensures efficient DLC model training and seamless video acquisition.

Table 1: Recommended Hardware Specifications

| Component | Minimum Specification | Recommended Specification | Function |
|---|---|---|---|
| Computer (Training/Inference) | CPU: 8-core modern, RAM: 16GB, GPU: NVIDIA with 4GB VRAM (CUDA compatible) | CPU: 12+ cores, RAM: 32GB+, GPU: NVIDIA RTX 3080/4090 with 8+ GB VRAM | Accelerates neural network training and video analysis. |
| Camera | HD (720p) webcam, 30 fps | High-resolution (1080p or 4K) machine vision camera (e.g., Basler, FLIR), 60-90 fps | Captures high-quality, consistent video data for accurate pose estimation. |
| Lighting | Consistent room lighting | Dedicated, diffuse IR or white light arrays (e.g., LED panels) | Eliminates shadows and ensures consistent contrast; IR enables dark phase recording. |
| Data Storage | 500 GB SSD | 2+ TB NVMe SSD (for active projects), Network-Attached Storage (NAS) for archiving | Fast storage for video files and model files; secure backup solution. |

Software & Environment Setup

A stable software stack is crucial for reproducibility.

- Operating System: Linux (Ubuntu 20.04/22.04 LTS) or Windows 10/11. Linux is often preferred for stability in high-performance computing.

- DeepLabCut Installation: The recommended method is via an Anaconda environment.

  - Create a new conda environment: `conda create -n dlc python=3.8`.
  - Activate it: `conda activate dlc`.
  - Install DLC: `pip install deeplabcut`.

- CUDA and cuDNN: For GPU support, install the NVIDIA CUDA Toolkit (v11.8 or 12.x) and the corresponding cuDNN libraries, matching the versions required by your DLC installation and its deep learning backend.

- Video Handling Software: Install FFmpeg for video file conversion and processing (`conda install -c conda-forge ffmpeg`).

Data Collection Best Practices Protocol

Consistent video acquisition is the most critical factor for successful DLC analysis.

Protocol 1: Standardized Video Recording for OFT and EPM

Objective: To capture high-fidelity, consistent video recordings of rodent behavior suitable for DLC pose estimation.

Materials:

- Behavioral apparatus (OFT arena, EPM).

- Recommended hardware as per Table 1.

- Calibration grid (checkerboard or similar).

- Tripod or fixed mounting system.

- Sound-attenuating chamber (optional but recommended).

Procedure:

- Apparatus Setup: Place the OFT or EPM in a dedicated, isolated room. Ensure the apparatus is clean and free of olfactory cues between subjects.

- Camera Mounting: Secure the camera directly above the OFT (top-down view) or at an elevated angle for the EPM to capture all arms. The entire apparatus must be in frame with minimal unused space.

- Lighting Calibration: Illuminate the arena uniformly. Eliminate glare, reflections, and sharp shadows. When a test is run in darkness or under dim light, use IR lighting with an IR-sensitive camera.

- Background Optimization: Use a high-contrast, solid-colored background (e.g., white arena on black background, or vice versa). Ensure it is non-reflective and consistent across all recordings.

- Spatial Calibration: Place a checkerboard grid in the arena plane and record a short video. This recording is used later, during analysis of the DLC output, to convert pixels to real-world measurements (cm).

- Video Settings: Set resolution to at least 1920x1080, frame rate to 60 fps. Use a lossless or high-quality codec (e.g., H.264). Ensure consistent settings for all subjects.

- Recording Session:

- Acclimate the animal to the testing room for ≥30 minutes.

- Start recording.

- Gently place the animal in the designated start location (center of OFT, center platform of EPM).

- Record the session (typically 5-10 minutes for EPM, 10-30 minutes for OFT).

- Remove the animal, stop recording.

- Clean the apparatus thoroughly with 70% ethanol before the next subject.

- File Management: Name video files systematically (e.g., `DrugGroup_AnimalID_Date_Task.avi`). Store raw videos in a secure, backed-up location.
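A systematic naming scheme pays off at analysis time because group labels can be recovered directly from the filename. The sketch below parses the `DrugGroup_AnimalID_Date_Task` pattern; the function name, the dictionary keys, and the example filename are hypothetical, and the approach assumes that no individual field contains an underscore.

```python
from pathlib import Path

def parse_video_name(filename):
    """Split 'DrugGroup_AnimalID_Date_Task.ext' into labeled fields."""
    stem = Path(filename).stem
    parts = stem.split("_")
    if len(parts) != 4:
        raise ValueError(f"Expected 4 underscore-separated fields, got: {stem}")
    keys = ("drug_group", "animal_id", "date", "task")
    return dict(zip(keys, parts))

meta = parse_video_name("Vehicle_M01_2024-01-15_OFT.avi")
print(meta)
```

Raising an error on malformed names, rather than guessing, catches mislabeled files before they are assigned to the wrong treatment group.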

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for DLC-mediated OFT/EPM Studies

| Item | Function & Relevance |
|---|---|
| DeepLabCut (Open-Source) | Core software for creating custom pose estimation models to track specific body parts (nose, ears, center, tail base, paws). |
| High-Contrast Animal Markers (Optional) | Small, non-toxic markers on fur can aid initial training data labeling for difficult-to-distinguish body parts. |
| EthoVision XT or Similar | Commercial benchmark software; can be used for complementary analysis or validation of DLC-derived tracking data. |
| Anaconda Python Distribution | Manages isolated software environments, preventing dependency conflicts and ensuring project reproducibility. |
| Jupyter Notebooks | Interactive environment for running DLC workflows, documenting analysis steps, and creating shareable reports. |
| Data Annotation Tools (DLC's GUI, COCO Annotator) | Used for manually labeling frames to generate the ground-truth training dataset for the DLC network. |
| Statistical Packages (Python: SciPy, statsmodels; R) | For performing inferential statistics on DLC-derived behavioral endpoints (e.g., time in open arms, distance traveled). |
| Anxiolytic/Anxiogenic Agents (e.g., Diazepam, FG-7142) | Pharmacological tools for validating the behavioral assay and DLC's sensitivity to drug-induced behavioral changes. |

Visualized Workflows

Diagram 1: DLC Workflow for Behavioral Analysis

Diagram 2: Experimental & Data Flow in a Pharmacological Study

Step-by-Step Protocol: Implementing DeepLabCut for OFT and EPM Analysis

Application Notes

Initializing a DeepLabCut (DLC) project is the foundational step for applying markerless pose estimation to behavioral neuroscience paradigms like the open field test (OFT) and elevated plus maze (EPM). These tests are central to preclinical research in anxiety, locomotor activity, and drug efficacy. Proper project configuration ensures reproducible, high-quality tracking of ethologically relevant body parts (e.g., nose, center of mass, base of tail for risk assessment in EPM). The selection of training frames, the definition of the body parts, and the settings in the project configuration file (`config.yaml`) directly impact downstream analysis metrics such as time in open arms, distance traveled, and thigmotaxis.

Protocols

Protocol 1: Creating a New DeepLabCut Project

Objective: To create a new DLC project for analyzing OFT and EPM videos.

Methodology:

- Environment Setup: Activate your DLC environment (e.g., `conda activate dlc`).

- Launch Python: Open a Python interactive session or Jupyter notebook.

- Import and Initialize: Use the `create_new_project` function.

- Output: The function returns the path to the project's configuration file (`config.yaml`). This file is the central hub for all subsequent steps.
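The project-creation step can be sketched as follows. This is a minimal example assuming DeepLabCut 2.x is installed. The project name, experimenter name, and the wrapper function `create_oft_epm_project` are hypothetical. The optional `dlc` argument exists only so the function can be exercised without DeepLabCut (for instance with a stand-in object in a test).

```python
def create_oft_epm_project(videos, working_directory, dlc=None):
    """Create a DLC project and return the path to its config.yaml."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    path_config = dlc.create_new_project(
        "OFT-EPM",        # hypothetical project name
        "researcher",     # hypothetical experimenter name
        [str(v) for v in videos],
        working_directory=str(working_directory),
        copy_videos=True,  # copy videos into the project instead of linking
    )
    return path_config


# Example call (paths are placeholders):
# path_config = create_oft_epm_project(["/data/oft_01.avi"], "/data/dlc_projects")
```

Keeping the returned `path_config` in a variable is convenient because later DLC calls take this path as their first argument.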

Protocol 2: Configuring the Project Configuration File (config.yaml)

Objective: To tailor the project settings for rodent OFT and EPM analysis.

Methodology:

- Open Configuration File: The `path_config` variable (the value returned by `create_new_project`) points to the `config.yaml` file. Open it in a text editor.

- Edit Critical Parameters:

- `bodyparts`: Define the anatomical points of interest.

- Save the File.
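The same edits can also be applied from Python instead of a text editor. The sketch below assumes DeepLabCut 2.x, whose `auxiliaryfunctions.edit_config` helper writes a dictionary of changes into `config.yaml`. The body part names and skeleton links are an illustrative choice, and `build_config_edits` is a hypothetical helper that only validates and assembles the dictionary.

```python
# Illustrative keypoint set for a top-down OFT/EPM view (an assumption)
BODYPARTS = ["snout", "leftear", "rightear", "center", "tail_base"]
SKELETON = [
    ["snout", "leftear"],
    ["snout", "rightear"],
    ["leftear", "center"],
    ["rightear", "center"],
    ["center", "tail_base"],
]


def build_config_edits(bodyparts, skeleton, numframes2pick=20):
    """Assemble config.yaml edits, checking that skeleton links use known body parts."""
    known = set(bodyparts)
    for start, end in skeleton:
        if start not in known or end not in known:
            raise ValueError(f"skeleton link uses unknown body part: {start}-{end}")
    return {
        "bodyparts": list(bodyparts),
        "skeleton": [list(link) for link in skeleton],
        "numframes2pick": numframes2pick,
        "dotsize": 12,
        "alphavalue": 0.7,
    }


def apply_config_edits(path_config, edits):
    """Write the edits into config.yaml (requires DeepLabCut)."""
    from deeplabcut.utils import auxiliaryfunctions

    auxiliaryfunctions.edit_config(path_config, edits)


edits = build_config_edits(BODYPARTS, SKELETON)
# apply_config_edits(path_config, edits)  # path_config comes from create_new_project
```

Editing programmatically leaves a record of the chosen parameters in the analysis script, which helps when a project has to be recreated.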

Protocol 3: Extracting and Labeling Training Frames

Objective: To create a ground-truth training dataset.

Methodology:

- Extract Frames:

- Label Frames Manually:

- Check Annotations:
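The three steps above map onto three DeepLabCut calls. The following is a minimal sketch assuming DeepLabCut 2.x. The wrapper name `prepare_training_frames` is hypothetical, and the optional `dlc` argument is only there so the sequence can be checked without DeepLabCut installed.

```python
def prepare_training_frames(path_config, dlc=None):
    """Extract frames, open the labeling GUI, then render labels for checking."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    # 1. Extract frames automatically; k-means picks visually diverse frames.
    dlc.extract_frames(path_config, mode="automatic", algo="kmeans", userfeedback=False)

    # 2. Label frames manually (opens the interactive labeling GUI).
    dlc.label_frames(path_config)

    # 3. Check annotations: writes images with the labels drawn on them.
    dlc.check_labels(path_config)
```

The number of frames extracted per video is taken from `numframes2pick` in `config.yaml`.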

Table 1: Recommended config.yaml Parameters for Rodent OFT/EPM Studies

| Parameter | Recommended Setting | Purpose & Rationale |

|---|---|---|

| `numframes2pick` | 20-30 per video | Balances training set diversity with manual labeling burden. |

| `bodyparts` | 5-8 keypoints (see Protocol 2) | Captures essential posture. Too many can reduce accuracy. |

| `skeleton` | Defined connections | Improves labeling consistency and visualization of posture. |

| `cropping` | Often True for EPM | Removes maze structure outside the central platform and arms to focus on animal. |

| `dotsize` | 12 | Display size for labels in the GUI. |

| `alphavalue` | 0.7 | Transparency of labels in the GUI. |

Table 2: Typical Video Specifications for Training Data

| Specification | Requirement | Reason |

|---|---|---|

| Resolution | ≥ 1280x720 px | Higher resolution improves keypoint detection accuracy. |

| Frame Rate | 30 fps | Standard rate captures natural rodent movement. |

| Lighting | Consistent, high contrast | Minimizes shadows and ensures clear animal silhouette. |

| Background | Static, untextured | Simplifies the learning problem for the neural network. |

| Video Format | .mp4, .avi | Widely compatible codecs (e.g., H.264). |

Visualizations

DLC Project Initialization Workflow

Key File Relationships in DLC Setup

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for DLC OFT/EPM Video Acquisition

| Item | Function in OFT/EPM Research |

|---|---|

| High-Definition USB/POE Camera | Captures high-resolution (≥720p), low-noise video of rodent behavior. A fixed, top-down mount is essential for OFT and EPM. |

| Infrared (IR) Light Array & IR-Pass Filter | Enables consistent lighting in dark/dim phases without disturbing rodents. The filter blocks visible light, allowing only IR illumination. |

| Behavioral Arena (OFT Box & EPM) | Standardized apparatuses. OFT: 40x40 cm to 100x100 cm open box. EPM: Two open and two closed arms elevated ~50 cm. |

| Sound-Attenuating Chamber | Isolates the experiment from external auditory and visual disturbances, reducing environmental stress confounds. |

| Video Acquisition Software | Software (e.g., Bonsai, EthoVision, OBS Studio) to record synchronized, timestamped videos directly to a defined file format (e.g., .mp4). |

| Calibration Grid/Ruler | Placed in the arena plane to convert pixel coordinates to real-world distances (cm) for accurate distance traveled measurement. |

| Dedicated GPU Workstation | (For training) A powerful NVIDIA GPU (e.g., RTX 3080/4090 or Tesla series) drastically reduces DeepLabCut model training time. |

Application Notes

For research employing DeepLabCut (DLC) to analyze rodent behavior in the Open Field Test (OFT) and Elevated Plus Maze (EPM), rigorous data preparation is foundational. This phase directly determines the accuracy and generalizability of the resulting pose estimation models. Key considerations include behavioral pharmacodynamics, environmental consistency, and downstream analytical goals.

Video Selection Criteria

Videos must capture the full behavioral repertoire elicited by the test. For drug development studies, this includes vehicle and treated cohorts across a dose-response range.

- OFT: Videos should capture locomotion (center vs. periphery), rearing, freezing, and grooming.

- EPM: Videos must clearly document open/closed arm entries, time in open arms, risk-assessment postures (stretched attend), and head dips.

Quantitative Video Metadata Requirements:

| Parameter | Open Field Test Specification | Elevated Plus Maze Specification | Rationale |

|---|---|---|---|

| Resolution | ≥ 1280x720 pixels | ≥ 1280x720 pixels | Ensures sufficient pixel information for keypoint detection. |

| Frame Rate | 30 fps | 30 fps | Adequate for capturing ambulatory and ethological behaviors. |

| Minimum Duration | 10 minutes | 5 minutes | Allows for behavioral expression post-habituation in OFT; sufficient for EPM exploration. |

| Lighting | Consistent, shadow-minimized | Consistent, shadow-minimized | Prevents artifacts and ensures consistent model performance. |

| Cohort Size (n) | ≥ 8 animals per treatment group | ≥ 8 animals per treatment group | Provides statistical power for detecting drug effects on behavior. |

| Camera Angle | Directly overhead | Directly overhead | Eliminates perspective distortion for accurate 2D pose estimation. |

Frame Extraction Strategy

Frame extraction aims to create a training dataset representative of all behavioral states and animal positions.

- Extraction Rate: For typical OFT/EPM studies, extracting frames from 5-20% of the available videos is sufficient. A higher percentage may be needed for complex pharmacological manipulations.

- Method: Start with random stratified sampling across videos and conditions. After initial training, additionally use DLC's `extract_outlier_frames` function (based on network prediction confidence).

- Goal: The final training set must include frames from all experimental groups (control vs. drug-treated) to avoid bias.
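Stratified sampling of videos across treatment groups can be sketched with the standard library alone. The example below assumes each video is already tagged with its group; the function name, the 20% fraction, and the file names are illustrative. It guarantees at least one video per group so no condition is left out of the training set.

```python
import random
from collections import defaultdict


def stratified_video_sample(video_groups, fraction=0.2, seed=0):
    """Pick roughly `fraction` of the videos from every group (at least one each).

    video_groups maps a video path to its experimental group label.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    by_group = defaultdict(list)
    for video, group in sorted(video_groups.items()):
        by_group[group].append(video)

    chosen = []
    for group in sorted(by_group):
        videos = by_group[group]
        k = max(1, round(len(videos) * fraction))
        chosen.extend(rng.sample(videos, k))
    return sorted(chosen)


# Hypothetical cohort: 10 videos in each of three groups
cohort = {
    f"{group}_{i:02d}.avi": group
    for group in ("Control", "DrugLow", "DrugHigh")
    for i in range(10)
}
print(stratified_video_sample(cohort, fraction=0.2, seed=1))
```

The selected videos can then be passed to DLC's frame extraction so that every group contributes frames.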

Labeling Strategy for OFT/EPM

Labeling defines what the model learns. A consistent, anatomically grounded strategy is critical.

- Core Body Parts: snout, left/right ear, neck (base of skull), chest (center of torso), tailbase.

- OFT-Specific: Additional points on the spine may be added for nuanced gait or rearing analysis.

- EPM-Specific: Ensure labels are visible and unambiguous when the animal is on both open and closed arms.

- Labeling Protocol: Multiple annotators should label the same subset of frames to establish and maintain inter-rater reliability (>95% agreement). Use a standardized anatomical guide.

Experimental Protocols

Protocol 1: Video Acquisition for Pharmacological OFT/EPM Studies

Objective: To record high-quality, consistent behavioral videos for DLC pose estimation in drug efficacy screening.

Materials: See "Scientist's Toolkit" below.

Procedure:

- Setup: Calibrate overhead camera(s) to capture the entire apparatus. Ensure uniform, diffuse lighting. Remove any reflective surfaces.

- Synchronization: Start video recording 60 seconds before animal introduction. Record a synchronization signal (e.g., LED flash) if multiple data streams are used.

- Trial Execution: For OFT, gently place the animal in the center. For EPM, place the animal in the central square, facing an open arm. Allow the trial to run for the prescribed duration (e.g., 10 min OFT, 5 min EPM).

- Post-Trial: Remove the animal and clean the apparatus with 70% ethanol between trials to remove odor cues.

- Data Management: Name video files systematically (e.g., `Drug_Dose_AnimalID_Date.avi`). Store raw videos in a secure, backed-up repository.

Protocol 2: Iterative Frame Extraction & Training Set Curation for DLC

Objective: To create a robust, balanced training set of frames for DLC model training.

Materials: DeepLabCut (v2.3+), High-performance computing workstation.

Procedure:

- Initial Sampling: From your video corpus, use DLC to randomly extract 50-100 frames per video, stratified across all experimental groups (e.g., Control, DrugLow, DrugHigh).

- Initial Labeling & Training: Label these frames completely. Train a preliminary DLC network for 50,000-100,000 iterations.

- Outlier Frame Extraction: Use the trained network to analyze all videos. Employ DLC's `extract_outlier_frames` function (based on p-cutoff) to identify frames where prediction confidence is low across the dataset.

- Augment Training Set: Add these outlier frames to your training set. Relabel them carefully.

- Refinement Loop: Retrain the model with the augmented set. Repeat the outlier extraction and augmentation steps until model performance plateaus (as measured by train/test error).
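One refinement round can be sketched as a sequence of DeepLabCut calls plus a simple stopping check. This assumes DeepLabCut 2.3 with the TensorFlow backend (where `train_network` accepts `maxiters`). The function names, the `p_bound` value, and the 0.25 px plateau tolerance are illustrative assumptions, and the `dlc` argument exists only to allow testing without DeepLabCut.

```python
def refinement_round(path_config, videos, shuffle=1, maxiters=100000, dlc=None):
    """Run one outlier-extraction, relabeling, and retraining cycle."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    dlc.analyze_videos(path_config, videos, shuffle=shuffle)
    # Flag frames whose keypoint likelihood falls below p_bound.
    dlc.extract_outlier_frames(
        path_config, videos, shuffle=shuffle,
        outlieralgorithm="uncertain", p_bound=0.01,
    )
    dlc.refine_labels(path_config)    # GUI: correct the predicted labels
    dlc.merge_datasets(path_config)   # fold corrected frames into the dataset
    dlc.create_training_dataset(path_config)
    dlc.train_network(path_config, shuffle=shuffle, maxiters=maxiters)
    dlc.evaluate_network(path_config, Shuffles=[shuffle])


def has_plateaued(test_errors_px, min_gain_px=0.25):
    """True when the latest round improved test error by less than min_gain_px."""
    if len(test_errors_px) < 2:
        return False
    return (test_errors_px[-2] - test_errors_px[-1]) < min_gain_px
```

In practice the test error from each evaluation would be appended to a list by hand or by script, and the loop stopped once `has_plateaued` returns `True`.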

Protocol 3: Multi-Annotator Reliability Assessment for Labeling

Objective: To ensure labeling consistency, a prerequisite for a reliable DLC model.

Materials: DLC project with initial frame set, 2-3 trained annotators.

Procedure:

- Selection: Randomly select 100 frames from the training set across all conditions.

- Independent Labeling: Have each annotator label the selected frames independently using the predefined anatomical guide.

- Calculation: Calculate the inter-annotator agreement (mean pixel distance between labels for the same body part across annotators) from each annotator's saved label files.

- Alignment: If agreement for any body part is >5 pixels (for HD video), review discrepancies as a team, refine the labeling guide, and relabel until high reliability is achieved.
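The agreement calculation is a mean Euclidean distance per body part. The sketch below works on plain dictionaries rather than DLC files. The data layout (a `(frame, bodypart)` key mapped to an `(x, y)` tuple, or `None` for an unlabeled point) and both function names are assumptions made for illustration.

```python
import math


def mean_label_distance(labels_a, labels_b):
    """Mean pixel distance per body part over points both annotators labeled."""
    sums, counts = {}, {}
    for key, point_a in labels_a.items():
        point_b = labels_b.get(key)
        if point_a is None or point_b is None:
            continue  # skip points that either annotator left unlabeled
        bodypart = key[1]
        sums[bodypart] = sums.get(bodypart, 0.0) + math.dist(point_a, point_b)
        counts[bodypart] = counts.get(bodypart, 0) + 1
    return {bodypart: sums[bodypart] / counts[bodypart] for bodypart in sums}


def parts_needing_review(mean_distances, threshold_px=5.0):
    """Body parts whose mean disagreement exceeds the threshold."""
    return sorted(bp for bp, dist in mean_distances.items() if dist > threshold_px)


# Hypothetical labels from two annotators on two frames
annotator_1 = {("f1", "snout"): (0, 0), ("f2", "snout"): (10, 10), ("f1", "center"): (5, 5)}
annotator_2 = {("f1", "snout"): (3, 4), ("f2", "snout"): (10, 10), ("f1", "center"): (5, 15)}

distances = mean_label_distance(annotator_1, annotator_2)
print(distances)
print(parts_needing_review(distances))
```

With more than two annotators, the same function can be applied to every pair and the results averaged.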

Visualization

DLC Workflow for OFT/EPM

OFT/EPM Video Selection Logic

The Scientist's Toolkit

| Item | Function in OFT/EPM-DLC Research |

|---|---|

| High-Definition USB Camera (e.g., Logitech Brio) | Provides ≥720p resolution video with consistent frame rate; essential for clear keypoint detection. |

| Diffuse LED Panel Lighting | Eliminates harsh shadows and flicker, ensuring uniform appearance of the animal across the apparatus. |

| Open Field Arena (40cm x 40cm x 40cm) | Standardized enclosure for assessing locomotor activity and anxiety-like behavior (thigmotaxis). |

| Elevated Plus Maze (Open/Closed Arms 30cm L x 5cm W) | Standard apparatus for unconditioned anxiety measurement based on open-arm avoidance. |

| 70% Ethanol Solution | Used for cleaning apparatus between trials to remove confounding olfactory cues. |

| DeepLabCut Software (v2.3+) | Open-source toolbox for markerless pose estimation of user-defined body parts. |

| High-Performance GPU Workstation | Accelerates the training of DeepLabCut models, reducing iteration time from days to hours. |

| Automated Video File Naming Script | Ensures consistent, informative metadata is embedded in the filename (Drug, Dose, AnimalID, Date). |

| Standardized Anatomical Labeling Guide | Visual document defining exact pixel location for each body part label (e.g., "snout tip") to ensure inter-rater reliability. |

Within the broader thesis on employing DeepLabCut (DLC) for automated behavioral analysis in rodent models of anxiety, specifically the Open Field Test (OFT) and Elevated Plus Maze (EPM), the efficiency and accuracy of the initial labeling process are paramount. This stage involves manually marking key body parts on a set of training frames to generate a ground-truth dataset. An optimized protocol for labeling body parts like the snout, center of mass, and tail base directly affects the performance of the resulting neural network and, in turn, the reliability of derived ethologically relevant metrics such as time in center, distance traveled, and risk-assessment behaviors.

Application Notes & Protocols

Protocol: Strategic Frame Selection for Labeling

Objective: To extract a representative set of training frames that maximizes model generalizability across diverse postures, lighting conditions, and viewpoints encountered in OFT and EPM experiments.

Methodology:

- Video Compilation: Concatenate short, representative video clips (e.g., 1-2 min each) from multiple experimental subjects across different treatment groups (e.g., vehicle vs. drug). Ensure coverage of all arena quadrants and maze arms.

- Frame Extraction with DLC: Use the `deeplabcut.extract_frames()` function with the `'kmeans'` clustering method. This algorithm selects frames based on visual similarity, ensuring diversity.

- Recommended Quantity: Extract 100-200 frames from the compiled video per camera view. For a typical single-view setup, 150 frames often provides a robust starting dataset.

- Manual Curation: Review extracted frames and add supplemental frames manually to capture edge-case poses (e.g., full rearing, tight turns, grooming) that may be underrepresented.

Protocol: Efficient Body Part Definition & Labeling Workflow

Objective: To consistently and accurately label defined body parts across hundreds of training images.

Methodology:

- Body Part List Definition: Define a consistent, hierarchical list of body parts. Start with core points critical for OFT/EPM analysis.

Table 1: Recommended Body Parts for Rodent OFT/EPM Analysis

| Body Part Name | Anatomical Definition | Primary Use in OFT/EPM |

|---|---|---|

| snout | Tip of the nose | Head direction, nose-poke exploration, entry into zone. |

| leftear | Center of the left pinna | Head direction, triangulation for head angle. |

| rightear | Center of the right pinna | Head direction, triangulation for head angle. |

| center | Midpoint of the torso, between scapulae | Calculation of locomotor activity (center point). |

| tail_base | Proximal start of the tail, at its junction with the sacrum | Body axis direction, distinction from tail movement. |

- Labeling Process:

a. Launch the DLC labeling GUI (`deeplabcut.label_frames()`).

b. Label body parts in a consistent order (e.g., snout → leftear → rightear → center → tail_base) to minimize errors.

c. Utilize the "Jump to Next Unlabeled Frame" shortcut (Ctrl + J) to speed navigation.

d. For occluded or ambiguous points (e.g., ear not visible), do not guess. Leave the point unlabeled; DLC can handle missing labels.

e. Employ the "Multi-Image Labeling" feature: label a point in one frame, then click across subsequent frames to propagate the label with fine-tuning.

- Quality Control: After initial labeling, use `deeplabcut.check_labels()` to visually inspect all labels for consistency and accuracy across frames.

Data Presentation

Table 2: Impact of Labeling Frame Count on DLC Model Performance in an EPM Study

| Training Frames Labeled | Number of Animals in Training Set | Final Model Test Error (pixels) | Resulting Accuracy for "Open Arm Time" (%) |

|---|---|---|---|

| 50 | 3 | 12.5 | 87.2 |

| 100 | 5 | 8.2 | 92.1 |

| 200 | 8 | 5.7 | 96.4 |

Note: Performance is also highly dependent on the representativeness of the labeled frames and the network architecture. Data are illustrative.

Visualization: Workflow & Pathway Diagrams

Title: DLC Labeling & Training Workflow

Title: DLC Model Training Pathway

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Efficient DLC Labeling in Behavioral Neuroscience

| Item / Solution | Function / Purpose |

|---|---|

| DeepLabCut Software Suite (v2.3+) | Open-source toolbox for markerless pose estimation based on transfer learning. |

| High-Resolution CCD Camera | Provides consistent, sharp video input under variable lighting (e.g., infrared for dark cycle). |

| Uniform Behavioral Arena | OFT and EPM with high-contrast, non-reflective surfaces to simplify background subtraction. |

| Dedicated GPU Workstation (e.g., with NVIDIA RTX card) | Accelerates the training of the deep neural network, reducing iteration time. |

| Standardized Animal Markers (optional) | Small, non-invasive fur marks can aid initial labeler training for subtle body parts. |

| Project Management Spreadsheet | Tracks labeled videos, frame counts, labelers, and model versions for reproducibility. |

Application Notes

Within a thesis investigating rodent behavioral phenotypes in the Open Field Test (OFT) and Elevated Plus Maze (EPM) using DeepLabCut (DLC), the network training phase is critical for translating raw video into quantifiable, ethologically relevant data. This phase bridges labeled data and robust pose estimation, directly impacting the validity of conclusions regarding anxiety-like behavior and locomotor activity in pharmacological studies. Proper configuration, training, and evaluation ensure the model generalizes across different lighting, animal coats, and apparatuses, which is paramount for high-throughput drug development pipelines.

Configuring Parameters for DLC Model Training

The configuration file (config.yaml) defines the project and training parameters. Key parameters include:

- Network Architecture: `resnet_50` is a common backbone, offering a balance of accuracy and speed. `mobilenet_v2_1.0` may be selected for faster inference.

- Training Iterations (`maxiters` in `train_network`): Typically set between 50,000 and 200,000. Too few iterations risk underfitting; too many risk overfitting.

- Batch Size (`batch_size`): Memory dependent. Common sizes are 1, 2, 4, or 8. Smaller batches can have a regularizing effect.

- Data Augmentation: Parameters like `rotation`, `cropping`, `flipping`, and `brightness` variation are essential for improving model robustness to real-world variability in EPM/OFT videos.

- Shuffling: The `shuffle` parameter (e.g., `shuffle=1`) determines which training/validation split is used, which is crucial for evaluating stability.

Table 1: Typical DLC Training Configuration for OFT/EPM Studies

| Parameter | Recommended Setting | Rationale for OFT/EPM Research |

|---|---|---|

| Network Backbone | ResNet-50 | Proven accuracy for pose estimation in rodents. |

| Initial Learning Rate | 0.001 | Default effective rate for Adam optimizer. |

| Number of Iterations | 100,000 - 200,000 | Sufficient for complex multi-animal scenes. |

| Batch Size | 4-8 | Balances GPU memory and gradient estimation. |

| Augmentation: Rotation | ± 15° | Accounts for variable animal orientation. |

| Augmentation: Flip (mirror) | Enabled | Exploits behavioral apparatus symmetry. |

| Training/Validation Split | 95/5 | Maximizes training data; validation monitors overfit. |

Training Protocol

Protocol: DeepLabCut Model Training for Behavioral Analysis

Objective: Train a convolutional neural network to reliably track user-defined body parts (e.g., nose, ears, center, tail base) in video data from OFT and EPM assays.

Materials:

- DeepLabCut software environment (Python, TensorFlow).

- Labeled dataset (created from extracted video frames).

- High-performance workstation with NVIDIA GPU (CUDA enabled).

- Configuration file (`config.yaml`).

Procedure:

- Project Setup: Ensure all labeled training datasets are in the project folder. Verify that the file paths in `config.yaml` are correct.

- Initiate Training: Open a Python session in the DLC environment and run the training command: `deeplabcut.train_network(config_path)`

- Monitor Training: The console displays the iteration number, training loss, and learning rate. DLC also saves model snapshots in the `dlc-models` directory.

- Evaluate While Training: Monitor the loss curve as training progresses (e.g., with TensorBoard, where logging is set up) and spot-check predictions by evaluating saved snapshots on held-out frames.

- Stop Criteria: Training can be stopped when the loss plateaus and the error on held-out frames remains stable and low (typically below 0.5-2 px, depending on resolution). Early stopping can prevent overfitting.
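The training step can be sketched as below, assuming DeepLabCut 2.3 with the TensorFlow backend, where `train_network` accepts `displayiters`, `saveiters`, and `maxiters`. The wrapper name and the specific iteration counts are illustrative, and the `dlc` argument is only a testing convenience.

```python
def train_oft_epm_model(path_config, shuffle=1, maxiters=100000, dlc=None):
    """Build the training dataset and train a ResNet-50 based network."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    # Creates the train/test split and the pose_cfg.yaml files for this shuffle.
    dlc.create_training_dataset(
        path_config, net_type="resnet_50", augmenter_type="imgaug"
    )
    dlc.train_network(
        path_config,
        shuffle=shuffle,
        displayiters=1000,   # print the loss every 1,000 iterations
        saveiters=10000,     # save a snapshot every 10,000 iterations
        maxiters=maxiters,   # stop after this many iterations
    )
```

Saving snapshots at regular intervals makes it possible to evaluate an earlier checkpoint if later ones show signs of overfitting.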

Evaluating Model Performance

Evaluation uses held-out data (the validation set) not seen during training.

Key Metrics:

- Mean Test Error (Pixel Error): Average Euclidean distance between network prediction and human labeler ground truth. The primary metric.

- Train Error: Should be close to but slightly lower than test error. A large gap indicates overfitting.

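- p-cutoff (Likelihood Threshold): Not a statistical p-value, but the likelihood threshold (`pcutoff` in `config.yaml`) below which a prediction is treated as unreliable. DLC reports train and test errors both for all predictions and for those above this threshold. Per-keypoint likelihoods above 0.99 indicate high network confidence.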

- Tracking Confidence: Per-frame likelihood score output by the network for each body part.

Protocol: Model Evaluation and Analysis

- Evaluate Network: Run `deeplabcut.evaluate_network(config_path, Shuffles=[shuffle])` after training completes. This generates the evaluation results.

- Analyze Videos: Apply the trained model to novel videos using `deeplabcut.analyze_videos(config_path, videos)`.

- Create Labeled Videos: Generate output videos with predicted body parts overlaid using `deeplabcut.create_labeled_video(config_path, videos)`.

- Plot Trajectories: Use `deeplabcut.plot_trajectories(config_path, videos)` to visualize animal paths in OFT or EPM.
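The four evaluation steps can be chained in one function. This is a minimal sketch assuming DeepLabCut 2.x; the wrapper name is hypothetical and the `dlc` argument is only there so the call order can be verified without DeepLabCut.

```python
def evaluate_and_analyze(path_config, videos, shuffle=1, dlc=None):
    """Evaluate the trained network, then analyze and visualize new videos."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    # Train/test pixel errors; plotting=True also saves labeled evaluation images.
    dlc.evaluate_network(path_config, Shuffles=[shuffle], plotting=True)

    # Per-frame x, y, likelihood for each body part (.h5 plus .csv copies).
    dlc.analyze_videos(path_config, videos, shuffle=shuffle, save_as_csv=True)

    # Visual checks: overlay videos and trajectory plots.
    dlc.create_labeled_video(path_config, videos, shuffle=shuffle)
    dlc.plot_trajectories(path_config, videos, shuffle=shuffle)
```

Reviewing a few labeled videos by eye before computing behavioral metrics is a cheap way to catch systematic tracking errors such as swapped ears.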

Table 2: Performance Benchmark for a Trained DLC Model (Example)

| Metric | Value | Interpretation |

|---|---|---|

| Number of Training Iterations | 150,000 | Sufficient for convergence. |

| Final Train Error (pixels) | 1.8 | Good model fit to training data. |

| Final Test Error (pixels) | 2.5 | Good generalization to unseen data. |

| Mean Likelihood | 0.999 | Excellent model confidence. |

| Frames per Second (Inference) | ~45 (on GPU) | Suitable for high-throughput analysis. |

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for DLC-Based OFT/EPM Behavioral Phenotyping

| Item | Function/Application |

|---|---|

| DeepLabCut (Open-Source Software) | Core platform for markerless pose estimation via transfer learning. |

| High-Resolution, High-FPS Camera | Captures fine-grained rodent movement (e.g., rearing, head dips in EPM). |

| Uniform Behavioral Apparatus Lighting | Minimizes shadows and contrast variations, simplifying model training. |

| GPU Workstation (NVIDIA, CUDA) | Accelerates model training and video analysis by orders of magnitude. |

| Automated Behavioral Arena (OFT/EPM) | Standardized environment for consistent video recording across trials. |

| Video Annotation Tool (DLC GUI) | Enables efficient manual labeling of body parts on extracted frames. |

| Data Analysis Pipeline (Python/R) | For post-processing DLC outputs into behavioral metrics (e.g., time in open arms, distance traveled). |

Visualizations

DLC Network Training & Evaluation Workflow

From Video to Behavioral Metrics via DLC

This document details the protocols for video analysis and trajectory extraction using pose estimation, a core methodological component of a broader thesis employing DeepLabCut (DLC) for behavioral phenotyping in rodent models of anxiety and locomotion. The thesis investigates the effects of novel pharmacological agents on behavior in the Open Field Test (OFT) and Elevated Plus Maze (EPM). Accurate, high-throughput generation of pose estimates from video data is the foundational step for quantifying exploratory behavior, anxiety-like states (e.g., time in center/open arms), and locomotor kinematics.

Core Principles & Current State

Modern pose estimation for neuroscience research leverages transfer learning with deep neural networks. Pre-trained models on large image datasets are fine-tuned on a relatively small set of user-labeled frames to accurately track user-defined body parts (keypoints) across thousands of video frames. DLC remains a predominant, open-source solution. Recent advancements emphasize the importance of model robustness (to lighting, occlusion), inference speed, and integration with downstream analysis pipelines for trajectory and kinematic derivation.

Table 1: Comparison of Key Pose Estimation Frameworks for Behavioral Science

| Framework | Key Strength | Typical Inference Speed (FPS)* | Best Suited For |

|---|---|---|---|

| DeepLabCut | Excellent balance of usability, accuracy, and active community. | 20-50 | Standard lab setups, multi-animal tracking, integration with scientific Python stack. |

| SLEAP | Top-tier accuracy for complex poses and multi-animal scenarios. | 10-30 | High-demand tracking tasks, social interactions, complex morphologies. |

| OpenPose | Real-time performance, strong for human pose. | >50 | Real-time applications, setups with high-end GPUs. |

| AlphaPose | High accuracy in crowded or occluded scenes. | 15-40 | Experiments with significant object occlusion. |

*Speed depends heavily on hardware (GPU), video resolution, and number of keypoints.

Experimental Protocol: Generating Pose Estimates with DeepLabCut

Protocol 3.1: Project Initialization & Configuration

- Installation: Create a dedicated Conda environment and install DeepLabCut (v2.3.8 or later).

- Project Creation: Use `dlc.create_new_project('ProjectName', 'ResearcherName', ['/path/to/video1.mp4', '/path/to/video2.mp4'])`.

- Define Keypoints: Strategically select body parts relevant to OFT/EPM (e.g., nose, ears, centroid, tail_base). For EPM, consider paw points for precise arm entry/exit detection.

Protocol 3.2: Data Labeling & Model Training

- Extract Frames: Extract frames from all videos across the dataset (`dlc.extract_frames`). Use the 'kmeans' method to ensure a diverse training set.

- Label Frames: Manually label the defined keypoints on ~200-500 extracted frames using the DLC GUI. Critical Step: Ensure consistency and accuracy.

- Create Training Dataset: Run `dlc.create_training_dataset` to generate the labeled dataset. Choose a robust network architecture (e.g., `resnet_50`, or `mobilenet_v2_1.0` for speed).

- Train Network: Configure the `pose_cfg.yaml` file (adjust iterations, batch size). Initiate training (`dlc.train_network`). Training typically runs for 200,000-500,000 iterations until the loss plateaus (monitor with TensorBoard).

Protocol 3.3: Video Analysis & Pose Estimation

- Evaluate Model: Use the DLC GUI to evaluate the trained model on a labeled set of frames it has never seen. Refine training if mean pixel error is unacceptable (>5-10 pixels for typical setups).

- Analyze Videos: Analyze all experimental videos using `dlc.analyze_videos` to generate pose estimates (output: `.h5` files containing X,Y coordinates and likelihood for each keypoint per frame).

- Create Labeled Videos: Generate labeled videos with trajectories (`dlc.create_labeled_video`) for visual verification of tracking accuracy.

Protocol 3.4: Data Curation & Trajectory Extraction

- Filter Predictions: Use `dlc.filterpredictions` (e.g., with a median or ARIMA filter) to smooth trajectories and bridge brief occlusions based on keypoint likelihood scores.

- Export Data: Export filtered data to CSV or MATLAB formats for downstream analysis.

- Extract Core Trajectories: Process coordinate data to generate primary trajectories (e.g., centroid movement) and derive secondary metrics:

- OFT: Total distance, velocity, time in center/periphery, thigmotaxis ratio.

- EPM: Entries into and time spent in open/closed arms, number of head dips, risk assessment postures.
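The filtering and export step can be sketched with DLC's `filterpredictions` using its median filter type. This assumes DeepLabCut 2.x; the wrapper name and the window length of 5 frames are illustrative, and the `dlc` argument is only a testing convenience.

```python
def filter_tracks(path_config, videos, shuffle=1, window_frames=5, dlc=None):
    """Median-filter the predicted trajectories and save CSV copies."""
    if dlc is None:
        import deeplabcut as dlc  # requires a working DeepLabCut installation

    dlc.filterpredictions(
        path_config,
        videos,
        shuffle=shuffle,
        filtertype="median",         # other options include "arima"
        windowlength=window_frames,  # odd number of frames in the filter window
        save_as_csv=True,            # write filtered coordinates as CSV
    )
```

The filtered CSV files contain the same columns as the raw output (x, y, likelihood per body part), so downstream metric scripts can read either version.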

Diagrams

Diagram 1: DLC Workflow for OFT/EPM Analysis

Diagram 2: From Pose to Behavioral Metrics

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for DLC-based Video Analysis

| Item | Function & Specification | Rationale for Use |

|---|---|---|

| High-Resolution Camera | CMOS or CCD camera with ≥ 60 FPS and 1080p resolution. Global shutter preferred. | Ensures clear, non-blurry frames for accurate keypoint detection, especially during fast movement. |

| Consistent Lighting System | IR or visible light panels providing uniform, shadow-free illumination. | Reduces video variability, a major source of model error. IR allows for night-phase behavior recording. |

| Behavioral Arena (OFT/EPM) | Standardized dimensions (e.g., 40cm x 40cm OFT; EPM arms 50cm long, 10cm wide). High-contrast coloring (white arena, black walls). | Ensures experimental consistency and facilitates zone definition for trajectory analysis. |

| Dedicated GPU Workstation | NVIDIA GPU (RTX 3070 or higher) with ≥ 8GB VRAM. | Dramatically accelerates model training (days to hours) and video analysis. |

| Data Storage Solution | Network-attached storage (NAS) or large-capacity SSDs (≥ 2TB). | Raw video files and associated data are extremely large and must be securely stored and backed up. |

| DeepLabCut Software Suite | Installed in a managed Python environment (Anaconda). | The core open-source platform for implementing the entire pose estimation pipeline. |

| Automated Analysis Scripts | Custom Python scripts for batch video processing, data filtering, and metric extraction. | Enables reproducible, high-throughput analysis of large experimental cohorts, crucial for drug studies. |

This application note details the post-processing pipeline for extracting validated behavioral metrics from raw coordinate data generated by DeepLabCut (DLC) in rodent models of anxiety and exploration, specifically the Open Field Test (OFT) and Elevated Plus Maze (EPM). Framed within a broader thesis on the application of machine learning-based pose estimation in neuropharmacology, this document provides standardized protocols for calculating velocity, zone occupancy, and dwell time, which are critical for assessing drug effects on locomotor activity and anxiety-like behavior.

Within the thesis context, DeepLabCut provides robust, markerless tracking of rodent position. However, raw (x, y) coordinates are not biologically meaningful endpoints. This document bridges that gap, defining the protocols to transform DLC outputs into quantifiable, publication-ready metrics that are the gold standard in preclinical psychopharmacology research.

Core Behavioral Metrics: Definitions and Calculations

Velocity and Movement Analysis

Velocity is a primary measure of general locomotor activity, essential for differentiating anxiolytic or sedative effects from stimulant properties in drug studies.

Protocol 1: Calculating Instantaneous Velocity

- Input: DLC output CSV file containing `x`, `y` coordinates and `likelihood` for a body point (e.g., center-of-mass) across n frames.

- Filtering: Apply a likelihood threshold (e.g., 0.95). Coordinates below threshold are interpolated.

- Pixel-to-cm Conversion: Use a known scale (e.g., maze dimensions) to derive a conversion factor.

- Calculation: For each frame i, compute the distance from frame i-1:

$$\text{distance\_cm}(i) = \sqrt{(x_i - x_{i-1})^2 + (y_i - y_{i-1})^2} \times \text{conversion\_factor}$$

$$\text{velocity\_cm/s}(i) = \text{distance\_cm}(i) \times \text{framerate}$$

- Smoothing: Apply a rolling median or Savitzky-Golay filter to reduce digitization noise.

- Output Metrics: Mean velocity (entire session), distance traveled (sum of all distances), and mobility/immobility bouts.
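The velocity protocol can be sketched in plain Python. The example below assumes the coordinates and likelihoods have already been read into lists. The function names, the linear interpolation of low-confidence frames, and the sample values (0.1 cm per pixel, 30 fps) are illustrative assumptions. A rolling median stands in for the smoothing step.

```python
import math
import statistics


def interpolate_low_confidence(xs, ys, likelihoods, threshold=0.95):
    """Linearly interpolate coordinates whose likelihood is below threshold."""
    good = [i for i, p in enumerate(likelihoods) if p >= threshold]
    if not good:
        raise ValueError("no frames above the likelihood threshold")
    out_x, out_y = list(xs), list(ys)
    for i, p in enumerate(likelihoods):
        if p >= threshold:
            continue
        prev = max((g for g in good if g < i), default=None)
        nxt = min((g for g in good if g > i), default=None)
        if prev is None:      # gap at the start: hold the first good value
            out_x[i], out_y[i] = xs[nxt], ys[nxt]
        elif nxt is None:     # gap at the end: hold the last good value
            out_x[i], out_y[i] = xs[prev], ys[prev]
        else:
            w = (i - prev) / (nxt - prev)
            out_x[i] = xs[prev] + w * (xs[nxt] - xs[prev])
            out_y[i] = ys[prev] + w * (ys[nxt] - ys[prev])
    return out_x, out_y


def velocity_cm_per_s(xs, ys, cm_per_pixel, framerate):
    """Frame-to-frame speed: pixel distance * conversion factor * frame rate."""
    return [
        math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) * cm_per_pixel * framerate
        for i in range(1, len(xs))
    ]


def rolling_median(values, window=5):
    """Centered rolling median; the window shrinks at the edges."""
    half = window // 2
    return [
        statistics.median(values[max(0, i - half): i + half + 1])
        for i in range(len(values))
    ]


# Hypothetical track: frame 2 is a low-confidence jump that gets interpolated
xs, ys = [0, 3, 100, 9, 12], [0, 4, 100, 12, 16]
likelihood = [1.0, 1.0, 0.1, 1.0, 1.0]

fx, fy = interpolate_low_confidence(xs, ys, likelihood)
speed = velocity_cm_per_s(fx, fy, cm_per_pixel=0.1, framerate=30)
smooth = rolling_median(speed, window=3)
total_distance_cm = sum(speed) / 30  # sum of per-frame distances
print(speed, total_distance_cm)
```

Interpolating before differencing matters: a single mis-tracked frame would otherwise add two large spurious jumps to the distance traveled.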

Table 1: Representative Velocity Data in Vehicle-Treated C57BL/6J Mice

| Metric | Open Field Test (10 min) | Elevated Plus Maze (5 min) |

|---|---|---|

| Total Distance Traveled (m) | 25.4 ± 3.1 | 8.7 ± 1.2 |

| Mean Velocity (cm/s) | 4.2 ± 0.5 | 2.9 ± 0.4 |

| % Time Mobile (>2 cm/s) | 62.5 ± 5.3 | 48.1 ± 6.7 |
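The "% Time Mobile" row and the mobility bouts mentioned in Protocol 1 can be derived from a per-frame speed trace as sketched below. The 2 cm/s threshold comes from the table; the minimum bout duration and all function and parameter names are assumptions chosen for illustration.

```python
import numpy as np

def mobility_summary(speed_cm_s, fps, threshold=2.0, min_bout_s=0.5):
    """Percent of frames above threshold and the list of mobility bouts.

    Bouts are returned as (start_frame, end_frame_exclusive) pairs.
    min_bout_s is an assumed minimum duration, not a value from the text.
    """
    speed = np.asarray(speed_cm_s, dtype=float)
    mobile = speed > threshold
    pct_mobile = 100.0 * float(mobile.mean())

    # Pad with False so every run of True has a rising and a falling edge.
    padded = np.concatenate([[False], mobile, [False]]).astype(int)
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)

    min_frames = int(round(min_bout_s * fps))
    bouts = [(int(s), int(e)) for s, e in zip(starts, ends)
             if e - s >= min_frames]
    return pct_mobile, bouts
```

Immobility bouts follow from the same function applied to the inverted mask, or from the gaps between consecutive mobility bouts.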

Zone Definition and Dwell Time

Anxiety-like behavior is inferred from spatial preference for "safe" vs. "aversive" zones.

Protocol 2: Defining Zones and Calculating Dwell Time & Entries

- Zone Definition (OFT):

- Center Zone: A user-defined central area (typically 25-50% of total arena area).

- Periphery: The remaining area, adjacent to walls.

- Zone Definition (EPM):

- Open Arms: The two exposed arms without walls.

- Closed Arms: The two arms enclosed by high walls.

- Center Square: The intersection area.

- Logical Assignment: For each video frame, determine if the animal's coordinate lies within a defined polygon for each zone.

- Dwell Time Calculation: Sum the time (frames) spent in each zone.

- Arm/Zone Entry Criteria: Define an entry as the body center point crossing into a zone, which approximates more than 50% of the body length being inside it. A minimum exit distance (e.g., 2 cm) should be enforced so that small oscillations at a border are not counted as repeated entries.

- Output Metrics: % Time in each zone, number of entries into each zone.
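For the OFT case, Protocol 2 can be sketched as follows. The example assumes a square arena with its origin at one corner, coordinates already converted to cm, and a concentric square center zone; the arena size, function name, and parameter names are illustrative assumptions. The exit margin implements the minimum exit distance as hysteresis: the animal enters when the body center crosses the zone border, but is only considered to have left once it is more than `margin_cm` outside it.

```python
import numpy as np

def center_zone_stats(x_cm, y_cm, fps, arena_cm=40.0,
                      center_frac=0.25, margin_cm=2.0):
    """Dwell time and entry count for a concentric square center zone.

    Hypothetical helper; an animal already in the zone at frame 0
    is counted as one entry.
    """
    x = np.asarray(x_cm, dtype=float)
    y = np.asarray(y_cm, dtype=float)

    # Side length of a square covering center_frac of the arena area.
    side = arena_cm * np.sqrt(center_frac)
    lo = (arena_cm - side) / 2.0
    hi = lo + side

    def inside(margin):
        return ((x >= lo - margin) & (x <= hi + margin) &
                (y >= lo - margin) & (y <= hi + margin))

    strict = inside(0.0)       # border used to register an entry
    loose = inside(margin_cm)  # enlarged border used to register an exit

    in_zone = np.zeros(x.size, dtype=bool)
    occupied = False
    entries = 0
    for i in range(x.size):
        if not occupied and strict[i]:
            occupied = True
            entries += 1
        elif occupied and not loose[i]:
            occupied = False
        in_zone[i] = occupied

    return {
        "pct_time": 100.0 * float(in_zone.mean()),
        "time_s": float(in_zone.sum()) / fps,
        "entries": entries,
    }
```

Non-rectangular zones such as EPM arms drawn on a tilted camera view would need a point-in-polygon test in place of the rectangle check, but the entry and exit logic stays the same.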

Table 2: Key Anxiety-Related Metrics in EPM for Drug Screening

| Metric | Vehicle Control | Anxiolytic (Diazepam 1 mg/kg) | Anxiogenic (FG-7142 10 mg/kg) |

|---|---|---|---|

| % Time in Open Arms | 15.2 ± 4.1 | 32.8 ± 6.5* | 5.3 ± 2.1* |

| Open Arm Entries | 6.5 ± 1.8 | 12.1 ± 2.4* | 2.8 ± 1.2* |

| Open/Total Arm Entries Ratio | 0.25 ± 0.06 | 0.42 ± 0.08* | 0.12 ± 0.05* |

| Total Arm Entries | 26.0 ± 3.5 | 28.5 ± 4.2 | 21.4 ± 5.1 |

*Significantly different from vehicle control (p < 0.05). Values are simulated for illustration.
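The metrics in this table can be computed from a per-frame zone label, as in the sketch below. It assumes each frame has already been assigned one of the labels "open", "closed", or "center" (with any border debouncing applied beforehand); the label strings and the function name are assumptions for illustration. An entry is counted each time a new run of consecutive frames in an arm begins.

```python
from itertools import groupby

def epm_summary(zone_per_frame, fps):
    """EPM summary metrics from per-frame zone labels.

    Hypothetical helper: expects labels 'open', 'closed', or 'center'.
    """
    labels = list(zone_per_frame)
    n = len(labels)
    if n == 0:
        raise ValueError("no frames supplied")

    # Collapse consecutive identical labels into a sequence of visits.
    visits = [zone for zone, _ in groupby(labels)]
    open_entries = visits.count("open")
    closed_entries = visits.count("closed")
    total_entries = open_entries + closed_entries

    return {
        "pct_time_open": 100.0 * labels.count("open") / n,
        "time_open_s": labels.count("open") / fps,
        "open_entries": open_entries,
        "total_arm_entries": total_entries,
        "open_ratio": (open_entries / total_entries
                       if total_entries else float("nan")),
    }
```

Because the ratio is computed per animal, the group mean of the ratios need not equal the ratio of the group means for entries.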

Integrated Workflow: From Video to Metrics

Diagram Title: Workflow: Video to Behavioral Metrics

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Materials for OFT/EPM Behavioral Analysis

| Item | Function & Rationale |

|---|---|

| DeepLabCut Software Suite | Open-source toolbox for markerless pose estimation. Generates the foundational (x,y) coordinate data. |

| Custom Python/R Analysis Scripts | For implementing protocols for velocity calculation, zone assignment, and dwell time summarization. |

| High-Contrast Testing Arena | OFT: White floor with dark walls, or vice versa. EPM: Matte white paint for open arms, black for closed arms. Enhances DLC tracking accuracy. |

| Calibration Grid/Ruler | Placed in the arena plane prior to experiments to establish pixel-to-centimeter conversion factor. |

| Video Recording System | High-definition (≥1080p), high-frame-rate (≥30 fps) camera mounted directly above apparatus for a planar view. |

| EthoVision XT or Similar | Commercial software providing a benchmark and validation tool for custom DLC post-processing pipelines. |

| Data Validation Dataset | A manually annotated set of videos (e.g., using BORIS) to verify the accuracy of the automated DLC→metrics pipeline. |

Advanced Protocol: Integrated Analysis for Drug Development

Protocol 3: Multi-Experiment Phenotypic Profiling

This protocol contextualizes OFT and EPM within a broader screening battery.

Diagram Title: Drug Screening Logic: OFT & EPM Integration

Procedure:

- Administer test compound or vehicle to rodent subjects (n ≥ 8/group).

- Conduct OFT (e.g., 10 min), followed by EPM (e.g., 5 min) after a suitable inter-test interval.

- Process videos through the DLC and post-processing pipeline (Protocols 1 & 2).

- Apply integrated decision logic (see diagram):

- Path A (Anxiolytic Candidate): Significant increase in EPM open arm time AND OFT center time, with no significant change in total distance traveled (rules out locomotor confounds).

- Path B (Locomotor Effect): Significant change in OFT total distance. Requires follow-up tests to distinguish stimulant vs. sedative properties.

- Path C (Anxiogenic/Disruptive): Significant decrease in EPM open arm time and/or OFT center time.

- Generate a compound profile table for lead prioritization.
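The decision logic in step 4 can be written as a small function. The sketch assumes each metric has already been tested against vehicle and reduced to "up", "down", or "ns" (not significant). The procedure does not state which path wins when several conditions hold, so checking the locomotor confound first is an assumption made here, as are the function name and the return strings.

```python
def classify_compound(open_arm_time, center_time, total_distance):
    """Map significance directions onto Paths A, B, or C.

    Each argument is 'up', 'down', or 'ns' relative to vehicle.
    Path precedence (B before A and C) is an illustrative assumption.
    """
    allowed = {"up", "down", "ns"}
    for value in (open_arm_time, center_time, total_distance):
        if value not in allowed:
            raise ValueError(f"expected one of {sorted(allowed)}, got {value!r}")

    # Any change in total distance confounds the anxiety readouts.
    if total_distance != "ns":
        return "B: locomotor effect"
    if open_arm_time == "up" and center_time == "up":
        return "A: anxiolytic candidate"
    if open_arm_time == "down" or center_time == "down":
        return "C: anxiogenic/disruptive"
    return "no clear profile"
```

Applying this function to every compound in a screen yields one column of the compound profile table.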

The transformation of raw DLC coordinates into standardized behavioral metrics is a critical, non-trivial step in modern computational ethology. The protocols and frameworks provided here ensure that data derived from open-source pose estimation tools meet the rigorous, interpretable standards required for preclinical drug development and behavioral neuroscience research within the OFT and EPM paradigms.

Solving Real-World Challenges: Optimizing DeepLabCut for Robust and Reliable Results

DeepLabCut (DLC) has become a cornerstone tool for markerless pose estimation in preclinical behavioral neuroscience, particularly in Open Field Test (OFT) and Elevated Plus Maze (EPM) paradigms. These tests are critical for assessing anxiety-like behaviors, locomotion, and the efficacy of novel pharmacological agents in rodent models. The reliability of conclusions drawn from DLC analysis is entirely contingent on the quality of the trained neural network. This application note details protocols to identify and mitigate the most common training pitfalls—overfitting, poor generalization, and labeling errors—within the specific context of OFT and EPM research.

Pitfall 1: Overfitting and Protocols for Detection & Mitigation

Overfitting occurs when a model learns the noise and specific details of the training dataset to the extent that it performs poorly on new, unseen data. In OFT/EPM studies, this manifests as high accuracy on training frames but failure to reliably track animals from different cohorts, under different lighting, or with subtle physical variations.

Quantitative Indicators of Overfitting

Table 1: Key Metrics for Diagnosing Overfitting in DLC Models

| Metric | Well-Fitted Model | Overfit Model | Measurement Protocol |

|---|---|---|---|

| Train Error (pixels) | Low and stable (e.g., 2-5 px) | Extremely low (e.g., <1 px) | Reported by DLC after evaluate_network. |

| Test Error (pixels) | Comparable to Train Error (e.g., 3-6 px) | Significantly higher than Train Error (e.g., 10+ px) | Error on the held-out test set from evaluate_network. |

| Validation Loss Plot | Decreases then plateaus. | Plateaus or rises while train loss keeps falling. | Plot from DLC's plot_utils. |

| Generalization to New Videos | High tracking accuracy. | Frequent label swaps, loss of tracking, jitter. | Manual inspection of sample predictions on novel data. |
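The train/test comparison in the first two rows can be turned into a simple automated check. The sketch below flags a model when the test error is several times the train error; the ratio threshold of 3 is an assumption picked so that the example ranges in the table fall on the expected sides, not an established cutoff.

```python
def diagnose_fit(train_err_px, test_err_px, max_ratio=3.0):
    """Compare train and test pixel errors from network evaluation.

    Hypothetical helper; max_ratio is an illustrative threshold.
    """
    if train_err_px < 0 or test_err_px < 0:
        raise ValueError("errors must be non-negative")
    ratio = test_err_px / train_err_px if train_err_px > 0 else float("inf")
    return {
        "gap_px": test_err_px - train_err_px,
        "ratio": ratio,
        "overfit": ratio > max_ratio,
    }
```

A check like this complements, but does not replace, manual inspection of predictions on novel videos.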

Experimental Protocol: Creating a Robust Training Set to Prevent Overfitting

Objective: To assemble a training dataset that maximizes variability and prevents the network from memorizing artifacts.

- Video Sourcing: Collect videos from multiple experimental cohorts, treatment groups (vehicle vs. drug), and days.

- Frame Extraction: Use DLC's extract_outlier_frames function (based on network predictions) to sample challenging frames from a preliminary model, in addition to random frame selection from all source videos.

- Diversity Criteria: Ensure extracted frames represent:

- Animal Variability: Different animals, coat colors, sizes.

- Environmental Variability: Lighting gradients, time of day, minor setup variations.

- Behavioral Variability: All key postures (rearing, grooming, stretched-attend postures in EPM, center vs. periphery in OFT).

- Data Partitioning: Adhere to an 80/10/10 split for training/validation/test sets, ensuring no animal appears in more than one set.
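The partitioning rule, that no animal appears in more than one set, can be enforced by splitting at the level of animals instead of frames. The sketch below is independent of DLC's own split mechanism (the TrainingFraction setting in config.yaml) and assumes a hypothetical mapping from frame name to animal ID. Because whole animals are assigned, the frame-level proportions only approximate 80/10/10.

```python
import random

def split_by_animal(frame_to_animal, fractions=(0.8, 0.1, 0.1), seed=0):
    """Assign frames to train/val/test so that each animal is in one set.

    frame_to_animal: dict mapping frame name -> animal ID (assumed input).
    """
    animals = sorted(set(frame_to_animal.values()))
    random.Random(seed).shuffle(animals)

    n_train = round(fractions[0] * len(animals))
    n_val = round(fractions[1] * len(animals))
    assignment = {}
    for i, animal in enumerate(animals):
        if i < n_train:
            assignment[animal] = "train"
        elif i < n_train + n_val:
            assignment[animal] = "val"
        else:
            assignment[animal] = "test"

    split = {"train": [], "val": [], "test": []}
    for frame, animal in frame_to_animal.items():
        split[assignment[animal]].append(frame)
    return split
```

With very few animals the validation or test set can end up empty, so the resulting set sizes should be checked before training.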

Diagram 1: Workflow to prevent overfitting in DLC.

Pitfall 2: Poor Generalization and Protocols for Assessment

Poor generalization is the failure of a model to perform accurately on data from a distribution different from the training set. For drug development, this is critical: a model trained only on saline-treated rats may fail on drug-treated animals exhibiting novel motor patterns.

Protocol: Systematic Generalization Test

Objective: Quantify model performance across systematic experimental variations.

- Create a Test Battery: Record short (2-5 min) videos of a new animal under controlled variations:

- Test A: Standard conditions (identical to training).

- Test B: Altered lighting (e.g., 20% brighter/dimmer).

- Test C: Novel arena object (e.g., a small block in OFT).

- Test D: Animal from a different genetic background or treatment group.

- Analyze with analyze_videos and then create_labeled_video.

- Quantify: Calculate mean prediction confidence (likelihood) and manually score error rate (e.g., number of frame errors per minute) for each test condition.

Table 2: Generalization Test Results Example

| Test Condition | Mean Likelihood | Error Rate (errors/min) | Pass/Fail |

|---|---|---|---|

| Standard (A) | 0.98 | 0.2 | Pass |

| Altered Lighting (B) | 0.95 | 0.8 | Pass |

| Novel Object (C) | 0.65 | 5.1 | Fail |

| Different Strain (D) | 0.71 | 3.8 | Fail |
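A pass/fail column like the one above can be produced with a short scoring function. The thresholds used here (mean likelihood of at least 0.9 and at most 1 error per minute) are assumptions chosen to be consistent with the example rows; the source does not state the criteria, so each lab should set its own.

```python
from statistics import fmean

def score_condition(likelihoods, n_errors, duration_min,
                    min_likelihood=0.9, max_errors_per_min=1.0):
    """Summarize one generalization test condition.

    likelihoods: per-frame DLC likelihood values for a keypoint.
    n_errors: manually counted tracking errors in the video.
    Thresholds are illustrative assumptions.
    """
    if duration_min <= 0:
        raise ValueError("duration_min must be positive")
    mean_p = fmean(likelihoods)
    rate = n_errors / duration_min
    return {
        "mean_likelihood": mean_p,
        "errors_per_min": rate,
        "pass": mean_p >= min_likelihood and rate <= max_errors_per_min,
    }
```

Conditions that fail indicate which kinds of videos should contribute additional labeled frames to the next training round.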

Pitfall 3: Labeling Errors and Protocols for Correction

Inconsistent or inaccurate labeling is the most pernicious error, leading to biased and irreproducible models. For EPM, inconsistent placement of the body-center keypoint shifts the moment at which the animal appears to cross the center-zone boundary, which can directly corrupt the primary measure (time in open arms).

Protocol: Iterative Refinement and Consensus Labeling

Objective: Generate a gold-standard labeled dataset.

- Initial Labeling: Label the extracted frames following a strict, documented protocol (e.g., "nose point is the tip of the snout, not the base").

- Train Initial Model: Train a preliminary model for a limited number of iterations (e.g., 50k).

- Extract Outliers: Use extract_outlier_frames to find frames where the predictions are uncertain or jump implausibly between consecutive frames.

- Consensus Review: Have two independent researchers relabel the outlier frames. Resolve discrepancies by joint review or a third expert.

- Iterate: Refine the labels, merge them into the dataset, and retrain. Repeat steps 3-5 until performance plateaus.
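The consensus review step can be supported by an automatic comparison of the two researchers' labels, so that only frames with real disagreement go to joint review. The sketch assumes both label sets have been loaded into arrays of shape (frames, keypoints, 2) in pixels, with NaN marking an unlabeled keypoint; the 5-pixel tolerance and the function name are assumptions.

```python
import numpy as np

def label_disagreements(labels_a, labels_b, tol_px=5.0):
    """Per-keypoint distance between two labelers and frames needing review.

    labels_*: arrays of shape (n_frames, n_keypoints, 2); NaN = not labeled.
    tol_px is an illustrative tolerance.
    """
    a = np.asarray(labels_a, dtype=float)
    b = np.asarray(labels_b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("label arrays must have the same shape")

    # Euclidean distance per keypoint; NaN where either label is missing.
    dist = np.linalg.norm(a - b, axis=-1)

    # A keypoint labeled by only one researcher also counts as a discrepancy.
    one_sided = np.isnan(a).any(axis=-1) ^ np.isnan(b).any(axis=-1)

    flagged = (dist > tol_px) | one_sided
    frames_to_review = np.flatnonzero(flagged.any(axis=1))
    return dist, frames_to_review
```

The mean of the returned distances over frames where both researchers labeled a keypoint gives a rough inter-rater error against which the model's test error can be compared.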

Diagram 2: Iterative labeling refinement protocol.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for Robust DLC-Based OFT/EPM Studies

| Item / Solution | Function & Rationale |

|---|---|

| DeepLabCut (v2.3+) | Core software for markerless pose estimation. Essential for defining keypoints (nose, paws, base of tail) relevant to OFT/EPM behavioral quantification. |

| Standardized OFT/EPM Arenas | Consistent physical dimensions, material, and color (often white for contrast). Critical for reducing environmental variance that harms generalization. |

| Controlled, Indirect Lighting System | Eliminates sharp shadows and glare, which are major sources of visual noise and labeling ambiguity. |

| High-Resolution, High-FPS Camera | Provides clear spatial and temporal resolution for precise labeling of fast-moving body parts during rearing or exploration. |

| Video Synchronization Software | Enables multi-view recording or synchronization with physiological data, enriching downstream analysis. |

| Automated Behavioral Analysis Pipeline (e.g., BENTO, SimBA) | Used downstream of DLC for classifying poses into discrete behaviors (e.g., open arm entry, grooming bout). |

| Statistical Software (Python/R) | For analyzing derived metrics (distance traveled, time in center, arm entries) and performing group comparisons relevant to drug efficacy. |

1. Introduction and Thesis Context

Within the broader thesis of employing DeepLabCut (DLC) for automated behavioral analysis in rodent models, specifically the Open Field Test (OFT) for general locomotion/anxiety and the Elevated Plus Maze (EPM) for anxiety-like behavior, a paramount challenge is ensuring robustness under real-world experimental variability. Key confounds include fluctuating lighting, partial animal occlusions, and interactions between multiple animals. This Application Note details protocols and optimization strategies to mitigate these issues, ensuring reliable, high-throughput data for preclinical research in neuroscience and drug development.

2. Data Presentation: Impact of Variable Conditions on DLC Performance

Table 1: Quantitative Effects of Common Variable Conditions on DLC Pose Estimation Accuracy (Summarized from Recent Literature)

| Variable Condition | Typical Metric Impacted | Reported Performance Drop (vs. Ideal) | Mitigation Strategy |

|---|---|---|---|

| Sudden Lighting Change | Mean Pixel Error (MPE) | Increase of 15-25% | Data augmentation, multi-condition training. |

| Progressive Occlusion (e.g., by maze wall) | Likelihood (confidence score) of keypoint | Drop to <0.8 for >50% occlusion | Multi-animal configuration, occlusion augmentation. |

| Multiple Animals (Identity Swap) | Identity Swap Count per session | 5-20 swaps in 10-min video | Use identity mode in DLC, unique markers. |