DeepLabCut: The Open-Source Pose Estimation Toolbox Transforming Behavioral Research in Neuroscience & Drug Development


Abstract

This comprehensive guide explores DeepLabCut (DLC), the leading open-source toolbox for markerless pose estimation. Designed for researchers, scientists, and drug development professionals, it provides foundational knowledge, a step-by-step methodology for implementation, advanced troubleshooting and optimization techniques, and a critical analysis of validation and comparative performance. This article empowers scientists to harness DLC's capabilities to quantify animal behavior with unprecedented precision, accelerating translational neuroscience and pre-clinical drug discovery.

What is DeepLabCut? A Foundational Guide to Markerless Pose Estimation for Researchers

The quantification of behavior through precise pose estimation is fundamental to neuroscience, biomechanics, and pre-clinical drug development. Traditional methods, reliant on physical markers, present significant limitations in throughput, animal welfare, and experimental scope. This whitepaper, framed within the context of broader research on the open-source DeepLabCut (DLC) toolbox, details how deep learning-based markerless tracking represents a paradigm shift. We provide a technical comparison, detailed experimental protocols, and essential resources to empower researchers in adopting this transformative technology.

The Limitations of Traditional Marker-Based Tracking

Traditional methods require the attachment of physical markers (reflective, colored, or LED) to subjects. This introduces experimental confounds and logistical barriers.

Table 1: Quantitative Comparison of Tracking Methodologies

| Parameter | Traditional Marker-Based | DeepLabCut (Markerless) |
|---|---|---|
| Setup Time per Subject | 10-45 minutes | < 5 minutes (after model training) |
| Subject Invasiveness/Stress | High (shaving, gluing, surgical attachment) | None to minimal (handling only) |
| Behavioral Artifacts | High risk (marker weight, restricted movement) | Negligible (nothing attached to the animal) |
| Hardware Cost (beyond camera) | High (specialized IR/LED systems, emitters) | Low (standard consumer-grade cameras) |
| Re-tagging Required | Frequently (due to loss/obscuration) | Not applicable (no physical markers) |
| Scalability (# of tracked points) | Low (typically <10) | Very high (50+ body parts feasible) |
| Generalization to New Contexts | Poor (markers may be obscured) | High (with representative training data) |
| Keypoint Accuracy (pixel error) | Variable; prone to marker drift | ~2-5 px (human); ~3-10 px (animal models); depends on image resolution and labeling quality |
| Throughput for Large Cohorts | Low | High |

DeepLabCut: Core Technical Principles

DLC uses transfer learning with deep neural networks (e.g., ResNet, MobileNetV2, or EfficientNet backbones) to estimate pose in video data. A user labels a small set of frames (~100-200), which are used to fine-tune a pre-trained network so that it detects user-defined body parts in new videos with high accuracy and robustness.

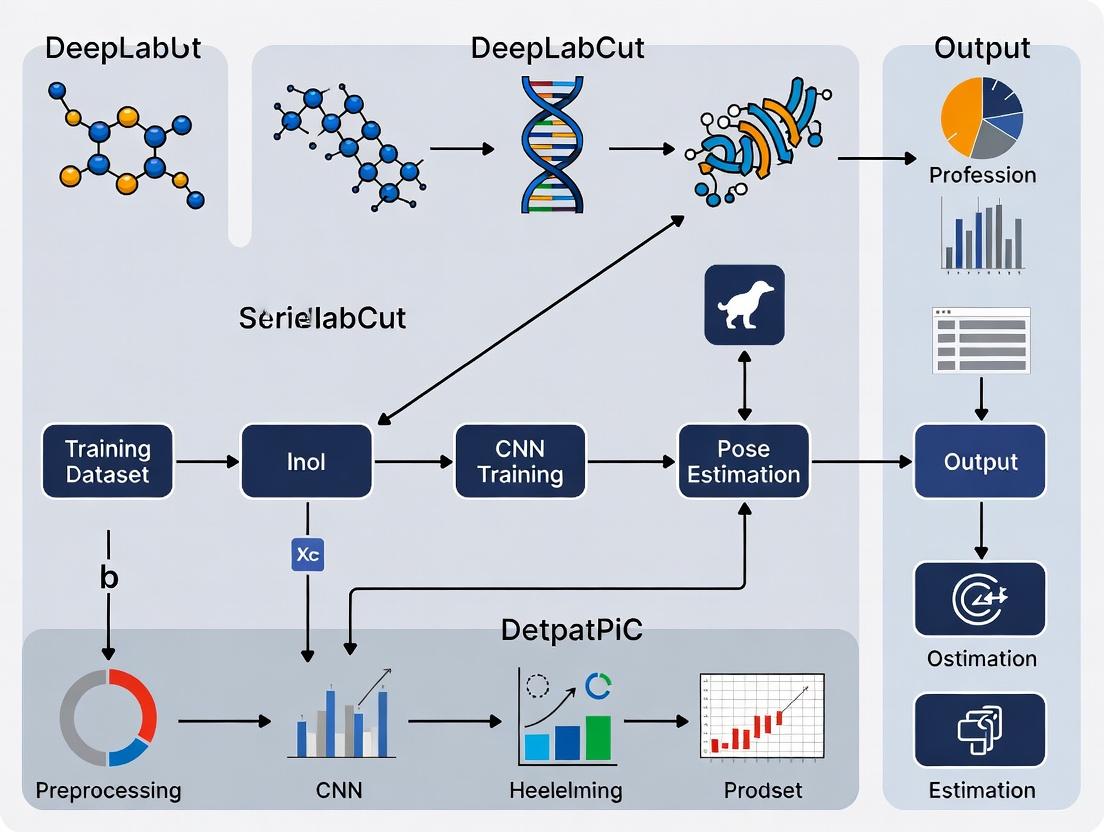

Diagram Title: DeepLabCut Model Training and Analysis Workflow

Experimental Protocol: Implementing DLC for Rodent Behavioral Analysis

This protocol details a standard workflow for training a DLC network to track keypoints (e.g., snout, left/right forepaws, tail base) in a home-cage locomotion assay.

Materials & Setup

- Subjects: Cohort of 10-12 mice/rats.

- Apparatus: Standard home cage, placed in a consistent lighting environment.

- Hardware: One consumer-grade USB camera (e.g., Logitech) mounted stably above the cage. Ensure uniform lighting to minimize shadows.

- Software: DeepLabCut (Python environment) installed as per official instructions.

Step-by-Step Procedure

- Video Acquisition: Record 10-minute videos of each animal in the apparatus in `.mp4` or `.avi` format. For training, select videos from 3-4 animals that together cover diverse postures (rearing, grooming, locomotion, resting).

- Project Creation: Create a new DLC project, specifying the project name, the experimenter, and the selected videos.

- Frame Extraction: Extract frames from the selected videos to create a training dataset.

- Labeling: Using the DLC GUI, manually label the defined body parts on the extracted frames. This creates the "ground truth" data.

- Training Dataset Creation: Generate training and test sets from the labeled frames.

- Model Training: Initiate network training. This is computationally intensive; use a GPU if available.

- Network Evaluation: Evaluate the model's performance on the held-out test frames. The key metric is test error: the mean pixel distance between predicted and ground-truth keypoints.

- Video Analysis: Apply the trained model to analyze new, unlabeled videos.

- Post-Processing: Create labeled videos and extract data (CSV/HDF5 files) for statistical analysis.
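The procedure above maps onto DeepLabCut's Python API roughly as follows. This is a minimal sketch assuming DLC 2.x with the TensorFlow engine: the helper names `prepare_project` and `train_and_analyze` are hypothetical, the argument values are illustrative, and manual labeling (`deeplabcut.label_frames(config)`) is done interactively between the two calls.

```python
def prepare_project(project, experimenter, videos, working_directory="."):
    """Hypothetical helper: create a DLC project and extract frames for labeling."""
    import deeplabcut  # deferred so this file can be imported without DLC installed

    # Project creation: returns the path to the new project's config.yaml
    config = deeplabcut.create_new_project(
        project, experimenter, videos,
        working_directory=working_directory, copy_videos=False,
    )
    # Frame extraction: k-means clustering picks visually diverse frames
    deeplabcut.extract_frames(config, mode="automatic", algo="kmeans", userfeedback=False)
    return config


def train_and_analyze(config, videos, net_type="resnet_50", maxiters=200_000):
    """Hypothetical helper: run after labeling with deeplabcut.label_frames(config)."""
    import deeplabcut

    # Training dataset creation (split follows TrainingFraction in config.yaml)
    deeplabcut.create_training_dataset(config, net_type=net_type)
    # Model training; maxiters caps the number of iterations (TensorFlow engine)
    deeplabcut.train_network(config, shuffle=1, maxiters=maxiters)
    # Network evaluation on held-out frames (reports train/test pixel error)
    deeplabcut.evaluate_network(config, Shuffles=[1], plotting=True)
    # Video analysis: writes per-video pose files (.h5, plus .csv here)
    deeplabcut.analyze_videos(config, videos, shuffle=1, save_as_csv=True)
    # Post-processing: labeled videos for visual quality control
    deeplabcut.create_labeled_video(config, videos, shuffle=1)
```

Splitting at the labeling step keeps the scripted parts rerunnable. Newer DLC releases with the PyTorch engine may configure training length differently (e.g., in epochs), so check the documentation for the installed version.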

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Resources for Markerless Pose Estimation Experiments

| Item | Function/Description | Example/Note |
|---|---|---|
| High-Speed Camera | Captures fast movements without motion blur. Essential for gait analysis or rodent reaching. | Basler acA series, FLIR Blackfly S |
| Consumer RGB Camera | Cost-effective for most general behavior tasks (locomotion, social interaction). | Logitech C920, Raspberry Pi Camera Module 3 |
| Dedicated GPU | Accelerates neural network training dramatically (from days to hours). | NVIDIA RTX 4000/5000 series (workstation), Tesla series (server) |
| Behavioral Arena | Standardized experimental environment. Critical for generating consistent video data. | Open Field boxes, T-mazes, custom acrylic enclosures |
| Data Annotation Tool | Software for generating ground truth labels. The core "reagent" for training. | DeepLabCut's built-in GUI, SLEAP's labeling GUI |
| Computational Environment | Software stack for reproducible analysis. | Python 3.8+, Conda/Pip, Docker container with DLC installed |
| Post-Processing Software | For analyzing trajectory data, calculating kinematics, and statistics. | Custom Python/R scripts, DeepLabCut's analysis tools, SimBA, MARS |

Downstream Analysis Logic

Markerless tracking data serves as the input for advanced behavioral and neurological analysis.

Diagram Title: From Pose Estimation to Behavioral Phenotype

DeepLabCut and related markerless tracking technologies have fundamentally disrupted the study of behavior by removing the physical and analytical constraints of traditional methods. By offering high precision without invasive marking, enabling the tracking of numerous naturalistic body parts, and leveraging scalable deep learning, DLC provides researchers and drug development professionals with a powerful, flexible, and open-source toolkit. This shift allows for more ethologically relevant, higher-throughput, and more reproducible quantification of behavior, accelerating discovery in neuroscience and pre-clinical therapeutic development.

DeepLabCut represents a paradigm shift in markerless pose estimation, built upon the foundational principle of applying deep neural networks (DNNs), initially developed for object classification, to the problem of keypoint detection in animals and humans. This whitepaper, framed within broader thesis research on the DeepLabCut open-source toolbox, details the core mechanism that enables this leap: transfer learning. By leveraging networks pre-trained on massive image datasets (e.g., ImageNet), DeepLabCut achieves state-of-the-art accuracy with remarkably few user-labeled training frames, making it an indispensable tool for researchers in neuroscience, biomechanics, and drug development.

Theoretical Foundation: Transfer Learning for Pose Estimation

Transfer learning circumvents the need to train a DNN from scratch, which requires millions of labeled images and substantial computational resources. Instead, it utilizes a network whose early and middle layers have learned rich, generic feature detectors (e.g., edges, textures, simple shapes) from a source task (image classification). DeepLabCut adapts this network for the target task (keypoint localization) by:

- Initialization: Using the pre-trained weights of a network like ResNet or EfficientNet as the starting point.

- Adaptation: Replacing the final classification layer with a new head for predicting spatial probability maps (confidence maps) for each body part.

- Fine-tuning: Retraining primarily the new head and later layers on the small, domain-specific labeled dataset, while optionally fine-tuning earlier layers.
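To make the "new head" concrete: it outputs one confidence map per body part on a coarse grid, and each keypoint is read off as the peak of its map. The numpy sketch below assumes a score-map array of shape (H, W, K) and an output stride of 8 pixels (a typical value for ResNet-based heads, assumed here); DeepLabCut additionally predicts sub-cell location-refinement offsets, which this sketch omits.

```python
import numpy as np


def decode_confidence_maps(scmap, stride=8.0):
    """Convert confidence maps (H, W, K) into a (K, 3) array of x, y, confidence.

    Each keypoint is placed at the centre of its highest-scoring grid cell,
    mapped back to input-image pixels via the network's output stride.
    """
    h, w, k = scmap.shape
    flat = scmap.reshape(-1, k)                 # (H*W, K): one column per keypoint
    best = flat.argmax(axis=0)                  # flat index of each keypoint's peak
    rows, cols = np.unravel_index(best, (h, w))
    confidence = flat[best, np.arange(k)]
    x = cols * stride + 0.5 * stride            # grid cell centre -> image x
    y = rows * stride + 0.5 * stride            # grid cell centre -> image y
    return np.stack([x, y, confidence], axis=1)
```

For example, a peak at grid row 1, column 3 with stride 8 maps to image coordinates (28, 12).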

Architectural Backbones: ResNet vs. EfficientNet

DeepLabCut's performance hinges on the choice of backbone feature extractor. Two predominant architectures are supported.

| Feature | ResNet-50 | ResNet-101 | EfficientNet-B0 | EfficientNet-B3 |
|---|---|---|---|---|
| Core Innovation | Residual skip connections mitigate vanishing gradients | Deeper version of ResNet-50 | Compound scaling (depth, width, resolution) | Balanced mid-size model in EfficientNet family |
| Typical Top-1 ImageNet Acc. | ~76% | ~77.4% | ~77.1% | ~81.6% |
| Parameter Count | ~25.6 million | ~44.5 million | ~5.3 million | ~12 million |
| Inference Speed | Moderate | Slower | Fast | Moderate |
| Key Advantage for DLC | Proven reliability, extensive benchmarks | Higher accuracy for complex scenes | Extreme parameter efficiency, good for edge devices | Strong accuracy/efficiency trade-off |
| Best Use Case | General-purpose pose estimation | Projects requiring maximum accuracy from the ResNet family | Resource-constrained environments, fast iteration | High accuracy demands with moderate compute resources |

Experimental Protocol: Implementing Transfer Learning with DeepLabCut

The following methodology details a standard experimental pipeline for creating a DeepLabCut model.

Project Initialization & Data Labeling

- Frame Extraction: Extract video frames (typically 100-1000) capturing the full behavioral repertoire and diverse viewpoints.

- Labeling: Manually annotate body parts on the extracted frames using the DeepLabCut GUI to create a ground truth dataset.

- Data Partitioning: Split the labeled data into training (e.g., 95%) and test (e.g., 5%) sets.
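A seeded random split like the one described can be sketched as below. DeepLabCut performs this step itself when it creates the training dataset (governed by `TrainingFraction` in `config.yaml`); the function here is a hypothetical illustration of the idea, not DLC's code.

```python
import numpy as np


def split_labeled_frames(frame_ids, train_fraction=0.95, seed=0):
    """Hypothetical helper: reproducible random train/test split of labeled frames."""
    ids = np.asarray(frame_ids)
    order = np.random.default_rng(seed).permutation(len(ids))  # fixed seed -> same split
    n_train = int(round(train_fraction * len(ids)))
    return ids[order[:n_train]], ids[order[n_train:]]
```

With 200 labeled frames and a 95% training fraction, this yields 190 training and 10 test frames.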

Network Configuration & Training

- Backbone Selection: Choose a network architecture (e.g., `resnet_50`, `efficientnet-b0`) in the DeepLabCut configuration file.

- Parameter Setting: Define hyperparameters such as the initial learning rate (e.g., `1e-4`), batch size, number of training iterations (e.g., 200,000), and data augmentation options (rotation, scaling, cropping).

- Fine-tuning Strategy (a general transfer-learning pattern; by default DeepLabCut fine-tunes all layers end-to-end):

  - Freeze early layers: Initially keep the weights of the pre-trained backbone fixed, training only the newly added head.

  - Full fine-tuning: After initial training, optionally unfreeze all layers for additional fine-tuning with a lower learning rate (e.g., `1e-5`).
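The two learning rates in the fine-tuning strategy (1e-4, then 1e-5) can be expressed as a step schedule. DeepLabCut's TensorFlow `pose_cfg.yaml` uses a `multi_step` list of `[learning_rate, until_iteration]` pairs in this spirit; the helper below is a hypothetical sketch of how such a list maps an iteration to a learning rate, and the phase boundaries are illustrative.

```python
def learning_rate_at(iteration, multi_step):
    """Return the learning rate for `iteration` under a step schedule.

    multi_step: [[lr, until_iteration], ...] with increasing until_iteration;
    each lr applies until its iteration bound is reached.
    """
    for lr, until in multi_step:
        if iteration < until:
            return lr
    return multi_step[-1][0]  # past the last bound: keep the final rate


# Higher rate for the first phase, then a lower rate for fine-tuning (illustrative bounds)
schedule = [[1e-4, 150_000], [1e-5, 200_000]]
```

A step schedule keeps the early, larger updates for adapting the new head and reserves the smaller rate for gently adjusting the pre-trained weights.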

Evaluation & Analysis

- Test Set Evaluation: Use the held-out test images to generate predictions. Calculate the mean Euclidean error (in pixels) between predictions and ground truth, and the percentage of correct keypoints (PCK) within a specified threshold (e.g., 5% of the image diagonal).

- Video Analysis: Apply the trained model to novel videos for pose estimation.

- Refinement: If performance is unsatisfactory on certain frames, add those frames to the training set, label them, and refine the model.
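Both evaluation metrics can be computed directly from predicted and ground-truth coordinates. The numpy sketch below is a hypothetical helper, not DLC's evaluation code; it assumes arrays of shape (frames, keypoints, 2) with NaN marking body parts that were not labeled in a frame.

```python
import numpy as np


def keypoint_metrics(pred, gt, image_hw, pck_fraction=0.05):
    """Mean Euclidean error (px) and PCK for predictions vs. ground truth.

    pred, gt: (N_frames, K_keypoints, 2) pixel coordinates; NaN in gt marks unlabeled points.
    image_hw: (height, width), used to scale the PCK threshold by the image diagonal.
    """
    dist = np.linalg.norm(np.asarray(pred, float) - np.asarray(gt, float), axis=-1)
    dist = dist[~np.isnan(dist)]                       # ignore unlabeled keypoints
    threshold_px = pck_fraction * np.hypot(*image_hw)  # e.g. 5% of the diagonal
    return {
        "mean_error_px": float(dist.mean()),
        "pck": float(np.mean(dist <= threshold_px)),
        "threshold_px": float(threshold_px),
    }
```

Scaling the PCK threshold by the image diagonal makes the metric comparable across videos recorded at different resolutions, which a raw pixel error is not.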

Key Performance Data and Benchmarks

Quantitative results from representative studies illustrate the efficacy of the transfer learning approach.

Table 1: Performance Comparison on Benchmark Datasets (Example Metrics)

| Backbone Model | Training Frames | Test Error (pixels) | Inference Time (ms/frame) | Dataset (Representative) |
|---|---|---|---|---|
| ResNet-50 | 200 | 4.2 | 15 | Lab Mouse Open Field |
| ResNet-101 | 200 | 3.8 | 22 | Lab Mouse Open Field |
| EfficientNet-B0 | 200 | 5.1 | 8 | Lab Mouse Open Field |
| EfficientNet-B3 | 200 | 3.5 | 12 | Lab Mouse Open Field |
| ResNet-50 | 500 | 2.1 | 15 | Drosophila Wings |
| EfficientNet-B3 | 500 | 1.9 | 12 | Drosophila Wings |

Note: Error is average Euclidean distance between prediction and ground truth. Inference time measured on an NVIDIA Tesla V100 GPU. Data is illustrative of trends reported in the literature.

Visualization of Core Concepts

Diagram 1: DeepLabCut Transfer Learning Workflow

Diagram 2: ResNet vs. EfficientNet Architecture Logic

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Reagents and Materials for a DeepLabCut Study

| Item | Function/Role in Experiment | Example/Notes |
|---|---|---|
| Animal Model | Biological subject for behavioral phenotyping. | C57BL/6J mouse, Drosophila melanogaster, Rattus norvegicus. |
| Experimental Arena | Controlled environment for video recording. | Open field box, rotarod, T-maze, custom behavioral setup. |
| High-Speed Camera | Captures motion at sufficient resolution and frame rate. | ≥ 30 FPS, 1080p resolution; IR-sensitive for dark cycle. |
| Synchronization Hardware | Aligns video with other data streams (e.g., neural). | TTL pulse generators, data acquisition boards (DAQ). |
| Calibration Object | Converts pixels to real-world units (mm/cm). | Checkerboard or object of known dimensions. |
| DeepLabCut Software Suite | Core platform for model training and analysis. | deeplabcut==2.3.8 (or latest). Includes GUI and API. |
| Pre-trained Model Weights | Enables transfer learning; starting point for training. | ResNet weights from PyTorch TorchHub or TensorFlow Hub. |
| GPU Workstation | Accelerates model training and video analysis. | NVIDIA GPU (≥8GB VRAM), e.g., RTX 3080, Tesla V100. |
| Labeling Tool (GUI) | Enables manual annotation of ground truth data. | Integrated DeepLabCut Labeling GUI. |
| Data Analysis Environment | For post-processing pose data and statistics. | Python (NumPy, SciPy, Pandas) or MATLAB. |
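For the Calibration Object row, the simplest conversion uses a reference of known length lying in the arena plane. The helpers below are hypothetical and assume a roughly top-down camera with negligible lens distortion; correcting distortion or oblique views requires a full checkerboard calibration (e.g., OpenCV's `cv2.calibrateCamera`).

```python
import math


def mm_per_pixel(p1, p2, known_length_mm):
    """Scale factor from two pixel endpoints of a reference of known physical length."""
    length_px = math.hypot(p2[0] - p1[0], p2[1] - p1[1])
    if length_px == 0:
        raise ValueError("reference endpoints coincide")
    return known_length_mm / length_px


def to_mm(distance_px, scale_mm_per_px):
    """Convert a pixel distance measured in the calibrated plane to millimetres."""
    return distance_px * scale_mm_per_px
```

For example, a 20 mm checkerboard square edge spanning pixels (100, 100) to (140, 130) is 50 px long, giving 0.4 mm/px, so a 25 px displacement corresponds to 10 mm.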

This whitepaper details the DeepLabCut (DLC) ecosystem within the context of ongoing open-source research for markerless pose estimation. The core thesis posits that DLC's multi-interface architecture—spanning an accessible desktop GUI to a programmable high-performance Python API—democratizes advanced behavioral quantification while enabling scalable, reproducible computational research. This dual approach accelerates the translation of behavioral phenotyping into drug discovery pipelines, where robust, high-throughput analysis is paramount.

Ecosystem Architecture and Quantitative Performance

DLC is built on a modular stack that balances usability with computational power. The following table summarizes the core components and their quantitative performance benchmarks based on recent community evaluations.

Table 1: DLC Ecosystem Components & Performance Benchmarks

| Component | Primary Interface | Key Function | Target User | Typical Inference Speed (FPS)* | Model Training Time (hrs)* |
|---|---|---|---|---|---|
| DLC GUI | Graphical User Interface (Desktop) | Project creation, labeling, training, video analysis | Novice users, biologists | 30-50 (CPU), 200-500 (GPU) | 2-12 (varies by dataset size) |
| DLC Python API | `deeplabcut` library (Jupyter, scripts) | Programmatic pipeline control, batch processing, customization | Researchers, engineers, drug developers | 50-80 (CPU), 500-1000+ (GPU) | 1-8 (optimized configuration) |
| Model Zoo | Online Repository / API | Pre-trained models for common animals (mouse, rat, human, fly) | All users seeking transfer learning | N/A | N/A |
| Active Learning | GUI & API (`extract_outlier_frames`, `refine_labels`) | Network-based label refinement | Users improving datasets | N/A | N/A |
| DLC-Live! | Python API / C++ | Real-time pose estimation & feedback | Neuroscience (closed-loop) | 100-150 (USB camera) | N/A |

*FPS: Frames per second on standard hardware (CPU: Intel i7, GPU: NVIDIA RTX 3080). Times depend on network size (e.g., ResNet-50 vs. MobileNetV2) and number of training iterations.

Core Experimental Protocols

Protocol A: Creating a New Project via GUI (Standard Workflow)

- Launch & Project Creation: Open an Anaconda Prompt, activate the DLC environment (`conda activate DLC-GPU`), and launch the GUI (`python -m deeplabcut`). Click "Create New Project", enter the experimenter name and project name, and select the videos for labeling.

- Data Labeling: In the "Labeling" tab, extract frames (uniformly or by k-means clustering). Manually label body parts on ~100-200 frames in total across the selected videos, creating a ground truth dataset.

- Training Configuration: Navigate to "Manage Project", then "Edit Config File". Set `numframes2pick` (the number of frames extracted per video; best set before frame extraction), select a neural network backbone (`default_net_type`, e.g., `resnet_50`), and check `iteration` (the active-learning round; 0 for a new project).

- Model Training: Select "Train Network". This generates a training dataset, shuffles it, and initiates training on the specified GPU/CPU. Monitor the training loss, which is reported periodically during training.

- Video Analysis & Evaluation: After training, use "Evaluate Network" to compute the mean pixel error on the held-out test frames, and "Analyze Videos" to run inference. Optionally, use "Plot Trajectories" and "Create Videos" for visualization.

Protocol B: High-Throughput Analysis via Python API

This protocol is for batch processing and integration into larger pipelines, crucial for drug development screens.
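The protocol steps are not spelled out here, so the following is one possible sketch of a batch-analysis helper for an already trained project. `batch_analyze` and the one-folder-per-batch layout are hypothetical; `analyze_videos`, `filterpredictions`, and `create_labeled_video` are DeepLabCut API functions, but argument names should be checked against the installed version.

```python
from pathlib import Path


def batch_analyze(config_path, video_dir, videotype=".mp4", shuffle=1):
    """Hypothetical helper: analyze, filter, and render every video in a folder."""
    import deeplabcut  # deferred so the helper can be defined without DLC installed

    videos = sorted(str(p) for p in Path(video_dir).glob(f"*{videotype}"))
    if not videos:
        raise FileNotFoundError(f"no *{videotype} files found in {video_dir}")
    # Pose inference for every video; results are saved as .h5 (and .csv here)
    deeplabcut.analyze_videos(config_path, videos, videotype=videotype,
                              shuffle=shuffle, save_as_csv=True)
    # Temporal filtering of the raw predictions (median filter as one option)
    deeplabcut.filterpredictions(config_path, videos, videotype=videotype,
                                 shuffle=shuffle, filtertype="median")
    # Overlay the filtered predictions on the videos for visual QC
    deeplabcut.create_labeled_video(config_path, videos, videotype=videotype,
                                    shuffle=shuffle, filtered=True)
    return videos
```

Sorting the file list makes runs deterministic, which helps when matching output files to treatment groups in a screen.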

Visualizing the DLC Workflow and Data Flow

Diagram 1: High-Level DLC Ecosystem Architecture

Diagram 2: Detailed Training and Inference Pipeline

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Reagents and Computational Tools for DLC Research

| Item / Solution | Category | Function in DLC Research | Example Product / Library |
|---|---|---|---|
| Labeled Training Dataset | Biological Data | Ground truth for supervised learning; defines keypoints (e.g., paw, snout, tail base). | Custom-generated from experimental video. |
| Pre-trained Model Weights | Computational | Enables transfer learning, reducing training time and required labeled data. | DLC Model Zoo (mouse, rat, human, fly). |
| GPU Compute Resource | Hardware | Accelerates model training and video inference by orders of magnitude. | NVIDIA RTX series with CUDA & cuDNN. |
| Python Data Stack | Software Libraries | Enables post-processing, statistical analysis, and visualization of pose data. | NumPy, SciPy, pandas, Matplotlib, Seaborn. |
| Behavioral Arena | Experimental Hardware | Standardized environment for consistent video recording and stimulus presentation. | Open-Source Behavior (OSB) rigs, Med Associates. |
| Video Acquisition Software | Software | Records high-fidelity, synchronized video from one or multiple cameras. | Bonsai, DeepLabCut Live!, CAMERA (NI). |
| Annotation Tools | Software | Alternative for initial frame labeling or correction. | CVAT (Computer Vision Annotation Tool), Labelbox. |
| Statistical Analysis Tool | Software | Performs advanced statistical testing and modeling on derived kinematics. | R, Statsmodels, scikit-learn for machine learning. |

This whitepaper examines the transformative role of the DeepLabCut (DLC) toolbox in modern biomedical research, positioned within the broader thesis that accessible, open-source pose estimation is catalyzing a paradigm shift in quantitative biology. By enabling markerless, high-precision tracking of animal posture and movement, DLC provides a foundational tool for integrative studies across neuroscience, pharmacology, and behavioral phenotyping.

Quantifying Behavioral Phenotypes with DLC

Behavioral analysis is the cornerstone of models for neurological disorders, drug efficacy, and genetic function. DLC moves beyond manual scoring or restrictive trackers by using transfer learning to train deep neural networks to track user-defined body parts across species.

Key Quantitative Outcomes from Recent Studies

Table 1: Representative DLC Applications in Behavioral Phenotyping

| Study Focus | Model/Subject | Key Measured Variables | Quantitative Outcome (DLC vs. Traditional) |
|---|---|---|---|
| Gait Analysis | Mouse (Parkinson's model) | Stride length, hindlimb base of support, paw angle | Detected a 22% reduction in stride length (p<0.001) with higher precision than treadmill systems. |
| Social Interaction | Rat (Social Defeat) | Inter-animal distance, orientation, approach velocity | Quantified a 3.5x increase in avoidance time in defeated rats with 95% fewer manual annotations. |
| Fear & Anxiety | Mouse (Open Field, EPM) | Rearing count, time in center, head-dipping frequency | Achieved 99% accuracy in freeze detection, correlating (r=0.92) with manual scoring. |
| Pharmacological Response | Zebrafish (locomotion) | Tail beat frequency, turn angle, burst speed | Identified a 40% decrease in bout frequency post-treatment with sub-millisecond temporal resolution. |

Experimental Protocol: DLC Workflow for Novel Object Recognition Test

- Video Acquisition: Record multiple mice (e.g., C57BL/6J) in an open arena with a novel object introduced in trial 2. Use consistent, high-contrast lighting at 30 fps.

- Frame Labeling: Extract ~100-200 frames from multiple videos, ensuring variation in animal pose and position. Manually label keypoints (e.g., snout, ears, tail base, all paws) using the DLC GUI.

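- Network Training: Train a ResNet-50-based network for up to 1.03 million iterations (DLC's default maximum), or until the train and test errors (pixel distance) plateau. Use shuffle 1 with the default 95%/5% train/test split (`TrainingFraction: 0.95` in `config.yaml`).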

- Pose Estimation: Analyze all videos with the trained network to obtain tracked keypoint coordinates and confidence scores.

- Post-Processing: Filter low-confidence predictions (<0.95) and smooth trajectories using a Savitzky-Golay filter.

- Behavioral Feature Extraction: Calculate:

- Object exploration: Time spent with snout within 2 cm of the object.

- Orientation: Animal's head direction relative to the object.

- Kinematics: Velocity and acceleration profiles during approach.

- Statistical Analysis: Compare exploration time between familiar and novel object phases using a paired t-test.
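The post-processing and feature-extraction steps above can be sketched with numpy and SciPy. All function names are hypothetical; the sketch assumes per-body-part arrays of shape (T, 2) already loaded from DLC's output, an object centre given in pixels, and a pixel-to-cm scale from a calibration reference. Gaps left by low-confidence points are filled by linear interpolation before smoothing, which is one reasonable choice among several.

```python
import numpy as np
from scipy.signal import savgol_filter


def clean_track(xy, likelihood, p_cutoff=0.95, window=11, polyorder=3):
    """Drop low-confidence points, interpolate the gaps, then Savitzky-Golay smooth.

    xy: (T, 2) pixel coordinates of one body part; likelihood: (T,) DLC scores.
    Assumes at least one frame passes p_cutoff and T >= window.
    """
    xy = np.array(xy, dtype=float)
    bad = np.asarray(likelihood) < p_cutoff
    t = np.arange(len(xy))
    for d in range(2):
        xy[bad, d] = np.interp(t[bad], t[~bad], xy[~bad, d])
        xy[:, d] = savgol_filter(xy[:, d], window, polyorder)
    return xy


def exploration_time_s(snout_xy, object_xy, px_per_cm, fps, radius_cm=2.0):
    """Seconds with the snout within radius_cm of the object centre."""
    dist_cm = np.linalg.norm(snout_xy - np.asarray(object_xy, float), axis=1) / px_per_cm
    return np.count_nonzero(dist_cm <= radius_cm) / fps


def speed_cm_s(xy, px_per_cm, fps):
    """Frame-to-frame speed in cm/s (one value fewer than the number of frames)."""
    return np.linalg.norm(np.diff(xy, axis=0), axis=1) / px_per_cm * fps


def heading_offset_deg(snout, left_ear, right_ear, object_xy):
    """Angle (0-180 deg) between head direction and the direction to the object."""
    base = (left_ear + right_ear) / 2.0              # midpoint between the ears
    head = snout - base
    to_obj = np.asarray(object_xy, float) - base
    diff = np.arctan2(to_obj[:, 1], to_obj[:, 0]) - np.arctan2(head[:, 1], head[:, 0])
    return np.degrees(np.abs((diff + np.pi) % (2 * np.pi) - np.pi))
```

Acceleration follows by differencing the speed series once more, and the paired comparison of exploration times can be run with `scipy.stats.ttest_rel`.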

DLC Experimental Analysis Pipeline

Advancing Neuroscience Through Kinematic Analysis

DLC allows neuroscientists to link neural activity to precise kinematic variables, creating a closed loop between circuit manipulation and behavioral output.

Experimental Protocol: Correlating Neural Activity with Limb Kinematics

- Surgery & Implantation: Implant a microdrive array or a head-mounted mini-scope for calcium imaging (e.g., GCaMP) over the motor cortex or striatum in a mouse. Allow for recovery and viral expression.

- Behavioral Task: Train mouse to perform a reach-to-grasp task or run on a textured wheel.

- Synchronized Recording: While recording video of the paws, digits, and wrist for DLC tracking, simultaneously record neural spike data or fluorescence signals. Synchronize video and neural data using TTL pulses.

- Kinematic Decomposition: Use DLC outputs to define movement onset, velocity profiles, joint angles, and success/failure of grasps.

- Alignment & Modeling: Align kinematic features with neural activity timestamps. Use generalized linear models (GLMs) to predict neural activity from multi-joint kinematics or decode kinematics from population activity.
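The alignment and modeling steps can be sketched in numpy as below. The function names are hypothetical, and the sketch assumes camera frame times have already been recovered on the neural clock (e.g., from logged TTL edges). Poisson GLMs are common for spike counts; to stay numpy-only, this sketch uses the identity-link Gaussian GLM, which is ordinary least squares.

```python
import numpy as np


def resample_kinematics(frame_times_s, kinematics, target_times_s):
    """Linearly interpolate per-frame kinematics (T, F) onto target times.

    frame_times_s: camera frame times on the neural clock (e.g. from TTL edges);
    target_times_s: e.g. centres of neural time bins or imaging frames.
    """
    kin = np.asarray(kinematics, dtype=float)
    return np.column_stack(
        [np.interp(target_times_s, frame_times_s, kin[:, j]) for j in range(kin.shape[1])]
    )


def fit_linear_encoding(X, y):
    """Identity-link Gaussian GLM (ordinary least squares) with an intercept column."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef  # [intercept, weight_1, ..., weight_F]


def predict(coef, X):
    """Predicted neural activity from kinematic features."""
    return coef[0] + np.asarray(X) @ coef[1:]
```

Resampling the kinematics onto the neural timestamps (rather than the reverse) keeps the neural data untouched, which is usually preferable when spikes or fluorescence are the modeled variable.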

Table 2: Key Reagents for Integrated Neuroscience & DLC Studies

| Research Reagent / Tool | Function in Experiment |
|---|---|
| AAV9-CaMKIIa-GCaMP8m | Drives strong expression of a fast calcium indicator in excitatory neurons for imaging neural dynamics. |
| Chronic Cranial Window (e.g., 3-5 mm) | Provides optical access for long-term in vivo two-photon or mini-scope imaging. |
| Grayscale CMOS Camera (e.g., 100+ fps) | High-speed video capture essential for resolving rapid limb and digit movements. |
| Microdrive Electrode Array (e.g., 32-128 channels) | Allows for stable recording of single-unit activity across days during behavior. |
| Data Synchronization Hub (e.g., NI DAQ) | Precisely aligns video frames, neural samples, and stimulus triggers with millisecond accuracy. |
| DeepLabCut-Live! | Enables real-time pose estimation for closed-loop feedback stimulation protocols. |

Neural-Kinematic Data Integration

Enhancing Pharmacology with Objective Behavioral Biomarkers

In drug discovery, DLC offers sensitive, objective, and high-dimensional readouts of drug effects, moving beyond simplistic activity counts.

Experimental Protocol: High-Throughput Phenotypic Screening in Zebrafish

- Animal Preparation: Array zebrafish larvae (e.g., 5-7 dpf) in a 96-well plate, one larva per well.

- Drug Administration: Add vehicle or drug compound (e.g., neuroactive small molecule) to each well using an automated liquid handler.

- Video Recording: Use a backlit, high-resolution camera array to record from all wells simultaneously for 30 minutes post-treatment at 50 fps.

- Multi-Animal DLC Analysis: Process videos using DLC with a network trained to track the head, trunk, and tail tip of the larvae.

- Biomarker Calculation: For each well, compute:

- Total locomotor activity: Mean distance traveled per minute.

- Bout kinematics: Mean duration, frequency, and peak angular velocity of tail movements.

- Complex patterns: Seizure-like rapid convulsions or circling behavior.

- Dose-Response Analysis: Fit kinematic biomarkers (e.g., tail beat frequency) against log(dose) to calculate EC50/IC50 values. Compare the sensitivity of kinematic biomarkers versus traditional activity counts.
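The dose-response step can be sketched with a four-parameter logistic (Hill) model fitted by SciPy's `curve_fit`. The function names and starting guesses are hypothetical; the sketch assumes one summary value per dose (e.g., mean tail-beat frequency across wells). The same model covers decreasing responses (IC50) through a negative Hill slope or swapped plateaus.

```python
import numpy as np
from scipy.optimize import curve_fit


def four_pl(log_dose, bottom, top, log_ec50, hill):
    """Four-parameter logistic response as a function of log10(dose)."""
    return bottom + (top - bottom) / (1.0 + 10.0 ** ((log_ec50 - log_dose) * hill))


def fit_ec50(doses, response):
    """Fit the 4PL model and return (EC50 in dose units, fitted parameters)."""
    log_d = np.log10(np.asarray(doses, dtype=float))
    response = np.asarray(response, dtype=float)
    # Starting guesses: observed plateaus, mid-range dose, unit Hill slope
    p0 = [response.min(), response.max(), np.median(log_d), 1.0]
    params, _ = curve_fit(four_pl, log_d, response, p0=p0, maxfev=10_000)
    return 10.0 ** params[2], params
```

Fitting in log10(dose) keeps doses spanning several orders of magnitude on a comparable scale, and the same fit can be repeated for each kinematic biomarker and for the traditional activity count to compare their sensitivity.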

Pharmacological Screening Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Research Toolkit for DLC-Enhanced Biomedical Research

| Item | Category | Function & Relevance to DLC |
|---|---|---|
| DeepLabCut Software Suite | Software | Core open-source platform for markerless pose estimation via transfer learning. |
| High-Speed Camera (e.g., >100 fps) | Hardware | Captures rapid movements (gait, reach, tail flick) for precise kinematic analysis. |
| Near-Infrared (IR) Illumination & IR-sensitive Camera | Hardware | Enables behavioral recording during dark phases (nocturnal rodents) or for optogenetics without visual interference. |
| Synchronization Hardware (e.g., Arduino, NI DAQ) | Hardware | Precisely aligns DLC-tracked video with neural recordings, stimulus delivery, or other temporal events. |
| Automated Behavioral Arenas (e.g., Phenotyper) | Hardware | Provides controlled, replicable environments for long-term, home-cage monitoring compatible with DLC tracking. |
| 3D DLC Extension or Anipose Library | Software | Enables 3D pose reconstruction from multiple camera views for complex kinematic analysis in 3D space. |
| Behavioral Annotation Tool (e.g., BORIS, SimBA) | Software | Used in conjunction with DLC outputs to label behavioral states (e.g., grooming, attacking) for supervised behavioral classification. |

Framed within the thesis of DLC's transformative potential, this guide illustrates its central role in creating a new standard for measurement in biomedical research. By providing granular, quantitative, and objective data streams from behavior, DLC tightly bridges the gap between molecular/cellular neuroscience, pharmacological intervention, and complex phenotypic outcomes, driving more reproducible and insightful discovery.

This whitepaper details the essential prerequisites for conducting research using DeepLabCut (DLC), an open-source toolbox for markerless pose estimation. Within the broader thesis of advancing DLC's application in biomedical research, establishing a robust, reproducible computational environment is paramount. This guide provides a current, technical specification of hardware, software, and data requirements tailored for researchers, scientists, and drug development professionals.

Hardware Requirements

Performance in DLC is dictated by two computational phases: training (computationally intensive) and inference (can be lightweight). Manual labeling, by contrast, places only modest demands on hardware. Hardware selection should align with project scale and throughput needs.

Central Processing Unit (CPU)

The CPU handles data loading, preprocessing, and augmentation, and it runs inference when no GPU is available. While a GPU accelerates training, a modern multi-core CPU is essential for keeping the data pipeline from becoming a bottleneck.

Table 1: CPU Recommendations for DeepLabCut Workflows

| Use Case | Recommended Cores | Example Model (Intel/AMD) | Key Rationale |
|---|---|---|---|
| Minimal/Inference Only | 4-6 cores | Intel Core i5-12400 / AMD Ryzen 5 5600G | Sufficient for video analysis with pre-trained models. |
| Standard Research Training | 8-12 cores | Intel Core i7-12700K / AMD Ryzen 7 5800X | Handles parallel data augmentation and batch processing during GPU training. |
| Large-scale Dataset Training | 16+ cores | Intel Core i9-13900K / AMD Ryzen 9 7950X | Maximizes throughput for generating large training sets and multi-animal projects. |

Graphics Processing Unit (GPU)

The GPU is the most critical component for model training. DLC leverages TensorFlow/PyTorch backends, which utilize NVIDIA CUDA and cuDNN libraries for parallel computation.

Table 2: GPU Specifications for Model Training Efficiency

| GPU Model | VRAM (GB) | FP32 Performance (TFLOPS) | Suitable Project Scale | Estimated Training Time Reduction* |
|---|---|---|---|---|
| NVIDIA GeForce RTX 4060 | 8 | ~15 | Small datasets (<1000 frames), proof-of-concept. | Baseline (1x) |
| NVIDIA GeForce RTX 4070 Ti | 12 | ~40 | Standard single-animal projects, moderate video resolution. | ~2.5x |
| NVIDIA RTX A5000 | 24 | ~27 | Multi-animal, high-resolution, or 3D DLC projects. | ~1.8x (but larger batch sizes) |
| NVIDIA GeForce RTX 4090 | 24 | ~82 | Large-scale, high-throughput research, rapid iteration. | ~5x |
| NVIDIA H100 (Data Center) | 80 | ~51-67 (PCIe/SXM) | Institutional-scale, model development, massive datasets. | >8x |

*Reduction is a relative estimate vs. baseline for a standard 200k-iteration ResNet-50 training. Actual speed depends on network architecture, batch size, and data pipeline.

Experimental Protocol: Benchmarking GPU Performance for DLC

- Objective: Quantify the impact of GPU VRAM and TFLOPS on DLC model training time.

- Methodology:

- Standardized Dataset: Use the open-source "Reaching Mouse" dataset from DLC's tutorials. Extract a consistent subset (e.g., 500 labeled frames).

- Fixed Parameters: Train a ResNet-50-based network for 200,000 iterations with a batch size of 8. Use the same `config.yaml` file across all tests.

- Hardware Variants: Perform identical training on systems equipped with GPUs from Table 2.

- Metrics: Record total training time (hours:minutes), peak VRAM utilization (GB), and average iteration time (ms).

- Expected Outcome: Training time should fall as TFLOPS increase, though not strictly in proportion, because CPU-side data loading and augmentation can become the bottleneck; larger VRAM permits larger batch sizes, which can further improve throughput.
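The average-iteration-time metric can be collected with a small timing harness like the hypothetical one below, which wraps any callable that performs one training step. One caveat: GPU frameworks launch work asynchronously, so the step function should block until the GPU finishes (for example, by calling `torch.cuda.synchronize()` in PyTorch), or the timings will be too optimistic.

```python
import statistics
import time


def time_training_steps(step_fn, n_iters=100, warmup=10):
    """Time repeated calls to step_fn (one training iteration each).

    Warm-up calls are excluded because the first iterations often include
    one-off costs such as graph building or memory allocation.
    """
    for _ in range(warmup):
        step_fn()
    durations_ms = []
    for _ in range(n_iters):
        start = time.perf_counter()
        step_fn()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "n_iters": n_iters,
        "mean_ms": statistics.mean(durations_ms),
        "median_ms": statistics.median(durations_ms),
    }


def projected_training_hours(ms_per_iter, total_iters=200_000):
    """Extrapolate wall-clock hours for a full run (ignores checkpointing overhead)."""
    return ms_per_iter * total_iters / 3_600_000
```

For example, a measured 90 ms per iteration projects to 5 hours for the 200,000-iteration protocol, which gives a quick cross-check against the measured total training time.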

Diagram Title: GPU Benchmarking Protocol for DLC

Software & Environment Requirements

A controlled software environment prevents dependency conflicts and ensures reproducibility.

Core Software Stack

Table 3: Essential Software Components & Versions

| Software | Recommended Version | Role in DLC Pipeline | Installation Method |
|---|---|---|---|
| Python | 3.8, 3.9, or 3.10 | Core programming language for DLC and dependencies. | Via Anaconda. |
| Anaconda | 2023.09 or later | Manages isolated Python environments and packages. | Download from anaconda.com. |
| DeepLabCut | 2.3.13 or later | Core pose estimation toolbox. | `pip install deeplabcut` in conda env. |
| TensorFlow | 2.10 - 2.13 (for GPU) | Deep learning backend for DLC 2.x. Must match CUDA version. | `pip install tensorflow` (the separate `tensorflow-gpu` package is deprecated). |
| PyTorch | 1.12 - 2.1 (for 3D/Transformer) | Alternative backend for DLC's flexible networks (the default engine in DeepLabCut 3.x). | `conda install pytorch torchvision`. |
| CUDA Toolkit | 11.2, 11.8, or 12.0 | NVIDIA's parallel computing platform for GPU acceleration. | From NVIDIA website. |
| cuDNN | 8.1 - 8.9 | GPU-accelerated library for deep neural networks. | From NVIDIA website (requires login). |

Environment Setup Protocol

- Objective: Create a reproducible, conflict-free DLC environment.

- Methodology:

- Install Anaconda.

- Open a terminal (Anaconda Prompt on Windows) and create a new environment: `conda create -n dlc_env python=3.9`.

- Activate it: `conda activate dlc_env`.

- Install DLC core: `pip install "deeplabcut[gui,tf]"` for standard use with TensorFlow.

- For GPU support, install TensorFlow matching your CUDA version (e.g., for CUDA 11.8): `pip install tensorflow==2.13`.

- Verify installation: `python -c "import deeplabcut; print(deeplabcut.__version__)"`.
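Beyond importing DeepLabCut, it can help to check installed versions against Table 3 before training. This is a minimal stdlib-only sketch, assuming the ranges in Table 3. `RECOMMENDED` and the helper names are illustrative. The script reads package metadata instead of importing TensorFlow, so it runs quickly even on machines without a GPU.

```python
import importlib.metadata
import sys

# Recommended (major, minor) ranges transcribed from Table 3, inclusive.
RECOMMENDED = {
    "python": ((3, 8), (3, 10)),
    "tensorflow": ((2, 10), (2, 13)),
}


def major_minor(version_string):
    """Turn a version string such as '2.13.1' into (2, 13)."""
    parts = version_string.split(".")
    return int(parts[0]), int(parts[1])


def in_range(version_string, bounds):
    low, high = bounds
    return low <= major_minor(version_string) <= high


def installed_version(dist_name):
    """Installed distribution version, or None if the package is absent."""
    try:
        return importlib.metadata.version(dist_name)
    except importlib.metadata.PackageNotFoundError:
        return None


def report():
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    ok = in_range(py, RECOMMENDED["python"])
    print(f"python {py}: {'OK' if ok else 'outside recommended range'}")
    for dist in ("deeplabcut", "tensorflow"):
        version = installed_version(dist)
        if version is None:
            print(f"{dist}: not installed")
        elif dist in RECOMMENDED:
            ok = in_range(version, RECOMMENDED[dist])
            print(f"{dist} {version}: {'OK' if ok else 'outside recommended range'}")
        else:
            print(f"{dist} {version}: installed")


if __name__ == "__main__":
    report()
```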

Diagram Title: DLC Software Stack Dependency Flow

Data Requirements

The quality and structure of input data are the primary determinants of DLC model accuracy.

Video Data Specifications

- Formats: `.mp4`, `.avi`, `.mov`, `.mj2` (recommended: MP4 with H.264 codec).

- Resolution: Minimum 640x480 pixels. Higher resolution (e.g., 1080p) provides more spatial information but increases compute load.

- Frame Rate: Must be appropriate for the behavior. Standard rodent studies use 30-60 fps; high-speed motions may require >200 fps.

- Lighting & Consistency: Uniform, high-contrast illumination is critical. Background should be static and distinct from the animal.
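These specifications can be checked before any frames are extracted. The sketch below assumes FFmpeg's `ffprobe` is on the PATH. The minimum frame rate is left to the caller because it depends on the behavior. `frames_per_event` shows why: a 50 ms paw movement filmed at 30 fps spans only about 1.5 frames, versus 10 frames at 200 fps. All function names are hypothetical.

```python
import json
import subprocess
from fractions import Fraction

MIN_WIDTH, MIN_HEIGHT = 640, 480  # minimum resolution from the specification above


def probe_video(path):
    """Run ffprobe and return its JSON description of the first video stream."""
    cmd = [
        "ffprobe", "-v", "error", "-select_streams", "v:0",
        "-show_entries", "stream=width,height,r_frame_rate,codec_name",
        "-of", "json", str(path),
    ]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def check_stream(ffprobe_json, min_fps):
    """List problems found in ffprobe's JSON output (an empty list means OK)."""
    stream = json.loads(ffprobe_json)["streams"][0]
    fps = float(Fraction(stream["r_frame_rate"]))  # e.g. "30000/1001" -> 29.97
    problems = []
    if stream["width"] < MIN_WIDTH or stream["height"] < MIN_HEIGHT:
        problems.append(
            f"resolution {stream['width']}x{stream['height']} below {MIN_WIDTH}x{MIN_HEIGHT}"
        )
    if fps < min_fps:
        problems.append(f"frame rate {fps:.2f} fps below required {min_fps} fps")
    return problems


def frames_per_event(event_duration_ms, fps):
    """Number of frames that sample a movement of the given duration."""
    return event_duration_ms / 1000.0 * fps
```

A typical call is `check_stream(probe_video("session1.mp4"), min_fps=30)`.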

Dataset Curation & Labeling Protocol

- Objective: Create a high-quality training dataset for a novel behavior.

- Methodology:

- Frame Extraction: From multiple videos representing different subjects, lighting, and viewpoints, extract frames of interest. DLC's `extract_frames` function with k-means clustering (`algo='kmeans'`) is recommended over uniform sampling. `extract_outlier_frames` becomes useful later, once a first model can flag difficult frames.

- Labeling: Using the DLC GUI, manually annotate body parts on each extracted frame. This creates a labeled dataset.

- Dataset Splitting: The labeled dataset is partitioned into a training set (~90-95%) for model learning and a test set (~5-10%) for unbiased evaluation.

- Training: From the training set, the network learns to predict a score map (heatmap) for each body part, from which keypoint coordinates are read out.

- Evaluation: Model performance is quantitatively assessed on the held-out test set using metrics such as the mean Euclidean error in pixels.
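In DLC 2.x, the curation steps map onto a short sequence of API calls. The following is a sketch, not a complete pipeline. `dlc_initial_pipeline` and `split_sizes` are hypothetical helpers. DeepLabCut is imported inside the function so the module loads without it. `split_sizes` only illustrates the arithmetic of a 95/5 split, and DLC's own rounding may differ.

```python
def dlc_initial_pipeline(project, experimenter, videos, working_directory="."):
    """Create a project, pick frames by k-means, label them, build the split."""
    import deeplabcut  # DLC 2.x API, imported lazily

    config_path = deeplabcut.create_new_project(
        project, experimenter, videos,
        working_directory=working_directory, copy_videos=False,
    )
    # Clustering-based frame selection instead of uniform sampling
    deeplabcut.extract_frames(config_path, mode="automatic", algo="kmeans", userfeedback=False)
    deeplabcut.label_frames(config_path)  # opens the labeling GUI
    # The split follows TrainingFraction in config.yaml (e.g. [0.95])
    deeplabcut.create_training_dataset(config_path, num_shuffles=1)
    return config_path


def split_sizes(n_labeled, train_fraction=0.95):
    """Illustrative arithmetic only: frames in the training and test sets."""
    n_train = round(n_labeled * train_fraction)
    return n_train, n_labeled - n_train
```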

Table 4: Key Research Reagent Solutions for DLC Experiments

| Item/Tool | Function in DLC Research |

|---|---|

| High-Speed Camera | Captures fast, subtle movements (e.g., rodent paw kinematics, Drosophila wing beats). |

| Multi-Camera Rig | Enables 3D pose reconstruction via triangulation. Requires precise calibration. |

| Calibration Object | (e.g., Charuco board) Used to calibrate camera intrinsics/extrinsics for 3D DLC. |

| Behavioral Arena | Controlled environment to elicit and record specific behaviors of interest. |

| DLC Model Zoo | Repository of pre-trained models for common model organisms, providing a transfer learning starting point. |

| Compute Cluster Access | For large-scale hyperparameter optimization or processing vast video libraries. |

Diagram Title: DLC Data Pipeline from Video to Trained Model

Step-by-Step Guide: Implementing DeepLabCut for Robust Behavioral Analysis in Your Lab

This guide constitutes the foundational phase of a comprehensive research thesis on the DeepLabCut (DLC) open-source toolbox for markerless pose estimation. Phase 1 establishes the critical prerequisite framework that determines the success of all subsequent model training, analysis, and biological interpretation. A precisely defined behavioral task and anatomically grounded keypoints are non-negotiable for generating quantitative, reproducible, and biologically meaningful data, which is paramount for researchers in neuroscience, ethology, and preclinical drug development.

Defining the Behavioral Task and Experimental Design

The behavioral task must be operationally defined with quantifiable metrics. For drug development, this often involves tasks sensitive to pharmacological manipulation.

Table 1: Common Behavioral Paradigms in Preclinical Research

| Paradigm | Core Behavioral Measure | Typical Pharmacological Sensitivity | Key Tracking Challenges |

|---|---|---|---|

| Open Field Test | Locomotion (distance), Center Time, Thigmotaxis | Psychostimulants, Anxiolytics | Large arena, animal occlusions, lighting uniformity. |

| Elevated Plus Maze | Open Arm Entries & Time, Head Dipping | Anxiolytics, Anxiogenics | Complex 3D structure, rapid rearing movements. |

| Social Interaction | Sniffing Time, Contact Duration, Distance | Pro-social (e.g., oxytocin), Anti-psychotics | Occlusions, fast-paced interaction, identical animals. |

| Rotarod | Latency to Fall, Coordination | Motor impairants/enhancers (e.g., sedatives) | High-speed rotation, gripping posture. |

| Morris Water Maze | Path Efficiency, Time in Target Quadrant | Cognitive enhancers/impairants (e.g., scopolamine) | Water reflections, only head/back visible. |

Experimental Protocol: Standardized Open Field Test for Anxiolytic Screening

- Apparatus: A 40 cm x 40 cm x 30 cm opaque white arena under consistent diffuse illumination (300 lux).

- Acclimation: Animals are habituated to the testing room for 60 minutes.

- Drug Administration: Test compound or vehicle is administered i.p. 30 minutes pre-test.

- Recording: The animal is placed in the center of the arena. Behavior is recorded for 10 minutes using a static, overhead camera (1080p, 30 fps, H.264 codec).

- Cleaning: The arena is thoroughly cleaned with 70% ethanol between trials to remove olfactory cues.

- Analysis: Primary outcomes are total distance traveled (cm) and time spent in the central 20 cm x 20 cm zone.
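Both primary outcomes can be computed directly from a tracked body-centre keypoint. This is a minimal sketch with three assumptions: the trajectory has already been filtered for low-likelihood points (raw jitter inflates distance), the camera looks straight down on the arena, and `px_per_cm` and the arena's top-left pixel position come from your own calibration. The function name is hypothetical.

```python
import math

ARENA_CM = 40.0   # arena side length from the protocol
CENTER_CM = 20.0  # side length of the central zone


def open_field_metrics(xs_px, ys_px, px_per_cm, fps, arena_origin_px=(0.0, 0.0)):
    """Total distance (cm) and time in the central zone (s) for one trajectory.

    xs_px, ys_px: per-frame keypoint coordinates in pixels.
    arena_origin_px: pixel position of the arena's top-left corner.
    """
    ox, oy = arena_origin_px
    xs = [(x - ox) / px_per_cm for x in xs_px]
    ys = [(y - oy) / px_per_cm for y in ys_px]
    # Sum of straight-line steps between consecutive frames
    distance = sum(
        math.hypot(x1 - x0, y1 - y0)
        for x0, y0, x1, y1 in zip(xs, ys, xs[1:], ys[1:])
    )
    lo = (ARENA_CM - CENTER_CM) / 2.0  # 10 cm from the wall
    hi = lo + CENTER_CM                # 30 cm from the wall
    center_frames = sum(1 for x, y in zip(xs, ys) if lo <= x <= hi and lo <= y <= hi)
    return distance, center_frames / fps
```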

Defining Anatomical Keypoints: Principles and Applications

Keypoints are virtual markers placed on specific body parts. Their selection must be hypothesis-driven and anatomically unambiguous.

Table 2: Keypoint Definition Guidelines for Robust Tracking

| Principle | Description | Example (Mouse) | Poor Choice |

|---|---|---|---|

| High Contrast | Point lies at a visible boundary. | Tip of the nose. | Center of the fur on the back. |

| Anatomical Consistency | Point has a consistent biological landmark. | Base of the tail at the spine. | "Middle" of the tail. |

| Multi-View Consistency | Point is identifiable from different angles. | Whisker pad (visible from side and top). | Outer canthus of the eye (top view only). |

| Task Relevance | Point is essential for the behavioral measure. | Grip points (paws) for rotarod. | Ears for rotarod performance. |

| Kinematic Model | Points allow for joint angle calculation. | Shoulder, elbow, wrist for forelimb reach. | Single point on the whole forelimb. |
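For the kinematic-model principle, a joint angle follows from three keypoints, such as shoulder, elbow, and wrist. This is a 2D sketch for a single camera view that computes the angle at the middle point. The function name is illustrative.

```python
import math


def joint_angle(proximal, joint, distal):
    """Angle in degrees at `joint` between the segments to `proximal` and `distal`.

    Each argument is an (x, y) keypoint, e.g. shoulder, elbow, wrist.
    """
    ax, ay = proximal[0] - joint[0], proximal[1] - joint[1]
    bx, by = distal[0] - joint[0], distal[1] - joint[1]
    # atan2(|cross|, dot) stays numerically stable near 0 and 180 degrees
    return math.degrees(math.atan2(abs(ax * by - ay * bx), ax * bx + ay * by))
```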

Experimental Protocol: Keypoint Labeling for Gait Analysis

- Camera Setup: Use a high-speed camera (≥ 100 fps) placed laterally to capture sagittal plane movement. Ensure the entire stride cycle is visible.

- Keypoint List: Define 11 keypoints: snout, left/right ear, shoulder, elbow, wrist, hip, knee, ankle, metatarsophalangeal (MTP) joint, and tail base.

- Labeling in DLC: Using the DeepLabCut GUI, an expert labeler annotates each keypoint across hundreds of frames extracted from multiple videos, ensuring labels are placed precisely at the anatomical landmark across all postures and lighting conditions.
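The keypoint list can be written into the project's `config.yaml` before labeling. This sketch assumes DLC 2.x's `deeplabcut.utils.auxiliaryfunctions.edit_config` helper. The snake_case body-part names and `set_bodyparts` are hypothetical labels for the keypoints listed above.

```python
GAIT_BODYPARTS = [
    "snout", "left_ear", "right_ear", "shoulder", "elbow", "wrist",
    "hip", "knee", "ankle", "mtp", "tail_base",
]


def set_bodyparts(config_path, bodyparts=GAIT_BODYPARTS):
    """Write the keypoint list into a DLC project's config.yaml before labeling."""
    if len(set(bodyparts)) != len(bodyparts):
        raise ValueError("duplicate body-part names")
    from deeplabcut.utils import auxiliaryfunctions  # DLC 2.x, imported lazily

    return auxiliaryfunctions.edit_config(config_path, {"bodyparts": list(bodyparts)})
```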

Title: Phase 1 Workflow for DeepLabCut Project Creation

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Behavioral Phenotyping with DLC

| Item | Function | Example Product/Consideration |

|---|---|---|

| High-Speed Camera | Captures fast movements without motion blur. Critical for gait or whisking. | FLIR Blackfly S, Basler acA2040-90um. |

| Wide-Angle Lens | Allows full view of behavioral arena in confined spaces. | Fujinon DF6HA-1B 2.8mm lens. |

| Infrared (IR) Illumination | Enables recording in dark/dim conditions for circadian or anxiety tests. | 850nm LED arrays (invisible to rodents). |

| Diffuse Lighting Panels | Eliminates sharp shadows that confuse pose estimation models. | LED softboxes with diffusers. |

| Backdrop & Arena Materials | Provides uniform, high-contrast background. | Non-reflective matte paint (e.g., N5 gray). |

| Synchronization Trigger | Aligns video with other data streams (e.g., electrophysiology, stimuli). | Arduino-based TTL pulse generator. |

| Calibration Object | For multi-camera setup or 3D reconstruction. | Charuco board (checkerboard + ArUco markers). |

| Automated Behavioral Chamber | Standardizes stimulus delivery and environment. | Med Associates, Lafayette Instrument. |

| Data Storage Solution | High-throughput video requires massive storage. | Network-Attached Storage (NAS) with RAID. |

| DeepLabCut Software Suite | Core pose estimation toolbox. | DLC 2.3+ with TensorFlow/PyTorch backend. |

Integrating Phase 1 into the Broader Research Pipeline

The outputs of Phase 1—a well-defined behavioral corpus and a carefully annotated set of keypoints—feed directly into the computational core of the thesis. The quality of this input data constrains the maximum achievable performance of the convolutional neural network in Phase 2 and dictates the biological validity of the extracted kinematic and behavioral features in later analysis phases. A failure in precise definition at this stage introduces noise and artifact that cannot be algorithmically remediated later.

Title: Data Flow from Phase 1 to Hypothesis Testing

Within the context of advancing DeepLabCut (DLC), an open-source toolbox for markerless pose estimation based on transfer learning, the curation of high-quality training datasets is the single most critical factor determining model performance. Phase 2 of a DLC research pipeline moves from project definition to the creation of a robust, generalizable training set. This guide details efficient labeling strategies and best practices for this phase, targeting researchers in neuroscience, biomechanics, and drug development where DLC is increasingly used for high-throughput behavioral phenotyping.

Core Principles for Efficient Data Labeling

The goal is to maximize model accuracy while minimizing human labeling effort. Key principles include:

- Frame Selection Diversity: The training set must encapsulate the full variance of the animal's posture, behavior, lighting, and camera angles encountered during the entire experiment.

- Active Learning & Iterative Labeling: Initial models trained on a small, diverse set are used to predict on new frames. Frames with low model confidence (high prediction error) are prioritized for subsequent labeling rounds.

- Leveraging Transfer Learning: DLC's core strength is fine-tuning pretrained networks (e.g., ResNet-50) on a relatively small number of user-labeled frames (typically 100-1000). Strategic labeling focuses on providing the network with the specific information it lacks.
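The diversity principle is what k-means frame selection puts into practice. DLC's `extract_frames(..., algo='kmeans')` performs a clustering-based selection internally. The standalone sketch below only illustrates the idea on per-frame feature vectors, such as downsampled grayscale pixels. It uses plain Lloyd's algorithm with a deterministic initialization and picks the frame nearest each centroid. It is not DLC's implementation.

```python
def _sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _nearest(vector, centroids):
    return min(range(len(centroids)), key=lambda c: _sq_dist(vector, centroids[c]))


def kmeans_representatives(features, k, n_iter=20):
    """Return one representative frame index per cluster (nearest to its centroid)."""
    if not 0 < k <= len(features):
        raise ValueError("k must be between 1 and the number of frames")
    step = len(features) // k
    # Deterministic initialization: k frames spread evenly through the list
    centroids = [list(features[i * step]) for i in range(k)]
    labels = [0] * len(features)
    for _ in range(n_iter):
        labels = [_nearest(f, centroids) for f in features]  # assignment step
        for c in range(k):                                   # update step
            members = [f for f, lab in zip(features, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    best = {}
    for i, (f, lab) in enumerate(zip(features, labels)):
        d = _sq_dist(f, centroids[lab])
        if lab not in best or d < best[lab][0]:
            best[lab] = (d, i)
    return sorted(i for _, i in best.values())
```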

Quantitative Comparison of Labeling Strategies

The following table summarizes the typical efficiency and outcomes of different labeling strategies based on community practice. Frame counts and time investments are indicative ranges rather than benchmarked values.

Table 1: Comparison of Training Set Curation Strategies for DLC

| Strategy | Description | Typical # of Labeled Frames | Estimated Time Investment | Key Outcome & Use Case |

|---|---|---|---|---|

| Uniform Random Sampling | Randomly select frames from across all videos. | 200-500 | Moderate | Creates a baseline model. May miss rare but critical postures. |

| K-means Clustering on Image Descriptors | Cluster frames using image features (e.g., from pretrained network) and sample from each cluster. | 100-200 | Lower (automated) | Maximizes visual diversity efficiently. Excellent for initial training set. |

| Active Learning (Prediction Error-based) | Train initial model, run on new data, label frames where the model is most uncertain. | Iterative, +50-100 per round | Higher (iterative) | Most efficient for improving model on difficult cases. Reduces final error rate. |

| Behavioral Bout Sampling | Identify and sample key behavioral epochs (e.g., rearing, gait cycles) from ethograms. | 150-300 | High (requires prior analysis) | Optimal for behavior-specific models and ensuring coverage of dynamic poses. |

| Temporal Window Sampling | Select a random frame, then also include its immediate temporal neighbors (±5-10 frames). | 200-400 | Moderate | Adds transitional and motion-blurred postures; because neighboring frames are highly correlated, each extra frame adds less new information. |
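Temporal window sampling is simple to implement. This sketch uses a seeded random generator for reproducibility. `half_window=5` corresponds to the ±5-frame end of the range in the table. The function name is hypothetical.

```python
import random


def temporal_window_sample(n_frames, n_seeds, half_window=5, seed=0):
    """Pick random seed frames and add their neighbors within +/- half_window.

    Returns (sorted unique frame indices clipped to [0, n_frames - 1], sorted seeds).
    """
    rng = random.Random(seed)
    seeds = rng.sample(range(n_frames), n_seeds)
    picked = set()
    for s in seeds:
        lo, hi = max(0, s - half_window), min(n_frames - 1, s + half_window)
        picked.update(range(lo, hi + 1))
    return sorted(picked), sorted(seeds)
```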

Detailed Experimental Protocol for Iterative Active Learning

This protocol is considered a best practice for achieving high accuracy with optimized labeling effort.

1. Initial Diverse Training Set Creation:

- Extract frames from all experimental videos, considering different subjects, sessions, and conditions.

- Use K-means clustering (k=20-30) on the pixel intensities or features from a pretrained network to group visually similar frames.

- Manually label 5-10 frames from each cluster, ensuring all body parts are accurately marked. This yields a first training set of 100-300 frames.

2. Initial Network Training:

- Configure DLC to use a ResNet-50 backbone (or similar).

- Train the network for a modest number of iterations (e.g., 200k) on the initial set. Use 95% for training, 5% for validation.

3. Active Learning Loop:

- Step A - Prediction: Use the trained model to analyze a large, unlabeled portion of your video data.

- Step B - Identification: Extract the mean per-joint prediction confidence (or locate frames with high prediction error) from DLC's output. Sort frames from lowest confidence to highest.

- Step C - Labeling: Manually label the top 50-100 frames where the model performed worst. Incorporate these into the training set.

- Step D - Re-training: Re-train the model from scratch or fine-tune the existing model on the augmented training set.

- Step E - Evaluation: Monitor the train and test errors. Continue loop until test error plateaus at an acceptable threshold (e.g., <5 pixels for your specific setup).
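Step B amounts to ranking frames by mean likelihood. The sketch below assumes a single-animal DLC `.h5` output whose columns use the levels `scorer`, `bodyparts`, and `coords`. pandas (with PyTables) is imported only inside the loader. DLC's `extract_outlier_frames` with `outlieralgorithm='uncertain'` offers a built-in alternative. Both function names are hypothetical.

```python
def lowest_confidence_frames(likelihoods, n):
    """Indices of the n frames with the lowest mean likelihood.

    likelihoods: one list of per-body-part likelihood values per frame.
    """
    means = [sum(row) / len(row) for row in likelihoods]
    order = sorted(range(len(means)), key=lambda i: means[i])
    return order[:n]


def load_likelihoods(h5_path):
    """Per-frame likelihood rows from a single-animal DLC output file (.h5)."""
    import pandas as pd  # requires pandas with PyTables

    df = pd.read_hdf(h5_path)
    lik = df.xs("likelihood", level="coords", axis=1)
    return lik.to_numpy().tolist()
```

For example, `lowest_confidence_frames(load_likelihoods(path), 100)` returns the 100 frames to label in Step C.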

Workflow and Logical Diagram

Diagram 1: Iterative Training Set Curation Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 2: Key Resources for DLC Training Set Curation

| Item / Solution | Function & Role in Training Set Curation |

|---|---|

| DeepLabCut (v2.3+) | Core open-source software. Provides GUI and API for project management, labeling, training, and analysis. |

| Labeling Interface (DLC-GUI) | Integrated graphical tool for manual body part annotation. Supports multi-frame labeling and refinement. |

| FFmpeg | Open-source command-line tool for reliable video processing, frame extraction, and format conversion. |

| Google Colab / Jupyter Notebooks | Environment for running automated scripts for frame sampling (K-means), active learning analysis, and result visualization. |

| High-Resolution Camera | Provides clear input video. Global shutter cameras are preferred because they avoid rolling-shutter distortion of fast movements; short exposure times are what reduce motion blur. |

| Consistent Illumination Setup | Critical for reducing visual variance not related to posture, simplifying the learning task for the network. |

| Behavioral Annotation Software (e.g., BORIS, EthoVision) | Used pre-DLC to identify and sample specific behavioral bouts for targeted frame inclusion in the training set. |

| Compute Resource (GPU) | Essential for efficient model training (NVIDIA GPU with CUDA support). Enables rapid iteration. |

This phase represents the critical juncture in a DeepLabCut-based pose estimation pipeline where configured data is transformed into a functional pose estimator. Within the broader thesis on DeepLabCut's applicability in behavioral pharmacology and neurobiology, this stage determines the model's accuracy, generalizability, and ultimately, the reliability of downstream kinematic analyses for quantifying drug effects. Proper configuration and launch are paramount for producing research-grade models.

Core Configuration Parameters & Quantitative Benchmarks

The training configuration is defined in the `pose_cfg.yaml` file generated for each shuffle. Key parameters, their functions, and commonly used ranges are summarized below.

Table 1: Core Training Configuration Parameters for ResNet-50/101 Based Networks

| Parameter Group | Parameter | Recommended Value / Range | Function & Impact on Training |

|---|---|---|---|

| Network Architecture | `net_type` | `resnet_50`, `resnet_101` | Backbone feature extractor. ResNet-101 offers higher capacity but slower training. |

|  | `num_joints` | Equal to # of body parts | Defines the number of heatmap predictions (one per body part). |

| Data Augmentation | `rotation` | -25 to 25 degrees | Increases robustness to animal orientation. Critical for unconstrained behavior. |

|  | scale (`scale_jitter_lo`, `scale_jitter_up`) | 0.75 to 1.25 | Improves generalization to size variations (e.g., different animals, distances). |

|  | `elastic_transform` | on (probability ~0.1) | Simulates non-rigid deformations, enhancing robustness. |

| Optimization | `batch_size` | 8, 16, 32 (the single-animal TensorFlow default is 1) | Limited by GPU memory. Smaller sizes can regularize but may slow convergence. |

|  | `learning_rate` | 0.0001 to 0.005 (initial) | Lower rates (e.g., 0.001) are typical for fine-tuning; critical for stability. |

|  | `decay_steps` | 10000 to 50000 | Steps for learning rate decay. Higher for longer training schedules. |

|  | `decay_rate` | 0.9 to 0.95 | Factor by which learning rate decays. |

| Training Schedule | `multi_step` | List of [learning_rate, iteration] pairs, e.g., DLC's default `[[0.005, 10000], [0.02, 430000], [0.002, 730000], [0.001, 1030000]]` | Piecewise learning-rate schedule: each rate applies until its paired iteration. |

|  | `save_iters` | 5000, 10000 | Interval (in steps) to save model snapshots for evaluation. |

|  | `display_iters` | 100 | Interval to display loss in console. |

| Loss Function | `scoremap_dir` | ./scores | Directory for saved score (heatmap) files. |

|  | `locref_regularization` | 0.01 to 0.1 | Regularization strength for the location-refinement (locref) prediction. |

|  | `partaffinityfield_predict` | true/false | Enables Part Affinity Fields (PAFs) for multi-animal DLC. |
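Edits to `pose_cfg.yaml` can be scripted so that every shuffle uses documented settings. This sketch assumes DLC 2.x's `edit_config` helper, with the caller supplying the path to the shuffle's `pose_cfg.yaml`. `EXAMPLE_EDITS` holds illustrative values, not recommendations beyond the table above. The helper names are hypothetical.

```python
def validate_multi_step(schedule):
    """Check a DLC-style multi_step schedule [[learning_rate, until_iteration], ...].

    Returns the final iteration, i.e. the training length the schedule implies.
    """
    iterations = [it for _, it in schedule]
    if any(lr <= 0 for lr, _ in schedule):
        raise ValueError("learning rates must be positive")
    if any(b <= a for a, b in zip(iterations, iterations[1:])):
        raise ValueError("iterations must be strictly increasing")
    return iterations[-1]


def update_pose_cfg(pose_cfg_path, edits):
    """Apply edits to a shuffle's pose_cfg.yaml via DLC's YAML helper."""
    if "multi_step" in edits:
        validate_multi_step(edits["multi_step"])
    from deeplabcut.utils import auxiliaryfunctions  # DLC 2.x, imported lazily

    return auxiliaryfunctions.edit_config(pose_cfg_path, edits)


# Hypothetical example edits; values are illustrative picks from the table's ranges.
EXAMPLE_EDITS = {
    "batch_size": 8,
    "rotation": 25,
    "multi_step": [[0.005, 10000], [0.02, 430000], [0.002, 730000], [0.001, 1030000]],
    "save_iters": 10000,
    "display_iters": 100,
}
```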

Table 2: Typical Performance Benchmarks Across Model Types (Example Data)

| Model / Dataset | Training Iterations | Train Error (pixels) | Test Error (pixels) | Inference Speed (FPS)* |

|---|---|---|---|---|

| ResNet-50 (Mouse, 8 parts) | 200,000 | 2.1 | 3.5 | 45 |

| ResNet-101 (Rat, 12 parts) | 400,000 | 1.8 | 3.1 | 32 |

| ResNet-50 + Augmentation | 200,000 | 2.5 | 3.3 | 45 |

| ResNet-101 + PAFs (2 mice) | 500,000 | 2.3 | 3.8 | 28 |

*FPS measured on NVIDIA GTX 1080 Ti.

Detailed Training Launch Protocol

Experimental Protocol: Launching Model Training

Pre-launch Verification:

- Confirm that the `dataset` entry in the shuffle's `pose_cfg.yaml` points to the correct training dataset (the `.mat` file under `training-datasets/`).

- Verify that the `project_path/dlc-models` directory contains the model folder with the generated `pose_cfg.yaml`.

- Ensure GPU drivers and CUDA/cuDNN libraries (for TensorFlow) are correctly installed (check with `nvidia-smi`).

Command Line Launch (Standard):

- Activate the correct Python environment (e.g., `conda activate DLC-GPU`).

- Navigate to the project directory.

- Execute the training command, `deeplabcut.train_network`, whose key arguments are `shuffle` (the shuffle number of the training dataset), `gputouse` (the GPU ID; `0` for the first GPU), and `max_snapshots_to_keep` (limits disk usage by pruning old snapshots).

Distributed/Headless Launch (for HPC clusters):

Create a Python script (`train_script.py`) that runs training without the GUI, then submit it via a job scheduler (e.g., SLURM) with requested GPU resources.
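A headless entry point for the cluster might look like the sketch below. It assumes DLC 2.x's keyword arguments for `train_network` and `evaluate_network` (`shuffle`, `gputouse`, `maxiters`, `max_snapshots_to_keep`, `Shuffles`, `plotting`). The command-line flags and their defaults are hypothetical choices.

```python
"""train_script.py: headless DeepLabCut training entry point (sketch)."""
import argparse


def parse_args(argv=None):
    p = argparse.ArgumentParser(description="Train a DeepLabCut model without the GUI.")
    p.add_argument("config", help="path to the project's config.yaml")
    p.add_argument("--shuffle", type=int, default=1)
    p.add_argument("--gpu", type=int, default=0, help="GPU ID passed as gputouse")
    p.add_argument("--maxiters", type=int, default=200000)
    p.add_argument("--keep", type=int, default=5, help="max_snapshots_to_keep")
    return p.parse_args(argv)


def main(argv=None):
    args = parse_args(argv)
    import deeplabcut  # imported after parsing so --help works without DLC

    deeplabcut.train_network(
        args.config,
        shuffle=args.shuffle,
        gputouse=args.gpu,
        maxiters=args.maxiters,
        max_snapshots_to_keep=args.keep,
    )
    # Evaluate right away so the job log records train/test error
    deeplabcut.evaluate_network(args.config, Shuffles=[args.shuffle], plotting=False)
```

Saved as `train_script.py` and ending with `if __name__ == "__main__": main()`, the script could be launched from a SLURM batch file that requests a GPU (for example `#SBATCH --gres=gpu:1`) with `python train_script.py /path/to/config.yaml --shuffle 1`.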

Monitoring Training:

- Console Output: Monitor the displayed loss (`loss`, `loss-l1`, `loss-l2`) every `display_iters` iterations. A steady decrease indicates proper learning.

- TensorBoard (Advanced): Launch TensorBoard pointing to the model directory to visualize loss curves, heatmap predictions, and the computational graph.

- Checkpoint Evaluation: Use saved snapshots (`snapshot-<iteration>`) for periodic evaluation on a labeled evaluation set using `deeplabcut.evaluate_network`. Which snapshots are evaluated is set by `snapshotindex` in `config.yaml` (`all` evaluates every saved snapshot).

Stopping Criteria:

- Primary: Loss plateaus over ~20,000-50,000 iterations.

- Secondary: Evaluation error (on a held-out set) ceases to improve.

- Typical training duration: 200,000 to 1,000,000 iterations (hours to days of compute, depending on the GPU).
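The primary stopping criterion can be checked from the training log. This sketch assumes the TensorFlow trainer's `learning_stats.csv` (rows of iteration, loss, and learning rate) in the shuffle's `train/` folder, so treat that layout as an assumption. The 20,000-iteration window and 2% threshold are illustrative choices, and the function names are hypothetical.

```python
import csv


def read_learning_stats(path):
    """Read (iteration, loss) pairs from a headerless iteration,loss,lr CSV."""
    with open(path, newline="") as fh:
        return [(int(float(row[0])), float(row[1])) for row in csv.reader(fh) if row]


def loss_plateaued(history, window=20000, min_rel_drop=0.02):
    """True if mean loss over the last `window` iterations fell by less than
    `min_rel_drop` (as a fraction) relative to the preceding window."""
    if not history:
        return False
    last_it = history[-1][0]
    recent = [loss for it, loss in history if it > last_it - window]
    previous = [loss for it, loss in history if last_it - 2 * window < it <= last_it - window]
    if not recent or not previous:
        return False  # not enough history yet
    prev_mean = sum(previous) / len(previous)
    recent_mean = sum(recent) / len(recent)
    return (prev_mean - recent_mean) / prev_mean < min_rel_drop
```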

Visualizing the Training Workflow & Logic

Diagram 1: Neural Network Training Loop Logic Flow

The Scientist's Toolkit: Key Reagent Solutions for DLC Training

Table 3: Essential "Research Reagent Solutions" for Training

| Item | Function & Purpose in the "Experiment" |

|---|---|

| Labeled Training Dataset (`.mat` file under `training-datasets/`) | The fundamental reagent. Stores the labeled frames' image paths and keypoint coordinates. Quality and diversity directly determine the model's performance ceiling. |

| Configuration File (`pose_cfg.yaml`) | The experimental protocol. Defines the model architecture, augmentation "treatments," and optimization "conditions." |

| Pre-trained Backbone Weights (ResNet, ImageNet) | Enables transfer learning. Provides generic visual feature detectors, drastically reducing required labeled data and training time compared to random initialization. |

| GPU Compute Resource (NVIDIA CUDA Cores) | The catalyst. Accelerates matrix operations in forward/backward passes by orders of magnitude, making deep network training feasible (hours/days vs. months). |

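| Optimizer "Solution" (SGD, Adam, RMSprop) | The mechanism for iterative weight updating. DLC's single-animal TensorFlow configuration defaults to SGD with momentum; multi-animal projects default to Adam, which adapts the learning rate per parameter for stable convergence. |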

| Data Augmentation Pipeline (Rotation, Scaling, Noise) | Synthetic data generation. Artificially expands training set variance, acting as a regularizer to prevent overfitting and improve model robustness. |

| Validation Dataset (Held-out labeled frames) | The quality control assay. Provides an unbiased metric (test error) to monitor generalization and determine the optimal stopping point. |

This guide details the critical phase of model evaluation and refinement within a DeepLabCut (DLC)-based pose estimation pipeline, as part of a broader thesis on advancing open-source tools for behavioral analysis in drug development. After network training, systematic assessment of model performance is paramount to ensure reliable, reproducible keypoint detection suitable for downstream scientific analysis.

Key Performance Metrics and Quantitative Analysis

Performance is evaluated using a suite of error metrics calculated on a held-out test dataset. The following table summarizes core quantitative measures.

Table 1: Core Performance Metrics for Pose Estimation Models

| Metric | Formula/Description | Interpretation | Typical Target (for lab animals) |

|---|---|---|---|

| Mean Test Error | (Σ ‖y_true - y_pred‖) / N, in pixels. | Average Euclidean distance between predicted and ground-truth keypoints. | < 5 pixels (or < body part length) |

| Train Error | Error calculated on the training set. | Indicates model learning capacity; a train error far below the test error suggests overfitting. | Slightly lower than test error. |

| Error with p-cutoff | Mean error over predictions whose likelihood exceeds `pcutoff` (set in `config.yaml`, 0.6 by default). | Accuracy of the predictions the model is confident about; DLC reports it alongside the unfiltered errors. | Close to, or below, the unfiltered error. |

| RMSE (Root Mean Square Error) | sqrt( mean( ‖y_true - y_pred‖² ) ) | Punishes larger errors more severely. | Comparable to Mean Test Error. |

| Accuracy @ Threshold | % of predictions within t pixels of truth. | Fraction of "correct" predictions given a tolerance. | e.g., >95% @ t=5px |
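The mean error, RMSE, and accuracy-at-threshold metrics can be computed together from matched keypoints. This minimal sketch works on matched (x, y) pairs. It also shows why RMSE is never smaller than the mean error: squaring weights large misses more heavily. The function name is hypothetical.

```python
import math


def pose_errors(true_xy, pred_xy, threshold_px=5.0):
    """Mean Euclidean error, RMSE, and accuracy@threshold over matched keypoints.

    true_xy, pred_xy: equal-length lists of (x, y) pixel coordinates.
    """
    dists = [math.dist(t, p) for t, p in zip(true_xy, pred_xy)]
    n = len(dists)
    mean_err = sum(dists) / n
    rmse = math.sqrt(sum(d * d for d in dists) / n)
    accuracy = sum(d <= threshold_px for d in dists) / n
    return mean_err, rmse, accuracy
```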

Title: Model Evaluation Metrics Calculation Flow

Experimental Protocols for Evaluation

Protocol 3.1: Standard Train-Test Split Evaluation

- Data Preparation: After labeling, split the data into a training set (typically 95%) and a test set (5%) using DLC's `create_training_dataset` function, ensuring shuffled splits.

- Model Training: Train the network (e.g., ResNet-50, EfficientNet) on the training set until loss plateaus.

- Error Calculation: Use DLC's `evaluate_network` function to predict keypoints on the held-out test set. The toolbox reports the mean pixel error on the training and test sets, both for all predictions and for predictions whose likelihood exceeds `pcutoff` (optionally restricted to a subset of keypoints via `comparisonbodyparts`).

- Likelihood Check: Run `analyze_videos` on a labeled test video, then use `plot_trajectories` (trajectories and likelihood traces) and `extract_maps` (score maps) to confirm that low-likelihood predictions coincide with occlusions or genuinely ambiguous frames.
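DLC's reported error "with p-cutoff" restricts the average to confident predictions. The sketch below reproduces that idea on toy data. The default of 0.6 mirrors the default `pcutoff` in `config.yaml`. The function name is hypothetical, and DLC's exact boundary handling may differ.

```python
import math


def error_with_pcutoff(true_xy, pred_xy, likelihoods, pcutoff=0.6):
    """Mean Euclidean error (px) over all predictions and over those whose
    likelihood is above pcutoff (NaN if none pass)."""
    dists = [math.dist(t, p) for t, p in zip(true_xy, pred_xy)]
    kept = [d for d, lik in zip(dists, likelihoods) if lik > pcutoff]
    all_err = sum(dists) / len(dists)
    cut_err = sum(kept) / len(kept) if kept else float("nan")
    return all_err, cut_err
```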

Protocol 3.2: Iterative Refinement via Active Learning

This protocol is crucial for improving an initial model.

Table 2: Key Reagents & Tools for Iterative Refinement

| Item | Function/Description |

|---|---|

| DeepLabCut (v2.3+) | Core open-source toolbox for model training, evaluation, and label refinement. |

| Labeled Video Dataset | The core input: videos with human-annotated keypoints for training and testing. |

| Extracted Frames | Subsampled video frames used for labeling and network input. |

| Scoring File (`*.h5`) | File containing model predictions for new frames. |

| Refinement GUI | DLC's graphical interface for correcting low-confidence predictions. |

| High-Performance GPU | (e.g., NVIDIA RTX A6000, V100) Essential for efficient model retraining. |

Title: Iterative Model Refinement Loop

- Initial Evaluation: Run the initial model on a diverse set of videos (not just the test set). Use DLC's `analyze_videos` and plot likelihood distributions to identify frames with low prediction confidence.

- Frame Extraction: Extract a new set of frames where the model is most uncertain or made clear errors, using `extract_outlier_frames` (e.g., `outlieralgorithm='uncertain'` for low-likelihood frames or `'jump'` for implausible displacements).

- Active Learning Labeling: Load these frames and the model's predictions into the DLC GUI (`refine_labels`). Manually correct erroneous predictions, effectively creating new ground-truth data.

- Dataset Merging and Retraining: Merge the newly labeled frames with the original training dataset (`merge_datasets`), create a new training dataset (`create_training_dataset`), then retrain the model from a pre-trained state (transfer learning).

- Re-evaluation: Repeat Protocol 3.1 on the updated test set. Iterate steps 1-4 until mean test error plateaus and meets the target threshold.
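One refinement round, and the decision to run another, can be sketched as follows. The DLC calls are DLC 2.x functions, and `refine_labels` opens the GUI. `refinement_status` is a hypothetical helper whose 5 px target and 0.2 px minimum gain are illustrative thresholds.

```python
def refinement_round(config_path, videos, shuffle=1):
    """Analyze, pull uncertain frames, correct them, and rebuild the training set.

    Retraining (train_network) and re-evaluation then follow as in Protocol 3.1.
    """
    import deeplabcut  # DLC 2.x, imported lazily

    deeplabcut.analyze_videos(config_path, videos, shuffle=shuffle)
    deeplabcut.extract_outlier_frames(
        config_path, videos, shuffle=shuffle,
        outlieralgorithm="uncertain", p_bound=0.01, automatic=True,
    )
    deeplabcut.refine_labels(config_path)   # GUI: correct the extracted frames
    deeplabcut.merge_datasets(config_path)  # add corrected frames to the labeled data
    deeplabcut.create_training_dataset(config_path)


def refinement_status(test_errors_px, target_px=5.0, min_gain_px=0.2):
    """Classify the loop state from the test error recorded after each round."""
    if test_errors_px and test_errors_px[-1] <= target_px:
        return "target reached"
    if len(test_errors_px) >= 2 and test_errors_px[-2] - test_errors_px[-1] < min_gain_px:
        return "plateau above target: revisit labels or data diversity"
    return "continue"
```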

Interpreting Results and Troubleshooting

Table 3: Common Performance Issues and Refinement Actions

| Symptom | Potential Cause | Corrective Action |

|---|---|---|

| High Train & Test Error | Underfitting, insufficient training data, overly simplified network. | Increase network capacity (deeper net), augment training data, train for more iterations. |

| Low Train Error, High Test Error | Overfitting to the training set. | Increase data augmentation (scaling, rotation, lighting), add dropout, use weight regularization, gather more diverse training data. |

| High Error for Specific Keypoints | Keypoint is occluded, ambiguous, or poorly represented in data. | Perform targeted active learning for frames containing that keypoint, review labeling guidelines. |

| High Likelihood but High Pixel Error | Predictions are confident and consistent but systematically offset from the true location. | Check for systematic labeling errors in the training set; refine labels. |

Title: Troubleshooting High Test Error

Rigorous evaluation and iterative refinement form the bedrock of generating robust pose estimation models with DeepLabCut. By systematically quantifying error through train-test splits, checking likelihood-filtered (p-cutoff) errors, and leveraging active learning for targeted improvement, researchers can produce models with the precision required for sensitive applications in neuroscience and pre-clinical drug development. This cyclical process of measure, diagnose, and refine ensures that the tool's output is a reliable foundation for subsequent behavioral biomarker discovery.

Within the ongoing research of the DeepLabCut open-source toolbox, Phase 5 represents a critical juncture moving from proof-of-concept analysis on single videos to robust, scalable pipelines for large-scale, reproducible science. This phase addresses the core computational and methodological challenges researchers face when deploying pose estimation in high-throughput settings common in modern behavioral neuroscience and preclinical drug development. This technical guide details the architectures, validation protocols, and data management strategies necessary for this scale-up.

Core Architectural Challenges in Scaling

Scaling DeepLabCut from single videos to large datasets involves overcoming bottlenecks in data storage, computational throughput, and analysis reproducibility.

Quantitative Comparison of Scaling Approaches

Table 1: Comparison of Data Management and Processing Strategies for Large-Scale Pose Estimation

| Strategy | Description | Throughput (Videos/Hr)* | Storage Impact | Best For |

|---|---|---|---|---|

| Local Storage & Processing | Single workstation with attached storage. | 10-50 (GPU dependent) | High local redundancy | Single-lab, initial pilots. |

| Network-Attached Storage (NAS) | Centralized storage with multiple compute nodes. | 50-200 | Efficient, single source of truth | Mid-sized consortia, standardized protocols. |

| High-Performance Computing (HPC) | Cluster with job scheduler (SLURM, PBS). | 200-1000+ | Requires managed parallel I/O | Institution-wide, batch processing. |

| Cloud-Based Pipelines | Elastic compute (AWS, GCP) with object storage. | Scalable on-demand | Pay-per-use, high durability | Multi-site collaborations, burst compute. |

| Distributed Edge Processing | Lightweight analysis at acquisition sites. | Variable | Distributed, requires sync | Large-scale phenotyping across labs. |

*Throughput estimates for inference (not training) using a ResNet-50-based DeepLabCut model on 1024x1024 video at 30 fps. Actual performance depends on hardware, video resolution, and frame rate.

Workflow for Large-Scale Deployment

The transition requires a structured workflow encompassing data ingestion, model deployment, result aggregation, and quality control.

Title: Workflow for Scaling DeepLabCut to Large Video Datasets
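For HPC deployment, one common pattern is a SLURM array job in which each task analyzes one video from a fixed manifest. This sketch makes two assumptions. First, outputs follow DLC's default `<video stem>DLC*.h5` naming in the video's folder. Second, the manifest is written once before submission, so task indices stay stable while other tasks finish. The function names are hypothetical.

```python
import os
from pathlib import Path


def pending_videos(video_dir, pattern="*.mp4"):
    """Videos in video_dir that have no '<stem>DLC*.h5' output next to them."""
    video_dir = Path(video_dir)
    return sorted(
        v for v in video_dir.glob(pattern)
        if not any(video_dir.glob(f"{v.stem}DLC*.h5"))
    )


def write_manifest(videos, manifest_path):
    """Freeze the work list once, before submitting the array job."""
    Path(manifest_path).write_text("\n".join(str(v) for v in videos) + "\n")


def video_for_task(manifest_path, task_id=None):
    """Video assigned to this array task (SLURM sets SLURM_ARRAY_TASK_ID)."""
    if task_id is None:
        task_id = int(os.environ["SLURM_ARRAY_TASK_ID"])
    return Path(manifest_path).read_text().splitlines()[task_id]
```

Each task would then call `deeplabcut.analyze_videos(config_path, [video])`. Submitting with `sbatch --array=0-N`, where N is the number of videos minus one, assigns one index per task.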

Experimental Protocols for Validation at Scale

Rigorous validation is paramount when generating large pose-estimation datasets. The following protocols ensure reliability.

Protocol: Cross-Validation Across Subjects and Sessions

Objective: To assess model generalizability across individuals and time, preventing overfitting to specific subjects or recording conditions.

Methodology:

- Dataset Partitioning: For a dataset of N animals over S sessions, implement a leave-one-group-out scheme. Partitions include:

- Leave-One-Subject-Out: Train on N-1 animals, test on the held-out animal.

- Leave-One-Session-Out: Train on S-1 sessions, test on the held-out session.

- Model Training: Train a DeepLabCut model (e.g., ResNet-101 backbone) for each partition using the same hyperparameters (network stride, iterations, augmentation pipeline).

- Evaluation Metrics: Calculate the following on the test set:

- Mean Average Error (MAE) in pixels, relative to human-labeled ground truth.

- Percentage of Correct Keypoints (PCK) at a threshold of 5% of the animal's body length.

- Tracking Consistency: Frame-to-frame movement plausibility (velocity outliers).

- Statistical Reporting: Report mean ± standard deviation of MAE and PCK across all folds. A fold whose error is more than 15% worse than the across-fold mean indicates potential bias toward particular subjects or sessions.
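The partitioning and reporting steps can be sketched in plain Python. The helpers are hypothetical. In DLC 2.2+, the resulting index lists could be passed to `create_training_dataset` through its `trainIndices` and `testIndices` arguments (one list per shuffle). `flag_folds` applies the 15% rule relative to the across-fold mean.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each group.

    groups: one label per labeled frame (e.g. animal ID or session ID).
    """
    for group in sorted(set(groups)):
        test = [i for i, g in enumerate(groups) if g == group]
        train = [i for i, g in enumerate(groups) if g != group]
        yield group, train, test


def pck(dists_px, body_length_px, fraction=0.05):
    """Percentage of keypoints within fraction * body length of ground truth."""
    threshold = fraction * body_length_px
    return 100.0 * sum(d <= threshold for d in dists_px) / len(dists_px)


def flag_folds(fold_errors, tolerance=0.15):
    """Folds whose error exceeds the across-fold mean by more than `tolerance`."""
    mean = sum(fold_errors.values()) / len(fold_errors)
    return sorted(k for k, e in fold_errors.items() if e > mean * (1 + tolerance))
```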

Protocol: Assessing Computational Efficiency & Throughput

Objective: To benchmark pipeline components and identify bottlenecks for large datasets.

Methodology:

- Benchmark Setup: Use a standardized video clip (e.g., 10 min, 1920x1080, 30 fps) and a pre-trained DeepLabCut model.

- Component Timing: Instrument the code to log processing time for:

- Video I/O and frame decoding.

- Pre-processing (cropping, resizing).

- Model inference (forward pass).

- Post-processing (confidence filtering, smoothing).

- Data writing (CSV, HDF5).

- Scalability Test: Run the pipeline on 1, 10, 50, and 100 video copies in parallel on the target infrastructure (HPC cluster, cloud instance). Record total wall-clock time and compute resource utilization (GPU/CPU, RAM).

- Bottleneck Analysis: Identify the component whose share of total time grows fastest as more videos run in parallel (I/O often becomes the bottleneck).
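Component timing needs only a reusable timer. This sketch uses `time.perf_counter`, and the stage names are whatever your pipeline uses. `shares()` reports each stage's fraction of measured time, which is the quantity to watch as the number of parallel videos grows. The class name is hypothetical.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulate wall-clock time per pipeline stage (I/O, inference, ...)."""

    def __init__(self):
        self.totals = defaultdict(float)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            self.totals[name] += time.perf_counter() - start

    def shares(self):
        """Fraction of total measured time spent in each stage."""
        total = sum(self.totals.values())
        return {name: t / total for name, t in self.totals.items()}
```

Each step of the loop would be wrapped in a block such as `with timer.stage("decode"): ...`, and the shares compared across the 1-, 10-, 50-, and 100-video runs.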

Table 2: Benchmark Results for Inference Pipeline on Different Hardware

| Hardware Setup | Inference Time per Frame (ms) | FPS Achieved | Bottleneck Identified | Est. Cost per 1000 hrs Video* |
|---|---|---|---|---|
| Laptop (CPU: i7, No GPU) | 320 | ~3 | CPU Compute | N/A (Time prohibitive) |
| Workstation (Single RTX 3080) | 12 | ~83 | GPU Memory | N/A |
| HPC Node (4x A100 GPUs) | 3 | ~333 | Parallel File I/O | $$ |
| Cloud Instance (AWS p3.2xlarge) | 15 | ~67 | Data Transfer Egress | $$$ |

*Estimated cloud compute cost; does not include storage. $$ indicates moderate cost, $$$ indicates higher cost.
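The per-frame times in Table 2 translate directly into processing time for a large archive. The sketch below assumes 30 fps footage, that inference dominates (I/O ignored), and that the listed per-frame time is the effective throughput of the whole setup; `processing_hours` is a hypothetical helper.

```python
def processing_hours(video_hours, fps, ms_per_frame, n_streams=1):
    """Wall-clock hours of inference for `video_hours` of footage.
    Assumes inference dominates and `n_streams` independent workers share the load."""
    n_frames = video_hours * 3600 * fps
    return n_frames * (ms_per_frame / 1000.0) / n_streams / 3600.0

for setup, ms in [("Laptop (CPU only)", 320), ("Workstation (RTX 3080)", 12),
                  ("HPC node (4x A100)", 3), ("Cloud (p3.2xlarge)", 15)]:
    print(f"{setup:<24} {processing_hours(1000, 30, ms):>8.0f} h")
```

At 12 ms/frame, 1000 h of 30 fps video (108 million frames) works out to about 360 hours of inference; at 320 ms/frame on the CPU-only laptop it is about 9,600 hours, which is why that setup is marked time prohibitive.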

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools & Materials for Large-Scale Video Analysis with DeepLabCut

| Item / Solution | Function / Purpose | Example / Note |
|---|---|---|
| DeepLabCut Model Zoo | Repository of pre-trained models for common model organisms (mouse, rat, fly). | Reduces training time; provides baseline for transfer learning. |
| DLC2Kinematics | Post-processing toolbox for calculating velocities, accelerations, and angles from pose data. | Essential for deriving behavioral features. |
| SimBA | Simple Behavioral Analysis toolkit. | Used downstream for supervised behavioral classification of pose sequences. |
| Bonsai | Reactive visual programming environment for real-time acquisition and processing. | Can trigger recordings and run real-time DLC inference. |
| DataJoint | A relational data pipeline framework for neurophysiology and behavior. | Manages the entire pipeline from raw video to processed pose data in a MySQL database. |
| CVAT | Computer Vision Annotation Tool. | Web-based tool for efficient collaborative labeling of ground truth data at scale. |
| NWB (Neurodata Without Borders) | Standardized data format for storing behavioral and physiological data. | Supports FAIR data principles; allows integration with neural recordings. |
| Code Ocean / Whole Tale | Cloud-based reproducible research platforms. | Allows packaging of the complete DLC analysis environment for peer review and replication. |

Integrated Pipeline Architecture

A successful large-scale system integrates components for automated processing, quality control, and data management.

Figure: Architecture of an Integrated Large-Scale Pose Estimation Pipeline
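One automated quality-control gate in such a pipeline can be sketched as follows. The sketch assumes single-animal DLC output loaded into a pandas DataFrame with a `(scorer, bodyparts, coords)` column MultiIndex; the likelihood cutoff (0.6) and the 10% tolerance are illustrative assumptions, and both function names are hypothetical. Videos that fail would be routed to review or relabeling instead of downstream feature extraction.

```python
import numpy as np
import pandas as pd

def likelihood_table(dlc_df):
    """Extract likelihood columns from a single-animal DLC output DataFrame
    (assumes the (scorer, bodyparts, coords) column MultiIndex)."""
    lik = dlc_df.xs("likelihood", level="coords", axis=1)
    return lik.to_numpy(), list(lik.columns.get_level_values("bodyparts"))

def qc_video(likelihood, bodyparts, p_cutoff=0.6, max_low_frac=0.10):
    """Flag body parts whose fraction of low-confidence frames exceeds max_low_frac."""
    low_frac = (np.asarray(likelihood) < p_cutoff).mean(axis=0)
    failed = [bp for bp, f in zip(bodyparts, low_frac) if f > max_low_frac]
    return {
        "passed": not failed,
        "failed_bodyparts": failed,
        "low_conf_fraction": dict(zip(bodyparts, low_frac.round(3).tolist())),
    }
```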

Scaling DeepLabCut from single videos to large datasets necessitates a shift from a standalone analysis tool to an integrated, automated pipeline. Success in Phase 5 is measured not only by the accuracy of keypoint predictions but by the throughput, reproducibility, and FAIRness of the entire data generation process. By adopting standardized validation protocols, leveraging scalable computing architectures, and utilizing the growing ecosystem of companion tools, researchers can robustly generate high-quality pose data at scale. This capability is foundational for large-scale behavioral phenotyping in neuroscience and the development of quantitative digital biomarkers in preclinical drug discovery.

This chapter details the critical post-processing phase following pose estimation with DeepLabCut (DLC). While DLC provides accurate anatomical keypoint coordinates, raw trajectories are inherently noisy. Direct analysis can lead to misinterpretation of animal behavior. This phase transforms raw coordinates into biologically meaningful, quantitative descriptors ready for hypothesis testing in neuroscience, pharmacology, and drug development.

Trajectory Smoothing and Denoising

Raw DLC outputs contain high-frequency jitter from prediction variance and occasional outliers (jumps). Smoothing is essential before deriving velocity and acceleration, because numerical differentiation amplifies high-frequency noise.

Core Methods:

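- Savitzky-Golay Filter: Fits a low-order polynomial within a sliding window, which suppresses jitter while preserving peak shape (e.g., acceleration peaks) better than a simple moving average. Ideal for kinematic data.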

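- Kalman Filter: Well suited to online (causal) filtering and to bridging missing frames. It combines a model of measurement noise with a model of the expected dynamics (e.g., constant velocity) and is optimal under linear-Gaussian assumptions.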

- Median Filter (for outlier removal): Effective for removing large, single-frame jumps without distorting the overall trajectory.
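The Kalman option can be sketched as a minimal constant-velocity filter for one coordinate stream. This is a causal filter only (no backward smoothing pass), `kalman_cv_1d` is a hypothetical name, and the noise parameters `q` (process noise) and `r` (measurement variance in px²) are illustrative assumptions that would need tuning to the frame rate and labeling noise.

```python
import numpy as np

def kalman_cv_1d(z, dt=1.0, q=1.0, r=4.0):
    """Causal constant-velocity Kalman filter for one coordinate stream.
    z: 1D measurements; NaN entries are treated as missing (predict-only step)."""
    z = np.asarray(z, dtype=float)
    F = np.array([[1.0, dt], [0.0, 1.0]])        # state: [position, velocity]
    H = np.array([[1.0, 0.0]])                   # only position is observed
    Q = q * np.array([[dt**3 / 3, dt**2 / 2],    # white-noise acceleration model
                      [dt**2 / 2, dt]])
    R = np.array([[r]])
    valid = np.flatnonzero(~np.isnan(z))
    if valid.size == 0:
        return np.full_like(z, np.nan)
    x = np.array([z[valid[0]], 0.0])             # start at first valid measurement
    P = np.eye(2) * 1e3                          # large initial uncertainty
    out = np.empty_like(z)
    for t in range(z.size):
        # Predict
        x = F @ x
        P = F @ P @ F.T + Q
        # Update only when a measurement is available
        if not np.isnan(z[t]):
            y = z[t] - H @ x                     # innovation, shape (1,)
            S = H @ P @ H.T + R                  # innovation covariance, (1, 1)
            K = P @ H.T / S                      # Kalman gain, (2, 1)
            x = x + K @ y
            P = (np.eye(2) - K @ H) @ P
        out[t] = x[0]
    return out
```

During gaps the filter simply propagates the constant-velocity prediction, which is why it can bridge short dropouts; for offline analysis, a backward (Rauch-Tung-Striebel) pass would typically be added.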

Experimental Protocol: Smoothing Pipeline

- Input: NumPy array or Pandas DataFrame of 2D/3D coordinates from DLC (x, y, [z], likelihood).

- Likelihood Thresholding: Set a threshold (e.g., 0.95). Mark coordinates below the threshold as `NaN`.

- Outlier Correction: Apply a 1D median filter with a window of 5 frames to each coordinate stream.

- Gap Interpolation: Use linear interpolation for small gaps (<10 frames) of `NaN` values.

- Primary Smoothing: Apply a Savitzky-Golay filter (window length = 9, polynomial order = 3) to the interpolated data.

- Output: Smoothed, continuous trajectories for all keypoints.

Figure: Smoothing workflow for DLC data
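The smoothing protocol can be sketched for a single coordinate stream (x or y of one keypoint) with NumPy, pandas, and SciPy. Several choices here are assumptions rather than a prescribed implementation: a NaN-tolerant pandas rolling median stands in for the median filter (the NaN mask is re-applied afterwards so gaps are not silently filled), only interior gaps shorter than `max_gap` frames are interpolated, and the Savitzky-Golay filter runs segment by segment so that long gaps stay `NaN` instead of spreading. Function names are hypothetical.

```python
import numpy as np
import pandas as pd
from scipy.signal import savgol_filter

def interpolate_short_gaps(x, max_gap=10):
    """Linearly interpolate interior NaN runs shorter than max_gap frames."""
    isna = np.isnan(x)
    run_id = np.cumsum(np.r_[True, isna[1:] != isna[:-1]])            # label consecutive runs
    run_len = np.bincount(run_id, weights=isna.astype(float))[run_id]  # NaN-run length per frame
    filled = pd.Series(x).interpolate(method="linear", limit_area="inside").to_numpy(copy=True)
    filled[isna & (run_len >= max_gap)] = np.nan                       # keep long gaps missing
    return filled

def savgol_by_segment(x, window=9, order=3):
    """Savitzky-Golay smoothing of each contiguous non-NaN segment long enough for the window."""
    out = x.copy()
    valid = ~np.isnan(x)
    edges = np.flatnonzero(np.diff(np.r_[0, valid.astype(int), 0]))
    for start, stop in zip(edges[::2], edges[1::2]):
        if stop - start >= window:
            out[start:stop] = savgol_filter(x[start:stop], window, order)
    return out

def smooth_coordinate(coord, likelihood, p_thresh=0.95, median_window=5,
                      max_gap=10, sg_window=9, sg_order=3):
    # 1) Likelihood thresholding: low-confidence predictions become NaN
    masked = np.where(np.asarray(likelihood) >= p_thresh,
                      np.asarray(coord, dtype=float), np.nan)
    # 2) Outlier correction: NaN-aware rolling median, then restore the NaN mask
    med = (pd.Series(masked).rolling(median_window, center=True, min_periods=1)
           .median().to_numpy(copy=True))
    med[np.isnan(masked)] = np.nan
    # 3) Gap interpolation for short gaps only
    filled = interpolate_short_gaps(med, max_gap)
    # 4) Primary smoothing
    return savgol_by_segment(filled, sg_window, sg_order)
```

The function would be applied to each x, y (and z) column of each keypoint, paired with that keypoint's likelihood column.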

Feature Extraction

This step converts smoothed trajectories into behavioral features. Features can be kinematic (motion-based) or postural (shape-based).

Table 1: Core Extracted Behavioral Features

| Feature Category | Specific Feature | Calculation (Discrete) | Biological/Drug Screening Relevance |
|---|---|---|---|
| Kinematic | Velocity (Body Center) | ΔPosition / ΔTime | Locomotor activity, sedation, agitation. |
| Kinematic | Acceleration | ΔVelocity / ΔTime | Movement initiation, vigor. |
| Kinematic | Movement Initiation | Velocity > threshold for t > min_duration | Bradykinesia, psychomotor retardation. |
| Kinematic | Freezing | Velocity < threshold for t > min_duration | Fear, anxiety, catalepsy. |
| Postural | Distance (Nose-Tail Base) | Euclidean distance | Body elongation, stretching. |
| Postural | Spine Curvature | Angle between vectors (e.g., neck-hip, hip-tail) | Rigidity, posture in pain models. |
| Postural | Paw Reach Amplitude | Peak vertical displacement of the forepaw (image y-coordinates increase downward) | Skilled motor function, stroke recovery. |
| Dynamic | Gait Stance/Swing Ratio | (Paw on ground time) / (Paw in air time) | Motor coordination, ataxia, Parkinsonism. |

Experimental Protocol: Feature Extraction from Paw Data

- Define Keypoints: Identify `forepaw_L`, `forepaw_R`, `hindpaw_L`, `hindpaw_R`, `snout`, and `tail_base`.

- Calculate Body Center: Median of `snout`, `tail_base`, and hip keypoints.

- Compute Kinematics: Apply finite differences to the body-center coordinates to obtain velocity and acceleration.

- Extract Postural Features: For each frame, compute all distances and angles of interest (e.g., inter-paw distances, back angles).

- Event Detection: Apply thresholds to the derived time series (e.g., velocity < 2 cm/s for > 500 ms = freezing bout), after converting pixels to centimeters with a calibration factor.

Figure: Hierarchy of feature extraction from trajectories
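The feature-extraction steps can be sketched with NumPy. The helpers below are hypothetical; they assume smoothed keypoint arrays of shape (n_frames, 2) in pixels, a known frame rate, and a pixel-to-centimeter calibration factor `px_per_cm`. Velocity uses central differences via `np.gradient`.

```python
import numpy as np

def body_center(*points):
    """Per-frame median of several (n_frames, 2) keypoint arrays, ignoring NaN."""
    return np.nanmedian(np.stack(points, axis=0), axis=0)

def speed_cm_s(xy, fps, px_per_cm):
    """Frame-wise speed in cm/s from (n_frames, 2) pixel coordinates."""
    v = np.gradient(xy, 1.0 / fps, axis=0) / px_per_cm   # finite-difference velocity
    return np.linalg.norm(v, axis=1)

def joint_angle_deg(a, b, c):
    """Per-frame angle at b (degrees) between vectors b->a and b->c."""
    u, w = a - b, c - b
    cos = np.sum(u * w, axis=1) / (np.linalg.norm(u, axis=1) * np.linalg.norm(w, axis=1))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

def detect_bouts(mask, fps, min_duration_s):
    """(start, stop) frame indices of runs where mask is True for longer than min_duration_s."""
    edges = np.flatnonzero(np.diff(np.r_[0, np.asarray(mask).astype(int), 0]))
    return [(int(s), int(e)) for s, e in zip(edges[::2], edges[1::2])
            if (e - s) / fps > min_duration_s]
```

A freezing detector would then be `detect_bouts(speed_cm_s(center, fps, px_per_cm) < 2.0, fps, 0.5)`; the `stop` index is exclusive.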

Statistical Analysis for Drug Development

The final step links features to experimental conditions (e.g., drug dose, genotype).

Core Analytical Frameworks:

- Dose-Response Analysis: Fit Hill curves to feature means (e.g., total distance moved vs. log[dose]) to estimate EC₅₀/ED₅₀.

- Multivariate Analysis: Principal Component Analysis (PCA) or t-SNE to visualize global behavioral state. Linear Discriminant Analysis (LDA) to classify treatment groups.

- Time-Series Analysis: Compare feature evolution post-treatment (e.g., kinetics of drug effect) using mixed-effects models.

- Bout Analysis: Analyze structure of discrete behaviors (e.g., grooming bouts) for frequency, duration, and sequential patterning (Markov models).
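The bout structure and first-order Markov transitions can be sketched as follows. The sketch assumes per-frame behavior labels already exist (e.g., from a downstream classifier); consecutive identical frames are merged into one bout, so self-transitions are zero by construction. Function names are hypothetical.

```python
import numpy as np

def run_length_bouts(labels):
    """Collapse per-frame labels into (label, start_frame, n_frames) bouts."""
    bouts = []
    for i, lab in enumerate(labels):
        if bouts and bouts[-1][0] == lab:
            label, start, n = bouts[-1]
            bouts[-1] = (label, start, n + 1)
        else:
            bouts.append((lab, i, 1))
    return bouts

def bout_transition_matrix(labels):
    """First-order transition probabilities between successive bouts."""
    states = sorted(set(labels))
    index = {s: k for k, s in enumerate(states)}
    seq = [b[0] for b in run_length_bouts(labels)]
    counts = np.zeros((len(states), len(states)))
    for a, b in zip(seq[:-1], seq[1:]):
        counts[index[a], index[b]] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows with no outgoing transitions stay all-zero instead of dividing by zero
    probs = np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
    return states, probs
```

Bout frequency and duration follow directly from `run_length_bouts` (count bouts per label and divide `n_frames` by the frame rate).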

Table 2: Statistical Tests for Common Experimental Designs in Drug Screening

| Experimental Design | Primary Question | Recommended Statistical Test | Post-Hoc / Modeling |
|---|---|---|---|
| Two-Group (e.g., Vehicle vs. Drug) | Does the drug alter feature X? | Independent t-test (parametric) or Mann-Whitney U (non-parametric) | Calculate Cohen's d for effect size. |
| >2 Groups (Multiple Doses) | Is there a dose-dependent effect? | One-way ANOVA or Kruskal-Wallis test | Dunnett's test after ANOVA, or Dunn's test after Kruskal-Wallis (vs. control). Fit sigmoidal dose-response. |
| Longitudinal (Repeated Measures) | How does behavior change over time post-dose? | Two-way repeated-measures ANOVA (Time × Treatment) or linear mixed-effects model | Bonferroni post-tests. Model kinetics. |
| Multivariate Phenotyping | Can treatments be distinguished by all features? | PCA for visualization, LDA for classification | Report loadings and cross-validated classification accuracy. |
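The two-group row of Table 2 can be sketched with SciPy. `compare_two_groups` and `cohens_d` are hypothetical helpers; the sketch uses Student's t-test (equal variances assumed) or a two-sided Mann-Whitney U test, and Cohen's d with a pooled standard deviation. Choosing between the parametric and non-parametric test (e.g., after checking normality) is left to the analyst.

```python
import numpy as np
from scipy import stats

def cohens_d(treated, control):
    """Cohen's d using the pooled (ddof=1) standard deviation."""
    treated = np.asarray(treated, dtype=float)
    control = np.asarray(control, dtype=float)
    n1, n2 = treated.size, control.size
    pooled_var = ((n1 - 1) * treated.var(ddof=1) + (n2 - 1) * control.var(ddof=1)) / (n1 + n2 - 2)
    return (treated.mean() - control.mean()) / np.sqrt(pooled_var)

def compare_two_groups(treated, control, parametric=True):
    """Per-animal feature values for drug vs. vehicle -> test statistic, p-value, effect size."""
    if parametric:
        res = stats.ttest_ind(treated, control)    # Student's t-test, equal variances
    else:
        res = stats.mannwhitneyu(treated, control, alternative="two-sided")
    return {"statistic": float(res.statistic), "p_value": float(res.pvalue),
            "cohens_d": float(cohens_d(treated, control))}
```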

Experimental Protocol: Dose-Response Analysis

- Feature Aggregation: For each animal, calculate the mean of a primary feature (e.g., velocity) during a defined post-treatment epoch.

- Group Means: Calculate mean ± SEM for each dose group (n=8-12 animals).

- Curve Fitting: Fit a four-parameter logistic (4PL) Hill function, where $X = \log_{10}(\text{dose})$:

$$Y = \mathrm{Bottom} + \frac{\mathrm{Top} - \mathrm{Bottom}}{1 + 10^{(\log_{10}\mathrm{EC}_{50} - X)\cdot \mathrm{HillSlope}}}$$

- Parameter Estimation: Extract `EC50`, `HillSlope`, and efficacy (`Top - Bottom`) with 95% confidence intervals from the model fit.

- Visualization: Plot raw data points, group means, and the fitted curve.
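The 4PL fit can be sketched with `scipy.optimize.curve_fit`. This is an illustration under stated assumptions: confidence intervals are Wald-type intervals from the covariance matrix with a t critical value (profile-likelihood intervals, as in some dedicated software, would differ), and the starting values are a simple heuristic. For decreasing responses (e.g., sedation), the fit may return a negative `hill_slope` or swapped `bottom`/`top`; both describe the same curve. Function names are hypothetical.

```python
import numpy as np
from scipy import stats
from scipy.optimize import curve_fit

def hill_4pl(x, bottom, top, log_ec50, hill_slope):
    """Four-parameter logistic with x = log10(dose)."""
    return bottom + (top - bottom) / (1.0 + np.power(10.0, (log_ec50 - x) * hill_slope))

def fit_dose_response(doses, responses, ci=0.95):
    """Fit the 4PL model; return {param: (estimate, ci_low, ci_high)}."""
    x = np.log10(np.asarray(doses, dtype=float))
    y = np.asarray(responses, dtype=float)
    p0 = [y.min(), y.max(), np.median(x), 1.0]              # heuristic starting values
    popt, pcov = curve_fit(hill_4pl, x, y, p0=p0, maxfev=10000)
    se = np.sqrt(np.diag(pcov))
    dof = max(y.size - popt.size, 1)
    tcrit = stats.t.ppf(0.5 + ci / 2, dof)
    names = ["bottom", "top", "log_ec50", "hill_slope"]
    return {n: (p, p - tcrit * s, p + tcrit * s) for n, p, s in zip(names, popt, se)}
```

`EC50` (or ED₅₀ for in vivo doses) is then `10 ** result["log_ec50"][0]`, and efficacy is `top - bottom`. Fitting per-animal values rather than group means keeps the between-animal variability in the interval estimates.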

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for DLC Post-Processing

| Item (Software/Package) | Function | Key Application in Phase 6 |
|---|---|---|
| SciPy (signal.savgol_filter, interpolate) | Signal processing and interpolation. | Implementation of Savitzky-Golay smoothing and gap filling. |
| Pandas DataFrames | Tabular data structure. | Organizing keypoint coordinates, likelihoods, and derived features. |
| NumPy | Core numerical operations. | Efficient calculation of distances, angles, and velocities via vectorization. |
| statsmodels / scikit-posthocs | Advanced statistical testing. | Running ANOVA with correct post-hoc comparisons (e.g., Dunnett's). |
| Nonlinear curve fitting (e.g., SciPy, GraphPad Prism) | Dose-response modeling. | Fitting Hill equation to derive EC₅₀ and efficacy. |
| scikit-learn | Multivariate analysis. | Performing PCA and LDA for behavioral phenotyping. |
| Bonsai-Rx / DeepLabCut-Live! | Real-time processing. | Advanced: Online smoothing and feature extraction for closed-loop experiments. |

Optimizing DeepLabCut: Advanced Troubleshooting for Accuracy, Speed, and Reliability

In research built on the DeepLabCut (DLC) open-source pose estimation toolbox, the success of behavioral analysis in neuroscience and drug development depends on the performance of the trained neural networks. Models must generalize to new, unseen video data from different experimental sessions, animals, or lighting conditions. This technical guide details how to diagnose and remedy three core training failures in the DLC pipeline (overfitting, underfitting, and poor generalization), providing researchers and drug development professionals with actionable protocols.

Core Concepts and Diagnostics

Defining Failures in the DLC Context

- Overfitting: The model fits the training frames too closely, including label noise and frame-specific details, so error is low on training frames but high on the held-out test set and on novel videos. The typical signature is a low training error combined with a high test error.

- Underfitting: The model fails to capture the underlying patterns of the pose data. It performs poorly on both training and test sets, typically due to insufficient model capacity or inadequate training.

- Poor Generalization: The model performs adequately on the standard test split but fails when deployed on videos from new experimental conditions (e.g., different cohort, cage type, or camera angle). This is a critical failure mode for real-world scientific application.

Quantitative Diagnostics

Key metrics come from the train and test pixel errors reported by DLC's evaluate_network function and from DLC's plotting utilities.

Table 1: Key Diagnostic Metrics from DeepLabCut Training

| Metric | Source (DLC Function/Analysis) | Typical Underfitting Profile | Typical Overfitting Profile | Target for Generalization |
|---|---|---|---|---|
| Train Error (px) | evaluate_network | High (>10-15px, depends on scale) | Very Low (<2-5px) | Slightly below test error |
| Test Error (px) | evaluate_network | High (>10-15px) | High (>10-15px) | Low, minimized |
| Train-Test Gap | Difference of above | Small (model is equally poor on both) | Large (>5-8px) | Small (<3-5px) |
| Learning Curves | plot_utils.plot_training_loss | Plateaued at high loss | Training loss ↓, validation loss ↑ after a point | Both curves decrease and stabilize close together |
| PCK@Threshold | plotting.plot_heatmaps, plotting.plot_labeled_frame | Low across thresholds | High on train, low on test | High on both train and test sets |

Figure: Diagnostic Workflow for DLC Training Failures
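The diagnostic logic of Table 1 can be sketched as a small decision function. The thresholds are illustrative defaults loosely taken from the table and depend on image resolution and animal size; `new_condition_err_px` assumes the user has labeled a few frames from videos recorded under new conditions (different cohort, cage, or camera). The function name and its labels are hypothetical.

```python
def diagnose(train_err_px, test_err_px, new_condition_err_px=None,
             high_err_px=10.0, max_gap_px=5.0, max_shift_ratio=1.5):
    """Classify a trained model from pixel errors, following the profiles in Table 1."""
    if train_err_px >= high_err_px:
        return "underfitting"            # cannot even fit the training frames
    if test_err_px - train_err_px > max_gap_px:
        return "overfitting"             # large train-test gap
    if test_err_px >= high_err_px:
        return "underfitting"            # similarly mediocre on both splits
    if new_condition_err_px is not None and new_condition_err_px > max_shift_ratio * test_err_px:
        return "poor generalization"     # fine on test split, worse on new conditions
    return "ok"
```

The order of checks mirrors the workflow: training error first, then the train-test gap, and only then performance on videos from new experimental conditions.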

Experimental Protocols for Remediation

Protocol A: Mitigating Overfitting

Objective: Increase model regularization to reduce reliance on training-specific features.