Mastering DeepLabCut: A Comprehensive Guide to Refining Your Training Dataset for Reliable Behavioral Analysis

This guide provides a systematic framework for researchers, scientists, and drug development professionals to refine DeepLabCut training datasets.

Abstract

This guide provides a systematic framework for researchers, scientists, and drug development professionals to refine DeepLabCut training datasets. It covers foundational principles for dataset assembly, practical methodologies for annotation and training, advanced troubleshooting techniques for low-accuracy models, and robust validation strategies. The article equips users with the knowledge to produce high-performing pose estimation models, ensuring the reproducibility and validity of behavioral data in preclinical research.

Laying the Groundwork: Core Principles of an Effective DeepLabCut Training Set

Troubleshooting Guides & FAQs

Q1: During DeepLabCut (DLC) training, my model's loss plateaus at a high value and does not decrease, even after many iterations. What dataset issues could be causing this?

A: This is a classic sign of poor dataset quality. Common root causes include:

- Insufficient Variability: The training set lacks diversity in animal posture, lighting, camera angles, or experimental conditions present in your actual videos.

- Inconsistent Labeling: Human error or ambiguity in defining body parts leads to noisy ground truth labels. A key metric is the inter-rater reliability score; a score below the 0.85 target listed in the metrics table under Q3 indicates problematic labels.

- Class Imbalance: Certain poses or viewpoints are dramatically underrepresented.

- Low-Resolution or Blurry Frames: The network cannot extract meaningful features from poor-quality images.

Protocol for Diagnosis & Correction:

- Compute Label Consistency: Use DLC's `evaluate_network` function to compare the network's predictions against your labeled frames, then manually review the frames with the highest prediction error.

- Augmentation Check: Ensure you are using appropriate data augmentation during training (e.g., rotation=30, shear=10, scaling=.2). If performance is poor under specific conditions, choose augmentations that mimic those conditions.

- Refine the Dataset: Remove ambiguous frames or re-label them with multiple annotators to reach consensus. Actively add new, diverse frames from problematic videos to your training set.
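As a minimal sketch of the "review frames with the highest prediction error" step, the snippet below ranks labeled frames by mean pixel error. It is plain Python and not part of DeepLabCut: the function name `rank_frames_by_error` and the dictionary layout are assumptions made for illustration. In practice the coordinates would come from your labeled data and from the network's predictions on those same frames.

```python
import math


def rank_frames_by_error(labels, predictions, top_k=5):
    """Return [(frame_name, mean_error_px), ...] for the top_k worst frames.

    labels / predictions: dict frame_name -> dict bodypart -> (x, y) or None.
    Keypoints missing from either side are skipped.
    """
    scored = []
    for frame, truth in labels.items():
        errors = []
        for part, xy in truth.items():
            pred = predictions.get(frame, {}).get(part)
            if xy is None or pred is None:
                continue  # not labeled or not predicted: nothing to compare
            errors.append(math.dist(xy, pred))
        if errors:
            scored.append((frame, sum(errors) / len(errors)))
    # Largest mean error first, so the frames most worth reviewing come first.
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    labels = {"f1": {"nose": (0, 0), "tail": (10, 0)}, "f2": {"nose": (5, 5)}}
    preds = {"f1": {"nose": (3, 4), "tail": (10, 0)}, "f2": {"nose": (5, 6)}}
    for name, err in rank_frames_by_error(labels, preds):
        print(f"{name}: {err:.2f} px")
```

Frames at the top of this ranking are the first candidates for manual review, since a large error can reflect either a model failure or an incorrect label.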

Q2: My DLC model generalizes poorly to new experimental cohorts or slightly different laboratory setups. How can I improve dataset robustness?

A: This indicates a lack of domain shift robustness in your training data. The dataset is likely overfitted to the specific conditions of the initial videos.

Protocol for Creating a Generalizable Dataset:

- Multi-Cohort/Setup Inclusion: From the project's inception, incorporate video data from at least 3 different animal cohorts, different times of day, and at least 2 slightly different hardware setups (e.g., different cameras, cage types).

- Strategic Frame Selection: Run the network on new videos and use DLC's `extract_outlier_frames` function to pull frames where the prediction is uncertain (low likelihood). Add these outlier frames to the training set and re-label them.

- Quantitative Validation: Hold out one entire experimental cohort or setup as a test set. Monitor performance metrics specifically on this held-out data to gauge generalizability.
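The held-out cohort idea can be sketched in a few lines of plain Python. The helper name `hold_out_cohort`, the cohort labels, and the file names below are hypothetical; the only point is that every video from one cohort or setup is kept out of training so that the test error reflects a genuinely unseen condition.

```python
def hold_out_cohort(video_cohorts, held_out):
    """Split videos into (train, test) lists by holding out one whole cohort.

    video_cohorts: dict video_path -> cohort (or setup) label.
    """
    train = sorted(v for v, c in video_cohorts.items() if c != held_out)
    test = sorted(v for v, c in video_cohorts.items() if c == held_out)
    if not test:
        raise ValueError(f"no videos found for cohort {held_out!r}")
    if not train:
        raise ValueError("holding out this cohort leaves no training videos")
    return train, test


if __name__ == "__main__":
    videos = {
        "cohortA_m1.mp4": "A",
        "cohortA_m2.mp4": "A",
        "cohortB_m1.mp4": "B",
        "cohortC_m1.mp4": "C",
    }
    train, test = hold_out_cohort(videos, held_out="C")
    print("train:", train)
    print("test:", test)
```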

Q3: What are the key quantitative metrics to track for dataset quality, and what are their target values?

A: Track these metrics throughout the dataset refinement cycle.

| Metric | Description | Target Value (Good) | Target Value (Excellent) | Measurement Tool |

|---|---|---|---|---|

| Inter-Rater Reliability | Agreement between multiple human labelers on the same frames. | > 0.85 | > 0.95 | Cohen's Kappa or ICC |

| Train-Test Error Gap | Difference between loss on training vs. held-out test set. | < 15% | < 5% | DLC Training Logs |

| Mean Pixel Error (MPE) | Average distance between predicted and true label in pixels. | < 5 px | < 2 px | DLC Evaluation |

| Prediction Confidence (likelihood) | Network's certainty for each prediction across videos. | > 0.9 (median) | > 0.99 (median) | DLC Analysis |

Q4: How many labeled frames do I actually need for reliable DLC pose estimation?

A: The number is highly dependent on complexity, but quality supersedes quantity. Below is a data-driven guideline.

| Experiment Complexity | Minimum Frames (Initial) | Recommended After Active Learning | Key Consideration |

|---|---|---|---|

| Simple (1-2 animals, clear view) | 150-200 | 400-600 | Focus on posture diversity. |

| Moderate (social interaction, occlusion) | 300-400 | 800-1200 | Must include frames with occlusions. |

| Complex (multiple animals, dynamic bg) | 500+ | 1500+ | Requires rigorous multi-animal labeling. |

Protocol for Efficient Labeling:

1. Start with the minimum frames from the table above, selected randomly from a representative video.

2. Train an initial network for ~200,000 iterations.

3. Use active learning: run the network on new videos, extract outlier frames with low confidence, label them, and add them to the training set.

4. Repeat steps 2-3 until performance on a held-out validation set plateaus.
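The selection of low-confidence frames in the active learning step can be illustrated with a standalone sketch. DeepLabCut's `extract_outlier_frames` provides its own selection methods; the function below is not that implementation, and its name, the 0.9 cutoff, and the input layout are assumptions chosen for illustration.

```python
def select_uncertain_frames(likelihoods, p_cutoff=0.9, max_frames=50):
    """Pick the frames whose least-confident keypoint falls below p_cutoff.

    likelihoods: list indexed by frame; each entry is a list of per-keypoint
    likelihood values in [0, 1].
    Returns frame indices, least confident first, capped at max_frames.
    """
    candidates = []
    for idx, frame_likelihoods in enumerate(likelihoods):
        worst = min(frame_likelihoods)
        if worst < p_cutoff:
            candidates.append((worst, idx))
    candidates.sort()  # lowest likelihood first
    return [idx for _, idx in candidates[:max_frames]]


if __name__ == "__main__":
    per_frame = [[0.99, 0.95], [0.50, 0.99], [0.20, 0.10], [0.95, 0.91]]
    print(select_uncertain_frames(per_frame, p_cutoff=0.9, max_frames=2))
```

Capping the number of frames per round keeps each labeling iteration small, which matches the 50-100 frames per iteration suggested elsewhere in this guide.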

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Dataset Curation |

|---|---|

| DeepLabCut (DLC) | Open-source toolbox for markerless pose estimation; core platform for training and evaluation. |

| COLAB Pro / Cloud GPU | Provides scalable, high-performance computing for iterative model training without local hardware limits. |

| Labelbox / CVAT | Advanced annotation platforms that enable collaborative labeling, quality control, and inter-rater reliability metrics. |

| Active Learning Loop Scripts | Custom Python scripts to automate extraction of low-confidence (high-loss) frames from new videos for targeted labeling. |

| Statistical Suite (ICC, Kappa) | Libraries (e.g., pingouin in Python) to quantitatively measure labeling consistency across multiple human raters. |

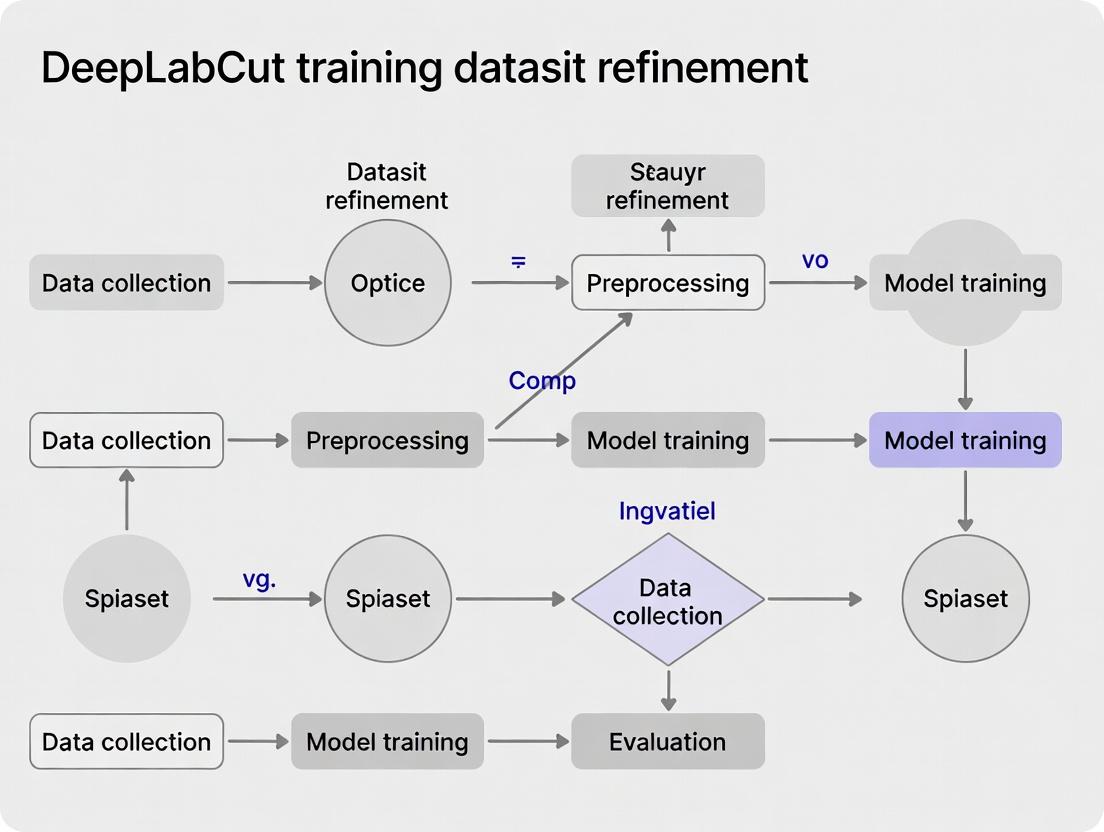

Experimental Workflow Diagram

Diagram Title: DeepLabCut Dataset Refinement Cycle Workflow

Dataset Quality Impact Pathway

Diagram Title: How Dataset Issues Cause Model Failure

Troubleshooting Guides & FAQs

Q1: What is the minimum number of annotated frames required to train a reliable DeepLabCut model?

A: While more data generally improves performance, a well-annotated, diverse training set is more critical than sheer volume. For a new experiment, we recommend starting with 100-200 frames extracted from multiple videos across different experimental sessions and subjects. Quantitative benchmarks from recent literature are summarized below:

Table 1: Recommended Frame Counts for Training Set

| Experiment Type / Subject | Minimum Frames | Optimal Range | Key Consideration |

|---|---|---|---|

| Rodent (e.g., mouse reaching) | 100 | 200-500 | Ensure coverage of full behavioral repertoire. |

| Drosophila (fruit fly) | 150 | 250-600 | Include various orientations and wing positions. |

| Human pose (lab setting) | 200 | 400-1000 | Account for diverse clothing and lighting. |

| Refinement Technique | Added Frames per Iteration | Typical Iterations | Purpose |

|---|---|---|---|

| Active Learning | 50-100 | 3-5 | Target low-confidence predictions. |

| Augmentation | N/A (synthetic) | Applied during training | Increase dataset robustness virtually. |

Q2: How do I select which keypoints (body parts) are essential for my behavioral analysis?

A: Keypoint selection must be driven by your specific research question. For drug development studies assessing locomotor activity, keypoints like the nose, base of tail, and all four paws are essential. For fine motor skill tasks (e.g., grasping), include individual digits and wrist joints. Always include at least one "fixed" reference point (e.g., a stable point in the arena) so that subject positions can be expressed relative to the arena and small camera shifts can be corrected. The protocol is:

- Define Behavioral Metrics: List all quantitative measures (e.g., velocity, joint angle, time interacting).

- Map Metrics to Anatomy: Identify the minimal set of body parts whose 2D positions directly inform those metrics.

- Prioritize Visibility: Avoid keypoints that are frequently occluded unless critical; occlusion can be managed but requires more training data.

- Consult Literature: Review similar published studies to establish a standard.

Q3: My model performs well on some subjects but poorly on others within the same experiment. How can I improve generalization?

A: This indicates a subject variability issue in your training dataset. Follow this refinement protocol:

- Diagnose: Use DeepLabCut's `analyze_videos` and `create_labeled_video` functions on the failing subjects. Identify systematic failures (e.g., consistent left-paw mislabeling).

- Extract Frames: From the videos of the poorly performing subjects, extract new frames (50-100) that capture the problematic poses/contexts.

- Annotate & Merge: Annotate these new frames and add them to your existing training dataset. This ensures the model sees the diversity of appearance across your entire subject pool.

- Re-train: Re-train the network from the weights of your previous model (transfer learning). Performance should now be more consistent across subjects.

Q4: How should I handle occlusions (e.g., a mouse limb being hidden) during frame annotation?

A: Do not guess the location of a keypoint that is not visible in the image. In the DeepLabCut labeling interface, leave that keypoint unlabeled for the frame, and delete it if it was placed by mistake. Unlabeled keypoints are stored as missing values rather than coordinates, so no erroneous position enters the training data. Omitting hidden keypoints is preferable to introducing guessed positions, and a network trained this way tends to report low likelihood when the body part is hidden.

Q5: What are the best practices for defining the "subject" and bounding box during data extraction?

A: The subject is the primary animal/object of interest. For single-animal experiments:

- Use an automated tool (like DeepLabCut's built-in detector or a pretrained model) to generate initial bounding boxes around the subject in each frame of your video.

- Manually verify and correct a subset of these boxes. The box should be snug but include the entire subject and all keypoints in all possible behavioral states (e.g., a rearing rat).

- For multi-animal experiments, you must use Multi-Animal DeepLabCut. Define each animal as a unique "individual" and ensure consistent labeling across frames. This requires more extensive training data that disambiguates overlapping animals.

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for DeepLabCut Dataset Creation

| Item / Reagent | Function / Purpose |

|---|---|

| High-Speed Camera (e.g., >90 fps) | Captures fast, subtle movements critical for kinematic analysis in drug response studies. |

| Contrastive Markers (Non-toxic paint, retro-reflective beads) | Applied to subjects to temporarily enhance visual contrast of keypoints, simplifying initial annotation. |

| Standardized Arena with Consistent Lighting | Minimizes environmental variance, ensuring the model learns subject features, not background artifacts. |

| DeepLabCut Software Suite (v2.3+) | Open-source platform for markerless pose estimation; the core tool for model training and analysis. |

| GPU Workstation (NVIDIA, with CUDA support) | Accelerates the training of deep neural networks, reducing model development time from days to hours. |

| Video Synchronization System | Essential for multi-camera setups to align views for 3D reconstruction or multiple vantage points. |

| Automated Behavioral Chamber (e.g., operant box) | Integrates pose tracking with stimulus presentation and data logging for holistic phenotyping. |

| Data Augmentation Pipeline (imgaug, Albumentations) | Software libraries to artificially expand training datasets with rotations, flips, and noise, improving model robustness. |

Experimental Workflow Diagram

Keypoint Confidence & Refinement Logic Diagram

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My trained DeepLabCut model fails to generalize to new sessions or animals. The error is high on frames where the posture or behavior looks novel. What is the most likely cause and how can I fix it?

A: This is a classic symptom of a non-diverse training dataset. Your network has overfit to the specific postures, lighting, and backgrounds in your selected frames. To fix this:

- Implement Strategic Frame Selection: Return to your extracted video frames and use DeepLabCut's `extract_outlier_frames` function (based on network prediction uncertainty) to find challenging frames. Manually add these to your training set.

- Enforce Behavioral Diversity: Actively review your video and label frames that represent the extremes of your behavior of interest (e.g., fully stretched, fully curled, left turns vs. right turns). Do not just label "typical" postures.

- Augment Your Data: Use DeepLabCut's built-in augmentation (imgaug) during training. Enable `rotation`, `lighting`, and `motion_blur` to simulate variability.

Q2: I have hours of video. How do I systematically select a minimal but sufficient number of frames for labeling without bias?

A: A manual, multi-pass approach is recommended for robustness.

- Pass 1 - Appearance Clustering: Use `kmeans` extraction on a subset of videos to get a base set of n frames per video (e.g., 20) that cover appearance variability.

- Pass 2 - Behavioral Anchoring: Manually identify key behavioral events from ethograms and sample 5-10 frames around each event.

- Pass 3 - Outlier Recruitment: After training an initial network, use it to analyze all videos and extract the top k outlier frames (those with the least reliable predictions). Label these and add them to the dataset. Iterate 2-3 times.
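Pass 2 (behavioral anchoring) is easy to script once event times are known. The sketch below assumes event onsets have already been converted to frame indices (for example from an ethogram export); the function name `frames_around_events` and the window size are illustrative choices, not part of DeepLabCut.

```python
def frames_around_events(event_frames, n_frames, half_window=15, per_event=5):
    """Return sorted, de-duplicated frame indices sampled around each event.

    For every event, per_event frames are spread evenly over the window
    [event - half_window, event + half_window], clipped to the video length.
    """
    selected = set()
    for event in event_frames:
        start = max(0, event - half_window)
        stop = min(n_frames - 1, event + half_window)
        span = stop - start
        for i in range(per_event):
            if per_event > 1:
                offset = round(i * span / (per_event - 1))
            else:
                offset = span // 2  # single frame: take the window centre
            selected.add(start + offset)
    return sorted(selected)


if __name__ == "__main__":
    # Two hypothetical rearing events in a 1000-frame video.
    print(frames_around_events([100, 640], n_frames=1000, half_window=10))
```

Sampling a spread of frames around each event, rather than only the onset frame, captures the transition into and out of the behavior.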

Q3: What quantitative metrics should I track to ensure my frame selection strategy is improving dataset diversity?

A: Monitor the following metrics in a table during each labeling iteration:

Table 1: Key Metrics for Dataset Diversity Assessment

| Metric | Calculation Method | Target Trend | Purpose |

|---|---|---|---|

| Training Error (pixels) | Mean RMSE on training frames, as reported by `evaluate_network` | Decreases & converges | Measures model fit on labeled data. |

| Test Error (pixels) | Mean RMSE on a held-out video | Decreases significantly | Measures generalization to unseen data. |

| Number of Outliers | Frames above error threshold in new data | Decreases over iterations | Indicates a reduction in model uncertainty. |

| Behavioral Coverage | Count of frames per behavior state | Becomes balanced | Ensures all behaviors are represented. |

Q4: Does frame selection strategy differ for primate social behavior vs. rodent gait analysis?

A: Yes, the source of variability differs.

- Primate Social Studies: Diversity must come from inter-animal variability (size, fur color), social configurations (dyads, triads), and occlusion. Prioritize frame selection from multiple animals and challenging social tangles.

- Rodent Gait Analysis: Diversity must capture the full gait cycle (stance, swing) for all limbs, turning kinematics, and speed variability (walk, trot, gallop). Use treadmill trials at controlled speeds to ensure cycle coverage.

Experimental Protocol: Iterative Frame Diversification for Training Set Refinement

Objective: To create a robust DeepLabCut pose estimation model by iteratively refining the training dataset to maximize postural and behavioral variability.

Materials:

- Recorded video data (.mp4, .avi)

- DeepLabCut (v2.3+)

- Computing cluster or GPU workstation

Procedure:

1. Initialization: Create a new DeepLabCut project. From 10-20% of your videos, extract 100 frames using `kmeans` clustering to capture broad visual diversity (background, lighting).

2. Labeling Round 1: Manually label all body parts in these frames.

3. Initial Training: Train a network for 50k iterations. This is your Initial Model.

4. Outlier Frame Extraction: Use the Initial Model to analyze all source videos. Run `extract_outlier_frames` from the GUI or API, selecting the top 0.5% of frames with the highest prediction uncertainty.

5. Labeling Round 2: Label the extracted outlier frames. Merge this new dataset with the initial training set.

6. Refined Training: Train a new network from scratch on the combined dataset for 150k+ iterations. This is your Refined Model.

7. Validation & Evaluation: Analyze a completely new, held-out video with the Refined Model. Use the `create_video_with_all_detections` function to visually inspect performance. Quantify by comparing the Test Error (Table 1) of the Initial vs. Refined Model.

8. Iteration: If error persists in specific behaviors, return to Step 4, targeting videos containing those behaviors.

Diagrams

Title: Iterative Training Dataset Refinement Workflow

Title: Mapping Variability Sources to Selection Strategies

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Behavioral Video Analysis & Dataset Creation

| Item | Function in Experiment |

|---|---|

| High-Speed Camera (≥100 fps) | Captures rapid movements (e.g., rodent gait, wingbeats) without motion blur, enabling precise frame extraction for dynamic poses. |

| Wide-Angle Lens | Allows capture of multiple animals in a social context or a large arena, increasing postural and interactive variability per frame. |

| Ethological Software (e.g., BORIS, EthoVision) | Used to create an ethogram and log behavioral events, guiding targeted frame selection around key behaviors. |

| GPU Workstation (NVIDIA RTX Series) | Accelerates DeepLabCut model training, enabling rapid iteration of the "train -> evaluate -> refine" cycle for dataset development. |

| Dedicated Animal ID Markers (e.g., fur dye, colored tags) | Provides consistent visual cues for distinguishing similar-looking individuals in social groups, critical for accurate multi-animal labeling. |

| Controlled Lighting System | Minimizes uncontrolled shadow and glare variability, though frames under different lighting should still be sampled to improve model robustness. |

Troubleshooting Guides and FAQs

Q1: During multi-labeler annotation for DeepLabCut, we observe high inter-labeler variance for specific body parts (e.g., wrist). What is the primary cause and how can we resolve it?

A1: High variance typically stems from ambiguous protocol definitions. Resolve this by:

- Refining the Annotation Guide: Create a visual guide with exemplar images showing correct and incorrect placements for the problematic landmark. Define the anatomical anchor precisely (e.g., "center of the lateral bony prominence of the wrist").

- Implementing a Calibration Round: Have all labelers annotate the same small set of frames (20-50). Calculate the Mean Pixel Distance (MPD) between labelers for each landmark.

- Consensus Meeting: Review high-disagreement frames as a group to establish a consensus rule, then update the formal protocol.

Q2: Our labeled dataset shows good labeler agreement, but DeepLabCut model performance plateaus. Could inconsistent labels still be the issue?

A2: Yes. Consistent but systematically biased labels can limit model performance. Troubleshoot using:

- Quantitative Check: Calculate the standard deviation of each landmark's position across all labelers and frames. A low per-landmark standard deviation indicates labeler agreement, but does not guarantee accuracy.

- Protocol Review: Check if the protocol forces labelers to choose a pixel when the true location is occluded. Implement rules for "occlusion labeling" (e.g., extrapolate from adjacent frames or mark as "not visible").

- Gold Standard Test: Have a senior researcher label a subset of frames as a "gold standard." Compute the MPD of all other labelers against this standard to uncover systematic bias.

Q3: What is the most efficient workflow to merge annotations from multiple labelers into a single training set for DeepLabCut?

A3: The recommended workflow is to use statistical aggregation rather than simple averaging.

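- Outlier Removal: For each frame and landmark, remove annotations that lie more than 3 robust standard deviations from the median position, where the spread is estimated with a robust statistic such as the Median Absolute Deviation (MAD) rather than the ordinary standard deviation.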

- Aggregate: Compute the median (not mean) x and y coordinates from the remaining annotations for each landmark. The median is resistant to residual outliers.

- Quality Metric: Record the Inter-Labeler Agreement (ILA) score—the mean pixel distance between all labelers' annotations after outlier removal—for each frame. This score can later be used to weight samples during training.
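The three steps above can be sketched for a single landmark in a single frame as follows. This is an illustrative implementation under stated assumptions, not a DeepLabCut feature: the spread is taken as the median distance to the coordinate-wise median point (a 2D analogue of the MAD), scaled by 1.4826 to approximate a standard deviation, and `min_scale_px` is a hypothetical floor that stops the threshold from collapsing to zero when most labelers agree exactly.

```python
import math
from itertools import combinations
from statistics import median

MAD_TO_SD = 1.4826  # scales a MAD to a standard-deviation estimate for normal data


def aggregate_landmark(points, n_sd=3.0, min_scale_px=0.5):
    """Aggregate one landmark's (x, y) annotations from several labelers.

    Returns (consensus_xy, kept_points, agreement_px), where agreement_px is
    the mean pairwise distance between the annotations that were kept.
    """
    center = (median(p[0] for p in points), median(p[1] for p in points))
    distances = [math.dist(p, center) for p in points]
    scale = max(MAD_TO_SD * median(distances), min_scale_px)
    # Keep annotations within n_sd robust standard deviations of the centre.
    kept = [p for p, d in zip(points, distances) if d <= n_sd * scale]
    consensus = (median(p[0] for p in kept), median(p[1] for p in kept))
    pairs = list(combinations(kept, 2))
    agreement = sum(math.dist(a, b) for a, b in pairs) / len(pairs) if pairs else 0.0
    return consensus, kept, agreement


if __name__ == "__main__":
    # Five labelers; the last one clicked far from the others.
    wrist = [(100, 100), (101, 99), (99, 101), (100, 102), (140, 100)]
    consensus, kept, agreement = aggregate_landmark(wrist)
    print(consensus, len(kept), round(agreement, 2))
```

Running the same function over every frame and landmark yields both the aggregated training labels and a per-frame agreement score that can be logged for quality control.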

Q4: How many labelers are statistically sufficient for a high-quality DeepLabCut training dataset?

A4: The number depends on target ILA. Use this pilot study method:

- Start with 3-4 labelers on a pilot image set (100 frames).

- Sequentially add labelers in batches, recalculating the aggregate label and its stability after each batch.

- Stop when the aggregate landmark position changes less than a pre-set threshold (e.g., 0.5 pixels) with the addition of a new labeler's data. Typically, 3-5 well-trained labelers are sufficient for most behaviors.
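The stopping rule can be expressed as a short loop. The sketch below is a simplification that tracks one landmark in one frame and uses the coordinate-wise median as the aggregate; in a real pilot the shift would be averaged over all pilot frames and landmarks. The function name `labelers_needed` and the example coordinates are hypothetical.

```python
import math
from statistics import median


def median_point(points):
    """Coordinate-wise median of a list of (x, y) points."""
    return (median(p[0] for p in points), median(p[1] for p in points))


def labelers_needed(points_by_labeler, threshold_px=0.5, start=3):
    """Return the labeler count at which the aggregate position stabilises.

    points_by_labeler: (x, y) annotations in the order labelers were added.
    Returns None if adding labelers never moves the aggregate by less than
    threshold_px.
    """
    previous = median_point(points_by_labeler[:start])
    for n in range(start + 1, len(points_by_labeler) + 1):
        current = median_point(points_by_labeler[:n])
        if math.dist(previous, current) < threshold_px:
            return n  # adding the n-th labeler barely changed the aggregate
        previous = current
    return None


if __name__ == "__main__":
    annotations = [(10, 10), (12, 10), (11, 10), (11, 10.2), (11, 9.9)]
    print(labelers_needed(annotations))
```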

Table 1: Impact of Annotation Protocol Detail on Labeler Agreement

| Protocol Detail Level | Mean Inter-Labeler Distance (px) | Std Dev of Distance | Time per Frame (sec) |

|---|---|---|---|

| Basic (Landmark Name Only) | 8.5 | 4.2 | 3.1 |

| Intermediate (+ Text Description) | 5.1 | 2.7 | 4.5 |

| Advanced (+ Visual Exemplars) | 2.3 | 1.1 | 5.8 |

Table 2: Effect of Labeler Consensus Method on DeepLabCut Model Performance

| Consensus Method | Train Error (px) | Test Error (px) | Generalization Gap |

|---|---|---|---|

| First Labeler's Annotations | 4.1 | 12.7 | 8.6 |

| Simple Average | 3.8 | 10.2 | 6.4 |

| Median + Outlier Removal | 2.9 | 7.3 | 4.4 |

Experimental Protocols

Protocol 1: Initial Labeler Training and Calibration

Objective: To standardize labeler understanding and quantify baseline agreement.

Methodology:

- Training: Labelers study the annotation protocol document and visual guide.

- Calibration Set: Each labeler independently annotates an identical set of 50 randomly selected frames from the experimental corpus.

- Analysis: Compute a pairwise Inter-Labeler Agreement (ILA) matrix using Mean Pixel Distance (MPD) for all landmarks.

- Feedback Session: Labelers with ILA >2 standard deviations from the mean review their annotations with the project lead. The protocol is clarified if multiple labelers err on the same landmark.
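A pairwise agreement matrix of the kind used in the analysis step can be computed with the standard library alone. In this sketch each labeler's annotations are a flat list of (x, y) points in the same frame and landmark order for everyone; that layout and the function names are assumptions for illustration.

```python
import math
from itertools import combinations


def pairwise_mpd(annotations):
    """Mean Pixel Distance between every pair of labelers.

    annotations: dict labeler -> list of (x, y), identically ordered.
    Returns a nested dict: matrix[a][b] is the MPD between a and b.
    """
    names = sorted(annotations)
    matrix = {a: {b: 0.0 for b in names} for a in names}
    for a, b in combinations(names, 2):
        dists = [math.dist(p, q) for p, q in zip(annotations[a], annotations[b])]
        matrix[a][b] = matrix[b][a] = sum(dists) / len(dists)
    return matrix


def mean_distance_to_others(matrix):
    """Average MPD of each labeler against all other labelers."""
    return {
        a: sum(d for b, d in row.items() if b != a) / (len(row) - 1)
        for a, row in matrix.items()
    }


if __name__ == "__main__":
    calibration = {
        "A": [(0, 0), (10, 10)],
        "B": [(3, 4), (10, 10)],
        "C": [(0, 0), (10, 15)],
    }
    matrix = pairwise_mpd(calibration)
    print(mean_distance_to_others(matrix))
```

Note that flagging labelers by a 2 standard deviation rule only becomes meaningful with a reasonably large group; with a handful of labelers, inspecting the per-labeler averages directly is usually more informative.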

Protocol 2: Iterative Annotation with Quality Control

Objective: To produce the final aggregated dataset with continuous quality monitoring.

Methodology:

- Batch Assignment: Distribute unique frames to labelers, ensuring 20% overlap (i.e., 1 in 5 frames is annotated by 2+ labelers).

- Weekly ILA Check: Calculate MPD on the overlapping frames each week. Flag any labeler whose ILA drifts significantly.

- Aggregation: Upon completion, run the outlier removal and median aggregation algorithm (see FAQ A3) across all labelers for every frame.

- Final Audit: Project lead reviews aggregated labels for 5% of frames, selected at random, to validate final quality.
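The batch assignment step can be sketched as a small helper that gives every frame one primary labeler and sends a fixed fraction to a second labeler. The function name `assign_frames`, the round-robin scheme, and the fixed random seed are illustrative assumptions; any scheme that yields the intended overlap would serve.

```python
import random


def assign_frames(frames, labelers, overlap_fraction=0.2, seed=0):
    """Assign frames to labelers, double-assigning a fraction for QC.

    Returns dict labeler -> list of frames. Requires at least two labelers
    so that an overlapping frame goes to two different people.
    """
    if len(labelers) < 2:
        raise ValueError("need at least two labelers to create overlap")
    rng = random.Random(seed)
    shuffled = list(frames)
    rng.shuffle(shuffled)  # avoid systematic ordering effects
    n_overlap = round(len(shuffled) * overlap_fraction)
    assignment = {name: [] for name in labelers}
    for i, frame in enumerate(shuffled):
        primary = labelers[i % len(labelers)]
        assignment[primary].append(frame)
        if i < n_overlap:
            # The next labeler in the rotation annotates this frame as well.
            secondary = labelers[(i + 1) % len(labelers)]
            assignment[secondary].append(frame)
    return assignment


if __name__ == "__main__":
    frames = [f"img{i:03d}.png" for i in range(100)]
    plan = assign_frames(frames, ["A", "B", "C"])
    print({name: len(batch) for name, batch in plan.items()})
```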

Visualizations

Workflow for Multi-Labeler Annotation Protocol

Algorithm for Aggregating Multiple Annotations

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Annotation Protocol Development |

|---|---|

| DeepLabCut Labeling Interface | The core software tool for placing anatomical landmarks on video frames. Consistency depends on its intuitive design and zoom capability. |

| Visual Annotation Guide (PDF/Web) | A living document with screenshot exemplars for correct/incorrect labeling, critical for resolving ambiguous cases. |

| Inter-Labeler Agreement (ILA) Calculator | A custom script (Python/R) to compute Mean Pixel Distance between labelers across landmarks and frames. |

| Annotation Aggregation Pipeline | Automated script to perform outlier removal and median aggregation of coordinates from multiple labelers. |

| Gold Standard Test Set | A small subset of frames (50-100) annotated by a senior domain expert, used to validate protocol accuracy and detect systematic bias. |

| Project Management Board (e.g., Trello, Asana) | Tracks frame assignment, labeler progress, and QC flags to manage the workflow of multiple annotators. |

Step-by-Step Dataset Refinement: From Raw Videos to a Robust Training Set

DeepLabCut Troubleshooting Guide & FAQs

FAQ 1: Why is my DeepLabCut model showing low training loss but high test error? What does this indicate about my dataset?

This typically indicates overfitting, where the model memorizes the training data but fails to generalize. It's a core dataset refinement issue.

- Primary Cause: Insufficient diversity and size of the training dataset. The model is learning noise and idiosyncrasies of the training frames rather than robust pose features.

- Solution Protocol: Implement targeted dataset augmentation.

  - Evaluate: Use the `evaluate_network` function to compare train and test error. A large gap confirms overfitting.

  - Augment: Apply a systematic augmentation strategy. For a starter dataset of 200 frames, augment to 1000+ frames.

  - Refine Training: Retrain the model with the augmented dataset and a stronger regularization parameter (e.g., increase `weight_decay` in the pose_cfg.yaml file).

  - Re-evaluate: Check if the gap between training and test error decreases.

Table 1: Impact of Dataset Augmentation on Model Overfitting

| Experiment Condition | Training Dataset Size (Frames) | Augmentation Methods Applied | Final Training Loss | Final Test Error | Train-Test Error Gap |

|---|---|---|---|---|---|

| Baseline (Overfit) | 200 | None | 1.2 | 8.5 | 7.3 |

| Refinement Iteration 1 | 1000 | Rotation (±15°), Contrast (±20%), Flip (Horizontal) | 3.8 | 5.1 | 1.3 |

| Refinement Iteration 2 | 1000 | Above + Motion Blur (kernel size 5), Scaling (±10%) | 4.5 | 4.8 | 0.3 |

FAQ 2: How do I resolve consistently high pixel errors for a specific body part (e.g., the tail base) across all videos?

This points to a labeling inconsistency or occlusion/ambiguity for that specific keypoint.

- Primary Cause: Ambiguous visual definition of the keypoint or inconsistent labeling by the human annotator across frames.

- Solution Protocol: Refine labels for the problematic keypoint.

  - Evaluate: Use the `evaluate_network` function and filter the results to show frames with the highest error for the specific keypoint.

  - Extract & Relabel: Extract these high-error frames using `extract_outlier_frames`. Manually re-inspect and correct the labels for the problematic keypoint in the refinement GUI.

  - Augment Strategically: Create additional synthetic training examples for this keypoint by augmenting only the corrected frames with transformations that mimic the challenging conditions (e.g., partial occlusion, extreme rotation).

  - Merge & Retrain: Merge the new refined frames with the original training set and retrain.

Experimental Protocol: Targeted Keypoint Refinement

- Train initial network for 200k iterations.

- Evaluate on a held-out test video.

- Identify keypoint with mean pixel error > acceptable threshold (e.g., 10 px).

- Extract top 50 frames with maximum error for that keypoint.

- Relabel the keypoint in all 50 frames.

- Augment this set of 50 frames 4x (200 new frames).

- Add the 200 new frames to the original training set.

- Retrain network from pre-trained weights for 100k iterations.

- Re-evaluate test error.
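The identification and extraction steps of this protocol reduce to two small operations on per-keypoint error lists. The sketch below assumes you already have pixel errors per frame for each keypoint (with `None` where a keypoint was not labeled); the function names and the data layout are hypothetical.

```python
def keypoints_over_threshold(errors, threshold_px=10.0):
    """Return {keypoint: mean_error_px} for keypoints above the threshold.

    errors: dict keypoint -> list of per-frame pixel errors (None = unlabeled).
    """
    flagged = {}
    for keypoint, values in errors.items():
        valid = [v for v in values if v is not None]
        if valid:
            mean_error = sum(valid) / len(valid)
            if mean_error > threshold_px:
                flagged[keypoint] = mean_error
    return flagged


def worst_frames(values, n=50):
    """Indices of the n frames with the largest error for one keypoint."""
    indexed = [(v, i) for i, v in enumerate(values) if v is not None]
    indexed.sort(reverse=True)  # largest error first
    return [i for _, i in indexed[:n]]


if __name__ == "__main__":
    errors = {
        "nose": [2.0, 3.0, 1.0, 2.5],
        "tail_base": [12.0, None, 20.0, 4.0],
    }
    for keypoint in keypoints_over_threshold(errors):
        print(keypoint, worst_frames(errors[keypoint], n=2))
```

With the protocol's numbers, the 50 frames returned for a flagged keypoint would be relabeled and then augmented 4x to give the 200 additional training frames.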

FAQ 3: After refinement, my model performs well on lab recordings but fails in a new experimental setup (different arena, lighting). What is the next step?

This is a domain shift problem. The refined dataset lacks the visual features of the new environment.

- Primary Cause: The training dataset's feature distribution does not match the deployment environment.

- Solution Protocol: Domain adaptation through iterative refinement.

- Evaluate on New Domain: Run the model on the new environment video to create initial predictions.

- Extract Frames of Failure: Extract frames where the prediction likelihood falls below your p-cutoff or where predictions are physically impossible.

- Label & Integrate: Manually label these frames from the new environment. This is a critical augmentation step.

- Fine-Tune: Create a new training set combining 20% of the original diverse data and 80% of the new domain-specific data. Fine-tune the existing model on this combined set for 50k-75k iterations.

Diagram 1: The Iterative Refinement Workflow Cycle

Diagram 2: Protocol for Targeted Keypoint Refinement

The Scientist's Toolkit: Research Reagent Solutions for DeepLabCut Refinement

| Item | Function in Refinement Workflow | Example/Note |

|---|---|---|

| DeepLabCut (v2.3+) Software | Core platform for model training, evaluation, and label management. | Essential for running the iterative refinement cycle. |

| High-Resolution Camera | Captures source video data with sufficient detail for keypoint identification. | >1080p, high frame-rate for fast movements. |

| Controlled Lighting System | Minimizes domain shift by providing consistent illumination across experiments. | LED panels with diffusers reduce shadows and glare. |

| Video Augmentation Pipeline | Programmatically expands and diversifies the training dataset. | Use imgaug or albumentations libraries (integrated in DLC). |

| Computational Resource (GPU) | Accelerates the training and re-training steps in the iterative cycle. | NVIDIA GPU with >8GB VRAM recommended for efficient iteration. |

| Labeling Refinement GUI | Interface for manual correction of outlier frames identified during evaluation. | Built into DeepLabCut (refine_labels GUI). |

| Statistical Analysis Scripts | Custom Python/R scripts to calculate metrics beyond mean pixel error (e.g., temporal smoothness). | Critical for thorough evaluation of model performance. |

Technical Support Center

Troubleshooting Guide

Issue 1: Model consistently fails to label occluded body parts (e.g., a paw behind another limb).

- Symptom: High reprojection error and likelihood values below the p-cutoff for specific markers during periods of occlusion.

- Diagnosis: Insufficient or low-quality training examples of the occluded state in the training dataset.

- Solution: Implement targeted data augmentation. Use synthetic occlusion generation by overlaying shapes or textures on non-occluded frames to create artificial training data. Refine the training set to include more frames where the occlusion naturally occurs, even if manual labeling is challenging (use interpolation tools after labeling clear keyframes).

Issue 2: Ambiguity between visually similar body parts (e.g., left vs. right hind paw in top-down view) causes label swaps.

- Symptom: Tracked points "jump" between adjacent, similar-looking body parts within a single session.

- Diagnosis: The model lacks contextual or relational understanding of body part topology.

- Solution: Incorporate a "graphical model" or "context refinement" step post-initial training. Employ a multi-animal configuration in DeepLabCut to leverage identity information, or post-process tracks using a motion prior that penalizes physically impossible distances or velocities between specific points.
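One simple form of such a motion prior is to check, frame by frame, whether exchanging the left and right labels would make the trajectories smoother. The sketch below is an illustrative post-processing check, not a DeepLabCut function; it flags transitions only and leaves the decision to correct them to the analyst.

```python
import math


def detect_swaps(left, right):
    """Flag frames where a left/right label flip relative to the previous
    frame would reduce total displacement.

    left, right: lists of (x, y) per frame for the two similar body parts.
    Returns frame indices where the assignment appears to flip. A swap that
    lasts one frame is therefore flagged twice: on entry and on exit.
    """
    flips = []
    for i in range(1, len(left)):
        keep_cost = math.dist(left[i - 1], left[i]) + math.dist(right[i - 1], right[i])
        swap_cost = math.dist(left[i - 1], right[i]) + math.dist(right[i - 1], left[i])
        if swap_cost < keep_cost:
            flips.append(i)
    return flips


if __name__ == "__main__":
    # The two paws exchange labels at frame 2 and recover at frame 3.
    left_paw = [(0, 0), (1, 0), (10, 0), (3, 0)]
    right_paw = [(10, 0), (10, 0), (2, 0), (10, 0)]
    print(detect_swaps(left_paw, right_paw))
```

This check assumes both points are tracked in every frame; frames with missing or low-likelihood predictions should be excluded before applying it.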

Issue 3: Tool produces poor predictions on novel subjects or experimental setups.

- Symptom: Model generalizes poorly from the training dataset to new data.

- Diagnosis: Training dataset lacks diversity in subject morphology, lighting, background, and camera angles.

- Solution: Apply aggressive domain randomization during training. Curate a refinement set from the novel data, label a small subset (50-100 frames), and perform network refinement (transfer learning) to adapt the base model to the new conditions.

Frequently Asked Questions (FAQs)

Q1: What is the most efficient strategy to label frames with heavy occlusion for DeepLabCut? A1: Use the k-means clustering option in DeepLabCut's frame extraction (rather than uniform sampling) so that your initial training set samples visually diverse frames, including complex ones. During labeling, rely heavily on the interpolation function. Label the body part confidently in frames before and after the occlusion event, then let the tool interpolate the marker position for the occluded frames. You can then correct the interpolated position if a visual cue (like a tip of the limb) is still partially visible.

Q2: How can I quantify the ambiguity of a specific body part's label? A2: Use the p-cutoff value and the likelihood output from DeepLabCut. Consistently low likelihood for a particular marker across multiple videos is a strong indicator of inherent ambiguity or occlusion. You can set a p-cutoff threshold (e.g., 0.90) to filter out low-confidence predictions for analysis. See Table 1 for performance metrics linked to likelihood thresholds.
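As a rough way to put a number on this, the fraction of frames below the p-cutoff can be computed per body part. The sketch assumes the likelihood column has already been read into plain Python lists; the data layout and the function name are illustrative.

```python
def low_confidence_fraction(likelihoods, p_cutoff=0.90):
    """Fraction of frames below p_cutoff for each body part.

    likelihoods: dict bodypart -> list of per-frame likelihood values.
    """
    return {
        part: sum(1 for p in values if p < p_cutoff) / len(values)
        for part, values in likelihoods.items()
    }


likelihoods = {
    "snout": [0.99, 0.98, 0.97, 0.99],
    "left_hind_paw": [0.95, 0.40, 0.55, 0.92],
}
fractions = low_confidence_fraction(likelihoods)
```

A body part whose fraction stays high across many videos is a candidate for relabeling or a clearer anatomical definition.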

Q3: Are there automated tools to pre-annotate occluded body parts? A3: While fully automated occlusion handling is not native, you can use a multi-step refinement pipeline. First, train a base model on all clear frames. Second, use this model to generate predictions on the challenging, occluded frames. Third, manually correct these machine-generated labels. This "human-in-the-loop" active learning approach is far more efficient than labeling from scratch.

Q4: How does labeling ambiguity affect the overall performance of my pose estimation model? A4: Ambiguity directly increases label noise, which can reduce the model's final accuracy and its ability to generalize. It forces the model to learn inconsistent mappings, degrading performance. The key metric to monitor is the train-test error gap; a large gap can indicate overfitting to noisy or ambiguous training labels.

Table 1: Impact of Occlusion-Augmented Training on Model Performance

Data synthesized from current literature on dataset refinement for pose estimation.

| Training Dataset Protocol | Mean Test Error (pixels) | Mean Likelihood (p-cutoff=0.90) | Label Swap Rate (%) | Generalization Score (to novel subject) |

|---|---|---|---|---|

| Baseline (Random Frames) | 12.5 | 0.85 | 8.7 | 65.2 |

| + Targeted Occlusion Frames | 9.1 | 0.91 | 5.1 | 78.9 |

| + Synthetic Occlusion Augmentation | 8.3 | 0.93 | 3.8 | 85.5 |

| + Graph-based Post-Processing | 7.8 | 0.95 | 1.2 | 87.1 |

Table 2: Efficiency Gain from Active Learning Annotation Refinement

| Refinement Method | Hours to Label 1000 Frames | Final Model Error Reduction vs. Baseline |

|---|---|---|

| Full Manual Labeling | 20.0 hrs | 0% (Baseline) |

| Model Pre-Labeling + Correction | 11.5 hrs | 15% improvement |

| Interpolation-Centric Workflow | 14.0 hrs | 8% improvement |

Experimental Protocol: Targeted Occlusion Augmentation

This protocol is designed to enhance a DeepLabCut model's robustness to occluded body parts.

1. Initial Model Training:

- Extract frames using k-means clustering (n=100) from several representative videos.

- Manually label all visible keypoints. For completely occluded points, provide your best estimate or leave unlabeled (handled in step 3).

- Train a standard ResNet-50 DeepLabCut network to convergence.

2. Synthetic Occlusion Generation:

- Apply data augmentation in the training pipeline that includes:

- Random elliptical "patches" dropped onto training images.

- Random noise bars.

- Adjust augmentation probability to 0.5.

- Alternatively, use an offline script to superimpose random shapes/textures onto training images, ensuring some occlude body parts, and add these augmented images to the training set.

3. Active Learning Refinement Loop:

- Use the current model to analyze new videos containing complex occlusions.

- Extract frames with the lowest average likelihood for specific body parts.

- Manually correct the labels on these "hard" frames, focusing on the occluded points using contextual clues and interpolation.

- Refine (continue training) the model on the expanded, corrected dataset for a few thousand iterations.

- Repeat the four steps of this loop for 2-3 cycles.
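The frame-selection step of the refinement loop can be sketched as follows, assuming per-frame likelihoods are available as lists. The helper `hardest_frames` is hypothetical; DeepLabCut's own deeplabcut.extract_outlier_frames covers similar ground inside a project.

```python
import heapq


def hardest_frames(likelihoods, bodyparts, n_frames):
    """Return indices of the n_frames with the lowest mean likelihood.

    likelihoods: dict bodypart -> list of per-frame likelihoods.
    bodyparts: the (occlusion-prone) parts to average over.
    """
    n_total = len(likelihoods[bodyparts[0]])
    mean_per_frame = [
        sum(likelihoods[bp][i] for bp in bodyparts) / len(bodyparts)
        for i in range(n_total)
    ]
    return heapq.nsmallest(n_frames, range(n_total),
                           key=mean_per_frame.__getitem__)


likelihoods = {
    "left_paw": [0.95, 0.20, 0.90, 0.35, 0.99],
    "right_paw": [0.97, 0.30, 0.92, 0.85, 0.98],
}
picked = hardest_frames(likelihoods, ["left_paw", "right_paw"], n_frames=2)
```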

Visualization: Experimental Workflow

Title: Active Learning Workflow for Occlusion Refinement

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Dataset Refinement Experiments

| Item | Function in Context |

|---|---|

| DeepLabCut (v2.3+) | Open-source toolbox for markerless pose estimation; the core platform for model training and evaluation. |

| Labeling Interface (DLC-GUI) | The graphical environment for manual annotation of body parts, featuring key tools like interpolation and refinement. |

| Custom Python Scripts for Data Augmentation | To programmatically generate synthetic occlusions (random shapes, noise) and expand the training dataset. |

| High-Resolution Camera System | To capture original behavioral videos; higher resolution provides more pixel data for ambiguous body parts. |

| Compute Cluster with GPU (e.g., NVIDIA Tesla) | Essential for efficient training and refinement of deep neural network models within a practical timeframe. |

| Statistical Analysis Software (e.g., Python Pandas/Statsmodels) | For quantitative analysis of model outputs (error, likelihood), enabling data-driven refinement decisions. |

This technical support center provides troubleshooting guidance for researchers applying data augmentation techniques within DeepLabCut (DLC) projects, specifically framed within a thesis on training dataset refinement for robust pose estimation in behavioral pharmacology.

Troubleshooting Guides & FAQs

Q1: After implementing extensive spatial augmentations (rotation, scaling, shear) in DeepLabCut, my model's performance on validation videos decreases significantly. What is the likely cause and solution?

A: This often indicates a distribution mismatch between the augmented training set and your actual experimental data. Common causes and solutions are:

- Cause 1: Excessive augmentation ranges that generate unrealistic animal poses or contexts (e.g., a mouse rotated 90 degrees relative to gravity). The model learns non-generalizable features.

- Solution: Constrain augmentation parameters to physiologically or experimentally plausible limits. Use the config.yaml file to adjust rotation, scale, and shear ranges. Start with conservative values (e.g., rotation: -15 to 15 degrees).

- Cause 2: Augmentation of all training frames uniformly, including already high-quality, representative samples.

- Solution: Implement a targeted augmentation strategy. Use DLC's outlier detection (deeplabcut.extract_outlier_frames) to identify under-represented poses or challenging frames, and apply stronger augmentations selectively to this subset.

Q2: How do I choose between photometric augmentations (brightness, contrast, noise) and spatial augmentations for my drug-treated animal videos?

A: The choice should be driven by the variance introduced by your experimental protocol.

- Use Photometric Augmentations (brightness, contrast, hue, motion blur) to simulate variances in: lighting conditions across experimental sessions, drug effects on pupil dilation/fur coloration, or artifacts from injection equipment. This is crucial for generalizing across different rigs or treatment days.

- Use Spatial Augmentations (rotation, scaling, cropping) to improve robustness to: the animal's angle relative to the camera, distance from the camera, or partial occlusions by cage features.

- Protocol: Conduct an ablation study. Train four models: (1) Baseline (no augmentations), (2) Photometric-only, (3) Spatial-only, (4) Combined. Evaluate on a held-out test set from multiple experimental conditions. The table below summarizes a typical finding from such an experiment.

Table 1: Impact of Augmentation Type on Model Generalization Error (Mean Pixel Error)

| Model Variant | Test Set (Control) | Test Set (Drug Condition A) | Test Set (Novel Lighting) | Overall Mean Error |

|---|---|---|---|---|

| Baseline (No Aug) | 5.2 px | 12.7 px | 15.3 px | 11.1 px |

| Photometric Only | 5.5 px | 10.1 px | 7.8 px | 7.8 px |

| Spatial Only | 5.4 px | 9.8 px | 14.9 px | 10.0 px |

| Combined | 5.6 px | 10.3 px | 8.1 px | 8.0 px |

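In this example, photometric-only and combined augmentation generalize about equally well and both clearly outperform the baseline and spatial-only variants. Most of the gain comes from the novel-lighting test set, which is the condition photometric augmentation targets.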

Q3: My pipeline with augmentations runs significantly slower. How can I optimize training speed?

A: This is a common issue when using on-the-fly augmentation.

- Solution 1: Use TensorFlow's prefetch and cache operations in your input data pipeline. Caching augmented images after the first epoch can dramatically speed up subsequent epochs, at the cost of reusing the same augmented samples in every epoch.

- Solution 2: Generate an expanded, static dataset. Pre-compute and save a fixed number of augmented versions for each training frame. This trades disk space for consistent training speed and allows for precise control over the augmented dataset size.

- Protocol for Pre-computation:

- Identify your final training set (from DLC's create_training_dataset).

- Define your augmentation pipeline (e.g., using imgaug or Albumentations).

- For each training image and its corresponding label file (.mat), apply N random augmentations (e.g., N=5).

- Transform the keypoints accordingly for spatial augmentations. Critical: For rotation/scaling, apply the same transform matrix to the (x, y) coordinates.

- Save the new images and updated label files in the same structure as the original DLC training set.

- Update the DLC configuration file to point to this new, larger dataset.
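The keypoint-transform step is where pre-computed augmentation most often goes wrong, so here is a minimal sketch of rotating labels about the image center. It assumes keypoints are (x, y) tuples in pixel coordinates; `rotate_keypoints` is an illustrative helper, and the visual direction of a positive angle depends on the convention of whichever library warps the image.

```python
import math


def rotate_keypoints(keypoints, angle_deg, center):
    """Rotate (x, y) keypoints about `center` by angle_deg.

    Uses the matrix [[cos, -sin], [sin, cos]]; the image must be warped
    with the same matrix, otherwise labels and pixels drift apart.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = center
    rotated = []
    for x, y in keypoints:
        dx, dy = x - cx, y - cy
        rotated.append((cx + cos_t * dx - sin_t * dy,
                        cy + sin_t * dx + cos_t * dy))
    return rotated


keypoints = [(60.0, 50.0), (50.0, 50.0)]
rotated = rotate_keypoints(keypoints, 90, center=(50.0, 50.0))
```

Libraries such as imgaug and Albumentations can transform images and keypoints together, which avoids maintaining the matrix by hand.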

Q4: Can synthetic data generation (e.g., using a trained model or 3D models) be integrated with standard DLC augmentation?

A: Yes, this is an advanced refinement technique for extreme data scarcity.

- Solution: A two-stage augmentation pipeline.

- Stage 1 - Synthetic Generation: Use a base DLC model to generate pose estimations on unlabeled videos. Use high-confidence predictions as "pseudo-labels." Alternatively, use a 3D rodent model (e.g., in Blender) to render synthetic images and precise keypoints. This creates an initial synthetic dataset (SynthSet).

- Stage 2 - Traditional Augmentation: Apply the standard spatial/photometric augmentations to both your original human-labeled data (OrigSet) and the SynthSet.

- Training: Combine the augmented OrigSet and augmented SynthSet for final model training. Always maintain a purely human-labeled validation set for unbiased evaluation.
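The pseudo-labeling part of Stage 1 amounts to a confidence filter. The sketch below keeps a frame only if every keypoint meets the threshold; the 0.95 threshold, the data layout, and the name `select_pseudo_labels` are assumptions for illustration.

```python
def select_pseudo_labels(predictions, min_likelihood=0.95):
    """Keep frames whose every keypoint meets the likelihood threshold.

    predictions: dict frame_index -> dict bodypart -> (x, y, likelihood).
    Returns dict frame_index -> dict bodypart -> (x, y).
    """
    pseudo = {}
    for frame, parts in predictions.items():
        if all(p >= min_likelihood for _, _, p in parts.values()):
            pseudo[frame] = {name: (x, y) for name, (x, y, _) in parts.items()}
    return pseudo


predictions = {
    0: {"snout": (120.0, 80.0, 0.99), "tail_base": (60.0, 85.0, 0.97)},
    1: {"snout": (122.0, 81.0, 0.99), "tail_base": (61.0, 86.0, 0.40)},
}
synth_set = select_pseudo_labels(predictions)
```

Requiring all keypoints to pass is deliberately strict: it biases the synthetic set toward easy frames, which is one reason the validation set should stay purely human-labeled.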

Title: Two-Stage Augmentation Pipeline with Synthetic Data

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Resources for Data Augmentation in DLC-Based Research

| Item / Solution | Function / Purpose | Example / Note |

|---|---|---|

| DeepLabCut (config.yaml) | Core configuration file to enable and control built-in augmentations (rotation, scale, shear, etc.). | Define affine and elastic transform parameters here. |

| Imgaug / Albumentations Libraries | Advanced, flexible Python libraries for implementing custom photometric and spatial augmentation sequences. | Allows fine-grained control (e.g., adding Gaussian noise, simulating motion blur). |

| TensorFlow tf.data API | Framework for building efficient, scalable input pipelines with on-the-fly augmentation, caching, and prefetching. | Critical for managing large, augmented datasets during training. |

| 3D Animal Model (e.g., OpenSim Rat) | Provides a source for generating perfectly labeled, synthetic training data from varied viewpoints. | Useful for bootstrapping models when labeled data is very limited. |

| Outlier Frame Extraction (DLC Tool) | Identifies frames where the current model is least confident, guiding targeted augmentation. | Use deeplabcut.extract_outlier_frames to find challenging cases. |

| High-Performance Computing (HPC) Cluster or Cloud GPU | Provides the computational resources needed for training multiple models with different augmentation strategies in parallel. | Essential for rigorous ablation studies and hyperparameter search. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My DeepLabCut project contains videos from multiple experimental conditions (e.g., control vs. drug-treated). How do I ensure my training dataset is balanced across these conditions?

A: Imbalanced condition representation is a common issue. To our knowledge, DeepLabCut does not provide a built-in option for condition-balanced sampling, so this is handled with a small amount of custom code around the standard workflow. First, record a condition for every video (e.g., condition: Control), either alongside the project's configuration or in an external metadata file. Then, after frame extraction and before labeling, sample an equal number of frames from each condition label and build the training dataset from that balanced set with create_training_dataset. This helps the network learn pose estimation that is invariant to your experimental treatments.
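Equal-per-condition sampling can be sketched in plain Python, independent of any DeepLabCut call. The frame names, condition labels, and the helper `balanced_sample` are hypothetical.

```python
import random
from collections import defaultdict


def balanced_sample(frames, per_condition, seed=0):
    """Draw the same number of frames from every condition.

    frames: list of (frame_path, condition) pairs.
    Raises ValueError if a condition has too few frames.
    """
    by_condition = defaultdict(list)
    for path, condition in frames:
        by_condition[condition].append(path)
    rng = random.Random(seed)
    selected = {}
    for condition, paths in sorted(by_condition.items()):
        if len(paths) < per_condition:
            raise ValueError(f"only {len(paths)} frames for {condition!r}")
        selected[condition] = rng.sample(paths, per_condition)
    return selected


frames = ([(f"ctrl_{i:03d}.png", "Control") for i in range(40)]
          + [(f"drug_{i:03d}.png", "HighDose") for i in range(12)])
selected = balanced_sample(frames, per_condition=10)
```

Failing loudly when a condition is short of frames is a design choice: silently taking fewer frames would reintroduce the imbalance.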

Q2: I have labeled my conditions, but the automated frame selection is still picking too many similar frames from one high-motion video. What advanced techniques can I use?

A: Relying solely on motion-based selection (like k-means on frame differences) within a condition can be suboptimal. Implement a two-step protocol:

- Primary Sort by Condition: Group all video frames by their condition label.

- Secondary Sort by Diversity: Within each condition group, apply a clustering algorithm (e.g., k-means) on a feature space derived from a pretrained network (like MobileNetV2's penultimate layer). This selects diverse appearances within each experimental state.

Q3: After training with condition-balanced data, my model performs poorly on a specific condition (e.g., a particular drug treatment). How should I troubleshoot this?

A: This indicates potential domain shift. Follow this diagnostic workflow:

- Evaluate Condition-Specific Metrics: Generate separate evaluation results for each condition using deeplabcut.evaluate_network.

- Analyze the Failure Modes: Use deeplabcut.analyze_videos on the problematic condition and then deeplabcut.create_labeled_video to visually inspect errors.

- Refine Dataset: Based on analysis, perform targeted active learning. Extract frames where model confidence (likelihood) fell below the p-cutoff specifically for the poor-performing condition and add them to your training set.

Q4: What file format and structure should I use to store experimental metadata for it to be usable with DeepLabCut's condition-labeling functions?

A: The most robust method is to integrate metadata into the project's main configuration dictionary (cfg) or link to an external CSV. In either case, record one entry per video with fields such as condition, dose, and subject ID.

You can then parse this using yaml.safe_load and pandas DataFrame for frame selection logic.
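For the external-CSV route, a minimal sketch using only the standard library might look like this. The file layout and column names are assumptions chosen for illustration, not a DeepLabCut convention.

```python
import csv
import io
from collections import Counter

# Hypothetical metadata file; the column names are illustrative only.
METADATA_CSV = """video,condition,dose_mg_per_kg,subject_id
videos/m01_saline.mp4,Control,0,m01
videos/m02_low.mp4,LowDose,5,m02
videos/m03_high.mp4,HighDose,10,m03
videos/m04_saline.mp4,Control,0,m04
"""


def load_metadata(handle):
    """Map each video path to its metadata row (a dict of strings)."""
    return {row["video"]: row for row in csv.DictReader(handle)}


# io.StringIO stands in for open("metadata.csv", newline="").
metadata = load_metadata(io.StringIO(METADATA_CSV))
videos_per_condition = Counter(row["condition"] for row in metadata.values())
```

Counting videos per condition before extracting frames is a cheap first check for imbalance.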

Table 1: Impact of Condition-Balanced vs. Random Frame Selection on Model Performance

| Experimental Condition | Random Selection Test Error (px) | Condition-Balanced Selection Test Error (px) | % Improvement | Number of Frames per Condition in Training Set |

|---|---|---|---|---|

| Control (Saline) | 5.2 | 4.8 | 7.7% | 150 |

| Low Dose (5mg/kg) | 8.7 | 6.1 | 29.9% | 150 |

| High Dose (10mg/kg) | 12.5 | 7.3 | 41.6% | 150 |

| Average | 8.8 | 6.1 | 30.7% | 450 (Total) |

Table 2: Comparison of Frame Diversity Metrics Across Selection Methods

| Selection Method | Average Feature Distance Within Condition (↑ is better) | Average Feature Distance Across Conditions (↑ is better) | Condition Label Purity in Clusters (↓ is better) |

|---|---|---|---|

| Random | 0.45 | 0.52 | 0.61 |

| K-means (Global) | 0.71 | 0.68 | 0.55 |

| Condition-Guided + K-means | 0.69 | 0.75 | 0.22 |

Detailed Experimental Protocols

Protocol 1: Integrating Condition Labels for Initial Training Dataset Creation

Methodology:

- Metadata Annotation: In your DeepLabCut project's config.yaml file, under the video_sets section, add a key-value pair (e.g., condition: Treatment_A) for each video file path.

- Frame Extraction & Labeling: Run deeplabcut.extract_frames to generate candidate frames from all videos.

- Condition-Aware Selection: Write a custom Python script that:

a. Loads the config.yaml and the list of extracted frames.

b. Groups image paths by their associated condition label.

c. For each condition group, calculates image embeddings using a pretrained feature extractor.

d. Applies k-means clustering (k = desired frames per condition) on the embeddings within each group.

e. Selects the frame closest to each cluster center.

- Dataset Compilation: The output is a final list of frame paths balanced across conditions. Use deeplabcut.label_frames to proceed with manual labeling.
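Steps c to e of the condition-aware selection can be sketched with a small pure-Python k-means. The embeddings below are random placeholders standing in for features from a pretrained network, and the function names are hypothetical. A real pipeline would more likely use an established clustering library.

```python
import math
import random


def kmeans_select(embeddings, k, seed=0, n_iter=20):
    """Pick up to k representative frames via k-means on their embeddings.

    embeddings: dict frame_path -> feature vector (list of floats).
    Returns the frame closest to each non-empty cluster center.
    """
    paths = sorted(embeddings)
    rng = random.Random(seed)
    centers = [list(embeddings[p]) for p in rng.sample(paths, k)]
    for _ in range(n_iter):
        # Assignment step: attach every frame to its nearest center.
        clusters = [[] for _ in range(k)]
        for p in paths:
            nearest = min(range(k),
                          key=lambda c: math.dist(embeddings[p], centers[c]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its members.
        for c, members in enumerate(clusters):
            if members:  # keep the old center if a cluster empties out
                dims = zip(*(embeddings[p] for p in members))
                centers[c] = [sum(d) / len(members) for d in dims]
    return [min(members, key=lambda p: math.dist(embeddings[p], centers[c]))
            for c, members in enumerate(clusters) if members]


def select_per_condition(frames, k):
    """frames: dict condition -> {frame_path: embedding}."""
    return {cond: kmeans_select(emb, k) for cond, emb in frames.items()}


rng = random.Random(1)
frames = {
    cond: {f"{cond}_{i:03d}.png": [rng.random() for _ in range(8)]
           for i in range(30)}
    for cond in ("Control", "Treatment_A")
}
selected = select_per_condition(frames, k=5)
```

Clustering within each condition, rather than globally, is what keeps the per-condition frame counts equal.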

Protocol 2: Active Learning Loop for Condition-Specific Model Refinement

Methodology:

- Initial Training: Train a DeepLabCut model on the initial condition-balanced dataset.

- Condition-Specific Inference: Analyze new videos from all conditions using the trained model. Save the model's confidence scores (likelihood) for each body part in each frame.

- Identify Condition-Specific Gaps: For the target condition (e.g., where performance is poor), filter frames where the average likelihood across keypoints is below a threshold (e.g., 0.8).

- Diverse Sampling from Poor-Performing Condition: From the low-confidence pool for the target condition, perform a diversity sampling (e.g., feature-based clustering) to select a manageable number of frames (e.g., 50-100) for labeling.

- Iterative Refinement: Add the newly labeled frames (with their condition metadata) to the training set. Retrain the model and repeat evaluation from step 2.

Visualizations

Diagram 1: Condition-Aware Frame Selection Workflow

Diagram 2: Diagnostic & Refinement Pathway for Poor Condition Performance

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Behavioral Experiments with DeepLabCut

| Item | Function in Context |

|---|---|

| DeepLabCut (v2.3+) | Core open-source software toolkit for markerless pose estimation. Enables the implementation of condition-labeling scripts. |

| Pretrained CNN (e.g., MobileNetV2, ResNet-50) | Used within DeepLabCut as a feature extractor for clustering frames based on visual appearance, independent of pose. |

| Behavioral Arena (Standardized) | A consistent testing chamber (e.g., open field, elevated plus maze) to ensure video background and lighting are uniform within and across condition groups. |

| Video Recording System (High-speed Camera) | Provides high-resolution, high-frame-rate video input. Critical for capturing subtle drug-induced behavioral changes. |

| Metadata Logging Software (e.g., BORIS, custom LabVIEW) | For accurately logging and time-syncing experimental condition labels (drug, dose, subject ID) with video files. |

| GPU Workstation (NVIDIA recommended) | Accelerates the training and evaluation of DeepLabCut models, enabling rapid iteration during the active learning refinement loop. |

| Data Storage & Versioning (e.g., DVC, Git LFS) | Manages versions of large training datasets, model checkpoints, and associated metadata, ensuring reproducibility of the refinement process. |

Diagnosing and Fixing Common DeepLabCut Training Set Pitfalls

This guide supports researchers in the DeepLabCut training dataset refinement project by providing diagnostic steps for interpreting model training loss plots.

Troubleshooting Guides & FAQs

Q1: My validation loss is consistently and significantly higher than my training loss. What does this indicate and how should I address it within my DeepLabCut pose estimation model? A1: This pattern strongly suggests overfitting. The model has memorized the training dataset specifics (including potential labeling noise or augmentations) and fails to generalize to the validation set.

- Action Protocol:

- Increase Dataset Size & Diversity: Add more varied experimental frames (e.g., different lighting, animal coats, camera angles) to your training set, as per our core thesis on dataset refinement.

- Apply/Increase Regularization: Implement or strengthen Dropout layers (e.g., from rate 0.2 to 0.5) or L2 weight regularization in the network.

- Reduce Model Complexity: Consider using a smaller backbone (e.g., switch from ResNet-101 to ResNet-50) if the dataset is limited.

- Reduce Augmentation Intensity: If using extreme spatial augmentations (large rotations, scaling), moderate them to prevent the model from learning unrealistic transformations.

Q2: Both my training and validation loss are high and decrease very slowly or remain stagnant. What is the issue? A2: This is a classic sign of underfitting. The model is too simple or the training is insufficient to capture the underlying patterns of keypoint relationships.

- Action Protocol:

- Increase Model Capacity: Use a more complex backbone network (e.g., ResNet-152) or add more layers to the decoder.

- Train for More Iterations: Significantly increase the number of training iterations, monitoring for when the loss finally begins a steady decline.

- Reduce Regularization: Temporarily disable or lower Dropout rates and L2 regularization to allow the model to learn more freely.

- Check Feature Quality: Ensure the image preprocessing (e.g., scaling, histogram equalization) is not destroying critical information for pose estimation.

Q3: After an initial decrease, both training and validation loss have flattened for many epochs with minimal change. What does this mean? A3: This indicates a training plateau. The optimizer (commonly Adam) can no longer find a direction to significantly lower the loss given the current learning rate.

- Action Protocol:

- Implement a Learning Rate Schedule: Use a scheduled reduction (e.g., reduce by factor of 10 when loss plateaus for 10 epochs).

- Manually Adjust Learning Rate: After a plateau, try reducing the learning rate by a factor of 10 and resuming training.

- Check Annotation Consistency: Re-inspect a sample of training and validation frames for labeling inconsistencies or errors, as refining this is central to our research.

- Explore Different Optimizers: Switch from Adam to SGD with Nesterov momentum, which can sometimes escape shallow plateaus.
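The scheduled reduction described in this protocol can be sketched as a small framework-independent class. The class name and the `min_delta` parameter are illustrative assumptions. Deep learning frameworks ship their own equivalents, and DeepLabCut's training configuration may express schedules differently.

```python
class ReduceOnPlateau:
    """Cut the learning rate when the monitored loss stops improving."""

    def __init__(self, lr, factor=0.1, patience=10, min_delta=1e-4):
        self.lr = lr
        self.factor = factor          # multiply lr by this on a plateau
        self.patience = patience      # evaluations to wait before cutting
        self.min_delta = min_delta    # smallest change counted as progress
        self.best = float("inf")
        self.stale = 0

    def step(self, loss):
        """Record one evaluation and return the (possibly reduced) rate."""
        if loss < self.best - self.min_delta:
            self.best = loss
            self.stale = 0
        else:
            self.stale += 1
            if self.stale >= self.patience:
                self.lr *= self.factor
                self.stale = 0
        return self.lr


scheduler = ReduceOnPlateau(lr=0.001, patience=3)
losses = [0.050, 0.040, 0.035, 0.035, 0.035, 0.035, 0.034]
rates = [scheduler.step(loss) for loss in losses]
```

In this toy run the rate drops tenfold on the third consecutive evaluation without improvement.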

Q4: My training loss decreases normally, but my validation loss is highly volatile (large spikes) between epochs. A4: This suggests a mismatch or problem with the validation data, or an excessively high learning rate.

- Action Protocol:

- Audit Validation Set: Ensure the validation set is correctly preprocessed and representative of the training data distribution. Verify no corrupted images are present.

- Lower Learning Rate: Decrease the initial learning rate by an order of magnitude to stabilize updates.

- Increase Batch Size: A larger batch size provides a more stable gradient estimate, potentially reducing validation volatility.

- Implement Gradient Clipping: Clip gradients to a maximum norm (e.g., 1.0) to prevent explosive updates from outlier batches.
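Clipping to a maximum norm means rescaling all gradients by a common factor whenever their joint L2 norm exceeds the limit. The sketch below shows the arithmetic on plain lists; in practice the training framework's own clipping utility would be used.

```python
import math


def clip_by_global_norm(gradients, max_norm=1.0):
    """Rescale gradient vectors so their joint L2 norm is at most max_norm.

    gradients: list of gradient vectors (lists of floats).
    Returns the clipped gradients and the norm before clipping.
    """
    total = math.sqrt(sum(g * g for vec in gradients for g in vec))
    if total <= max_norm:
        return [list(vec) for vec in gradients], total
    scale = max_norm / total
    return [[g * scale for g in vec] for vec in gradients], total


clipped, norm_before = clip_by_global_norm([[3.0, 0.0], [0.0, 4.0]],
                                           max_norm=1.0)
```

Because every component is scaled by the same factor, the update keeps its direction and only its length is capped.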

Table 1: Diagnostic Patterns in Loss Plots

| Pattern | Training Loss | Validation Loss | Primary Diagnosis | Common in DeepLabCut when... |

|---|---|---|---|---|

| Diverging Curves | Low, continues to decrease | Starts increasing after a point | Overfitting | Training set is too small or lacks diversity in animal posture/background. |

| High Parallel Curves | High, decreases slowly | High, decreases slowly | Underfitting | Backbone network is too shallow for complex multi-animal tracking. |

| Plateaued Curves | Stable, minimal change | Stable, minimal change | Optimization Plateau | Learning rate is poorly matched to the current training stage (typically too high to settle further) or architecture capacity is maxed for given data. |

| Volatile Validation | Normal, decreasing | Erratic, with sharp peaks | Data/Config Issue | Validation set contains anomalous frames or batch size is very small. |

Table 2: Recommended Hyperparameter Adjustments Based on Diagnosis

| Diagnosis | Learning Rate | Batch Size | Dropout Rate | Epochs | Primary Dataset Refinement Action |

|---|---|---|---|---|---|

| Overfitting | Consider slight decrease | Can decrease | Increase | Stop Early | Increase diversity & size of labeled set. |

| Underfitting | Can increase | Can increase | Decrease | Increase significantly | Ensure labeling covers full pose variation. |

| Plateauing | Schedule decrease | - | - | Continue post-LR drop | Add challenging edge-case frames. |

| Volatile Val. | Decrease | Increase | - | - | Scrutinize validation set quality. |

Experimental Protocols

Protocol 1: Systematic Diagnosis of a Suspicious Loss Plot

- Plot Generation: Ensure you are plotting loss values (e.g., MSE or RMSE) for both training and validation sets on the same axes, across all epochs.

- Baseline Comparison: Compare your plot against the patterns in Table 1.

- Controlled Alteration: Make one hyperparameter or dataset change at a time (e.g., only change Dropout rate from 0.2 to 0.5).

- Re-train & Compare: Re-train the model from scratch with the new setting and generate a new loss plot.

- Iterate: Based on the change, proceed with the next logical intervention from the troubleshooting guides.

Protocol 2: Creating a Robust Train/Validation Split for Behavioral Data

- Source Data: Pool all extracted video frames.

- Stratified Sampling: Ensure each set (train/val) contains proportional examples from each experimental condition, animal, and camera view.

- Temporal Separation: For time-series behavioral data, ensure frames from the same video clip are not split between train and validation sets to prevent data leakage.

- Size Allocation: For typical DeepLabCut projects, allocate 90-95% for training and 5-10% for validation.

- Sanity Check: Manually inspect a random sample of both sets to confirm representativeness.
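The temporal-separation rule can be enforced by assigning whole videos, not individual frames, to each set. The sketch below is illustrative only: the helper name is hypothetical, and it does not implement the stratification by condition, animal, or camera view that the protocol also calls for.

```python
import random
from collections import defaultdict


def split_by_video(frames, val_fraction=0.1, seed=0):
    """Split frames so that no video contributes to both sets.

    frames: list of (frame_path, video_name) pairs.
    Whole videos go to validation until roughly val_fraction of all
    frames is reached; the remaining videos go to training.
    """
    by_video = defaultdict(list)
    for path, video in frames:
        by_video[video].append(path)
    videos = sorted(by_video)
    random.Random(seed).shuffle(videos)
    target = val_fraction * len(frames)
    train, val = [], []
    for video in videos:
        bucket = val if len(val) < target else train
        bucket.extend(by_video[video])
    return train, val


frames = [(f"vid{v}/img{i:03d}.png", f"vid{v}")
          for v in range(10) for i in range(20)]
train, val = split_by_video(frames, val_fraction=0.1)
```

With few videos the achieved validation fraction can overshoot the target, since videos are never split.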

Visualizations

Diagram 1: Loss Plot Diagnosis Workflow

Diagram 2: Dataset Refinement Feedback Loop for Model Improvement

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for DeepLabCut Training & Diagnostics

| Item | Function/Explanation | Example/Note |

|---|---|---|

| DeepLabCut Suite | Core software for markerless pose estimation. | Includes deeplabcut.train_network, deeplabcut.evaluate_network. |

| TensorFlow/PyTorch | Underlying deep learning frameworks. | Required for creating and training models. |

| Plotting Library | Visualizing loss curves and metrics. | Matplotlib, Seaborn. |

| GPU Compute Resource | Accelerates model training significantly. | NVIDIA GPU with CUDA support. |

| Curated Video Database | Source material for training/validation frames. | High-resolution, well-lit behavioral videos. |

| Automated Annotation Tool | For efficient labeling of new training frames. | DeepLabCut's GUI, Active Learning features. |

| Hyperparameter Log | Tracks changes to LR, batch size, etc. | Weights & Biases, TensorBoard, or simple spreadsheet. |

| Validation Set "Bank" | A fixed, diverse set of frames for consistent evaluation. | Never used for training; critical for fair comparison. |

Troubleshooting Guides & FAQs

Q1: During DeepLabCut (DLC) training, my network shows persistently high error for a single, specific keypoint (e.g., the tip of a rodent's tail). What is the most likely cause and targeted remediation? A: This is a classic symptom of insufficient or poor-quality training data for that specific keypoint within a particular context. The network lacks the visual examples to generalize. The targeted remediation is to add training frames specifically where that keypoint is visible and labeled in varied contexts.

- Protocol:

- Identify High-Error Frames: From the DLC evaluation results, extract the frames with the highest mean pixel error for the problematic keypoint.

- Context Analysis: Manually review these frames to identify common failure contexts (e.g., extreme occlusion, unusual bending, motion blur, specific lighting).

- Frame Acquisition: Return to your raw video corpus and find new examples where the keypoint is visible in those challenging contexts, but also in standard poses.

- Label & Refine: Add these new frames to your training dataset, label the keypoint carefully, and retrain the network. Iterate 1-4 as needed.
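The first step of this protocol, ranking labeled frames by error for one keypoint, can be sketched as below. It assumes ground-truth labels and predictions have been loaded into dictionaries; the layout and the helper name `worst_frames` are illustrative.

```python
import math


def worst_frames(ground_truth, predictions, keypoint, n_frames):
    """Rank labeled frames by pixel error for one keypoint.

    ground_truth, predictions: dict frame -> dict keypoint -> (x, y).
    Returns (frame, error_px) pairs for the n_frames largest errors.
    Frames missing the keypoint in either dict are skipped.
    """
    errors = [
        (frame, math.dist(labels[keypoint], predictions[frame][keypoint]))
        for frame, labels in ground_truth.items()
        if keypoint in labels and keypoint in predictions.get(frame, {})
    ]
    return sorted(errors, key=lambda item: item[1], reverse=True)[:n_frames]


ground_truth = {
    "img001.png": {"tail_tip": (100.0, 200.0)},
    "img002.png": {"tail_tip": (110.0, 205.0)},
    "img003.png": {"tail_tip": (150.0, 220.0)},
}
predictions = {
    "img001.png": {"tail_tip": (103.0, 204.0)},
    "img002.png": {"tail_tip": (110.0, 206.0)},
    "img003.png": {"tail_tip": (120.0, 180.0)},
}
ranked = worst_frames(ground_truth, predictions, "tail_tip", n_frames=2)
```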

Q2: My model performs well in most conditions but fails systematically in a specific experimental context (e.g., during a social interaction against a complex background). How should I address this? A: This indicates a context-specific generalization gap. Remediation involves enriching the training set with frames representative of that underrepresented context.

- Protocol:

- Define the Context: Precisely define the failing condition (e.g., "mouse in home-cage corner during social investigation").

- Strategic Sampling: From videos of experiments containing that context, perform targeted sampling. Do not just add more random frames.

- Balance the Dataset: Ensure the new context-specific frames do not overwhelm the original dataset to maintain performance on previous tasks.

- Retrain & Validate: Retrain the network and validate its performance separately on the previously good contexts and the newly targeted challenging context.

Q3: How many new frames should I add for a targeted remediation to be effective without causing overfitting or catastrophic forgetting? A: There is no fixed number; effectiveness is determined by diversity and quality of the new examples. However, a systematic approach is recommended.

- Protocol & Data:

- Start with a modest addition (e.g., 50-200 frames focused on the high-error keypoint/context).

- Retrain the network from its last trained state (resume training) for a limited number of iterations.

- Monitor the loss plots for both the training and held-out evaluation set. A significant divergence suggests overfitting.

- Evaluate on a separate, balanced test video containing both standard and challenging contexts. Use the following table to track key metrics:

| Metric | Before Remediation (Pixel Error) | After Remediation (Pixel Error) | Target Change |

|---|---|---|---|

| Problematic Keypoint (Avg) | | | Decrease >15% |

| Problematic Keypoint (Worst 5%) | | | Decrease >20% |

| Other Keypoints (Avg) | | | No significant increase |

| Inference Speed (FPS) | | | No significant decrease |

Q4: What are the computational trade-offs of implementing a targeted frame remediation strategy? A: The primary trade-off is between improved accuracy and increased data handling/compute time.

- Data:

- Storage: Adding high-resolution frames increases dataset size.

- Training Time: Retraining requires additional GPU hours. The increase is less than training from scratch if resuming from weights.

- Labeling Time: Human-in-the-loop labeling is the most time-intensive step. Efficient tools (like the DLC GUI) are critical.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in DLC Dataset Refinement |

|---|---|

| DeepLabCut (v2.3+) | Core software for pose estimation; provides tools for network training, evaluation, and refinement. |

| Labeling GUI (DLC) | Graphical interface for efficient manual labeling and correction of keypoints in extracted frames. |

| Jupyter Notebooks | Environment for running DLC pipelines, analyzing results, and visualizing error distributions. |

| Video Sampling Script | Custom Python script to programmatically extract frames based on error metrics or contextual triggers. |

| High-Contrast Animal Markers (e.g., non-toxic paint) | Used sparingly in difficult cases to temporarily enhance visual features of low-contrast keypoints for the network. |

| Dedicated GPU (e.g., NVIDIA RTX Series) | Accelerates the network retraining process, making iterative refinement feasible. |

| Structured Data Storage (e.g., HDF5 files) | Manages the expanded dataset of frames, labels, and associated metadata efficiently. |

Experimental Workflow for Targeted Remediation

Diagram Title: Targeted Remediation Workflow for DLC

Keypoint Error Diagnostic & Remediation Logic

Diagram Title: High-Error Keypoint Diagnostic Tree

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During DeepLabCut training, my loss plateaus early and does not decrease further. How should I adjust the learning rate and training iterations? A1: An early plateau often indicates a poorly chosen learning rate: too high and the loss oscillates without settling, too low and progress stalls. First, implement a learning rate scheduler. Start with a baseline of 0.001 and reduce it by a factor of 10 when the validation loss has not improved for 10 consecutive evaluation cycles. Increase the total training iterations so the scheduler has time to take effect; a common range for pose estimation is 500,000 to 1,000,000 iterations. Monitor the loss curve; a steady, gradual decline confirms the adjustment is working.

Q2: My training is unstable, with the loss fluctuating wildly between batches. What is the likely cause related to batch size and learning rate? A2: This is a classic sign of a batch size that is too small coupled with a learning rate that is too high. Small batches provide noisy gradient estimates. Reduce the learning rate proportionally when decreasing batch size. Use the linear scaling rule as a guideline: if you multiply the batch size by k, multiply the learning rate by k. For DeepLabCut on typical lab hardware, a batch size of 8 is a stable starting point with a learning rate of 0.001.

Q3: How do I determine the optimal number of training iterations to avoid underfitting or overfitting in my behavioral analysis model? A3: Use iterative refinement guided by validation performance. Split your dataset (e.g., 90% train, 10% validation). Train for a fixed, large number of iterations (e.g., 500k) while evaluating a validation metric (RMSE in pixels, or PCK, where higher is better) every 10,000 iterations. Plot the validation curve. The optimal stopping point is the iteration with the best validation score, which usually falls where the curve flattens and before it starts to worsen. Stopping at this point prevents overfitting.

Q4: When refining a DeepLabCut dataset, how should I balance adjusting network parameters versus adding more labeled training data? A4: Network parameter tuning should precede major additions of labeled data. Follow this protocol: First, optimize iterations, batch size, and learning rate on your current dataset (see Table 1). If the training error remains high, your model is underfitting; consider increasing model capacity or iterations. If the validation error is high while the training error is low, you are overfitting; add more diverse training frames to your dataset before further parameter tuning.
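The decision rule in A4 can be written as a small helper. This is only a sketch: the function name and the two pixel thresholds are assumptions chosen for illustration, and appropriate values depend on image resolution and animal size.

```python
def diagnose_fit(train_error_px, val_error_px,
                 train_target_px=4.0, val_target_px=7.0):
    """Classify a model as underfitting, overfitting, or acceptable.

    The two thresholds are hypothetical; pick values suited to your setup.
    """
    if train_error_px > train_target_px:
        # High training error: tune parameters or capacity first.
        return "underfitting"
    if val_error_px > val_target_px:
        # Low training error but high validation error: add diverse frames.
        return "overfitting"
    return "acceptable"


print(diagnose_fit(8.0, 9.0))    # training error above target
print(diagnose_fit(2.0, 12.0))   # large train/validation gap
print(diagnose_fit(2.5, 4.5))    # both within target
```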

Table 1: Parameter Performance on DeepLabCut Benchmark Datasets

| Dataset Type | Optimal Batch Size | Recommended Learning Rate | Typical Iterations to Convergence | Final Train Error (px) | Final Val Error (px) |

|---|---|---|---|---|---|

| Mouse Open Field | 8 - 16 | 0.001 - 0.0005 | 450,000 - 750,000 | 2.1 - 3.5 | 4.0 - 6.5 |

| Drosophila Courtship | 4 - 8 | 0.001 | 500,000 - 800,000 | 1.8 - 2.9 | 3.8 - 5.9 |

| Human Gait Lab | 16 - 32 | 0.0005 - 0.0001 | 600,000 - 950,000 | 3.5 - 5.0 | 6.5 - 9.0 |

Table 2: Impact of Batch Size on Training Stability (Learning Rate=0.001)

| Batch Size | Gradient Noise | Memory Usage (GB) | Time per 1k Iterations (s) | Recommended LR per Scaling Rule |

|---|---|---|---|---|

| 4 | High | ~2.1 | 85 | 0.0005 |

| 8 | Medium | ~3.8 | 92 | 0.001 |

| 16 | Low | ~7.0 | 105 | 0.002 |

| 32 | Very Low | ~13.5 | 135 | 0.004 |

Experimental Protocols

Protocol A: Systematic Learning Rate Search

- Fix batch size (e.g., 8) and iterations (e.g., 100,000 for initial scout).

- Train identical DeepLabCut ResNet-50 models with learning rates [0.1, 0.01, 0.001, 0.0001].

- Plot training loss curves for the first 100k iterations.

- Select the learning rate that shows a smooth, monotonic decrease without divergence (high LR) or stagnation (low LR).

- Refine with a finer search in steps of roughly 3x around the selected value (e.g., 0.003, 0.001, 0.0003).
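The selection step in Protocol A can be sketched as follows, assuming the loss curves have already been recorded and smoothed. The function names, the stagnation threshold, and the example curves are hypothetical. The check is a simplified proxy for "smooth, monotonic decrease": it only flags divergence and stagnation.

```python
import math


def classify_curve(losses, min_rel_drop=0.2):
    """Label a (smoothed) training-loss curve as 'diverged', 'stagnant', or 'ok'."""
    if any(math.isnan(x) or math.isinf(x) for x in losses) or losses[-1] > losses[0]:
        return "diverged"
    rel_drop = (losses[0] - losses[-1]) / losses[0]
    return "ok" if rel_drop >= min_rel_drop else "stagnant"


def pick_learning_rate(curves):
    """curves maps learning rate -> list of loss values; returns the chosen rate."""
    usable = {lr: c for lr, c in curves.items() if classify_curve(c) == "ok"}
    if not usable:
        raise ValueError("no learning rate produced a usable loss curve")
    # Among usable rates, prefer the one with the lowest final loss.
    return min(usable, key=lambda lr: usable[lr][-1])


def refine_grid(lr, factor=3.0):
    """Candidate rates one multiplicative step above and below lr."""
    return [lr * factor, lr, lr / factor]


# Made-up loss curves for the four scouting runs.
curves = {
    0.1: [1.0, 5.0, float("nan")],   # diverges
    0.01: [1.0, 0.5, 0.3],
    0.001: [1.0, 0.4, 0.1],
    0.0001: [1.0, 0.98, 0.95],       # stagnates
}
best = pick_learning_rate(curves)
print(best, refine_grid(best))
```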

Protocol B: Determining Maximum Efficient Batch Size

- Monitor GPU memory usage using tools like nvidia-smi.

- Start with a batch size of 4 and a conservatively low learning rate.

- Gradually increase the batch size until GPU memory utilization reaches 85-90%.

- Record this as your maximum feasible batch size (B_max).

- Test training stability at B_max with the scaled learning rate (LR * (B_max/8), where LR is the rate that was stable at the reference batch size of 8).
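The scaled learning rate in the last step follows the linear scaling rule. A minimal sketch, assuming a reference batch size of 8 and a baseline rate of 0.001 (the function name is hypothetical):

```python
def scaled_learning_rate(base_lr, base_batch_size, new_batch_size):
    """Linear scaling rule: the learning rate grows in proportion to batch size."""
    if base_batch_size <= 0 or new_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * new_batch_size / base_batch_size


# Baseline assumed here: LR 0.001 at batch size 8.
for batch_size in (4, 8, 16, 32):
    print(batch_size, scaled_learning_rate(0.001, 8, batch_size))
```

The rule is a guideline rather than a guarantee, so the stability test at B_max is still needed after scaling.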

Protocol C: Iteration Scheduling with Early Stopping

- Set a maximum iteration cap (e.g., 1,000,000).

- Evaluate the model on a held-out validation set every 10,000 iterations.

- Record the validation score (e.g., Mean Pixel Error).

- Implement a patience counter. If the validation score does not improve for 5 evaluation cycles (50k iterations), multiply the learning rate by 0.5 (i.e., halve it).

- Stop training entirely if no improvement is seen for 10 evaluation cycles (100k iterations). The best model is the one at the iteration with the lowest validation error.
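The patience logic in Protocol C can be replayed on a recorded list of validation errors without touching the training loop. The sketch below is not part of DeepLabCut; the function name and the example error sequence are hypothetical, and lower error is assumed to be better.

```python
def run_schedule(val_errors, eval_every=10_000, lr=0.001,
                 lr_patience=5, stop_patience=10, lr_factor=0.5):
    """Replay validation errors through the patience rules.

    Returns (best_iteration, best_error, final_lr, stop_iteration).
    """
    best_error = float("inf")
    best_iter = None
    stale = 0        # evaluation cycles since the last improvement
    iteration = 0
    for cycle, err in enumerate(val_errors, start=1):
        iteration = cycle * eval_every
        if err < best_error:
            best_error, best_iter, stale = err, iteration, 0
            continue
        stale += 1
        if stale == stop_patience:
            break                      # no improvement for too long: stop
        if stale % lr_patience == 0:
            lr *= lr_factor            # plateau: reduce the learning rate
    return best_iter, best_error, lr, iteration


# Made-up validation errors: improvement for 3 cycles, then a plateau.
errors = [6.0, 5.0, 4.5] + [4.6] * 12
print(run_schedule(errors))
```

With this sequence the best checkpoint is at 30,000 iterations, the learning rate is halved once after five stale cycles, and training stops at 130,000 iterations.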

Visualizations

Title: DeepLabCut Parameter Optimization Workflow

Title: Learning Rate Impact on Training Dynamics

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Experiment | Example/Details |

|---|---|---|

| DeepLabCut (ResNet-50 Backbone) | Base convolutional neural network for feature extraction and pose estimation. | Pre-trained on ImageNet; provides robust initial weights for transfer learning. |

| NVIDIA GPU with CUDA | Hardware accelerator for high-speed matrix operations essential for deep learning. | Minimum 8GB VRAM (e.g., RTX 3070/4080) required for batch sizes > 8. |

| Adam Optimizer | Adaptive stochastic optimization algorithm; adjusts learning rate per parameter. | Default beta values (0.9, 0.999); used to update network weights. |

| Step Decay LR Scheduler | Predefined schedule to reduce learning rate at specific iterations. | Halves the LR every 100k iterations; prevents oscillation near loss minimum. |

| Labeled Behavioral Video Dataset | Refined training data specific to the research domain (e.g., rodent gait). | Should contain diverse frames covering full behavioral repertoire and camera views. |

| Validation Set (PCK Metric) | Held-out data for evaluating model performance and preventing overfitting. | Uses Percentage of Correct Keypoints (PCK) at a threshold (e.g., 5 pixels) for scoring. |

| TensorBoard / Weights & Biases | Visualization toolkit for monitoring loss, gradients, and predictions in real-time. | Essential for diagnosing parameter-related issues like exploding gradients. |
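For reference, the PCK metric listed in the table can be computed in a few lines. This is a minimal sketch with made-up coordinates; the function name is hypothetical, and the fixed 5-pixel threshold follows the example in the table rather than any normalization by body size.

```python
import math


def pck(predicted, ground_truth, threshold_px=5.0):
    """Fraction of keypoints whose Euclidean error is within threshold_px."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(
        1
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
        if math.hypot(px - gx, py - gy) <= threshold_px
    )
    return correct / len(predicted)


# Made-up (x, y) coordinates for four keypoints.
pred = [(10, 10), (20, 20), (30, 30), (40, 40)]
truth = [(10, 13), (20, 20), (36, 38), (40, 44)]
print(pck(pred, truth))  # errors are 3, 0, 10 and 4 px, so 3 of 4 are within 5 px
```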

Leveraging Transfer Learning and Fine-tuning with Pre-trained Models

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During fine-tuning of a DeepLabCut (DLC) pose estimation model, I encounter "NaN" or exploding loss values almost immediately. What are the primary causes and solutions?

A: This is commonly caused by an excessively high learning rate for the new layers or the entire model during fine-tuning.

- Protocol: Implement a learning rate finder experiment. Perform a short training run (e.g., 100-200 iterations) while exponentially increasing the learning rate from a low value (1e-7) to a high value (1e-1). Plot loss vs. learning rate.

- Solution: Choose a learning rate from the region where the loss is decreasing steeply but steadily (typically 1-2 orders of magnitude lower than where it explodes). For fine-tuning a pre-trained ResNet backbone in DLC, start with a learning rate between 1e-4 and 1e-5 for the new layers, and 1-2 orders of magnitude lower for the pre-trained layers.
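The learning rate finder described above has two parts: generating the exponential sweep and reading a suggestion off the recorded losses. The sketch below covers both under stated assumptions. The function names and the loss values are hypothetical, and the "steepest descent per decade" heuristic is one common way to choose the rate, not the only one.

```python
import math


def lr_sweep(start_lr=1e-7, end_lr=1e-1, num_steps=100):
    """Exponentially spaced learning rates from start_lr to end_lr."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    ratio = (end_lr / start_lr) ** (1.0 / (num_steps - 1))
    return [start_lr * ratio ** i for i in range(num_steps)]


def suggest_lr(lrs, losses):
    """Learning rate at the steepest loss decrease per log10(lr) step."""
    best_idx, best_slope = None, 0.0
    for i in range(1, len(lrs)):
        slope = (losses[i] - losses[i - 1]) / (math.log10(lrs[i]) - math.log10(lrs[i - 1]))
        if slope < best_slope:
            best_idx, best_slope = i, slope
    if best_idx is None:
        raise ValueError("loss never decreased during the sweep")
    return lrs[best_idx]


# Coarse 7-step sweep (one step per decade) with made-up losses.
lrs = lr_sweep(num_steps=7)
losses = [1.00, 0.98, 0.90, 0.60, 0.45, 0.80, 5.00]
print(suggest_lr(lrs, losses))
```

In this made-up sweep the loss falls fastest around 1e-4 and explodes near 1e-2, which matches the guidance to stay one to two orders of magnitude below the point of divergence.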

Q2: My fine-tuned model performs worse on my refined dataset than the generic pre-trained model. What is happening?

A: This indicates catastrophic forgetting or a domain shift too large for the current fine-tuning strategy.

- Protocol: Conduct a staged fine-tuning and evaluation experiment:

- Freeze all pre-trained layers, train only the new head for 50k iterations. Evaluate.

- Unfreeze the last one or two stages of the backbone, reduce learning rate by 10x, train for another 50k iterations. Evaluate.

- Compare performance at each stage on a held-out validation set from your refined dataset.

- Solution: If performance degrades after stage 2, your refined dataset may be too small or divergent. Apply stronger data augmentation (e.g., motion blur, histogram matching) or consider a lighter unfreezing schedule. Layer-wise learning rate decay is also recommended.
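Layer-wise learning rate decay, mentioned in the solution above, assigns progressively smaller rates to earlier backbone stages so that generic low-level features change slowly. A minimal sketch follows; the stage names, the head rate, and the decay factor are all assumptions chosen for illustration.

```python
def layerwise_learning_rates(stage_names, head_lr=1e-4, decay=0.5):
    """Assign smaller learning rates to earlier stages.

    stage_names must be ordered from the input side to the output head.
    """
    n = len(stage_names)
    return {
        name: head_lr * decay ** (n - 1 - i)
        for i, name in enumerate(stage_names)
    }


# Hypothetical stage names for a ResNet-style backbone plus a new head.
stages = ["stage1", "stage2", "stage3", "stage4", "head"]
for name, lr in layerwise_learning_rates(stages).items():
    print(f"{name}: {lr:.2e}")
```

In a PyTorch-based setup, a mapping like this could be turned into optimizer parameter groups, one group per stage with its own `lr`.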

Q3: How do I decide which layers of a pre-trained model to freeze and which to fine-tune for my specific animal behavior in drug development studies?

A: The decision should be based on the similarity between your data (e.g., rodent gait under compound) and the pre-training data (e.g., ImageNet), and the complexity of your refined keypoints.

- Protocol: Perform an ablation study on layer unfreezing. Create a table comparing the results of different unfreezing strategies on your validation set's Mean Absolute Error (MAE).

| Unfreezing Strategy | Trainable Params | MAE (pixels) | Training Time (hrs) | Notes |

|---|---|---|---|---|

| Only New Head | ~0.5M | 4.2 | 1.5 | Fast, but may not adapt features. |

| Last 2 Stages + Head | ~5M | 3.1 | 3.0 | Good balance for similar domains. |

| All Layers (Full FT) | ~25M | 2.8 | 6.5 | Best MAE, risk of overfitting on small sets. |

| Last Stage + Head | ~2M | 3.5 | 2.5 | Efficient for minor domain shifts. |

- Solution: Start with unfreezing only the last stage. If performance plateaus, iteratively unfreeze earlier stages while monitoring validation loss for overfitting.

Q4: When refining a DLC dataset with novel keypoints (e.g., specific paw angles), does transfer learning from a standard pose model still provide benefits?

A: Yes, but the benefit is primarily in the early and middle feature layers that detect general structures (edges, textures, limbs), not in the final keypoint localization layers.

- Protocol: Use a feature visualization technique. Pass sample images through the pre-trained and fine-tuned networks and visualize the activation maps of intermediate convolutional layers (e.g., using Grad-CAM). You will see that lower/mid-level features remain relevant.

- Solution: Use a pre-trained model (e.g., ResNet-50) as a backbone. Replace the final DLC prediction heads entirely with new ones matching your novel keypoint count. Keep the first half of the backbone frozen during initial training.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Fine-tuning for DLC Dataset Refinement |

|---|---|

| Pre-trained Model Weights (e.g., ResNet, EfficientNet) | Provides robust, generic feature extractors, drastically reducing required training data and time. The foundational "reagent" for transfer learning. |

| Refined/Labeled Dataset | The core experimental asset. High-quality, consistently labeled images/videos specific to your research domain (e.g., drug-treated animals). |

| Learning Rate Scheduler (e.g., Cosine Annealing) | Dynamically adjusts the learning rate during training, helping to converge to a better minimum and manage the fine-tuning of pre-trained weights. |

| Feature Extractor Hook (e.g., PyTorch register_forward_hook) | A debugging tool to extract and visualize activation maps from intermediate layers, diagnosing if features are being successfully transferred or forgotten. |

| Gradient Clipping | A stability tool that prevents exploding gradients by capping their maximum magnitude, crucial when fine-tuning deep pre-trained networks. |

| Data Augmentation Pipeline (e.g., Imgaug) | Synthetically expands your refined dataset by applying random transformations (rotation, shear, noise), improving model generalization and preventing overfitting. |
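To make the gradient clipping entry concrete, the sketch below clips a flat list of gradient values by their global L2 norm. It is a plain-Python illustration with made-up numbers and a hypothetical function name; deep learning frameworks provide their own clipping utilities that operate on tensors.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale gradients so their global L2 norm does not exceed max_norm.

    Returns (clipped_grads, original_norm).
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


# Made-up gradient values with norm 5.0, clipped to norm 1.0.
clipped, norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(norm, clipped)
```

Clipping rescales all gradients by the same factor, so the update direction is preserved and only its magnitude is limited.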

Experimental Workflow for Fine-tuning in DLC Research

Signaling Pathway: Transfer Learning Impact on Model Performance

Benchmarking Success: Validating Your Refined Model for Scientific Rigor

Troubleshooting Guides & FAQs

FAQ 1: During my DeepLabCut (DLC) training, my train error is low but my test error is very high. What does this mean and how can I fix it?

- Answer: This indicates severe overfitting. Your model has memorized the training data but fails to generalize to unseen data (the test set). This is a critical issue in dataset refinement research.

- Troubleshooting Steps:

- Increase Dataset Size & Diversity: This is the most effective solution. Add more labeled frames to your training set, ensuring they capture the full range of animal poses, lighting conditions, and camera angles relevant to your experiments.

- Apply Data Augmentation: Use DLC's built-in augmentation (e.g.,