The BDQSA Model: A Complete Framework for Preprocessing Behavioral Science Data in Drug Development Research

Abstract

This article provides a comprehensive guide to the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model for preprocessing behavioral science data. Tailored for researchers and drug development professionals, it covers the model's foundational principles, step-by-step application methodology, common troubleshooting strategies, and validation against other frameworks. The guide bridges the gap between raw behavioral data collection and robust statistical analysis, ensuring data integrity for translational research and clinical trials.

What is the BDQSA Model? A Foundational Guide for Behavioral Data Preprocessing

Background

The BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) framework is a standardized, modular model for preprocessing behavioral science data. Its primary function is to ensure methodological rigor, reproducibility, and data quality, starting before data collection begins. In drug development, particularly for CNS (central nervous system) targets, the framework systematically captures the metadata needed to interpret trial outcomes. It addresses common pitfalls in behavioral research, such as inconsistent baseline reporting, environmental confounders, and unvalidated measurement tools, thereby strengthening the link between preclinical findings and clinical translation.

Design

The framework's design is a sequential, interdependent pipeline where each module informs the next. The Background module establishes the theoretical and neurobiological justification. The Design module defines the experimental protocol (e.g., between/within-subjects, control groups, randomization). The Questionnaires/Assays module selects and validates measurement instruments. The Subjects module specifies inclusion/exclusion criteria and sample size justification. The Apparatus module details the physical and software setup for data acquisition. This structure forces explicit documentation of variables that are often overlooked.

Questionnaires & Behavioral Assays

This module focuses on the operationalization of dependent variables. Selection must be hypothesis-driven and account for the target construct's multi-dimensionality (e.g., measuring both anhedonia and psychomotor agitation in depression models). A combination of validated, species-appropriate tools is required.

Table 1: Core Behavioral Assays for Preclinical CNS Drug Development

| Assay Category | Example Assays | Primary Construct Measured | Key Validation Consideration |

|---|---|---|---|

| Anxiety & Fear | Elevated Plus Maze, Open Field, Fear Conditioning | Avoidance, Hypervigilance | Lighting, noise levels, prior handling |

| Depression & Despair | Forced Swim Test, Tail Suspension Test, Sucrose Preference | Behavioral Despair, Anhedonia | Time of day, water temperature, habituation |

| Social Behavior | Three-Chamber Test, Social Interaction Test | Social Motivation, Recognition | Gender/Strain of stimulus animal, cage familiarity |

| Cognition | Morris Water Maze, Novel Object Recognition, T-Maze | Spatial Memory, Working Memory | Distinct visual cues, inter-trial interval consistency |

| Motivation & Reward | Operant Self-Administration, Conditioned Place Preference | Drug-Seeking, Reward Valuation | Reinforcer magnitude, schedule of reinforcement |

Detailed Protocol: Sucrose Preference Test (SPT) for Anhedonia

- Objective: To measure anhedonia, a core symptom of depression, by assessing the preference for a natural reward (sucrose solution) over water.

- Materials: Home cages, two identical drinking bottles (sipper tubes), 1-2% sucrose solution, tap water, scale.

- Pre-Test (Habituation): 48 hours prior, expose subjects to two bottles of water to prevent side bias. 24 hours prior, expose to one bottle of sucrose and one of water for 1 hour to acclimate.

- Test Procedure:

- Deprive animals of water for 12-16 hours (food ad libitum).

- At the start of the dark cycle, weigh and present two pre-weighed bottles: one with sucrose solution and one with water.

- Place bottles in a counterbalanced left/right position across subjects.

- Allow free access for 1-4 hours (duration must be consistent within a study).

- Re-weigh bottles. Calculate sucrose preference: [Sucrose intake (g) / Total fluid intake (g)] x 100.

- Critical Controls: Use fresh sucrose solution daily. Clean bottles to prevent contamination. Control for order effects by switching bottle positions midway in longer tests.
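The preference calculation in the final test step can be sketched as follows. This is a minimal illustration, assuming intake is taken as the drop in bottle weight in grams; the function and argument names are hypothetical and not part of any published BDQSA tooling.

```python
def sucrose_preference(sucrose_before_g, sucrose_after_g,
                       water_before_g, water_after_g):
    """Return sucrose preference (%) from pre/post bottle weights in grams."""
    # Intake is the weight lost by each bottle over the access period.
    sucrose_intake = sucrose_before_g - sucrose_after_g
    water_intake = water_before_g - water_after_g
    if sucrose_intake < 0 or water_intake < 0:
        raise ValueError("a bottle gained weight; check the weighing order")
    total = sucrose_intake + water_intake
    if total == 0:
        raise ValueError("no fluid consumed; preference is undefined")
    # [Sucrose intake (g) / Total fluid intake (g)] x 100
    return 100.0 * sucrose_intake / total


# Example: 6 g of sucrose solution and 2 g of water consumed.
print(sucrose_preference(250.0, 244.0, 250.0, 248.0))  # 75.0
```

Raising an error on zero total intake, rather than returning 0 or 50, keeps animals that did not drink from silently entering the group mean.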

Subjects

This module demands a comprehensive biological and experimental history. It moves beyond simple strain/age/weight reporting to include factors that significantly modulate behavioral phenotypes.

Table 2: Subject Metadata Requirements in BDQSA

| Category | Required Data Points | Rationale |

|---|---|---|

| Biological Specs | Species, Strain, Supplier, Genotype, Age, Weight, Sex | Basal genetic and neurobiological differences impact behavior. |

| Housing & Husbandry | Cage type/dimensions, # animals per cage, bedding, light/dark cycle, room temp/humidity, diet, water access. | Environmental enrichment and stress affect models of depression/anxiety. |

| Life History | Weaning age, shipping history, prior testing, surgical/ pharmacological history. | Early life stress and test history are critical confounders. |

| Sample Size | N per group, total N, power analysis justification (alpha, power, effect size estimate). | Ensures statistical robustness and reduces Type I/II errors. |
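As an illustration of the power analysis justification listed in the Sample Size row, the sketch below estimates the number of animals per group for a two-group comparison of means. It assumes a two-sided test and uses the normal approximation, which slightly underestimates the n given by an exact t-based calculation; the function name and defaults are illustrative choices, not values prescribed by the model.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    effect_size_d is the expected standardized difference (Cohen's d).
    Uses the normal approximation: n = 2 * ((z_(1-alpha/2) + z_power) / d)^2.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


print(n_per_group(0.8))  # 25
print(n_per_group(1.0))  # 16
```

Recording alpha, power, and the effect size estimate alongside the resulting n gives a reviewer everything needed to reproduce the justification.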

Apparatus

Detailed apparatus specification minimizes "laboratory drift" and technical noise. Documentation should enable precise replication.

The Scientist's Toolkit: Essential Apparatus for Rodent Behavioral Research

| Item | Function & Specification Notes |

|---|---|

| Video Tracking System | (e.g., EthoVision, Any-Maze). Automated tracking of position, movement, and behavior. Must specify software version, sampling rate (e.g., 30 Hz), and tracking algorithm. |

| Sound-Attenuating Cubicles | Isolates experimental arena from external noise and light fluctuations. Must report ambient light level inside cubicle (lux) and background noise level (dB). |

| Behavioral Arena | (e.g., Open Field box, Maze). Specify exact material (white PVC, black acrylic), dimensions (cm), and wall height. |

| Calibrated Stimulus Delivery | For fear conditioning: precise shock generator (mA, duration). For operant boxes: pellet dispenser, liquid dipper, or syringe pump for drug infusion. Require calibration logs. |

| Data Acquisition Hardware | (e.g., Med-PC for operant chambers, Noldus IO Box). Interfaces apparatus with software. Document firmware version and configuration files. |

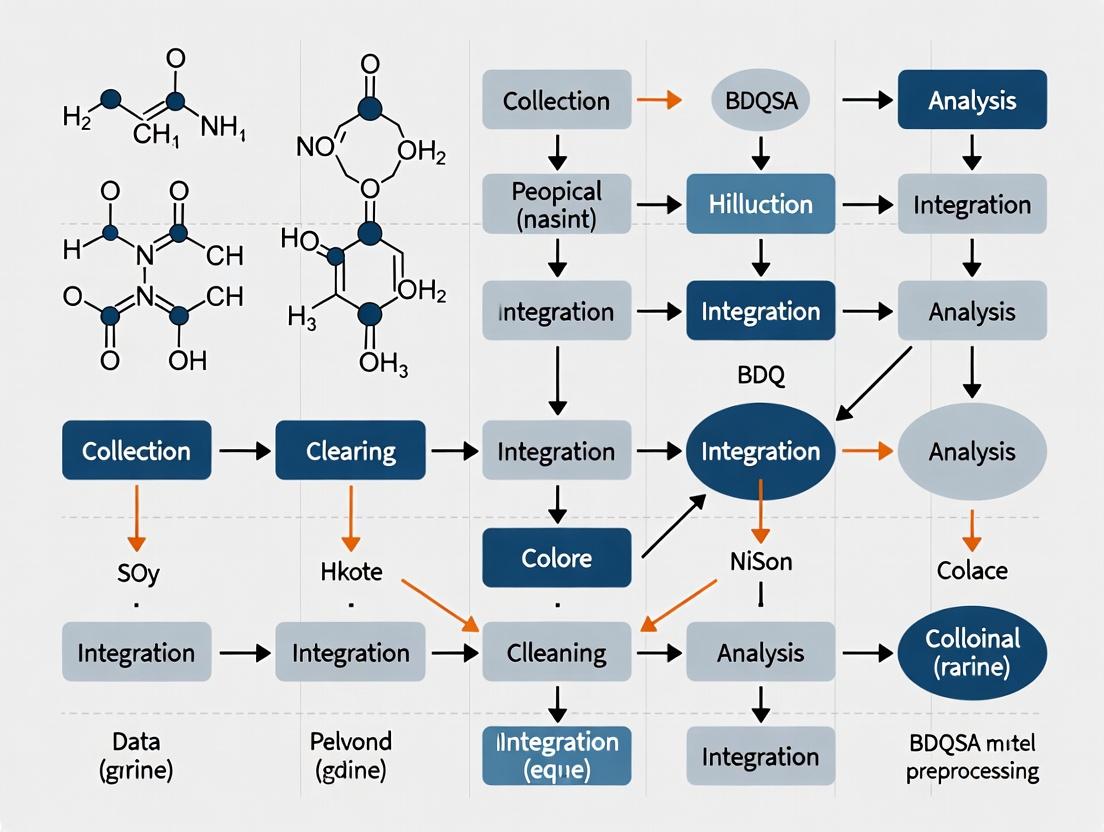

Visualizations

BDQSA Framework Sequential Workflow

Sucrose Preference Test Protocol Steps

The Critical Role of Preprocessing in Behavioral Science and Translational Research

Behavioral science and translational research generate complex, high-dimensional data from sources like video tracking, electrophysiology, and clinical assessments. The BDQSA model (Bias Detection, Quality control, Standardization, and Artifact removal) provides a systematic framework for preprocessing this data. This model is critical for ensuring that downstream analyses in neuropsychiatric drug development are valid, reproducible, and clinically meaningful. Effective preprocessing directly impacts the translational "bridge" from animal models to human clinical trials.

Application Notes: Key Preprocessing Stages & Impact

Bias Detection and Mitigation

Experimental bias can arise from experimenter effects, time-of-day testing, or apparatus variability. Preprocessing must identify and correct these confounds to isolate true biological or treatment signals.

- Application: In a longitudinal mouse study of an antidepressant candidate, systematic bias was detected in mobility metrics between cohorts tested in morning vs. evening sessions.

- Quantitative Impact: The table below shows the effect of bias correction on the primary outcome measure (Forced Swim Test immobility time).

Table 1: Impact of Temporal Bias Correction on Behavioral Readout

| Experimental Group | Raw Immobility Time (s) Mean ± SEM | Corrected Immobility Time (s) Mean ± SEM | p-value (vs. Control) |

|---|---|---|---|

| Vehicle Control (AM) | 185.2 ± 12.1 | 172.5 ± 10.8 | -- |

| Drug Candidate (PM) | 150.4 ± 15.3 | 165.8 ± 11.2 | 0.62 |

| Drug Candidate (Bias-Corrected) | 150.4 ± 15.3 | 142.1 ± 9.7 | 0.04 |

Quality Control (QC) and Artifact Removal

Automated behavioral data is contaminated by artifacts (e.g., temporary loss of video tracking, electrical noise in EEG). Rigorous QC pipelines are required.

- Application: Automated grooming detection in a rodent model of OCD using video analysis. Raw data includes frames where the animal is obscured by the cage lid.

- Protocol: A two-step QC protocol is implemented:

- Frame-by-frame Confidence Scoring: The pose estimation algorithm (e.g., DeepLabCut) outputs a confidence score (0-1). Frames with scores <0.9 are flagged.

- Artifact Interpolation: Flagged frames are not simply deleted. Grooming bout kinematics (e.g., paw trajectory) are interpolated from surrounding high-confidence frames using a cubic spline algorithm.

Table 2: Effect of QC on Grooming Bout Detection Accuracy

| QC Stage | Total Grooming Bouts Detected | False Positives (Manual Check) | False Negatives (Manual Check) | Detection F1-Score |

|---|---|---|---|---|

| Raw Output | 87 | 23 | 11 | 0.79 |

| After Confidence Filtering & Interpolation | 79 | 5 | 8 | 0.92 |
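The F1 scores in the table follow directly from the bout counts. The short sketch below shows the arithmetic, assuming that true positives equal detected bouts minus false positives; the function name is illustrative.

```python
def detection_scores(detected, false_positives, false_negatives):
    """Return (precision, recall, F1) from manually checked detection counts."""
    true_positives = detected - false_positives
    precision = true_positives / detected
    recall = true_positives / (true_positives + false_negatives)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


for label, counts in {"raw": (87, 23, 11), "after QC": (79, 5, 8)}.items():
    p, r, f1 = detection_scores(*counts)
    print(f"{label}: precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
# raw: precision=0.74 recall=0.85 F1=0.79
# after QC: precision=0.94 recall=0.90 F1=0.92
```

Most of the gain comes from precision: confidence filtering removes spurious bouts while recall improves only modestly.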

Standardization and Normalization

Data must be scaled and transformed to enable comparison across subjects, sessions, and labs. This is crucial for meta-analysis and building cross-species translational biomarkers.

- Application: Standardizing vocalization data (ultrasonic vocalizations in rodents, speech analysis in humans) for anxiety phenotyping.

- Protocol: Z-score normalization within subject, followed by cohort scaling.

- For each subject, extract features (e.g., call rate, mean frequency, duration).

- Normalize each feature to the subject's own baseline session: z = (value - mean_baseline) / std_baseline.

- Scale the entire treatment cohort (e.g., drug group) to the mean and standard deviation of the control cohort's post-treatment z-scores. This creates a standardized effect size.
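The two normalization steps above can be sketched as follows. This is a minimal illustration under stated assumptions: the sample standard deviation is used (the protocol does not say which estimator applies), each subject contributes one summary z-score to the cohort step, and the function names are hypothetical.

```python
from statistics import mean, stdev


def baseline_z(values, baseline):
    """Z-score session values against the same subject's baseline session."""
    mu, sd = mean(baseline), stdev(baseline)
    if sd == 0:
        raise ValueError("baseline has zero variance; z-score is undefined")
    return [(v - mu) / sd for v in values]


def scale_to_control(treated_z, control_z):
    """Re-express treated-subject z-scores relative to the control cohort."""
    mu, sd = mean(control_z), stdev(control_z)
    if sd == 0:
        raise ValueError("control cohort has zero variance")
    return [(z - mu) / sd for z in treated_z]


# One subject: baseline call rates of 10, 12, 14 per min; test session at 16.
print(baseline_z([16], [10, 12, 14]))            # [2.0]
# Cohort step: control z-scores 0, 1, 2; one treated subject at 3.
print(scale_to_control([3.0], [0.0, 1.0, 2.0]))  # [2.0]
```

Applying the within-subject step first removes stable individual differences, so the cohort step compares changes from baseline and not raw feature levels.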

Experimental Protocols

Protocol 1: Preprocessing for Video-Based Social Interaction Assay

Aim: To generate bias-free, QC'd interaction scores from raw video tracking data for screening pro-social drug compounds.

Materials: See "Scientist's Toolkit" below.

Procedure:

- Data Acquisition: Record 10-minute sessions of test mouse with a novel conspecific in a rectangular arena. Use top-down camera at 30fps.

- Raw Tracking: Use EthoVision XT or similar software to generate raw time-series data: X,Y coordinates for test mouse and stimulus mouse, and arena zones (corner, center, interaction zone).

- BDQSA Preprocessing Pipeline:

- Bias Detection: Run a sham video (no animals) to check for uneven lighting causing false tracking. Plot heatmaps of occupancy for all control animals to detect side bias.

- Quality Control: Flag frames where tracking confidence is low or animals are merged. Interpolate short gaps (<10 frames). Discard sessions with >15% lost frames.

- Standardization: Calculate the primary metric, % time in interaction zone. Normalize this score for each animal to its performance in a prior habituation session (without stimulus) to control for baseline exploration.

- Artifact Removal: Implement a "freeze detection" algorithm (velocity < 2 cm/s for >2 s) to distinguish passive social interaction from immobility due to fear. Subtract freeze time from total interaction zone time.

- Output: A cleaned dataset containing, per subject, the bias-corrected, freeze-artifact-removed, and habituation-normalized % social interaction time.
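The quality control and artifact removal steps of this protocol can be sketched as below. This is an illustrative outline, not the output of any tracking package: it assumes per-frame coordinates with None for lost frames, per-frame speeds in cm/s computed after gap filling, and a boolean per-frame flag for the interaction zone. It also assumes that only freezing inside the interaction zone is subtracted. Habituation normalization is not covered.

```python
FPS = 30  # camera frame rate stated in the protocol


def lost_fraction(coords):
    """Fraction of frames with no tracked position (None)."""
    return sum(c is None for c in coords) / len(coords)


def fill_short_gaps(coords, max_gap=9):
    """Linearly interpolate interior runs of None of at most max_gap frames."""
    out = list(coords)
    i, n = 0, len(out)
    while i < n:
        if out[i] is None:
            j = i
            while j < n and out[j] is None:
                j += 1
            gap = j - i
            # Only fill gaps bounded by valid samples on both sides.
            if i > 0 and j < n and gap <= max_gap:
                left, right = out[i - 1], out[j]
                for k in range(i, j):
                    out[k] = left + (right - left) * (k - i + 1) / (gap + 1)
            i = j
        else:
            i += 1
    return out


def freeze_mask(speeds_cm_s, max_speed=2.0, min_duration_s=2.0, fps=FPS):
    """Mark frames in runs of speed < max_speed lasting > min_duration_s."""
    mask = [False] * len(speeds_cm_s)
    run_start = None
    # A trailing sentinel closes a run that reaches the end of the session.
    for i, s in enumerate(list(speeds_cm_s) + [float("inf")]):
        if s < max_speed:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if (i - run_start) / fps > min_duration_s:
                for k in range(run_start, i):
                    mask[k] = True
            run_start = None
    return mask


def active_interaction_pct(in_zone, speeds_cm_s, fps=FPS):
    """% of session frames spent in the interaction zone while not frozen."""
    frozen = freeze_mask(speeds_cm_s, fps=fps)
    active = sum(z and not f for z, f in zip(in_zone, frozen))
    return 100.0 * active / len(in_zone)


print(fill_short_gaps([0.0, None, None, 3.0]))  # [0.0, 1.0, 2.0, 3.0]
```

A session would be discarded before scoring when lost_fraction exceeds 0.15, matching the 15% rule in the Quality Control step.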

Protocol 2: Preprocessing of EEG for Translational Sleep Architecture Analysis

Aim: To clean and stage rodent polysomnography (EEG/EMG) data for comparison with human sleep studies in neuropsychiatric drug development.

Materials: See "Scientist's Toolkit" below.

Procedure:

- Raw Data Acquisition: Record 24-hour EEG (frontal and occipital leads) and EMG (nuchal muscle) signals in freely moving rodents. Sampling rate: 256 Hz.

- BDQSA Preprocessing Pipeline:

- Artifact Removal: Apply a 4th-order Butterworth bandpass filter (0.5-40 Hz) to EEG. Apply a 10-100 Hz bandpass and 60 Hz notch filter to EMG. Identify and replace major movement artifacts (EMG amplitude >10 SD from mean) using wavelet decomposition and reconstruction.

- Quality Control: Calculate the power spectral density (PSD) for each 4-second epoch. Epochs with total power in the 50-60 Hz band exceeding a set threshold are marked for manual review.

- Standardization: Use standardized scoring criteria (Rodent Sleep Consensus Committee) to label each epoch as Wake, NREM, or REM sleep based on delta (0.5-4 Hz)/theta (6-9 Hz) ratio and EMG amplitude.

- Bias Detection: Check for diurnal bias by comparing sleep architecture metrics (e.g., REM latency) between the first and second half of the light cycle for control animals. Apply a linear correction factor if a systematic drift is found.

- Output: A cleaned hypnogram (sleep stage plot) and derived metrics (sleep bout architecture, spectral power bands) ready for cross-species translational analysis with human PSG data.
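The epoching and EMG artifact identification steps can be sketched as follows. This illustration covers only the identification half of the Artifact Removal step: filtering, wavelet-based replacement, and sleep staging are left to a signal-processing library. The use of the whole-recording mean and population standard deviation as the reference is an assumption, and the function names are hypothetical.

```python
from statistics import mean, pstdev

FS_HZ = 256   # sampling rate stated in the protocol
EPOCH_S = 4   # epoch length used for PSD and staging


def split_epochs(signal, fs=FS_HZ, epoch_s=EPOCH_S):
    """Split into consecutive non-overlapping epochs; drop a partial tail."""
    n = fs * epoch_s
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]


def artifact_epochs(emg, fs=FS_HZ, epoch_s=EPOCH_S, n_sd=10.0):
    """Indices of epochs with any EMG sample > n_sd SDs from the mean."""
    mu, sd = mean(emg), pstdev(emg)
    flagged = []
    for idx, epoch in enumerate(split_epochs(emg, fs, epoch_s)):
        if any(abs(v - mu) > n_sd * sd for v in epoch):
            flagged.append(idx)
    return flagged


# Toy signal: low-amplitude alternation with one large movement spike.
emg = [1.0 if i % 2 == 0 else -1.0 for i in range(400)]
emg[201] = 1000.0
print(artifact_epochs(emg, fs=4, epoch_s=1))  # [50]
```

Because a single extreme sample inflates the standard deviation it is compared against, a robust spread estimate such as the median absolute deviation may be preferable on recordings with frequent movement artifacts.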

Visualizations

BDQSA Preprocessing Sequential Workflow

BDQSA in Translational Biomarker Pipeline

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Behavioral Data Preprocessing

| Item/Category | Example Product/Solution | Primary Function in Preprocessing |

|---|---|---|

| Behavioral Tracking Software | EthoVision XT, ANY-maze, DeepLabCut | Generates raw, coordinate-based time-series data from video for downstream QC and analysis. |

| Automated Sleep Scoring Software | SleepSign, NeuroKit2 (Python), SPIKE2 | Provides initial, standardized sleep/wake classification of EEG/EMG data prior to manual QC and artifact review. |

| Signal Processing Toolbox | MATLAB Signal Processing Toolbox, Python (SciPy, MNE-Python) | Enables filtering, Fourier transforms, and wavelet analysis for artifact removal and feature extraction. |

| Statistical Analysis Software | R (lme4, ggplot2), PRISM, Python (statsmodels, Pingouin) | Performs bias detection (linear mixed models), normalization, and generates QC visualizations. |

| Data Management Platform | LabKey Server, DataJoint, Open Science Framework (OSF) | Ensures standardized data structure, version control for preprocessing pipelines, and reproducible workflows. |

| Reference Datasets | Openly shared control group data, IBAGS (Intern. Behav. Arch.) | Provides essential baseline distributions for normalization and standardization steps within the BDQSA model. |

The evolution from experimental psychology to modern drug development represents a paradigm shift in understanding behavior and its biological underpinnings. This journey began with observational and behavioral studies, which provided the foundational metrics now essential in preclinical and clinical research. The contemporary approach is crystallized in data-driven models like the Behavioral Data Quality and Standardization Architecture (BDQSA), which provides a framework for preprocessing heterogeneous behavioral science data for integration with neurobiological and pharmacometric datasets. This standardization is critical for translating behavioral phenotypes into quantifiable targets for drug development.

Foundational Principles and the BDQSA Model

The BDQSA model formalizes the pipeline from raw behavioral data to analysis-ready variables suitable for computational modeling in drug discovery. Its core stages are:

- Stage 1: Data Acquisition & Source Validation.

- Stage 2: Temporal Alignment & Synchronization.

- Stage 3: Artifact Detection & Quality Flagging.

- Stage 4: Behavioral Feature Extraction (Standardized Ethograms).

- Stage 5: Normalization & Multimodal Integration.

This model ensures that data from traditional psychological tests (e.g., rodent forced swim test, human ECG) and modern tools (digital phenotyping, videotracking) are processed with consistent rigor, enabling direct correlation with molecular data from high-throughput screening (HTS) and 'omics' platforms.

Application Notes: Translating Behavioral Assays into Drug Screening Pipelines

Application Note 1: Predictive Validity of Classic Behavioral Tests for Antidepressant Screening

Classical tests like the Forced Swim Test (FST) and Tail Suspension Test (TST) remain cornerstones. BDQSA preprocessing is applied to raw immobility/latency data to control for inter-lab variability (e.g., water temperature, observer bias) before integration with transcriptomic data from harvested brain tissue.

Table 1: Efficacy Metrics of Classic Antidepressants in Rodent Models

| Behavioral Test | Drug (Class) | Mean % Reduction in Immobility (±SEM) | Effective Dose Range (mg/kg, i.p.) | Correlation with Clinical Efficacy (r) |

|---|---|---|---|---|

| Forced Swim Test (Rat) | Imipramine (TCA) | 42.3% (±5.1) | 15-30 | 0.78 |

| Forced Swim Test (Mouse) | Fluoxetine (SSRI) | 35.7% (±4.8) | 10-20 | 0.72 |

| Tail Suspension Test (Mouse) | Bupropion (NDRI) | 38.9% (±6.2) | 20-40 | 0.65 |

| Sucrose Preference Test* | Venlafaxine (SNRI) | +25.1% Preference (±3.7) | 10-20 | 0.81 |

*Anhedonia model; data indicates increase in sucrose consumption.

Application Note 2: High-Throughput Phenotypic Screening in CNS Drug Discovery

Modern automated systems (e.g., Intellicage, PhenoTyper) generate vast multivariate data (location, activity, social proximity). BDQSA stages 4 & 5 extract composite "behavioral signatures." For example, a pro-social signature might integrate distance to conspecific, number of interactions, and ultrasonic vocalization frequency. These signatures are used as multivariate endpoints in HTS.

Table 2: Throughput and Data Yield of Automated Behavioral Systems

| System | Primary Readouts | Animals per Run | Data Points per Animal per 24h | Key Application in Drug Development |

|---|---|---|---|---|

| Home Cage Monitoring | Activity, Circadian rhythm, Feeding | 12-96 | 10,000+ | Chronic toxicity/safety pharmacology |

| Videotracking (EthoVision) | Path length, Velocity, Zone occupancy | 1-12 | 1,000-5,000 | Acute efficacy, anxiolytics |

| Automated Cognitive Chamber | Correct trials, Latency, Perseveration | 8-32 | 2,000-8,000 | Cognitive enhancers for Alzheimer's |

| Wireless EEG/EMG | Sleep architecture, Seizure events | 4-16 | 86,400,000+ (1 kHz) | Anticonvulsants, sleep disorder drugs |

Detailed Experimental Protocols

Protocol 1: BDQSA-Compliant Forced Swim Test for Antidepressant Screening

Objective: To assess antidepressant-like activity of a novel compound with minimized experimental noise.

Materials: See "Scientist's Toolkit" below.

Preprocessing (BDQSA Stages 1-3):

- Acquisition & Validation: Record test sessions with synchronized overhead video and RFID animal ID. Metadata (water temp: 23-25°C, animal weight) is digitally logged.

- Temporal Alignment: Align video timeline with injection timeline (T0 = time of compound administration).

- Artifact Detection: Use software (e.g., DeepLabCut) to flag tracking errors (e.g., loss of animal due to splashing). Flagged periods are excluded from primary analysis.

Procedure:

- Administer test compound or vehicle control (n=10-12/group) at predetermined time pre-test (e.g., 30 min for acute).

- Place rodent in transparent cylinder (height 40cm, diameter 20cm) filled with water (depth 30cm) for 6 min.

- Record entire session. BDQSA Stage 4: Analyze only the final 4 min. Software extracts immobility (movement only necessary to keep head above water), swimming, and climbing.

- BDQSA Stage 5: Normalize immobility time to vehicle control group mean (set as 100%). Perform outlier detection (e.g., Grubbs' test) on normalized data.

Analysis: Compare normalized immobility between groups using one-way ANOVA. A significant reduction indicates antidepressant-like activity.
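The Stage 5 normalization and the first half of the outlier check can be sketched as below. This is an illustration with hypothetical function names. It computes the Grubbs statistic G only: the decision step compares G against a critical value derived from the t distribution for the chosen alpha and group size, which is best taken from a statistics package or published table.

```python
from statistics import mean, stdev


def percent_of_vehicle(values, vehicle_values):
    """Express immobility times as % of the vehicle group mean (= 100%)."""
    ref = mean(vehicle_values)
    return [100.0 * v / ref for v in values]


def grubbs_statistic(values):
    """Return (G, index) for the value farthest from the sample mean."""
    mu, sd = mean(values), stdev(values)
    idx = max(range(len(values)), key=lambda i: abs(values[i] - mu))
    return abs(values[idx] - mu) / sd, idx


vehicle = [180.0, 200.0, 220.0]   # immobility in seconds, final 4 min
print(percent_of_vehicle([150.0], vehicle))  # [75.0]

g, idx = grubbs_statistic([1.0, 2.0, 3.0, 4.0, 100.0])
print(idx)  # 4
```

Dividing by the vehicle mean changes the units but not the ANOVA result within a single cohort; its value is in making cohorts run on different days comparable.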

Protocol 2: Integrating Behavioral Phenotyping with Transcriptomics

Objective: To link a behavioral signature (e.g., social avoidance) to specific brain region gene expression changes.

Materials: Automated social interaction arena, rapid brain dissection tools, RNA stabilization solution, RNA-seq kit.

Procedure:

- Behavioral Phase: Run social interaction test (e.g., resident-intruder) with videotracking. Apply BDQSA to extract a "social interaction ratio" (time near intruder / time near object).

- Temporal Alignment (BDQSA Critical): At precisely 90 min post-test onset, euthanize animal and rapidly dissect target brain region (e.g., nucleus accumbens, prefrontal cortex). Snap-freeze in liquid N2.

- Multimodal Integration: Cluster animals based on behavioral signature (e.g., high vs. low interactors). Perform bulk RNA-seq on tissue from each cluster.

- Data Integration: Use differential expression analysis. Correlate expression of significant genes (e.g., FosB, Bdnf) with continuous social interaction ratio using linear models. This creates a gene expression signature predictive of the behavioral state.

Visualization: Pathways and Workflows

Title: BDQSA Data Preprocessing Pipeline for Behavioral Science

Title: Evolution from Behavior to Drug Development

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Behavioral Pharmacology

| Item Name | Supplier Examples | Function in Research |

|---|---|---|

| Videotracking Software (EthoVision XT) | Noldus Information Technology | Automates behavioral scoring (locomotion, zone occupancy) with high spatial/temporal resolution, replacing manual observation. |

| RFID Animal Tracking System | BioDAQ, TSE Systems | Enables continuous, individual identification and monitoring of animals in social home cages for longitudinal studies. |

| DeepLabCut AI Pose Estimation | Open-Source Toolbox (Mathis Lab) | Uses deep learning to track specific body parts (e.g., ear, tail base) from video, enabling detailed ethogram construction (e.g., grooming bouts). |

| Corticosterone ELISA Kit | Arbor Assays, Enzo Life Sciences | Quantifies plasma corticosterone levels as an objective, correlative measure of stress response in behavioral tests (FST, EPM). |

| c-Fos IHC Antibody Kit | Cell Signaling Technology, Abcam | Labels neurons activated during a behavioral task, allowing mapping of brain circuit engagement to specific behaviors. |

| Quantitative PCR (qPCR) System | Bio-Rad, Thermo Fisher | Quantifies changes in gene expression (e.g., Bdnf, Creb1) in dissected brain regions following behavioral testing or drug administration. |

| LC-MS/MS System for Bioanalysis | Waters, Sciex | Measures ultra-low concentrations of drug compounds and metabolites in plasma or brain homogenate, essential for PK/PD studies. |

| High-Content Screening (HCS) System | PerkinElmer, Thermo Fisher | Automates imaging and analysis of in vitro cell-based assays (e.g., neurite outgrowth, GPCR internalization) for primary drug screening. |

Within the BDQSA (Behavioral Data Quality and Standardization Architecture) model for preprocessing behavioral science data, structured metadata is the foundational layer enabling reproducibility and advanced analysis. This framework addresses the inherent complexity and multidimensionality of behavioral research, particularly in drug development, where precise tracking of experimental conditions, subject states, and data transformations is critical.

Application Notes: Metadata Schema for Behavioral Studies

The following table outlines the core metadata categories mandated by the BDQSA model, their components, and their role in ensuring reproducibility.

Table 1: BDQSA Core Metadata Schema

| Category | Sub-Category | Description & Purpose | Format/Controlled Vocabulary Example |
|---|---|---|---|
| Study Design | Protocol Identifier | Unique ID linking data to the approved study protocol. | Persistent Digital Object Identifier (DOI) |
| | Experimental Design Type | Specifies design (e.g., randomized controlled trial, crossover, open-label). | Controlled vocabulary: parallel_group, crossover, factorial |
| | Arms & Grouping | Defines control and treatment groups, including group size (n). | JSON structure defining group labels, assigned interventions, and subject count. |
| Participant | Demographics | Age, sex, genetic background (strain, if non-human). | Age in days; Sex: M, F, O; Strain: C57BL/6J, Long-Evans |
| | Inclusion/Exclusion Criteria | Machine-readable list of criteria applied. | Boolean logic statements referencing phenotypic measures. |
| | Baseline State | Pre-intervention behavioral or physiological baselines. | Numeric scores (e.g., baseline sucrose preference %, mean locomotor activity). |
| Intervention | Compound/Stimulus | Treatment details (drug, dose, vehicle, route, timing). | ChEBI ID for compounds; Dose: mg/kg; Route: intraperitoneal, oral; Time relative to test. |
| | Device/Apparatus | Description of equipment used for stimulus delivery or behavioral testing. | Manufacturer, model, software version. |
| Data Acquisition | Behavioral Paradigm | Standardized name of the test (e.g., Forced Swim Test, Morris Water Maze). | Ontology term (e.g., NIF Behavior Ontology ID). |
| | Raw Data File | Pointer to immutable raw data (sensor outputs, video files). | File path/URL with hash (SHA-256) for integrity check. |
| | Acquisition Parameters | Settings specific to the apparatus (e.g., maze diameter, trial duration, inter-stimulus interval). | Key-value pairs (e.g., "trial_duration_sec": 300). |
| Preprocessing (BDQSA) | Transformation Steps | Ordered list of data cleaning/processing operations applied. | List of actions: "raw_data_import", "artifact_removal_threshold: >3SD", "normalization_to_baseline" |
| | Quality Metrics | Calculated metrics assessing data quality post-preprocessing. | "missing_data_percentage": 0.5, "signal_to_noise_ratio": 5.2 |
| | Software & Version | Exact computational environment used for preprocessing. | Container image hash (Docker/Singularity) or explicit library versions (e.g., Python 3.10, Pandas 2.1.0). |
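To make the Raw Data File and Transformation Steps rows concrete, the sketch below builds a small metadata record and computes the SHA-256 integrity hash with the Python standard library. The record layout and key names are hypothetical, chosen to mirror the table; they are not taken from a published BDQSA schema.

```python
import hashlib
import json


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large video files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_record(raw_path, acquisition_params, steps):
    """Assemble a metadata record (hypothetical layout) for one raw file."""
    return {
        "data_acquisition": {
            "raw_data_file": {
                "path": raw_path,
                "sha256": sha256_of_file(raw_path),
            },
            "acquisition_parameters": dict(acquisition_params),
        },
        "preprocessing": {"transformation_steps": list(steps)},
    }


def verify_record(record):
    """Return True if the raw file still matches its recorded hash."""
    entry = record["data_acquisition"]["raw_data_file"]
    return sha256_of_file(entry["path"]) == entry["sha256"]


def to_json(record):
    """Serialize with sorted keys so records diff cleanly under version control."""
    return json.dumps(record, indent=2, sort_keys=True)
```

Calling verify_record before each preprocessing run is a cheap way to detect a raw file that was modified or replaced after acquisition.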

Experimental Protocols

Protocol 1: Implementing the BDQSA Metadata Schema in a Preclinical Anxiety Study

Aim: To ensure full reproducibility of data collection and preprocessing for a study investigating a novel anxiolytic compound in the Elevated Plus Maze (EPM).

Materials:

- Subjects: Cohort of 40 male C57BL/6J mice, aged 10 weeks.

- Compound: Novel Agent X (NAX), dissolved in 0.9% saline with 1% DMSO.

- Apparatus: Standard EPM (arms 30 cm L x 5 cm W, closed walls 15 cm H, elevation 50 cm).

- Software: EthoVision XT v16, BDQSA-Preprocess v1.2 Python package.

Procedure:

- Metadata Template Instantiation: Create a new metadata record using the BDQSA JSON schema template. Populate Study Design and Participant categories prior to experimentation.

- Intervention Logging: Record precise details for each subject:

- Treatment: "NAX" or "Vehicle".

- Dose: "10 mg/kg".

- Route: "intraperitoneal".

- Injection-to-Test Interval: "30 min".

- Injection Volume: "5 mL/kg".

- Data Acquisition: Place subject in center of EPM facing an open arm. Record behavior for 5 minutes.

- Raw Data: Save the uncompressed video file (.avi) with a filename following the pattern [SubjectID]_[Treatment]_[Date].avi.

- Acquisition Parameters: Log in metadata: {"arena_dimensions_cm": "standard_EPM", "trial_duration_sec": 300, "light_level_lux": 100}.

- Primary Data Extraction: Use EthoVision XT to generate raw track files (position (x,y) per timepoint).

- Preprocessing (BDQSA Pipeline):

a. Ingest: Run bdqsa ingest --rawfile [trackfile.csv] --metadata [metadata.json].

b. Clean: Apply immobility threshold (speed < 2 cm/s for >1 s is not considered exploration).

c. Calculate: Derive primary measures: % time in open arms, total arm entries.

d. Quality Check: Pipeline outputs quality metrics (e.g., tracking loss %).

- Metadata Finalization: The preprocessing pipeline automatically appends the Transformation Steps, Quality Metrics, and Software Version to the metadata JSON file. This completed record is stored alongside the processed data file.
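The Calculate and Quality Check steps can be illustrated independently of any particular package. The sketch below assumes the track has already been reduced to one zone label per sample ("open", "closed", "center", or None when tracking was lost). These labels, the function name, and the choice of tracked time as the denominator are assumptions made for illustration, and the immobility cleaning step is not covered.

```python
def epm_measures(zones):
    """Compute primary EPM measures from per-sample zone labels."""
    tracked = [z for z in zones if z is not None]
    if not tracked:
        raise ValueError("no tracked samples in this session")
    pct_open = 100.0 * sum(z == "open" for z in tracked) / len(tracked)
    # An entry is a transition into an arm from a different zone.
    entries = 0
    previous = None
    for z in tracked:
        if z in ("open", "closed") and z != previous:
            entries += 1
        previous = z
    loss_pct = 100.0 * (len(zones) - len(tracked)) / len(zones)
    return {
        "pct_time_open_arms": pct_open,
        "total_arm_entries": entries,
        "tracking_loss_pct": loss_pct,
    }


track = ["center", "open", "open", "center", "closed", None, "closed", "center"]
result = epm_measures(track)
print(result["total_arm_entries"], result["tracking_loss_pct"])  # 2 12.5
```

Laboratories differ on whether open-arm time is expressed relative to total session time or to time spent in any arm, so the denominator used should be recorded in the Transformation Steps metadata.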

Visualizations

Diagram 1: BDQSA Metadata-Driven Workflow

Diagram 2: Signaling Pathway Impact Analysis Framework

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions for Reproducible Behavioral Analysis

| Item | Function in Reproducibility & Analysis | Example/Specification |

|---|---|---|

| Persistent Identifiers (PIDs) | Uniquely and permanently identify digital resources like protocols, datasets, and compounds, enabling reliable linking. | Digital Object Identifier (DOI), Chemical Identifier (CHEBI, InChIKey). |

| Controlled Vocabularies & Ontologies | Standardize terminology for experimental variables, behaviors, and measures, enabling cross-study data integration and search. | NIFSTD Behavior Ontology, Cognitive Atlas, Unit Ontology (UO). |

| Data Containerization Software | Encapsulate the complete data analysis environment (OS, libraries, code) to guarantee computational reproducibility. | Docker, Singularity. |

| Structured Metadata Schemas | Provide a machine-actionable template for recording all experimental context, as per the BDQSA model. | JSON-LD schema, ISA-Tab format. |

| Automated Preprocessing Pipelines | Apply consistent, version-controlled data transformation and quality control steps, logging all parameters. | BDQSA-Preprocess, DataJoint, SnakeMake workflow. |

| Electronic Lab Notebook (ELN) with API | Digitally capture experimental procedures and outcomes in a structured way, allowing metadata to be programmatically extracted. | LabArchives, RSpace, openBIS. |

| Reference Compounds & Validation Assays | Provide benchmark pharmacological tools to calibrate behavioral assays and confirm system sensitivity. | Known anxiolytic (e.g., diazepam) for anxiety models; known psychostimulant (e.g., amphetamine) for locomotor assays. |

Key Challenges in Raw Behavioral Data that BDQSA Addresses

Raw behavioral data from modern platforms (e.g., digital phenotyping, video tracking, wearable sensors) presents significant challenges for robust scientific analysis. The Behavioral Data Quality and Sufficiency Assessment (BDQSA) model provides a structured framework to preprocess and validate this data within research pipelines. This document details these challenges and the corresponding BDQSA protocols.

Table 1: Core Data Challenges & BDQSA Mitigation Strategies

| Challenge Category | Specific Manifestation | Impact on Analysis | BDQSA Phase Addresses |

|---|---|---|---|

| Completeness | Missing sensor reads, dropped video frames, participant non-compliance. | Biased statistical power, erroneous trend inference. | Sufficiency Assessment |

| Fidelity | Sensor noise (accelerometer drift), compression artifacts in video, sampling rate inconsistencies. | Reduced sensitivity to true signal, increased Type I/II errors. | Quality Verification |

| Context Integrity | Lack of timestamp synchronization between devices, inaccurate environmental metadata. | Incorrect causal attribution, inability to correlate multimodal streams. | Contextual Alignment |

| Standardization | Proprietary data formats (e.g., from different wearables), non-uniform units of measure. | Barriers to data pooling, replication failures, analytic overhead. | Normalization & Mapping |

| Ethical Provenance | Insufficient or ambiguous informed consent for secondary data use, poor de-identification. | Ethical breaches, data retraction, invalidated findings. | Provenance Verification |

Experimental Protocol 1: Multi-Sensor Temporal Synchronization & Gap Analysis

Aim: To quantify and correct temporal misalignment and data loss across concurrent behavioral data streams.

Materials: See "Research Reagent Solutions" below.

Procedure:

- Data Ingestion: Ingest raw timestamped data streams (e.g., .csv, .json) from all sources (motion capture, physiological wearables, experiment log) into a BDQSA-compliant data lake.

- Reference Clock Alignment: Designate the most reliable source (e.g., motion capture system's internal clock) as the reference. Use the Network Time Protocol (NTP) logs from each device to calculate offset and drift. Apply linear or piecewise linear correction to all secondary streams.

- Gap Detection: For each data stream, calculate the difference between consecutive timestamps. Flag sequences where the difference exceeds the expected sampling interval by >5%. Use a sliding window (e.g., 10 sec) to identify periods where >20% of expected samples are missing.

- Interpolation Decision Matrix: Apply BDQSA rules:

- For gaps ≤3 samples, use linear interpolation.

- For gaps >3 samples but <1 sec, flag for statistical imputation (e.g., expectation-maximization) later.

- For gaps ≥1 sec, mark as NULL and exclude from fine-grained sequence analysis.

- Validation: Output a synchronization report table listing corrected lags (ms) and a completeness heatmap per stream per participant.
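The gap detection and interpolation rules above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Gap` record and the `classify_gaps` function are hypothetical names, timestamps are assumed to be integer milliseconds, and the rules are assumed to apply in the order listed (so a gap of three or fewer samples is always interpolated linearly, even at low sampling rates).

```python
from dataclasses import dataclass


@dataclass
class Gap:
    start_ms: int   # last timestamp before the gap
    end_ms: int     # first timestamp after the gap
    missing: int    # estimated number of missing samples
    action: str     # "linear", "impute", or "null"


def classify_gaps(timestamps_ms, interval_ms, tolerance=0.05):
    """Flag inter-sample gaps and assign a handling rule to each one."""
    gaps = []
    for prev, curr in zip(timestamps_ms, timestamps_ms[1:]):
        delta = curr - prev
        if delta <= interval_ms * (1 + tolerance):
            continue  # within 5% of the expected sampling interval
        missing = round(delta / interval_ms) - 1
        if missing <= 3:
            action = "linear"   # short gap: linear interpolation
        elif delta < 1000:
            action = "impute"   # under 1 sec: defer to statistical imputation
        else:
            action = "null"     # 1 sec or longer: mark NULL and exclude
        gaps.append(Gap(prev, curr, missing, action))
    return gaps


# Illustrative 100 Hz stream (10 ms interval) containing three gaps.
stamps = [0, 10, 20, 50, 60, 560, 570, 2000]
for gap in classify_gaps(stamps, interval_ms=10):
    print(gap)
```

Keeping the classification separate from the actual interpolation means the same gap table can feed both the correction step and the completeness report.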

Visualization 1: BDQSA Preprocessing Workflow

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Example Product/Standard | Function in BDQSA Context |

|---|---|---|

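| Time Synchronization | Network Time Protocol (NTP) server; Adafruit Ultimate GPS HAT. | Provides a shared reference clock for aligning disparate data streams (NTP typically reaches millisecond-level accuracy on a local network; a GPS pulse-per-second signal can reach microsecond-level accuracy). |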

| Open Data Format | NDJSON (Newline-Delimited JSON); HDF5 for large-scale datasets. | Serves as a standardized, efficient container for heterogeneous behavioral data post-normalization. |

| De-Identification Tool | presidio (Microsoft); amnesia anonymization tool. | Automates the removal or pseudonymization of Protected Health Information (PHI) from raw logs and metadata. |

| Data Quality Library | Great Expectations; Pandas-Profiling (now ydata-profiling). | Provides programmable suites for validating data distributions, completeness, and schema upon ingestion. |

| Consent Management | REDCap (Research Electronic Data Capture) with dynamic consent modules. | Tracks participant consent scope and version, linking it cryptographically to derived datasets for provenance. |

Experimental Protocol 2: Fidelity Assessment for Video-Derived Behavioral Features

Aim: To quantify the signal-to-noise ratio in keypoint trajectories extracted from video and establish acceptance criteria.

Materials: OpenPose or DeepLabCut for pose estimation; calibrated reference movement dataset; computed video quality metrics (e.g., BRISQUE).

Procedure:

- Reference Data Collection: Record a short session of a participant performing standardized movements (e.g., finger-to-nose test, gait) in a controlled, high-fidelity environment (high frame rate, optimal lighting).

- Degraded Data Generation: Programmatically apply degradation transforms (e.g., Gaussian blur to simulate motion blur, JPEG compression, lowered frame rate) to copies of the reference video.

- Feature Extraction: Run identical pose estimation pipelines on the reference and all degraded videos. Extract time-series data for key joints (e.g., wrist, ankle).

- Fidelity Metric Calculation:

- Compute the Normalized RMSE (nRMSE) between the reference trajectory and each degraded trajectory.

- Calculate the Tracking Confidence Drop: Mean decrease in model confidence scores per frame.

- Compute the Spectral Entropy of the trajectory's velocity profile; increased noise raises entropy.

- BDQSA Thresholding: Establish failure flags: nRMSE > 0.15, Confidence Drop > 25%, or Spectral Entropy increase > 30% relative to reference. Data streams triggering flags require preprocessing (e.g., smoothing filter) or exclusion.
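The thresholding step can be illustrated with a short Python sketch. Several details here are assumptions rather than part of the protocol: nRMSE is normalized by the range of the reference trajectory (other conventions divide by the mean or standard deviation), the confidence drop is taken as a relative decrease in mean confidence, and spectral entropy is assumed to be computed elsewhere and passed in as a number. The function names `nrmse` and `fidelity_flags` are hypothetical.

```python
import math


def nrmse(reference, degraded):
    """RMSE between two equal-length trajectories, divided by the reference range."""
    if not reference or len(reference) != len(degraded):
        raise ValueError("trajectories must be non-empty and of equal length")
    span = max(reference) - min(reference)
    if span == 0:
        raise ValueError("reference trajectory has zero range")
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    return math.sqrt(mse) / span


def fidelity_flags(reference, degraded, conf_ref, conf_deg, entropy_ref, entropy_deg):
    """Return one boolean failure flag per fidelity criterion."""
    mean_ref = sum(conf_ref) / len(conf_ref)
    mean_deg = sum(conf_deg) / len(conf_deg)
    conf_drop = (mean_ref - mean_deg) / mean_ref          # relative drop (assumed)
    entropy_rise = (entropy_deg - entropy_ref) / entropy_ref
    return {
        "nrmse": nrmse(reference, degraded) > 0.15,
        "confidence_drop": conf_drop > 0.25,
        "spectral_entropy": entropy_rise > 0.30,
    }


# Illustrative values only: a wrist trajectory shifted by one unit.
ref = [0.0, 1.0, 2.0, 3.0, 4.0]
deg = [x + 1.0 for x in ref]
print(fidelity_flags(ref, deg, [0.9, 0.9], [0.6, 0.6], 2.0, 2.2))
```

A stream is routed to smoothing or exclusion if any flag is true, which matches the "or" logic of the thresholds.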

Visualization 2: Behavioral Signal Fidelity Verification Pathway

How to Implement BDQSA: A Step-by-Step Guide for Preprocessing Behavioral Datasets

Application Notes

This document constitutes Phase 1 (Documenting Background) of the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model, a structured framework for preprocessing behavioral science data within translational research and drug development. The primary objective of this phase is to establish a rigorous, transparent foundation for subsequent data collection and analysis by explicitly defining the study context and hypotheses. This ensures that preprocessing decisions are hypothesis-driven and auditable, enhancing reproducibility and scientific validity.

In behavioral science research—particularly in areas like neuropsychiatric drug development, digital biomarkers, and cognitive assessment—raw data is often complex, multi-modal (e.g., ecological momentary assessments, actigraphy, cognitive task performance), and susceptible to noise and artifacts. Without a documented background, preprocessing can become arbitrary, introducing bias and obscuring true signals. This phase mandates the documentation of:

- Theoretical and Empirical Context: The scientific rationale and existing literature gap.

- Precise Research Questions and Hypotheses: Both primary and secondary.

- Operational Definitions: How constructs are translated into measurable variables.

- Anticipated Data Challenges: Expected noise sources, missing data patterns, and sufficiency thresholds relevant to the BDQSA model's later phases.

Key Background Parameters in Behavioral Science Research

Table 1: Common Quantitative Parameters in Behavioral Study Design

| Parameter Category | Typical Measures/Ranges | Relevance to BDQSA Preprocessing |

|---|---|---|

| Sample Size | Pilot: n=20-50; RCT: n=100-300 per arm; Observational: n=500+ | Determines statistical power for outlier detection and missing data imputation strategies. |

| Assessment Frequency | EMA: 5-10 prompts/day; Clinic Visits: Weekly-Biweekly; Actigraphy: 24/7 sampling at 10-100Hz | Informs rules for data density checks, temporal interpolation, and handling of irregular intervals. |

| Task Performance Metrics | Reaction Time (ms): 200-1500ms; Accuracy (%): 60-95%; Variability (CV of RT): 0.2-0.5 | Defines plausible value ranges for validity filtering and identifies performance artifacts. |

| Self-Report Scales | Likert Scales (e.g., 1-7, 0-10); Clinical Scores (e.g., HAM-D: 0-52, PANSS: 30-210) | Establishes bounds for logical value checks and floor/ceiling effect detection. |

| Expected Missing Data | EMA Compliance: 60-80%; Device Wear Time: 10-16 hrs/day; Attrition: 10-30% over 6 months | Sets thresholds for data sufficiency flags and guides imputation method selection. |

Experimental Protocol: Documenting Study Context & Hypotheses

Protocol Title: Systematic Background Documentation for BDQSA Phase 1

Objective: To produce a definitive background document that frames the research problem, states testable hypotheses, and pre-specifies key variables and expected data patterns to guide preprocessing.

Materials:

- Research proposal and protocol.

- Relevant literature (e.g., systematic reviews, methodological papers).

- BDQSA Phase 1 Documentation Template (See Diagram 1).

Procedure:

- Literature Synthesis:

- Conduct a focused review to summarize the current state of knowledge on the behavioral construct of interest (e.g., anhedonia, cognitive control).

- Identify the specific gap or limitation in existing measurement or data handling approaches that the study aims to address.

- Output: A concise narrative (≤500 words) and a table of key supporting references.

- Hypothesis Formalization:

- State the primary research question in PICO/PECO format (Population, Intervention/Exposure, Comparison, Outcome).

- Convert the research question into a primary statistical hypothesis (H1) and its null (H0).

- List all secondary/exploratory hypotheses.

- Output: Explicit, falsifiable statements defining the relationships between independent and dependent variables.

- Operational Mapping:

- For each variable in the hypotheses, specify its operational definition.

- Map each construct to its raw data source(s) (e.g., "Attention" → "Continuous Performance Task A' (sensitivity score) & EEG P300 amplitude").

- Define the unit of measurement and timepoints of collection.

- Output: A variable dictionary table.

- Preprocessing Anticipation:

- For each primary data stream, list known technical and participant-driven sources of noise (e.g., device removal artifacts in actigraphy, random responding in surveys).

- Define initial, theory-driven rules for identifying invalid data (e.g., reaction times <100ms as anticipatory, >3SD from individual mean as outlier).

- Specify the minimum data density required for a participant's data to be considered sufficient for primary analysis (e.g., "≥70% EMA prompts answered, ≥5 valid days of actigraphy").

- Output: A preliminary BDQSA rule set for Phases 2-4 (Quality Checks, Cleansing, Sufficiency Assessment).

- Integration & Sign-off:

- Compile all outputs into the single Background Document.

- The document must be version-controlled and signed by the Principal Investigator before data collection or unblinding commences.
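The example rules from the Preprocessing Anticipation step (reaction times under 100 ms, values more than 3 SD from the individual mean, and the 70% / 5-day sufficiency thresholds) could be encoded as follows. This is a sketch under stated assumptions: the function names are hypothetical, and the individual mean and SD are computed after removing anticipatory responses, a choice the protocol does not specify.

```python
from statistics import mean, stdev


def label_reaction_times(rts_ms, min_rt_ms=100.0, sd_limit=3.0):
    """Label each trial as 'anticipatory', 'outlier', or 'valid'."""
    plausible = [rt for rt in rts_ms if rt >= min_rt_ms]
    if len(plausible) < 2:
        raise ValueError("need at least two non-anticipatory trials")
    mu, sd = mean(plausible), stdev(plausible)
    labels = []
    for rt in rts_ms:
        if rt < min_rt_ms:
            labels.append("anticipatory")
        elif sd > 0 and abs(rt - mu) > sd_limit * sd:
            labels.append("outlier")
        else:
            labels.append("valid")
    return labels


def is_sufficient(prompts_answered, prompts_sent, valid_actigraphy_days,
                  min_compliance=0.70, min_days=5):
    """Apply the example sufficiency rule for one participant."""
    if prompts_sent <= 0:
        return False
    return (prompts_answered / prompts_sent >= min_compliance
            and valid_actigraphy_days >= min_days)


# Illustrative participant: 20 typical trials, one very slow, one anticipatory.
rts = [500, 510, 490, 505, 495] * 4 + [5000, 50]
print(label_reaction_times(rts)[-2:])
print(is_sufficient(36, 50, 5))
```

Note that a 3 SD rule cannot flag anything in very small samples, because a single extreme value inflates the SD; this is one reason to fix the rule and its minimum trial count before data collection.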

Visualizations

Diagram 1: BDQSA Phase 1 Workflow

Diagram 2: From Construct to Variable Mapping

Research Reagent Solutions

Table 2: Essential Materials for Behavioral Data Background Definition

| Item | Function in Phase 1 | Example/Provider |

|---|---|---|

| Protocol & Statistical Analysis Plan (SAP) | Primary source document detailing study design, endpoints, and planned analyses. Guides operational mapping. | Internal study document; ClinicalTrials.gov entry. |

| Systematic Review Literature | Provides empirical context, effect sizes for power calculations, and identifies standard measurement tools. | PubMed, PsycINFO, Cochrane Library databases. |

| Measurement Tool Manuals | Provide authoritative operational definitions, validity/reliability metrics, and known administration artifacts. | APA PsycTests, commercial test publisher websites (e.g., Pearson). |

| Data Standard Vocabularies | Ontologies for standardizing variable names and attributes, enhancing reproducibility. | CDISC (Clinical Data Interchange Standards Consortium) terminology. |

| Electronic Data Capture (EDC) System Specs | Defines the raw data structure, format, and potential export quirks that preprocessing must handle. | REDCap, Medrio, Oracle Clinical specifications. |

| BDQSA Phase 1 Template | Structured form to ensure consistent and complete documentation across studies. | Internal framework document. |

| Version Control System | Tracks changes to the Background Document, maintaining an audit trail. | Git, SharePoint with versioning. |

1. Introduction

Within the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model, Phase 2, Cataloging Design, is pivotal for structuring raw observations into analyzable constructs. This document details the experimental paradigms and trial structures critical for preprocessing data in behavioral neuroscience and psychopharmacology. Standardizing this catalog ensures interoperability, reproducibility, and validity across studies, directly supporting translational drug development.

2. Key Experimental Paradigms: Classification & Metrics

Behavioral paradigms are cataloged by primary domain, neural circuit, and output measures. The following table summarizes core paradigms.

Table 1: Core Behavioral Experimental Paradigms and Quantitative Outputs

| Domain | Paradigm Name | Primary Outcome Measures | Typical Duration | Common Species | BDQSA Data Class |

|---|---|---|---|---|---|

| Anxiety & Fear | Elevated Plus Maze (EPM) | % Time Open Arms, Open Arm Entries | 5 min | Mouse, Rat | Time-Series, Event |

| Anxiety & Fear | Fear Conditioning (Cued) | % Freezing (Context, Cue) | Training: 10-30 min; Recall: 5-10 min | Mouse, Rat | Time-Series, Scalar |

| Depression & Anhedonia | Sucrose Preference Test (SPT) | Sucrose Preference % = (Sucrose Intake/Total Fluid)*100 | 24-72 hr | Mouse, Rat | Scalar |

| Depression & Effort | Forced Swim Test (FST) | Immobility Time (sec), Latency to Immobility | 6 min | Mouse, Rat | Time-Series, Scalar |

| Learning & Memory | Morris Water Maze (MWM) | Escape Latency (sec), Time in Target Quadrant | 5-10 days | Mouse, Rat | Trajectory, Latency |

| Social Behavior | Three-Chamber Sociability Test | Interaction Time (Stranger vs. Object), Sociability Index | 10 min | Mouse | Time-Series, Event |

| Motor Function | Rotarod | Latency to Fall (sec) | Trial: 1-5 min | Mouse, Rat | Latency |

3. Detailed Protocol: Standardized Fear Conditioning for BDQSA Cataloging

Objective: To generate high-quality, pre-processed fear memory data (freezing behavior) compatible with BDQSA data lakes.

Materials:

- Fear conditioning chamber with grid floor, speaker, and LED cue light.

- Video tracking/Freezing analysis software (e.g., EthoVision, ANY-maze).

- Soundproof enclosure with consistent lighting.

- 70% ethanol and 1% acetic acid for context alteration.

Procedure:

- Habituation (Day 0): Place subject in training context for 10 min. No stimuli presented.

- Training (Day 1):

  a. Context A (Training): Clean with 70% ethanol. Subject placed in chamber.

  b. At 180 sec, present Conditional Stimulus (CS: 30 sec, 80 dB tone, 2.9 kHz).

  c. Terminate CS with Unconditional Stimulus (US: 2 sec, 0.7 mA footshock).

  d. Repeat CS-US pairing after 60 sec (variable interval).

  e. Remove subject 30 sec after final shock. Return to home cage.

- Contextual Recall Test (Day 2):

  a. Place subject in original Context A (70% ethanol) for 5 min. No CS or US.

  b. Record continuous video. Software quantifies freezing (% time immobile per min bin).

- Cued Recall Test (Day 3):

  a. Novel Context B: Alter visual cues, scent (1% acetic acid), floor texture.

  b. After 180 sec baseline, present CS (identical tone) for 180 sec.

  c. Record freezing during baseline and CS periods.

BDQSA Preprocessing: Raw video is processed to generate time-stamped freezing bouts. Data is cataloged with metadata tags: [Paradigm:FearConditioning], [Phase:Training/Recall], [Stimulus_CS:tone], [Stimulus_US:footshock].
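As an illustration of this preprocessing step, the sketch below converts time-stamped freezing bouts into percent time immobile per 1-minute bin and attaches the metadata tags named above. The function names, the `(start, end)` bout format in seconds, and the record layout are assumptions made for the example, not the output format of any particular tracking package.

```python
import math


def freezing_percent_per_bin(bouts, session_s, bin_s=60.0):
    """Percent of each time bin spent freezing, given (start, end) bouts in seconds."""
    n_bins = math.ceil(session_s / bin_s)
    frozen = [0.0] * n_bins
    for start, end in bouts:
        start, end = max(0, start), min(end, session_s)
        b = int(start // bin_s)
        while start < end:
            # Split bouts that cross a bin boundary.
            bin_end = min((b + 1) * bin_s, end)
            frozen[b] += bin_end - start
            start = bin_end
            b += 1
    # The last bin may be shorter than bin_s.
    return [100 * f / min(bin_s, session_s - i * bin_s) for i, f in enumerate(frozen)]


def catalog_record(subject_id, phase, bouts, session_s):
    """Bundle the binned freezing scores with the catalog metadata tags."""
    return {
        "subject_id": subject_id,
        "tags": {
            "Paradigm": "FearConditioning",
            "Phase": phase,
            "Stimulus_CS": "tone",
            "Stimulus_US": "footshock",
        },
        "freezing_pct_per_min": freezing_percent_per_bin(bouts, session_s),
    }


# Illustrative 2-minute recall excerpt with two bouts, one crossing the bin edge.
print(catalog_record("M01", "Recall", [(0, 30), (45, 75)], session_s=120))
```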

4. Diagram: BDQSA Phase 2 - Experimental Paradigm Logic Flow

Title: BDQSA Phase 2: From Question to Trial Structure

5. Diagram: Fear Conditioning Trial Structure & Data Flow

Title: Fear Conditioning Protocol and Data Cataloging Pipeline

6. The Scientist's Toolkit: Key Reagents & Solutions for Behavioral Phenotyping

Table 2: Essential Research Reagents for Behavioral Assays

| Reagent / Material | Function / Role | Example Use Case | Considerations for BDQSA Cataloging |

|---|---|---|---|

| Sucrose Solution (1-4%) | Rewarding stimulus to measure anhedonia (loss of pleasure). | Sucrose Preference Test (SPT). | Concentration and preparation method must be documented as metadata. |

| Ethanol (70%) & Acetic Acid (1%) | Contextual cues for olfactory discrimination between different testing environments. | Fear Conditioning (distinguishing training vs. cued test context). | Critical for standardizing contextual variables; scent must be cataloged. |

| Automated Tracking Software (e.g., EthoVision XT) | Converts raw video into quantitative (x,y) coordinates and derived measures (velocity, immobility). | Any locomotor or ethological analysis (Open Field, EPM, MWM). | Software version and analysis settings (e.g., freezing threshold) are vital metadata. |

| Footshock Generator & Grid Floor | Delivers precise, calibrated unconditional stimulus (US) for aversive learning. | Fear Conditioning, Passive Avoidance. | Shock intensity (mA), duration, and number of pairings are core experimental parameters. |

| Auditory Tone Generator | Produces controlled conditional stimulus (CS). | Cued Fear Conditioning, Pre-Pulse Inhibition. | Frequency (Hz), intensity (dB), duration must be standardized and recorded. |

| Cleaning & Bedding Substrates | Controls olfactory environment, reduces inter-subject stress odors. | All in-vivo behavioral tests. | Type of bedding and cleaning regimen between subjects is a key environmental variable. |

Within the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model, Phase 3 focuses on the standardization of measurement instruments, specifically questionnaires (Q). This phase ensures that data collected on latent constructs (e.g., depression, anxiety, quality of life) are reliable, valid, and comparable across studies, a critical prerequisite for robust meta-analyses and regulatory submissions in drug development.

Current Standards and Quantitative Comparison of Common Scales

The selection of a questionnaire depends on the construct, population, and required psychometric properties. The table below summarizes key standardized instruments relevant to clinical trials and behavioral research.

Table 1: Comparison of Standardized Questionnaires in Clinical Research

| Questionnaire Name | Primary Construct(s) | Number of Items | Scale Range | Cronbach's Alpha (Typical) | Average Completion Time (mins) | Key Applicability |

|---|---|---|---|---|---|---|

| Patient Health Questionnaire-9 (PHQ-9) | Depression Severity | 9 | 0-27 | 0.86 – 0.89 | 3-5 | Depression screening & severity monitoring |

| Generalized Anxiety Disorder-7 (GAD-7) | Anxiety Severity | 7 | 0-21 | 0.89 – 0.92 | 2-3 | Anxiety screening & severity monitoring |

| Insomnia Severity Index (ISI) | Insomnia Severity | 7 | 0-28 | 0.74 – 0.91 | 3-5 | Assessment of insomnia symptoms & treatment response |

| EQ-5D-5L | Health-Related Quality of Life | 5 + VAS | 5-digit profile / 0-100 VAS | 0.67 – 0.84 (index) | 2-4 | Health utility for economic evaluation |

| PANSS (Positive and Negative Syndrome Scale) | Schizophrenia Symptomatology | 30 | 30-210 | 0.73 – 0.83 (subscales) | 30-40 | Rated by clinician; gold standard for schizophrenia trials |

| SF-36 (Short Form Health Survey) | Health Status | 36 | 0-100 (per scale) | 0.78 – 0.93 (scales) | 10-15 | Broad health status profile |
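The Cronbach's alpha column reports internal consistency. For readers who want to check the value on their own item-level data, a minimal pure-Python sketch follows. It uses the standard formula, alpha = k/(k-1) × (1 − Σ item variances / variance of total scores), with sample variances; the function name and the toy responses are illustrative only.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one list of item scores per respondent, all of equal length."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(r) != k for r in responses):
        raise ValueError("every respondent must answer the same number of items")
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    total_var = variance([sum(r) for r in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy data: four respondents, two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3], [4, 4]]), 3))
```

Dedicated packages (such as the R psych package listed in the toolkit below) also report confidence intervals and item-dropped statistics, which this sketch omits.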

Experimental Protocol: Standardized Administration and Scoring for a Clinical Trial

Protocol: Implementation and Scoring of the PHQ-9 in a Phase III Depression Trial

Objective: To reliably administer, score, and interpret the PHQ-9 questionnaire for assessing depression severity as a secondary endpoint.

Materials:

- Approved trial protocol and Statistical Analysis Plan (SAP).

- Patient Health Questionnaire-9 (PHQ-9) instrument (validated translation for site locale).

- Electronic Data Capture (EDC) system configured with forced entry ranges and logic checks.

- Training manual and certification for site raters/interviewers.

Procedure:

Step 1: Pre-Study Training & Qualification

- All clinical site personnel involved in patient assessment must complete a standardized training module.

- Training covers: instrument background, exact wording of questions and instructions, neutral probing techniques, and scoring rules.

- Personnel must pass a qualification test (e.g., score ≥90% on a test scoring vignettes) before interacting with trial participants.

Step 2: Administration at Study Visit

- The questionnaire is administered at baseline (screening/Visit 1) and at each subsequent scheduled efficacy assessment visit (e.g., Weeks 4, 8, 12).

- Provide the participant with the self-rated version in a quiet, private setting. If required per protocol, a trained interviewer may administer it.

- Instruct the participant: "Over the last 2 weeks, how often have you been bothered by any of the following problems?" Do not guide or interpret items for the participant.

- Ensure all 9 items are completed before the participant leaves. In EDC, items must be answered for form completion.

Step 3: Scoring & Data Entry

- Item Scoring: Each item (e.g., "Little interest or pleasure in doing things") is scored from 0 ("Not at all") to 3 ("Nearly every day").

- Total Score Calculation: Sum all 9 item scores. Total Score Range = 0-27.

- Algorithm: PHQ9_Total = Item1 + Item2 + ... + Item9

- Severity Categorization (Per SAP):

- 0-4: Minimal depression

- 5-9: Mild depression

- 10-14: Moderate depression

- 15-19: Moderately severe depression

- 20-27: Severe depression

- Response Definition (Primary Analysis): A "treatment responder" is defined a priori in the SAP as a ≥50% reduction in PHQ-9 total score from baseline at Week 8.

- Enter scores directly into the EDC system. The system should automatically calculate the total score and flag out-of-range values or inconsistent responses (e.g., total score not matching item sum).

Step 4: Quality Control

- The clinical data management team runs periodic checks for missing data, visit window deviations, and scoring errors.

- Source Data Verification (SDV) is performed on 100% of primary endpoint assessments and a sample of others.

- Any deviations from the protocol are recorded as protocol deviations.
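The scoring rules in Step 3 are simple enough to express directly in code, which is roughly what an EDC edit check would do. The sketch below is illustrative: the function names are hypothetical, and treating a baseline score of 0 as a non-responder is an assumption, since a percentage reduction is undefined in that case.

```python
SEVERITY_BANDS = [
    (4, "Minimal depression"),
    (9, "Mild depression"),
    (14, "Moderate depression"),
    (19, "Moderately severe depression"),
    (27, "Severe depression"),
]


def phq9_total(items):
    """Sum the nine item scores after range checks (each item must be 0-3)."""
    if len(items) != 9:
        raise ValueError("PHQ-9 requires exactly 9 item scores")
    if any(not isinstance(i, int) or not 0 <= i <= 3 for i in items):
        raise ValueError("each item must be an integer from 0 to 3")
    return sum(items)


def phq9_severity(total):
    """Map a total score (0-27) to its severity category."""
    if not 0 <= total <= 27:
        raise ValueError("total score must be between 0 and 27")
    for upper, label in SEVERITY_BANDS:
        if total <= upper:
            return label


def is_responder(baseline_total, week8_total):
    """Responder: at least a 50% reduction from baseline at Week 8."""
    if baseline_total <= 0:
        return False  # assumption: reduction is undefined at a zero baseline
    return (baseline_total - week8_total) / baseline_total >= 0.5


total = phq9_total([2, 2, 1, 3, 1, 2, 1, 2, 0])
print(total, phq9_severity(total), is_responder(18, 9))
```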

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Questionnaire Standardization & Implementation

| Item | Function in Standardization Process |

|---|---|

| Validated Instrument Libraries (e.g., PROMIS, ePROVIDE) | Repositories of licensed, linguistically validated questionnaires with documented psychometric properties for use in clinical research. |

| Electronic Data Capture (EDC) Systems | Platforms for electronic administration (ePRO) and data capture, ensuring standardized presentation, real-time scoring, and reduced transcription error. |

| Statistical Software (e.g., R psych package, SPSS, Mplus) | Used for calculating scale reliability (Cronbach's alpha), conducting confirmatory factor analysis (CFA), and establishing measurement invariance across study sites or subgroups. |

| Linguistic Validation Kit | A protocol for translation and cultural adaptation of instruments, including forward/backward translation, cognitive debriefing, and harmonization. |

| Rater Training & Certification Portal | Online platforms to ensure consistent administration and scoring across multicenter trials through standardized training modules and certification exams. |

| Standard Operating Procedure (SOP) Document | Defines the process for selection, administration, scoring, handling, and quality control of questionnaire data within the research organization. |

Visualization: Questionnaire Standardization Workflow in BDQSA

Standardization Workflow in BDQSA Phase 3

Within the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model framework, Phase 4, Subject Profiling, is the critical juncture where raw participant data is transformed into a structured, analyzable cohort. This phase ensures the foundational validity of subsequent behavioral and quantitative analyses by rigorously defining who is studied, how they are grouped, and who is excluded.

Application Notes

Subject profiling serves as the operationalization of a study's target population. In behavioral science within drug development, this phase directly impacts the generalizability of findings, the detection of treatment signals, and regulatory acceptability. Key considerations include:

- Demographic Stratification: Variables like age, sex, gender identity, ethnicity, education, and socioeconomic status are not merely descriptive. They are potential effect modifiers or confounders for behavioral endpoints and pharmacokinetic/pharmacodynamic responses.

- Randomization & Allocation Concealment: Proper group allocation (e.g., treatment vs. placebo) is paramount for causal inference. The protocol must detail the method (e.g., computer-generated, block randomization) and steps to conceal allocation from participants and investigators until assignment.

- Exclusion as a Quality Control: Explicit exclusion criteria protect participant safety (e.g., excluding those with contraindications) and enhance internal validity by reducing noise from comorbid conditions or concomitant medications that could obscure the behavioral signal of interest.

Experimental Protocols

Protocol 1: Demographic & Baseline Characterization

Objective: To systematically collect, verify, and document baseline demographic and clinical characteristics of all enrolled subjects.

Methodology:

- Data Collection Point: Screening visit (Visit 1).

- Tools: Structured Case Report Forms (CRFs), validated electronic data capture (EDC) systems, and standardized interviews.

- Procedure:

- Informed Consent: Obtain prior to any data collection.

- Core Demographics: Record age (date of birth), sex assigned at birth, self-identified gender, race/ethnicity (per FDA/EMA guidelines), years of education, and primary language.

- Clinical Baseline: Administer standardized assessments for disease severity (e.g., Hamilton Rating Scale for Depression [HAM-D] for MDD trials), cognitive screening (e.g., MoCA), and medical history review.

- Data Verification: Cross-check subject-reported data with available medical records or ID documents where applicable and necessary.

- Database Entry: Double-data entry or automated EDC validation rules to ensure accuracy.

Protocol 2: Randomized Group Allocation

Objective: To assign eligible subjects to study arms in an unbiased manner to ensure group comparability at baseline.

Methodology:

- Allocation Framework: Utilize a pre-defined, computer-generated randomization schedule prepared by a biostatistician not involved in recruitment.

- Stratification: If required, stratify by key prognostic factors identified in the BDQSA model Phase 3 (e.g., severity score, age group, study site).

- Implementation (Centralized):

- Upon confirming a subject's eligibility, the site investigator accesses a secure, web-based randomization system (Interactive Web Response System - IWRS).

- The system inputs subject ID and stratification factors, and returns a unique allocation (e.g., "Subject-101 → Arm B, Kit #0457").

- The allocation is automatically logged and concealed from the investigator until the moment of assignment.
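To make the idea of a stratified, computer-generated schedule concrete, here is a minimal sketch of permuted-block randomization with one schedule per stratum. It is an illustration only, not how any IWRS product works internally: the function names, arm labels, block size, and seeding scheme are all assumptions, and a production schedule would be generated and held by the unblinded biostatistician in a validated system.

```python
import random


def permuted_block_schedule(n_blocks, arms=("A", "B"), block_size=4, seed=None):
    """Build an allocation list in which every block contains each arm equally often."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)
        schedule.extend(block)
    return schedule


def stratified_schedules(strata, n_blocks, base_seed=2024):
    """One independent schedule per stratum (e.g., severity group or site)."""
    return {
        stratum: permuted_block_schedule(n_blocks, seed=base_seed + i)
        for i, stratum in enumerate(strata)
    }


schedules = stratified_schedules(["mild", "severe"], n_blocks=2)
for stratum, allocation in schedules.items():
    print(stratum, allocation)
```

Blocking keeps arm sizes balanced within each stratum throughout enrollment. Small fixed blocks can make the next assignment guessable, which is why trials often vary the block size.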

Protocol 3: Application of Exclusion Criteria

Objective: To consistently apply pre-defined scientific and safety criteria to screen out ineligible individuals.

Methodology:

- Multi-Stage Screening:

- Pre-Screen: Initial phone/web screen based on key exclusion criteria (e.g., age range, major comorbid diagnosis).

- In-Person Screening (Visit 1): Comprehensive assessment including:

- Medical/Psychiatric History: Structured Clinical Interview (SCID) to rule out excluded conditions.

- Laboratory Tests: Urine drug screen, hematology, serum chemistry, pregnancy test.

- Concomitant Medication Review: Cross-reference with protocol's prohibited medication list.

- Adjudication Committee: For borderline cases, a centralized eligibility adjudication committee (blinded to eventual allocation) reviews all data to make a final, consistent determination.

Data Presentation

Table 1: Standard Demographic & Baseline Data Collection Schema

| Variable | Measurement Method | Level of Measurement | BDQSA Phase Link |

|---|---|---|---|

| Age | Date of Birth | Continuous (years) | Phase 3 (Data Audit) |

| Sex Assigned at Birth | Medical Record/Self-report | Categorical (Male/Female) | - |

| Gender Identity | Self-report (e.g., two-step method) | Categorical | Phase 1 (Define) |

| Race/Ethnicity | Self-report per NIH/EMA categories | Categorical | Phase 1 (Define) |

| Education | Highest degree completed | Ordinal | - |

| Disease Severity | Validated scale (e.g., HAM-D, PANSS) | Continuous/Ordinal | Phase 2 (Quantify) |

| Cognitive Status | Screening tool (e.g., MoCA, MMSE) | Continuous | Phase 2 (Quantify) |

Table 2: Exemplary Exclusion Criteria for a Behavioral Trial in Major Depressive Disorder

| Criterion Category | Specific Example | Rationale |

|---|---|---|

| Clinical History | History of bipolar disorder, psychosis, or DSM-5 substance use disorder (moderate-severe) in past 6 months | To ensure a homogeneous sample and reduce confounding behavioral phenotypes. |

| Concomitant Meds | Use of CYP3A4 strong inducers (e.g., carbamazepine) within 28 days | Pharmacokinetic interaction with investigational drug. |

| Safety & Ethics | Active suicidal ideation with intent | Patient safety; requires immediate clinical intervention outside trial protocol. |

| Protocol Compliance | Inability to complete digital cognitive tasks per protocol | Would lead to missing data in core behavioral outcomes (Phase 2 of BDQSA). |

Diagrams

Subject Profiling Workflow in BDQSA Model

Randomization and Allocation Concealment

The Scientist's Toolkit

Table 3: Key Research Reagent Solutions for Subject Profiling

| Item | Function in Profiling | Example/Notes |

|---|---|---|

| Interactive Web Response System (IWRS) | Manages random allocation, maintains concealment, and often integrates drug inventory management. | Vendors: Medidata RAVE, Oracle Clinical. |

| Electronic Data Capture (EDC) System | Centralized platform for entering, storing, and validating demographic and baseline data with audit trails. | Vendors: Veeva Vault EDC, Medidata RAVE. |

| Structured Clinical Interviews (SCID) | Validated diagnostic tool to consistently apply psychiatric inclusion/exclusion criteria. | SCID-5 for DSM-5 disorders. |

| Laboratory Test Kits | Standardized panels for safety screening (hematology, chemistry) and eligibility (drug screen). | FDA-approved kits for consistent results across sites. |

| Cognitive Screening Tools | Brief, validated assessments to establish baseline cognitive function, a key behavioral variable. | Montreal Cognitive Assessment (MoCA), MMSE. |

| Centralized Adjudication Portal | Secure platform for eligibility committees to review de-identified subject data and make consensus decisions. | Often a customized module within the EDC. |

Within the BDQSA (Background, Design, Questionnaires, Subjects, Apparatus) model for preprocessing behavioral science data, Phase 5 is critical for establishing methodological reproducibility. This phase explicitly defines the apparatus, including hardware, software, and precise data collection parameters, to mitigate batch effects and ensure cross-study compatibility essential for drug development research.

Core Apparatus Specifications for Behavioral Phenotyping

Primary Data Acquisition Equipment

The following equipment is standard for high-throughput behavioral screening in preclinical models.

Table 1: Core Behavioral Apparatus Specifications

| Apparatus Category | Example Device/Model | Key Technical Parameter | Role in BDQSA Preprocessing |

|---|---|---|---|

| Video Tracking System | Noldus EthoVision XT, ANY-maze | Spatial Resolution: ≥ 720p @ 30 fps; Tracking Algorithm: DeepLabCut or proprietary | Generates raw locomotor (x,y,t) coordinates; Quality metric: % of frames tracked. |

| Operant Conditioning Chamber | Med Associates, Lafayette Instruments | Actuator Precision: ±1 ms; Photobeam Spacing: Standard 2.5 cm | Produces discrete event data (lever presses, nose pokes). Requires timestamp synchronization. |

| Acoustic Startle & Prepulse Inhibition System | San Diego Instruments SR-Lab | Sound Calibration: ±1 dB (SPL); Load Cell Sensitivity: 0.1g | Outputs waveform amplitude (V); Parameter: pre-pulse interval (ms). |

| In Vivo Electrophysiology | NeuroLux, SpikeGadgets | Sampling Rate: 30 kHz; Bit Depth: 16-bit | Raw neural spike data; Must be synchronized with behavioral timestamps. |

| Wearable Biotelemetry | DSI, Starr Life Sciences | ECG/EMG Sampling: 500 Hz; Data Logger Capacity: 4 GB | Continuous physiological data; Parameter: recording epoch length (s). |

Essential Software Stack

Software selection ensures data integrity from collection through initial preprocessing.

Table 2: Software Stack for Data Collection & Initial Processing

| Software Layer | Recommended Tools | Function in BDQSA Workflow | Key Configuration Parameter |

|---|---|---|---|

| Acquisition & Control | Bpod (r0.5+), PyBehavior, MED-PC | Presents stimuli, schedules contingencies, logs events. | State machine timing resolution (typically 1 ms). |

| Synchronization | LabStreamingLayer (LSL), Pulse Pal | Aligns timestamps across multiple devices (video, neural, physiology). | Network synchronization precision (target: <10 ms skew). |

| Initial Processing & QC | DeepLabCut, BORIS, custom Python scripts | Converts raw video to pose estimates; performs first-pass quality checks. | Confidence threshold for pose estimation (e.g., 0.9). |

| Data Orchestration | DataJoint, NWB (Neurodata Without Borders) | Structures raw and meta-data into a standardized, queryable format. | Schema version (e.g., NWB 2.5.0). |
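As a rough illustration of the first-pass quality check listed in the table, the sketch below computes the percentage of frames tracked from per-frame pose likelihoods, using the 0.9 confidence threshold given as an example. The function name, the input layout, and the sample values are assumptions made for illustration; the sketch does not parse DeepLabCut output files.

```python
from typing import Sequence


def percent_frames_tracked(likelihoods: Sequence[float], threshold: float = 0.9) -> float:
    """Return the percentage of frames whose pose likelihood meets the threshold."""
    if not likelihoods:
        raise ValueError("no frames supplied")
    tracked = sum(1 for p in likelihoods if p >= threshold)
    return 100.0 * tracked / len(likelihoods)


# Hypothetical per-frame likelihoods for a single tracked body part
example = [0.99, 0.95, 0.42, 0.91, 0.88, 0.97, 0.93, 0.10, 0.96, 0.99]
pct = percent_frames_tracked(example)
print(f"{pct:.1f}% of frames tracked")
```

A session whose tracked percentage falls below a lab-defined cutoff could then be flagged for re-tracking or manual review before it enters the pipeline.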

Detailed Experimental Protocols

Protocol: Synchronized Multimodal Data Acquisition in a Fear Conditioning Paradigm

Objective: To collect temporally aligned video, freezing behavior, and amygdala neural activity during a cued fear conditioning task.

Apparatus Setup:

- Operant Chamber: Place subject in a standard fear conditioning chamber with a metal grid floor connected to a scrambled shock generator.

- Video Acquisition: Position a high-definition camera (e.g., Basler acA1920-155um) orthogonally to the chamber. Set resolution to 1280x720 pixels at 30 fps. Use IR pass filter and IR illumination for dark phase.

- Audio System: Calibrate the tone generator (e.g., 85 dB, 2 kHz) using a sound pressure level meter (Extech 407736) placed at the chamber center.

- Electrophysiology: Connect a head-mounted preamplifier (Intan Technologies RHD2132) to a 32-channel drive. Set the Intan RHD2000 evaluation board to sample at 30 kHz.

- Synchronization: Use a LabStreamingLayer (LSL) setup. Configure a common clock server. Send unique digital TTL pulses from the behavioral control computer (Bpod) to the Intan system and the video acquisition computer via a Pulse Pal device at the onset of every trial event (tone, shock).

Data Collection Parameters:

- Session Structure: 5 min habituation, 10 tone-shock pairings (tone: 30 s, shock: 1 s, 0.7 mA, co-terminating), 5 min post-conditioning.

- Preprocessing Alignment Flag: Record all timestamps relative to session start, and log the session start itself as Unix time. The LSL outlet for the Bpod must stream a unique session UUID.
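One way to verify the alignment after a session is to compare the shared TTL pulse times as logged by two devices. The sketch below fits a least-squares line (clock offset plus drift) that maps one device's clock onto the reference clock, then checks the worst residual against the <10 ms skew target from the software table. It is a minimal sketch under stated assumptions: the function names, the pulse times, and the 90 s trial spacing are hypothetical, and it does not use the LSL API.

```python
from statistics import mean


def fit_clock_map(src_times, ref_times):
    """Fit ref = slope * src + offset from paired TTL pulse times (seconds)."""
    if len(src_times) != len(ref_times) or len(src_times) < 2:
        raise ValueError("need at least two paired pulses")
    mx, my = mean(src_times), mean(ref_times)
    sxx = sum((x - mx) ** 2 for x in src_times)
    sxy = sum((x - mx) * (y - my) for x, y in zip(src_times, ref_times))
    slope = sxy / sxx
    return slope, my - slope * mx


def max_residual_ms(src_times, ref_times, slope, offset):
    """Largest absolute disagreement after mapping, in milliseconds."""
    return max(abs(slope * x + offset - y) for x, y in zip(src_times, ref_times)) * 1000.0


# Hypothetical tone-onset pulses: Bpod clock (reference) vs. Intan clock
bpod = [300.0, 390.0, 480.0, 570.0, 660.0]
intan = [12.500, 102.501, 192.499, 282.502, 372.500]

slope, offset = fit_clock_map(intan, bpod)
skew = max_residual_ms(intan, bpod, slope, offset)
aligned = [slope * t + offset for t in intan]  # Intan pulse times on the Bpod clock
print(f"max residual skew: {skew:.2f} ms; within target: {skew < 10.0}")
```

The same fitted mapping can be applied to every neural or video timestamp from that device, so all streams share the behavioral clock before analysis.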

Protocol: High-Throughput Open Field Test for Locomotor Phenotyping

Objective: To reliably quantify locomotor activity and center zone exploration in a cohort of 96 mice over 48 hours.

Apparatus Setup:

- Infrared Photobeam Arrays: Utilize a Comprehensive Lab Animal Monitoring System (CLAMS). Calibrate each chamber's X-Y beam breaks (spaced 2.5 cm apart) using a standardized motorized calibration rod.

- Environmental Control: Program the chamber enclosure (Tecniplast) to maintain a 12:12 light-dark cycle, 22 ± 1°C, 45-55% humidity. Log environmental parameters every minute.

- Data Logging: Configure the manufacturer's software (e.g., Oxymax) to record beam break counts in 5-minute bins. Enable raw (x,y) coordinate export at 10 Hz.

Data Collection Parameters:

- Acclimation Period: 24 hours prior to experimental recording.

- Recording Duration: 48 hours continuous.

- Quality Control Check: Perform a 10-minute validation recording with an empty chamber to establish baseline noise (<2 beam breaks/min). Chambers exceeding this require recalibration.
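The empty-chamber check above can be automated along the following lines. This is a sketch only: the data layout (a mapping from chamber ID to beam-break timestamps) and the function names are assumptions, and a rate of exactly 2 breaks/min is treated as a failure because the stated criterion is strictly below 2.

```python
def baseline_noise_per_min(beam_break_times_s, duration_min=10.0):
    """Mean beam breaks per minute over an empty-chamber validation recording."""
    return len(beam_break_times_s) / duration_min


def chambers_needing_recalibration(recordings, limit_per_min=2.0, duration_min=10.0):
    """Return sorted chamber IDs whose noise rate is not below the limit."""
    return sorted(
        chamber
        for chamber, times in recordings.items()
        if baseline_noise_per_min(times, duration_min) >= limit_per_min
    )


# Hypothetical validation recordings: timestamps (s) of spurious beam breaks
recordings = {
    "ch01": [12.0, 305.5, 540.1],              # 0.3 breaks/min
    "ch02": [i * 24.0 for i in range(25)],     # 2.5 breaks/min
    "ch03": [],                                # silent chamber
}
flagged = chambers_needing_recalibration(recordings)
print(flagged)
```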

Visualization of the BDQSA Phase 5 Workflow

[Figure: BDQSA Phase 5 Apparatus & Data Collection Workflow]

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Behavioral Data Collection

| Item | Function | Specification for BDQSA Compliance |

|---|---|---|

| Acoustic Calibrator | Calibrates speakers for auditory stimuli (PPI, fear conditioning) to ensure consistent dB SPL across trials and cohorts. | Must provide traceable calibration certificate; used daily before sessions. |

| Light Meter | Measures lux levels in behavioral arenas to standardize ambient illumination, a critical variable for anxiety tests. | Digital meter with cosine correction; calibration checked quarterly. |

| Standardized Bedding | Provides olfactory context; non-standard bedding introduces confounding variability. | Use identical, unscented, corn-cob bedding across all subjects and batches. |

| Timer Calibration Box | Independently verifies the millisecond precision of TTL pulses and software timers across all devices. | Validates that a 1000 ms software command triggers a 1000 ± 1 ms hardware pulse. |

| Reference Video Files | A set of pre-recorded animal movement videos with human-annotated "ground truth" positions. | Used to validate and benchmark the accuracy of any new video tracking installation or update. |

| Metadata Schema Template | A digital form (e.g., JSON schema) that forces entry of all apparatus parameters at collection time. | Must include fields for device model, firmware version, software version, and key settings (e.g., sampling rate, threshold). |
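To illustrate how a metadata template can force complete entry, the sketch below runs a plain required-field check on one apparatus record. It is not a full JSON Schema validator, and the field names (`device_model`, `firmware_version`, `software_version`, `settings`) are assumed spellings of the fields listed in the table rather than a published BDQSA schema.

```python
import json

# Assumed field names, mirroring the fields named in the table above
REQUIRED_FIELDS = ("device_model", "firmware_version", "software_version", "settings")


def missing_fields(record: dict) -> list:
    """List required fields that are absent or left empty in one apparatus record."""
    return [f for f in REQUIRED_FIELDS if record.get(f) in (None, "", {}, [])]


# Hypothetical record as it might be saved at collection time
record_json = """
{"device_model": "example-amplifier",
 "firmware_version": "",
 "software_version": "1.0.0",
 "settings": {"sampling_rate_hz": 30000}}
"""
record = json.loads(record_json)
problems = missing_fields(record)
print(problems)
```

Rejecting a session at collection time whenever this list is non-empty keeps incomplete apparatus records from reaching the preprocessing stage.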

Integrating BDQSA Output with Statistical Software (R, Python, SPSS)

1. Introduction

Within the broader thesis on the Behavioral Data Quality and Standardization Assessment (BDQSA) model, the critical step following data preprocessing is the integration of its output (cleaned, standardized, and quality-flagged datasets) into mainstream statistical environments. This protocol provides detailed application notes for researchers and drug development professionals who need to move BDQSA-curated behavioral science data into R, Python, and SPSS for advanced analysis.

2. BDQSA Output Structure & Data Mapping

The BDQSA model generates a standardized output package, the structure of which is essential for integration.

Table 1: Core Components of BDQSA Output Package

| Component | Format | Description | Primary Use Case |

|---|---|---|---|

| `cleaned_dataset` | CSV, Parquet | The primary cleaned dataset with standardized variables. | Primary statistical analysis. |

| `quality_flags` | CSV | Row- and column-level flags indicating data quality issues (e.g., `missing_threshold`, `variance_alert`). | Sensitivity analysis, data masking. |

| `metadata_dictionary` | JSON | Variable definitions, units, transformation logs, and scoring algorithms. | Analysis documentation, reproducible scripting. |

| `processing_log` | Text | Audit trail of all preprocessing steps applied by the BDQSA model. | Regulatory compliance, method reproducibility. |

3. Experimental Protocols for Integration

Protocol 3.1: Integration with R

Objective: To import BDQSA outputs into R for statistical modeling and visualization.

Materials: R (v4.3.0+), RStudio, tidyverse, jsonlite, haven packages.

Procedure:

- Set Working Directory: Use `setwd()` to point to the BDQSA output directory.

- Import Data: `main_data <- read_csv("cleaned_dataset.csv")`.

- Import & Merge Quality Flags: `flags <- read_csv("quality_flags.csv")`; merge into `main_data` using a unique key (e.g., subject ID), for example with `left_join()`.

- Load Metadata: `meta <- fromJSON("metadata_dictionary.json")` to access variable labels and constraints.

- Apply Quality Flags for Analysis: Subset high-quality data from the merged data frame: `high_quality_data <- main_data %>% filter(overall_flag == "PASS")`. Filtering on the merged column, rather than on `flags$overall_flag`, avoids relying on the two files sharing the same row order.

- Utilize in Analysis: Proceed with planned analyses (e.g., mixed-effects models using `lme4`) on the prepared data frame.

Protocol 3.2: Integration with Python

Objective: To load BDQSA outputs into Python for machine learning or computational analysis.

Materials: Python (v3.9+), Jupyter, pandas, numpy, json, scikit-learn libraries.

Procedure:

- Import Libraries: `import pandas as pd, json`.

- Read Datasets: `df = pd.read_parquet('cleaned_dataset.parquet', engine='pyarrow')` for efficiency, or `df = pd.read_csv('cleaned_dataset.csv')` for the CSV export.

- Attach Quality Flags: `flags_df = pd.read_csv('quality_flags.csv')`; merge into `df` on a unique key using `pd.merge()`.

- Incorporate Metadata: `with open('metadata_dictionary.json') as f: meta = json.load(f)` to guide feature engineering.

- Data Splitting with Quality Control: Create a model-ready dataset from the merged frame: `train_set = df[df['missingness_flag'] == 0].copy()`.

- Analysis Pipeline: Use the clean DataFrame in pipelines (e.g., `sklearn.pipeline`).
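The Python procedure can be sketched end to end as follows. In-memory CSV text stands in for the BDQSA output files so the example runs on its own; the column names (`subject_id`, `open_arm_pct`, `missingness_flag`) and all values are invented for illustration, and real outputs may use different keys.

```python
import io
import json

import pandas as pd

# In-memory stand-ins for the BDQSA output files (contents are invented)
cleaned_csv = io.StringIO("subject_id,open_arm_pct\nS01,22.5\nS02,31.0\nS03,18.2\n")
flags_csv = io.StringIO("subject_id,missingness_flag\nS01,0\nS02,1\nS03,0\n")
meta = json.loads('{"open_arm_pct": {"units": "percent"}}')

df = pd.read_csv(cleaned_csv)
flags_df = pd.read_csv(flags_csv)

# Merge on the key so filtering never depends on the two files sharing row order;
# validate raises if a subject appears more than once on either side
merged = pd.merge(df, flags_df, on="subject_id", how="left", validate="one_to_one")

# Keep rows flagged 0; rows with no matching flag (NaN) are excluded as well
train_set = merged[merged["missingness_flag"] == 0].copy()
print(train_set)
print("units:", meta["open_arm_pct"]["units"])
```

With the real package, the two `io.StringIO` objects would be replaced by file paths, and `pd.read_parquet` could be used for the Parquet export if pyarrow is installed.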

Protocol 3.3: Integration with SPSS

Objective: To utilize BDQSA outputs within the SPSS GUI for traditional statistical testing.

Materials: IBM SPSS Statistics (v28+).

Procedure:

- Direct Import: Use `File > Open > Data` to open `cleaned_dataset.csv`.

- Define Variable Properties: Use `metadata_dictionary.json` to manually set variable labels, measurement levels, and value labels in the Variable View.

- Merge Quality Flags: Use `Data > Merge Files > Add Variables` to import `quality_flags.csv`.

- Filter Cases: Use `Data > Select Cases` with the condition `overall_flag = 1` (the flag variable merged from `quality_flags.csv`) to analyze only quality-passed data.

- Syntax for Reproducibility: Document all steps in an SPSS syntax file (`*.sps`).

4. Visualization of Integration Workflow

[Figure: BDQSA Output Integration Pathway to Statistical Software]

5. The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Software Tools and Packages for Integration

| Tool/Package | Category | Primary Function in Integration |

|---|---|---|

| R `tidyverse` | R Package Suite | Data manipulation (dplyr), visualization (ggplot2), and importing (readr). |

| R `haven` | R Package | Import/export of SPSS, SAS, and Stata files for multi-platform workflows. |

| Python `pandas` | Python Library | Core data structure (DataFrame) for handling BDQSA tables and performing merges. |

| Python `pyarrow` | Python Library | Enables fast reading/writing of Parquet format BDQSA outputs. |

| IBM SPSS Statistics | GUI Software | Provides a point-and-click interface for analysts less familiar with scripting. |

| Jupyter Notebook | Development Environment | Creates reproducible narratives combining Python code, data, and visualizations. |

| JSON Viewer | Utility | Aids in inspecting the BDQSA metadata_dictionary.json structure. |

Within the broader thesis advocating for the Behavioral Data Quality and Sufficiency Assessment (BDQSA) model, this case study demonstrates its practical application as a preprocessing and quality control framework. The BDQSA model mandates a structured evaluation of data Quality (reliability, internal validity), Sufficiency (statistical power, external validity), and Analytical Alignment (fitness for intended statistical models) prior to formal analysis. Here, we apply BDQSA to common preclinical datasets modeling anxiety and cognitive impairment, highlighting how systematic preprocessing mitigates reproducibility issues in translational psychopharmacology.

BDQSA Application Notes for Preclinical Behavioral Data

A. Quality Dimension Assessment:

- Internal Validity Threats: Identify confounders specific to anxiety (e.g., altered locomotion confounding elevated plus maze (EPM) open arm time) and cognition (e.g., motivation or sensorimotor deficits confounding Morris water maze (MWM) performance).

- Data Reliability Checks: Implement outlier detection using pre-defined, protocol-based criteria (e.g., EPM: animal falling; MWM: floating; Social test: lack of exploration in all zones).

- Standardization: Audit protocol variables against guidelines such as the NIH Toolkit or EMPReSS.

B. Sufficiency Dimension Assessment:

- Power Analysis Verification: Pre-register sample size justifications. For post-hoc assessment, calculate achieved power for key endpoints (e.g., % open arm time, latency to target).

- Batch Effects: Document and plan statistical control for unavoidable batch variations (operator, time of day, shipment cohort).

C. Analytical Alignment Dimension Assessment:

- Distribution Testing: Test normality (Shapiro-Wilk) and homoscedasticity (Levene's test) for parametric tests commonly used (t-tests, ANOVA).

- Missing Data Protocol: Define handling strategy (e.g., exclusion vs. imputation) for missing trials or technical failures.
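The reliability and missing-data rules above are easier to audit when they are written down as code before unblinding. The sketch below applies pre-defined exclusion criteria to individual trials and records a reason for each exclusion. The `Trial` structure, the reason labels, and the 10 s floating cutoff are hypothetical placeholders; actual thresholds should come from the study protocol.

```python
from dataclasses import dataclass
from typing import Optional

MAX_FLOATING_S = 10.0  # hypothetical MWM floating cutoff; set per protocol


@dataclass
class Trial:
    subject_id: str
    test: str                   # "EPM" or "MWM"
    endpoint: Optional[float]   # None marks a missing trial or technical failure
    fell_from_maze: bool = False
    floating_s: float = 0.0


def exclusion_reason(trial: Trial) -> Optional[str]:
    """Return a protocol-defined exclusion label, or None if the trial is kept."""
    if trial.endpoint is None:
        return "missing_endpoint"
    if trial.test == "EPM" and trial.fell_from_maze:
        return "epm_fall"
    if trial.test == "MWM" and trial.floating_s > MAX_FLOATING_S:
        return "mwm_floating"
    return None


# Invented example trials
trials = [
    Trial("S01", "EPM", 24.0),
    Trial("S02", "EPM", 5.0, fell_from_maze=True),
    Trial("S03", "MWM", 41.3, floating_s=25.0),
    Trial("S04", "MWM", None),
    Trial("S05", "MWM", 18.7, floating_s=2.0),
]
reasons = {t.subject_id: exclusion_reason(t) for t in trials}
kept = [t.subject_id for t in trials if reasons[t.subject_id] is None]
print(reasons)
print(kept)
```

Storing the reason labels alongside the data, rather than silently dropping rows, leaves an audit trail that supports sensitivity analyses with and without the excluded trials.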

Protocols for Key Behavioral Experiments

Protocol 1: Elevated Plus Maze (EPM) for Anxiety-like Behavior

- Objective: Assess unconditioned anxiety-like behavior based on rodent's natural aversion to open, elevated spaces.

- Apparatus: Plus-shaped maze with two open arms (no walls) and two enclosed arms (high walls), elevated ~50 cm.

- Procedure:

- Habituate animal to testing room for 60 minutes under dim light.

- Place animal in central square, facing an open arm.

- Record behavior for 5 minutes via overhead camera.