Analyzing Among-Individual Variation in R: A Complete Tutorial for Biomedical Research and Precision Medicine

Abstract

This comprehensive R tutorial guides biomedical researchers through the complete analysis pipeline for quantifying and interpreting among-individual variation. Covering foundational concepts, practical implementation with mixed-effects models, troubleshooting common issues, and validation techniques, this article provides essential tools for applications in pharmacology, clinical trial analysis, and personalized treatment strategies. We demonstrate key R packages (lme4, nlme, brms) with real-world examples to help scientists move beyond population averages and understand individual differences in treatment response, biomarker variability, and disease progression.

Why Individual Variation Matters: Foundational Concepts and Data Exploration in R

In biomedical research, accurately partitioning variation is fundamental for study design, analysis, and interpretation. This section gives key definitions and application notes for analyzing among-individual variation in R.

- Among-Individual Variation (Between-Subject Variation): The differences in a measured trait (e.g., biomarker level, drug response) that exist between different individuals in a population. This variation reflects stable, inherent differences such as genetics, long-term environmental exposures, or demographic factors.

- Within-Individual Variation (Intra-Individual Variation): The fluctuations in a measured trait that occur within the same individual over time or across repeated measurements. This variation can be due to circadian rhythms, transient environmental factors, measurement error, or biological stochasticity.

Table 1: Illustrative Sources of Variation in Common Biomedical Measurements

| Measurement Type | Primary Source of Among-Individual Variation | Primary Source of Within-Individual Variation | Typical Among-Individual CV | Typical Within-Individual CV |

|---|---|---|---|---|

| Fasting Plasma Glucose | Genetics, insulin resistance status, body composition | Time of day, recent diet, stress, activity | Among: 10-15% | Within: 5-8% |

| Serum Cholesterol (Total) | Genetic polymorphisms (e.g., LDLR, PCSK9), age, sex | Dietary intake over days, seasonal changes, assay variability | Among: 15-20% | Within: 6-10% |

| Blood Pressure (Systolic) | Vascular stiffness, chronic kidney disease, ethnicity | Circadian rhythm, acute stress, posture, recent activity | Among: 12-18% | Within: 8-12% |

| Plasma Cytokine (e.g., IL-6) | Chronic inflammatory status, autoimmune conditions | Acute infection, recent exercise, circadian oscillation | Among: 30-50%+ | Within: 20-40%+ |

| Drug PK Parameter (AUC) | Genetics (e.g., CYP450 polymorphisms), organ function | Food effect, concomitant medications, timing | Among: 25-70%+ | Within: 10-25% |

Table 2: Implications for Study Design Based on Variation Type

| Study Objective | Critical Variation to Characterize | Recommended Design | Key R Analysis Package (Example) |

|---|---|---|---|

| Identify biomarkers for disease risk | Among-Individual | Cross-sectional cohort study | lme4, nlme for ICC calculation |

| Assess reliability of a diagnostic test | Within-Individual (and Measurement Error) | Repeated measures on same subjects, same timepoint | psych (for ICC), blandr |

| Personalize dosing regimens | Both (Ratio of Among to Within) | Rich longitudinal pharmacokinetic sampling | nlme, brms for mixed-effects PK models |

| Track disease progression in an individual | Within-Individual | High-frequency longitudinal monitoring | mgcv for smoothing, changepoint |

Experimental Protocols

Protocol 3.1: Estimating the Intraclass Correlation Coefficient (ICC) for Biomarker Reliability

Objective: To quantify the proportion of total variance in a biomarker attributable to stable among-individual differences versus within-individual fluctuation/error.

Materials: See "The Scientist's Toolkit" (Section 5).

Methodology:

- Study Design: Recruit a representative sample of N individuals (e.g., N = 30). Collect k repeated samples (e.g., k = 3) from each individual under standardized conditions (e.g., same time of day, fasting status), with sufficient spacing (e.g., 1 week apart) to capture typical within-individual variation.

- Sample Analysis: Process and assay all samples in a single batch using a validated protocol to minimize inter-batch measurement error.

- Data Analysis in R: Fit a one-way random-effects ANOVA model (a random intercept for each individual) using the `lme4` package, then compute the ICC as the among-individual variance divided by the total (among plus within) variance.

- Interpretation: An ICC > 0.75 suggests high reliability (most variance is among-individual). ICC < 0.5 indicates high within-individual variation relative to among-individual differences, making single measurements less reliable for characterizing an individual.
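The data-analysis step of Protocol 3.1 can be sketched as follows. This is a minimal illustration on simulated data, not a validated analysis script: the data frame `biomarker`, its columns `id` and `value`, and the chosen standard deviations are all assumptions made for the example.

```r
# Minimal sketch: ICC from a one-way random-effects model (simulated data)
library(lme4)

set.seed(42)
n_id <- 30   # individuals (assumed; mirrors the example in the protocol)
k    <- 3    # repeated samples per individual

# Hypothetical biomarker: among-individual SD = 10, within-individual SD = 5
id_effect <- rnorm(n_id, mean = 0, sd = 10)
biomarker <- data.frame(
  id    = factor(rep(seq_len(n_id), each = k)),
  value = 100 + rep(id_effect, each = k) + rnorm(n_id * k, sd = 5)
)

# Random intercept per individual; the only fixed effect is the grand mean
fit <- lmer(value ~ 1 + (1 | id), data = biomarker)

# Variance components: among-individual (id) and within-individual (Residual)
vc         <- as.data.frame(VarCorr(fit))
var_among  <- vc$vcov[vc$grp == "id"]
var_within <- vc$vcov[vc$grp == "Residual"]

# ICC = among-individual variance / total variance
icc <- var_among / (var_among + var_within)
round(c(var_among = var_among, var_within = var_within, icc = icc), 3)
```

With real data, replace the simulated `biomarker` data frame with the long-format assay results (one row per sample).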

Protocol 3.2: Longitudinal Mixed-Effects Modeling for Pharmacokinetic (PK) Analysis

Objective: To model drug concentration-time profiles, partitioning variation into population-level (fixed) effects, individual-specific (random) deviations (among-individual), and residual (within-individual) error.

Materials: See "The Scientist's Toolkit" (Section 5).

Methodology:

- Study Design: Conduct a rich- or sparse-sampling PK study in M individuals. Record dose, administration time, and sampling times precisely.

- Bioanalysis: Quantify drug concentration in all plasma samples.

- Data Analysis in R: Define a structural PK base model (e.g., one-compartment with oral absorption) and fit it with the `nlme` package for nonlinear mixed-effects modeling.
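As one possible illustration of the base-model step, the sketch below fits a one-compartment oral model to the `Theoph` dataset that ships with R, using `SSfol()` from base R and `nlsList()`/`nlme()` from the `nlme` package. The choice of dataset and the decision to place random effects only on `lKa` and `lCl` are assumptions for the example, not part of the protocol.

```r
library(nlme)  # recommended package, included with standard R installations

# Theoph: 12 subjects, single oral theophylline dose, serial concentrations
# Step 1: separate per-subject fits supply starting values
pk_list <- nlsList(conc ~ SSfol(Dose, Time, lKe, lKa, lCl), data = Theoph)

# Step 2: nonlinear mixed-effects model
# Fixed effects: population log-parameters (lKe, lKa, lCl)
# Random effects: uncorrelated subject deviations on lKa and lCl (assumed)
pk_fit <- nlme(pk_list, random = pdDiag(lKa + lCl ~ 1))

fixef(pk_fit)        # population-level (fixed) estimates on the log scale
VarCorr(pk_fit)      # among-individual SDs and residual (within) SD
head(ranef(pk_fit))  # individual-specific deviations from the population
```

The `VarCorr()` output separates the among-individual standard deviations from the residual standard deviation, which is the partition described in the objective.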

Mandatory Visualizations

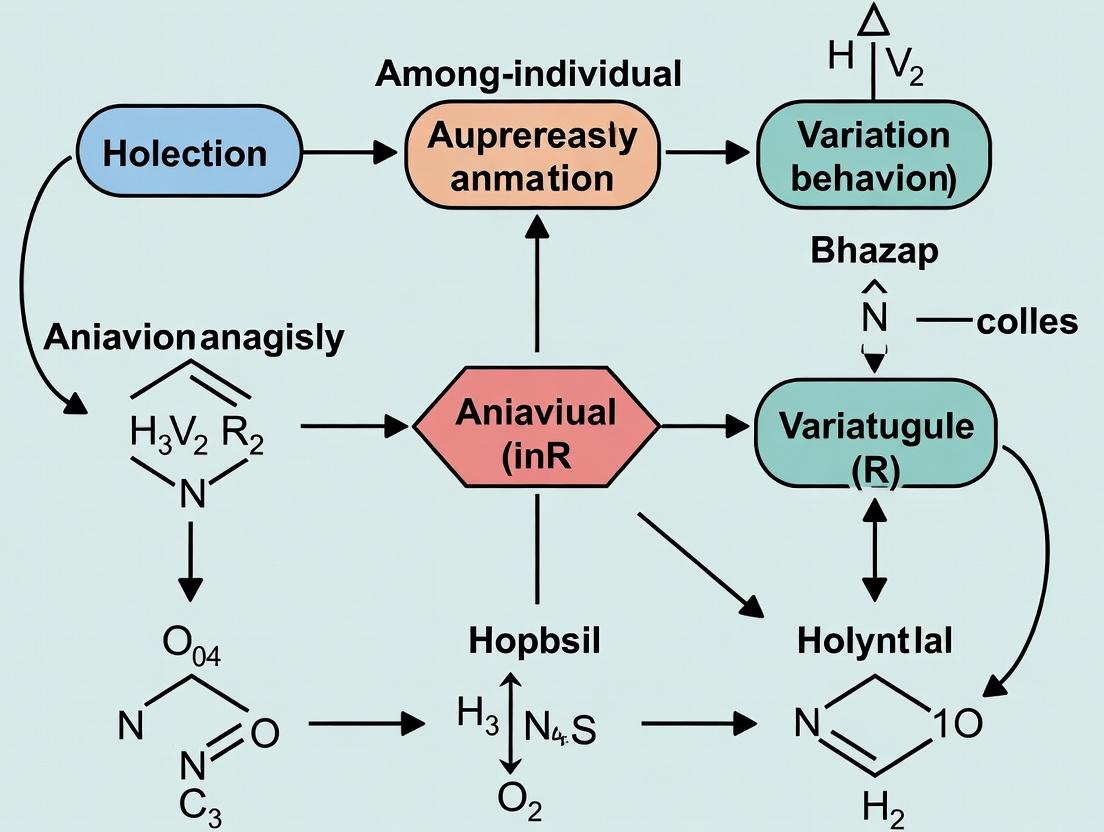

Title: Partitioning Total Biological Variation

Title: Analysis Workflow for Variation Studies

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions and Materials

| Item | Function / Relevance to Variation Analysis | Example Product / Specification |

|---|---|---|

| EDTA or Heparin Plasma Tubes | Standardized collection for biomarker stability. Minimizes pre-analytical within-individual variation due to clotting. | BD Vacutainer K2EDTA tubes |

| Liquid Chromatography-Mass Spectrometry (LC-MS) | Gold-standard for quantifying small molecule drugs and metabolites. High specificity reduces measurement error (a component of within-individual variance). | Triple quadrupole LC-MS systems |

| Multiplex Immunoassay Panels | Simultaneous quantification of multiple proteins (e.g., cytokines) from a single low-volume sample. Reduces technical variance when comparing analytes. | Luminex xMAP assays, Meso Scale Discovery (MSD) U-PLEX |

| Stable Isotope-Labeled Internal Standards (for LC-MS) | Corrects for sample-to-sample variability in extraction and ionization efficiency, isolating biological from technical within-run variation. | ¹³C or ²H-labeled analogs of target analytes |

| Digital Pharmacometric Software | Enables nonlinear mixed-effects modeling (NLMEM), the standard method for partitioning PK/PD variation. | R (nlmixr2), Phoenix NLME, NONMEM |

| Biospecimen Repository Management System | Tracks longitudinal sample lineage, ensuring correct linking of repeats to an individual, fundamental for variance component analysis. | FreezerPro, OpenSpecimen |

The Critical Role of Variance Components in Pharmacology and Clinical Research

1. Introduction and Quantitative Data Synthesis

Understanding the partitioning of total observed variability in pharmacological data is fundamental for robust study design, dose optimization, and personalized medicine. The total variance (σ²_Total) in a measured response (e.g., drug concentration, change in blood pressure) can be decomposed into key components.

Table 1: Primary Variance Components in Pharmacological Studies

| Variance Component | Symbol | Typical Source | Impact on Research |

|---|---|---|---|

| Between-Subject (Inter-individual) | σ²_BSV | Genetics, physiology, disease severity, demographics. | Drives dose individualization; key for identifying covariates. |

| Within-Subject (Intra-individual) | σ²_WSV | Time-varying factors, diet, adherence, circadian rhythms. | Determines required sampling frequency and bioequivalence criteria. |

| Residual (Unexplained) | σ²_RES | Analytical error, model misspecification, unpredictable fluctuations. | Defines the lower bound of predictive accuracy. |

| Between-Occasion | σ²_BOV | Visit-to-visit changes in patient status or environment. | Critical for study power in crossover designs and long-term trials. |

Table 2: Example Variance Estimates from a Simulated Pharmacokinetic Study of Drug X (PopPK Analysis)

| Parameter | Estimate | Between-Subject Variability (ω², CV%) | Residual Error (σ²) |

|---|---|---|---|

| Clearance (CL) | 5.2 L/h | 0.122 (35%) | Proportional: 0.106 |

| Volume (Vd) | 42.0 L | 0.089 (30%) | Additive: 1.2 mg/L |

| Bioavailability (F) | 0.85 | 0.211 (46%) | - |

2. Experimental Protocol: Quantifying Variance Components via Population Pharmacokinetic (PopPK) Analysis

Objective: To estimate between-subject (BSV), between-occasion (BOV), and residual variability for key PK parameters using nonlinear mixed-effects modeling (NLMEM).

Materials & Software: R (v4.3+), nlme or nlmixr2 package, NONMEM or Monolix for validation, patient PK data.

Procedure:

- Data Structure: Prepare data in a column-wise format: ID, TIME, DV (dependent variable, e.g., concentration), AMT (dose), EVID (event ID), OCCASION (occasion number), COV1-COVn (covariates).

- Base Model Development:
  - Specify the structural PK model (e.g., 1- or 2-compartment) using the `nlmixr2`/`rxode2` model syntax.
  - Define initial parameter estimates and the error model (e.g., combined additive and proportional).
  - Assume a log-normal distribution for BSV: P_i = TVP * exp(η_i), where η_i ~ N(0, ω²).
  - Fit the model using the `focei` estimation algorithm.

- BOV Integration:
  - Extend the BSV model to include occasion-specific random effects: P_ij = TVP * exp(η_i + κ_ij), where κ_ij ~ N(0, π²) for the jth occasion of subject i.
  - Nest the κ effect within `ID` and `OCCASION` in the model code.

- Model Fitting & Diagnostics:
  - Execute model fitting. Check convergence (gradient conditions, covariance step).
  - Generate diagnostic plots: Observations vs. Population/Individual Predictions, Conditional Weighted Residuals vs. Time/Predictions.
  - Compare models with/without BOV using a likelihood ratio test (LRT) or the Akaike Information Criterion (AIC).

- Variance-Covariance Output:
  - Extract the estimated variances (ω², π²) and covariance terms from the model output's `omega` matrix.
  - Calculate %CV as `sqrt(exp(ω²) - 1) * 100`.
  - Report final parameter and variance component estimates with precision (RSE%).
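The %CV conversion in the last step can be checked with a few lines of base R. The sketch below uses the ω² values from Table 2 and compares the exact log-normal formula with the common small-variance approximation `sqrt(ω²) * 100`; the object names are illustrative. The CV% values printed in Table 2 appear to match the approximation more closely than the exact formula.

```r
# Convert log-scale variances (omega^2) to %CV for log-normal parameters
omega2 <- c(CL = 0.122, Vd = 0.089, F = 0.211)  # omega^2 values from Table 2

cv_exact  <- sqrt(exp(omega2) - 1) * 100  # exact for a log-normal distribution
cv_approx <- sqrt(omega2) * 100           # approximation, adequate for small omega^2

# The two agree closely for small omega^2 and diverge as omega^2 grows
round(rbind(cv_exact, cv_approx), 1)
```

When reporting, state which formula was used, since the difference becomes noticeable for highly variable parameters.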

3. Visualization: Variance Partitioning Workflow

Title: Partitioning Total Variance in Pharmacological Data

4. The Scientist's Toolkit: Key Reagents & Software for Variance Analysis

Table 3: Essential Research Toolkit for Variance Component Analysis

| Item / Solution | Category | Primary Function in Analysis |

|---|---|---|

| R with nlmixr2 & ggplot2 | Software | Open-source platform for NLMEM model specification, fitting, and diagnostic visualization. |

| NONMEM | Software | Industry-standard software for robust PopPK/PD model fitting and variance-covariance estimation. |

| PsN (Perl-speaks-NONMEM) | Software Toolkit | Automates model diagnostics, bootstrapping, and covariate model building. |

| Validated LC-MS/MS Assay | Analytical Reagent | Provides the primary concentration (DV) data with characterized analytical error (part of σ²_RES). |

| Stable Isotope Labeled Internal Standards | Analytical Reagent | Minimizes pre-analytical and analytical variability in bioassays, reducing noise. |

| Clinical Data Management System (CDMS) | Software | Ensures accurate capture of dosing records, sampling times, and covariates critical for modeling. |

| Genetic DNA/RNA Sequencing Kits | Molecular Biology Reagent | Enables identification of genomic covariates (e.g., CYP450 polymorphisms) explaining σ²_BSV. |

For researchers analyzing among-individual variation in fields like behavioral ecology, pharmacology, and drug development, a robust R environment is critical. This protocol details the setup and use of three foundational packages: lme4 for fitting linear mixed-effects models to partition variance, ggplot2 for sophisticated data visualization, and performance for model diagnostics and comparison. These tools form the core of a reproducible workflow for quantifying individual differences.

Core Package Installation & Configuration

System Requirements and Pre-installation Check

- R Version: ≥ 4.0.0 is required for all current package versions.

- System Libraries: On Linux, ensure development tools are installed (e.g., `build-essential` on Debian/Ubuntu).

- RStudio: Recommended (version ≥ 2023.12.0).

Installation Protocol

Execute the following commands in a fresh R session. The dependencies = TRUE argument ensures required auxiliary packages are installed.
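A minimal sketch of the installation step, assuming the default CRAN mirror is reachable. The helper variable `missing_pkgs` is introduced here only so that already-installed packages are not reinstalled.

```r
# Packages named in this protocol
pkgs <- c("lme4", "ggplot2", "performance")

# Identify packages that are not yet available in the library
missing_pkgs <- pkgs[!vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)]

# Install only what is missing, together with auxiliary dependencies
if (length(missing_pkgs) > 0) {
  install.packages(missing_pkgs, dependencies = TRUE)
}
```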

Post-Installation Validation

Verify correct installation and check versions.
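One way to produce a validation table like the one below is sketched here. The data frame name `status` and its column names are assumptions; version numbers on your system will differ from the example values shown in Table 1.

```r
pkgs <- c("lme4", "ggplot2", "performance")

# TRUE if the package namespace can be loaded, FALSE otherwise
loaded <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)

# Installed version as text, or NA when the package is missing
versions <- vapply(
  pkgs,
  function(p) if (loaded[[p]]) as.character(packageVersion(p)) else NA_character_,
  character(1)
)

status <- data.frame(
  Package             = pkgs,
  Version             = versions,
  Loaded_Successfully = loaded,
  row.names           = NULL
)
print(status)
```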

Table 1: Package Version Validation

| Package | Version | Loaded Successfully |

|---|---|---|

| lme4 | 1.1-35.1 | TRUE |

| ggplot2 | 3.5.0 | TRUE |

| performance | 0.13.0 | TRUE |

Essential Workflow for Among-Individual Variance Analysis

Experimental Data Structure Protocol

Data must be in long format. For a repeated measures study (e.g., drug response over time across individuals), columns should include:

- `Individual_ID`: A unique factor for each subject (random effect).

- `Time`: Continuous or factor for measurement occasions.

- `Response`: Continuous dependent variable (e.g., concentration, activity score).

- `Treatment`: Experimental group factor (fixed effect).

Protocol: Fitting a Linear Mixed Model with lme4

This model partitions variance into within-individual (residual) and among-individual components.
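A hedged sketch of such a model follows. The data are simulated so that the example is self-contained: the effect sizes, the group labels `Control` and `Drug`, and the object names `dat` and `m_int` are assumptions; only the column names come from the data structure protocol above.

```r
library(lme4)

set.seed(1)
n_id  <- 40
times <- 0:4

# Hypothetical long-format data: one row per individual and time point
dat <- expand.grid(Time = times, Individual_ID = factor(seq_len(n_id)))
dat$Treatment <- factor(ifelse(as.integer(dat$Individual_ID) <= n_id / 2,
                               "Control", "Drug"))
id_dev <- rnorm(n_id, sd = 2)               # among-individual deviations
dat$Response <- 10 +
  1.5 * (dat$Treatment == "Drug") +
  0.5 * dat$Time +
  id_dev[as.integer(dat$Individual_ID)] +
  rnorm(nrow(dat), sd = 1)                  # within-individual noise

# Fixed effects: Treatment, Time, and their interaction
# Random effect: one intercept per individual
m_int <- lmer(Response ~ Treatment * Time + (1 | Individual_ID), data = dat)

VarCorr(m_int)                    # among-individual and residual SDs
head(ranef(m_int)$Individual_ID)  # BLUPs: individual deviations from the mean
```

The `Individual_ID` row of the `VarCorr()` output is the among-individual component; the `Residual` row is the within-individual component.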

Table 2: Key lme4 Functions for Variance Partitioning

| Function | Primary Use Case | Output Relevance |

|---|---|---|

| `lmer()` | Fit linear mixed models (Gaussian response) | Primary tool for variance component estimation. |

| `glmer()` | Fit generalized mixed models (non-Gaussian response) | For binomial, Poisson, etc., data. |

| `VarCorr()` | Extract variance and correlation components | Directly provides among-individual (`Individual_ID`) and residual variance. |

| `ranef()` | Extract Best Linear Unbiased Predictors (BLUPs) | Estimates of individual deviation from population mean. |

Protocol: Visualizing Individual Trajectories with ggplot2

Create a spaghetti plot to visualize raw data and model predictions.

Protocol: Model Diagnostics & Comparison with performance

Assess model quality and compare competing hypotheses about random effects structure.
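The comparison of random-effects structures can be sketched with the `sleepstudy` data that ship with `lme4`. That dataset is a stand-in chosen for this example, not the drug-response data described earlier, and the model names are illustrative.

```r
library(lme4)
library(performance)

# sleepstudy: reaction time over days of sleep deprivation, 18 subjects
# Hypothesis 1: individuals differ only in their average level
m_int   <- lmer(Reaction ~ Days + (1 | Subject),
                data = sleepstudy, REML = FALSE)
# Hypothesis 2: individuals also differ in their rate of change
m_slope <- lmer(Reaction ~ Days + (1 + Days | Subject),
                data = sleepstudy, REML = FALSE)

icc(m_int)                           # share of variance among individuals
r2(m_int)                            # marginal and conditional R2
compare_performance(m_int, m_slope)  # AIC, BIC, R2, ICC, RMSE side by side

# check_model(m_int)  # multi-panel diagnostics; needs the 'see' package
```

A lower AIC for `m_slope` would suggest that individuals differ in their slopes as well as their intercepts.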

Table 3: Key performance Metrics for Model Evaluation

| Metric | Interpretation for Among-Individual Studies |

|---|---|

| ICC (Intraclass Correlation) | Proportion of total variance due to among-individual differences. High ICC (>0.5) suggests substantial individual consistency. |

| Marginal/Conditional R² | Variance explained by fixed effects only (marginal) vs. fixed + random effects (conditional). |

| AIC (Akaike’s Criterion) | For model comparison; lower AIC suggests better fit, penalizing complexity. |

| Diagnostic Plots | Q-Q plot (normality of random effects), homogeneity of residuals. |

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Computational Reagents for Among-Individual Analysis

| Reagent (Package/Function) | Function |

|---|---|

| lme4::lmer() | Fits the linear mixed model to partition within- and among-individual variance. |

| performance::icc() | Calculates the Intraclass Correlation Coefficient, the key metric for individual repeatability. |

| ggplot2::geom_line() | Creates "spaghetti plots" to visualize raw individual trajectories. |

| performance::check_heteroscedasticity() | Diagnoses non-constant variance, a common violation in hierarchical data. |

| lme4::ranef() | Extracts individual-level random effects (BLUPs) for downstream analysis or ranking. |

| Matrix::sparseMatrix() | Underpins efficient computation of large random effects models (handled internally by lme4). |

| see::plot(check_model()) | Generates a multi-panel diagnostic plot for model assumptions, essential for reporting. |

Workflow & Conceptual Diagrams

Workflow for Individual Variation Analysis in R

Partitioning Variance in Mixed Models

Within the broader thesis on R tutorials for among-individual variation analysis in biological and pharmacological research, this protocol addresses the critical need for effective visualization. Understanding variation—between subjects, across time, and within treatments—is foundational in translational science. This document provides detailed application notes for creating two powerful, complementary visualizations: Raincloud Plots (for univariate distribution comparison) and Spaghetti Plots (for longitudinal trajectory analysis) using ggplot2, enabling researchers to transparently communicate complex variation patterns in their data.

Core Concepts & Data Presentation

Table 1: Comparison of Visualization Tools for Variation Analysis

| Feature | Raincloud Plot | Spaghetti Plot | Traditional Boxplot |

|---|---|---|---|

| Primary Purpose | Display full univariate distribution & summary statistics across groups. | Visualize individual longitudinal trajectories and group-level trends over time. | Display summary statistics (median, IQR) across groups. |

| Data Revealed | Raw data (points), density distribution, and summary statistics (box, median). | Individual subject lines, connecting points, and often a group mean trend line. | Only summary statistics; hides raw data and distribution shape. |

| Key for Variation | Highlights within-group distribution shape, outliers, and between-group differences. | Reveals between-individual variation in response patterns (slope, magnitude) and within-individual consistency. | Obscures distribution shape and individual data points. |

| Ideal Use Case | Comparing a single endpoint (e.g., biomarker level) across multiple treatment arms at one time point. | Analyzing repeated measures (e.g., patient response to a drug over weeks, PK/PD time series). | Quick, simplified comparison when data privacy or overplotting is a concern. |

| ggplot2 Elements | geom_jitter(), geom_violin(), geom_boxplot(); half-violins via stat_halfeye() (ggdist) or geom_half_violin() (gghalves). | geom_line(), geom_point(), geom_smooth(). | geom_boxplot(). |

Experimental Protocols

Protocol 1: Creating a Raincloud Plot

Objective: To generate a comprehensive visualization comparing the distribution of a continuous variable (e.g., Final Tumor Volume) across multiple categorical groups (e.g., Drug Treatment Arms).

Methodology:

- Data Preparation: Ensure data is in a long format. Required variables: one continuous numeric column (`y_var`) and one categorical column (`group_var`).

- Package Installation: Execute `install.packages(c("ggplot2", "ggdist", "gghalves"))` in R.

- Plot Construction: Use the following R code template, replacing `data`, `group_var`, and `y_var` with your specific variables.

- Interpretation: Assess the density shape (spread along the measurement axis reflects variability, peaks mark common values), median (boxplot center line), data spread (jittered points), and potential outliers.
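A possible template for the plot-construction step is sketched below. It uses only `ggplot2` and `ggdist` (the `gghalves` geoms are left out to keep dependencies small), and the simulated tumor volumes and arm labels are invented for illustration.

```r
library(ggplot2)
library(ggdist)

set.seed(7)
# Hypothetical final tumor volumes for three treatment arms
data <- data.frame(
  group_var = factor(rep(c("Vehicle", "Drug A", "Drug B"), each = 40),
                     levels = c("Vehicle", "Drug A", "Drug B")),
  y_var = c(rnorm(40, 900, 150), rnorm(40, 700, 200), rnorm(40, 500, 120))
)

raincloud <- ggplot(data, aes(x = group_var, y = y_var, fill = group_var)) +
  # "Cloud": half-density, offset to one side of the group position
  stat_halfeye(adjust = 0.6, width = 0.6, .width = 0,
               justification = -0.2, point_colour = NA) +
  # Summary: narrow boxplot; outlier symbols suppressed (raw points shown below)
  geom_boxplot(width = 0.12, outlier.shape = NA, alpha = 0.5) +
  # "Rain": jittered raw observations at the group position
  geom_point(position = position_jitter(width = 0.05, seed = 1),
             size = 1.2, alpha = 0.4) +
  coord_flip() +
  labs(x = "Treatment arm", y = "Final tumor volume (mm^3)") +
  theme_minimal() +
  theme(legend.position = "none")

raincloud
```

The `adjust` and `justification` values control density smoothing and the offset of the half-violin; they are starting points to tune, not recommended defaults.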

Protocol 2: Creating a Spaghetti Plot

Objective: To visualize individual subject trajectories and overall group trends for a continuous outcome (e.g., Serum Concentration) measured over time across different cohorts.

Methodology:

- Data Structure: Data must be in long format with columns: Subject ID (`id_var`), Time (`time_var`), Continuous Measure (`y_var`), and Group (`group_var`).

- Plot Construction: Use the following R code template.

- Interpretation: Observe the variation in individual paths (spaghetti strands) around the thick group mean line. Tightly clustered, parallel strands indicate low among-individual variation. Parallel strands that are vertically offset indicate variation in baseline level (intercepts), while strands that fan out or cross indicate variation in the rate of change (slopes).
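The plot-construction step might look like the sketch below. The data are simulated with subject-specific intercepts and slopes so that the strands visibly diverge; the cohort labels, time points, and the data frame name `spag` are assumptions. The `linewidth` argument requires ggplot2 3.4.0 or later.

```r
library(ggplot2)

set.seed(11)
n_id  <- 24
times <- c(0, 1, 2, 4, 8)

# Hypothetical long-format data: one row per subject and time point
spag <- expand.grid(time_var = times, id_var = factor(seq_len(n_id)))
spag$group_var <- factor(ifelse(as.integer(spag$id_var) <= n_id / 2,
                                "Cohort 1", "Cohort 2"))
intercepts <- rnorm(n_id, mean = 20, sd = 3)      # subject-specific baselines
slopes     <- rnorm(n_id, mean = -1.5, sd = 0.5)  # subject-specific slopes
i <- as.integer(spag$id_var)
spag$y_var <- intercepts[i] + slopes[i] * spag$time_var +
  rnorm(nrow(spag), sd = 0.8)

spaghetti <- ggplot(spag, aes(x = time_var, y = y_var, colour = group_var)) +
  # One thin line per subject: the "strands"
  geom_line(aes(group = id_var), alpha = 0.35) +
  geom_point(alpha = 0.35, size = 0.8) +
  # Thick line: mean of y_var at each time point within each group
  stat_summary(aes(group = group_var), fun = mean, geom = "line",
               linewidth = 1.3) +
  labs(x = "Time", y = "Serum concentration", colour = "Cohort") +
  theme_minimal()

spaghetti
```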

Diagrams for Experimental Workflow

Diagram Title: Workflow for Choosing and Creating Variation Plots in R

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Variation Analysis & Visualization in R

| Item | Function in Analysis | Example/Note |
|---|---|---|
| R & RStudio | Core computational environment for data analysis, statistics, and graphics. | Use current versions (R >= 4.3.x). An Integrated Development Environment (IDE) is highly recommended. |
| ggplot2 package | Primary grammar-of-graphics plotting system to construct layered, customizable visualizations. | Foundation for all plots herein. ggplot(data, aes()) + geom_*(). |
| ggdist / gghalves | Extension packages providing specialized geoms for raincloud plots (half-violins, distribution summaries). | stat_halfeye(), geom_half_point() simplify raincloud construction. |
| tidyr / dplyr | Data wrangling packages to pivot data into long format and perform group-wise calculations. | pivot_longer(), group_by(), summarise() are essential preprocessing steps. |
| lme4 / nlme | Packages for fitting Linear Mixed-Effects Models (LMMs), the statistical backbone for quantifying sources of variation seen in spaghetti plots. | Models individual random effects (intercepts/slopes). |
| Clinical / Experimental Dataset | Structured data containing Subject ID, Time, Group, and Continuous Measures. | Must include repeated measures for spaghetti plots. Ensure ethical use and proper anonymization. |

Calculating Intraclass Correlation Coefficient (ICC) as a Baseline Metric

The Intraclass Correlation Coefficient (ICC) is a fundamental statistic for quantifying reliability and agreement in repeated measures data. Within the broader thesis on among-individual variation analysis in R, the ICC serves as a critical baseline metric. It partitions total variance into within-individual and among-individual components, establishing the proportion of total variance attributable to consistent differences between subjects. This is a prerequisite for further analyses of plasticity, repeatability, and behavioral syndromes. In drug development, ICC is used to assess the reliability of assay measurements, observer consistency in clinical trials, and the stability of biomarker readings over time.

Core Concepts & Data Presentation

ICC values range from 0 to 1, with interpretations as follows:

Table 1: Interpretation of ICC Estimates

| ICC Value Range | Interpretation | Implication for Among-Individual Variation |

|---|---|---|

| < 0.5 | Poor Reliability | Low consistency; most variance is within individuals. Among-individual analysis may be unreliable. |

| 0.5 – 0.75 | Moderate Reliability | Fair degree of consistent among-individual differences. Baseline for further analysis is acceptable. |

| 0.75 – 0.9 | Good Reliability | Substantial among-individual variation. Data suitable for partitioning variance into individual-level effects. |

| > 0.9 | Excellent Reliability | High consistency. Among-individual differences dominate the total variance observed. |

ICC models are selected based on experimental design:

Table 2: Common ICC Models (Shrout & Fleiss, 1979; McGraw & Wong, 1996)

| Model | Description | Formula (Variance Components) | Use Case |

|---|---|---|---|

| ICC(1) | One-way random effects: each target is measured by a different, random set of raters or occasions. | σ²target / (σ²target + σ²error) | Repeatability of a single measurement when the repeated measures within a subject are interchangeable. |

| ICC(2,1) | Two-way random effects for agreement: Multiple raters, random sample. | σ²target / (σ²target + σ²rater + σ²error) | Agreement among a random set of raters (e.g., different technicians). |

| ICC(3,1) | Two-way mixed effects for consistency: Multiple raters, fixed set. | σ²target / (σ²target + σ²error) | Consistency of measurements across a fixed set of conditions or raters. |

Experimental Protocols

Protocol 1: Calculating ICC from a Behavioral Repeatability Study

Objective: To establish the baseline repeatability of a foraging latency measurement in a rodent model.

Materials: See Scientist's Toolkit.

Procedure:

- Experimental Design: Measure the latency to approach a food source for 30 individual animals. Perform this measurement three times per animal, with trials separated by 48 hours.

- Data Structuring: Organize data in a long format CSV file with columns: `Animal_ID`, `Trial`, `Latency`.

- R Analysis Script:
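A minimal base-R sketch of such a script is given below. It computes ICC(1) from the one-way ANOVA mean squares, following the Shrout & Fleiss formulation, on simulated latencies. The simulated effect sizes and the data frame name `latency` are assumptions; with real data, the data frame would instead be read from the CSV file. The `psych` or `lme4` packages listed in the toolkit offer alternative routes to the same quantity.

```r
set.seed(3)
n_animals <- 30
k_trials  <- 3

# Hypothetical foraging latencies (s): stable animal effect plus trial noise
animal_effect <- rnorm(n_animals, sd = 8)
latency <- data.frame(
  Animal_ID = factor(rep(seq_len(n_animals), each = k_trials)),
  Trial     = rep(seq_len(k_trials), times = n_animals),
  Latency   = 60 + rep(animal_effect, each = k_trials) +
              rnorm(n_animals * k_trials, sd = 6)
)

# One-way ANOVA with animal as the only factor
aov_tab   <- summary(aov(Latency ~ Animal_ID, data = latency))[[1]]
ms_among  <- aov_tab[["Mean Sq"]][1]  # between-animal mean square
ms_within <- aov_tab[["Mean Sq"]][2]  # within-animal (residual) mean square

# ICC(1): repeatability of a single measurement
icc1 <- (ms_among - ms_within) / (ms_among + (k_trials - 1) * ms_within)
icc1
```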

Protocol 2: Assessing Assay Reliability in Drug Development

Objective: To determine the inter-rater reliability of a cytotoxicity score in a high-content screening assay.

Procedure:

- Design: Select 50 representative cell culture images. Have four trained raters score each image on a scale of 1-10 for cytotoxicity.

- Data Structuring: CSV file with columns: `Image_ID`, `Rater_ID`, `Score`.

- R Analysis Script:
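The script for this design can be sketched in base R from the two-way ANOVA mean squares, using the Shrout & Fleiss (1979) formulas for ICC(2,1) and ICC(3,1). The scores are simulated, and the rater bias and noise levels are assumptions for the example. The `irr` package named in the toolkit could serve as a cross-check.

```r
set.seed(5)
n_img   <- 50
k_rater <- 4

# Hypothetical scores: image effect + rater bias + noise, clipped to 1-10
scores <- expand.grid(Rater_ID = factor(seq_len(k_rater)),
                      Image_ID = factor(seq_len(n_img)))
img_eff   <- rnorm(n_img, sd = 2)
rater_eff <- rnorm(k_rater, sd = 0.5)
raw <- 5.5 + img_eff[as.integer(scores$Image_ID)] +
  rater_eff[as.integer(scores$Rater_ID)] + rnorm(nrow(scores), sd = 1)
scores$Score <- pmin(pmax(round(raw), 1), 10)

# Two-way ANOVA without interaction (one score per image-rater cell)
tab <- summary(aov(Score ~ Image_ID + Rater_ID, data = scores))[[1]]
ms  <- setNames(tab[["Mean Sq"]], trimws(rownames(tab)))
msr <- ms[["Image_ID"]]   # between-image (target) mean square
msc <- ms[["Rater_ID"]]   # between-rater mean square
mse <- ms[["Residuals"]]  # residual mean square

# ICC(2,1): absolute agreement, single rater, raters treated as random
icc21 <- (msr - mse) /
  (msr + (k_rater - 1) * mse + k_rater * (msc - mse) / n_img)
# ICC(3,1): consistency, single rater, raters treated as fixed
icc31 <- (msr - mse) / (msr + (k_rater - 1) * mse)

c(ICC2_1 = icc21, ICC3_1 = icc31)
```

ICC(2,1) penalizes systematic differences between raters, so it is the more relevant index when absolute agreement matters.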

Visualizations

Diagram 1: ICC Variance Partitioning Workflow

Title: Workflow for Partitioning Variance to Calculate ICC

Diagram 2: Relationship Between ICC Value and Data Structure

Title: Visualizing Low vs. High ICC in Repeated Measures Data

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for ICC-Relevant Experiments

| Item | Function/Description | Example Use Case |

|---|---|---|

| R Statistical Software | Open-source environment for data analysis and ICC calculation. | Running mixed models (lme4, nlme) and ICC-specific packages (psych, irr). |

| RStudio IDE | Integrated development environment for R, facilitating code organization and visualization. | Scripting the ICC analysis protocols detailed above. |

| psych R package | Provides the ICC() function for calculating multiple ICC types from a data matrix. | Quick calculation of Shrout & Fleiss ICC models in Protocol 1. |

| irr R package | Specialized for inter-rater reliability analysis, including various ICC formulations. | Assessing agreement among multiple raters in Protocol 2. |

| lme4 R package | Fits linear and generalized linear mixed-effects models to extract variance components. | The preferred method for flexible ICC calculation in complex designs. |

| Structured Data File (CSV) | Comma-separated values file with correctly formatted repeated measures data. | Essential input for all analysis scripts; requires columns for subject ID, trial/rater, and measurement. |

Introduction

Within the broader thesis on R tutorials for among-individual variation analysis, this protocol addresses critical model diagnostics. Valid inference from mixed models in pharmacological and biological research hinges on the assumptions of normally distributed random effects and homoscedasticity (constant variance). This document provides detailed application notes for assessing these assumptions using contemporary R packages.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Analysis |
|---|---|
| R lme4 Package | Fits linear and generalized linear mixed-effects models. Core engine for extracting random effects. |
| R nlme Package | Alternative for fitting mixed models, provides direct access to variance functions for heteroscedasticity. |
| R DHARMa Package | Creates readily interpretable diagnostic plots for model assumptions via simulation-based scaled residuals. |
| R performance Package | Calculates a comprehensive set of model diagnostics and checks, including normality of random effects. |
| R ggplot2 Package | Creates customizable, publication-quality diagnostic plots. |
| Shapiro-Wilk Test | Formal statistical test for assessing univariate normality of estimated random effects. |
| Scale-Location Plot | Diagnostic plot (fitted values vs. square root of absolute standardized residuals) to visually assess homoscedasticity. |
| Variance Function (varIdent, varPower) | Functions in nlme to model and correct for heteroscedastic residual variance structures. |

Protocol 1: Assessing Normality of Random Effects

Methodology

- Model Fitting: Fit your linear mixed model using `lmer()` from the `lme4` package (or `lme()` from `nlme`).

- Extract Random Effects: Retrieve the conditional modes (BLUPs) of the random intercepts for each subject.

- Visual Assessment:
  - Q-Q Plot: Plot the extracted random effects against a theoretical normal distribution.
  - Histogram with Density Overlay: Visualize the distribution shape.

- Formal Test: Apply the Shapiro-Wilk test.
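These steps can be sketched as follows. The example uses `lme()` from `nlme` (the alternative named in the first step) with the `Orthodont` data that ship with that package. The dataset is a stand-in for illustration, and the numbers it yields are unrelated to the example output in Table 1.

```r
library(nlme)  # lme() is used here; an lmer() fit from lme4 works analogously

# Orthodont: dental distance measured at four ages in 27 children
fit <- lme(distance ~ age, random = ~ 1 | Subject, data = Orthodont)

# Conditional modes (BLUPs) of the random intercepts, one per subject
blups <- ranef(fit)[["(Intercept)"]]

# Visual assessment: Q-Q plot and histogram with a normal density overlay
op <- par(mfrow = c(1, 2))
qqnorm(blups, main = "Q-Q plot of random intercepts")
qqline(blups)
hist(blups, freq = FALSE, main = "Random intercepts", xlab = "BLUP")
curve(dnorm(x, mean = mean(blups), sd = sd(blups)), add = TRUE, lwd = 2)
par(op)

# Formal test; low power with few subjects, so read it alongside the plots
sw <- shapiro.test(blups)
sw
```

For an `lmer()` fit, the equivalent extraction would be `ranef(fit)$Subject[["(Intercept)"]]`.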

Data Presentation: Example Output for Normality Assessment

Table 1: Summary statistics and Shapiro-Wilk test results for extracted random intercepts (n=50 subjects).

| Metric | Value |

|---|---|

| Mean of BLUPs | -0.08 |

| Standard Deviation | 4.21 |

| Skewness | 0.15 |

| Kurtosis | -0.22 |

| Shapiro-Wilk Statistic (W) | 0.985 |

| p-value | 0.742 |

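Interpretation: With p = 0.742 (> 0.05), the Shapiro-Wilk test does not reject the null hypothesis of normality. Together with visual inspection (not shown), this is consistent with the normality assumption, although a non-significant test does not prove normality.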

Protocol 2: Assessing Heteroscedasticity of Residuals

Methodology

- Visual Assessment (Primary):
  - Residuals vs. Fitted Plot: Plot the Pearson or standardized residuals against the model-fitted values.

- Using `DHARMa` for Robust Diagnostics: The `plot()` function outputs a panel including a Q-Q plot and a residuals-vs-predicted plot with a conditional variance test.

- Formal Test via `DHARMa`:
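A hedged sketch of these checks follows, using an `lmer()` fit to the `sleepstudy` data from `lme4` as a placeholder model. The dataset, the number of simulations, and the object names are assumptions; results will not match the example values in Table 2.

```r
library(lme4)
library(DHARMa)

fit <- lmer(Reaction ~ Days + (1 | Subject), data = sleepstudy)

# Classical check: Pearson residuals against fitted values
plot(fitted(fit), residuals(fit, type = "pearson"),
     xlab = "Fitted values", ylab = "Pearson residuals")
abline(h = 0, lty = 2)

# Simulation-based scaled residuals (uniform on [0, 1] under a correct model)
set.seed(123)
sim <- simulateResiduals(fittedModel = fit, n = 500)
plot(sim)  # Q-Q plot plus residuals vs. predicted values

# Formal tests on the scaled residuals
ks   <- testUniformity(sim, plot = FALSE)  # Kolmogorov-Smirnov test
disp <- testDispersion(sim, plot = FALSE)  # over/under-dispersion
out  <- testOutliers(sim, plot = FALSE)    # excess of extreme residuals

c(uniformity = ks$p.value, dispersion = disp$p.value, outliers = out$p.value)
```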

Data Presentation: Example Output for Heteroscedasticity Assessment

Table 2: Results from DHARMa simulation-based residual diagnostics.

| Test | Statistic | p-value | Interpretation |

|---|---|---|---|

| Kolmogorov-Smirnov | 0.032 | 0.791 | Residuals consistent with uniform distribution. |

| Deviation from Uniformity | 0.023 | 0.873 | No significant deviation detected. |

| Dispersion Test | 1.05 | 0.612 | No significant over/under-dispersion. |

| Outliers Test | 0.00 | 1.000 | No significant outliers detected. |

Protocol 3: Correcting for Heteroscedasticity in nlme

Methodology

If heteroscedasticity is detected, refit the model with a variance structure using the nlme package.

- Fit Model with `varIdent`: Allows different variances per level of a categorical factor (e.g., treatment group).

- Fit Model with `varPower`: Models variance as a power function of a continuous covariate or fitted values.

- Compare Models: Use AIC/BIC to select the best variance structure and confirm improved diagnostics.
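The three steps can be sketched with `nlme` as below. The data are simulated so that residual spread differs between treatment groups; group labels, effect sizes, and object names are assumptions. In this sketch `varPower` uses `Time` as the continuous covariate, which is one of the two options the protocol mentions.

```r
library(nlme)

set.seed(21)
n_id <- 40
het <- expand.grid(Time = 1:5, ID = factor(seq_len(n_id)))
het$Treatment <- factor(ifelse(as.integer(het$ID) <= n_id / 2,
                               "Placebo", "Drug"))
# Hypothetical data: residual SD is three times larger in the Drug group
res_sd <- ifelse(het$Treatment == "Drug", 3, 1)
het$Response <- 20 + 2 * (het$Treatment == "Drug") + 1.2 * het$Time +
  rnorm(n_id, sd = 2)[as.integer(het$ID)] + rnorm(nrow(het), sd = res_sd)

# Baseline: constant residual variance
m_base  <- lme(Response ~ Treatment * Time, random = ~ 1 | ID,
               data = het, method = "REML")
# varIdent: separate residual variance for each treatment level
m_ident <- update(m_base, weights = varIdent(form = ~ 1 | Treatment))
# varPower: residual variance as a power function of Time
m_power <- update(m_base, weights = varPower(form = ~ Time))

# Same fixed effects in all fits, so REML-based AIC/BIC are comparable
anova(m_base, m_ident, m_power)
```

Because the simulated heteroscedasticity is group-driven, the `varIdent` model should be preferred here. With real data, re-check the residual plots after refitting.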

Workflow for Assessing Random Effects Assumptions

Step-by-Step Implementation: Mixed-Effects Models for Variance Partitioning in R

Within the broader thesis on R tutorials for among-individual variation analysis research, Linear Mixed Models (LMMs) are a cornerstone methodology. They are essential for analyzing data with inherent hierarchical or grouped structures—common in biological, pharmacological, and clinical research—where measurements are clustered within individuals, plants, batches, or sites. Unlike standard linear regression, LMMs incorporate both fixed effects (predictors of primary interest) and random effects (sources of random variation, such as individual-specific deviations), allowing for valid inference and generalization when data are correlated.

This protocol provides a detailed, step-by-step guide to implementing your first LMM using the lmer() function from the lme4 package in R, framed explicitly for research investigating among-individual variation.

Model Formulation

A basic LMM can be expressed as:

Y = Xβ + Zb + ε

Where:

- Y is the vector of observed responses.

- X is the design matrix for fixed effects.

- β is the vector of fixed-effect coefficients (parameters to estimate).

- Z is the design matrix for random effects.

- b is the vector of random-effect coefficients (assumed to be normally distributed, e.g., b ~ N(0, Ψ)).

- ε is the vector of residuals (ε ~ N(0, σ²I)).

The power of the model lies in partitioning variance into components attributable to the random effects (among-individual variance) and the residual variance (within-individual variance).

Diagram Title: Logical Flow of Linear Mixed Model (LMM) Analysis

Experimental Protocol: Implementing an LMM with lmer()

Prerequisites and Software Setup

Example Research Scenario

Consider a pharmacological study measuring the drug response level of 50 patients over 4 time points (e.g., Weeks 0, 2, 4, 6). Each patient receives either Drug A or Drug B (a fixed, between-subject factor). The research question focuses on the among-individual variation in baseline response and in the rate of change over time.

Simulated Dataset Structure:
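A dataset with this structure could be simulated in base R along the following lines. The column names (`PatientID`, `Drug`, `Time`, `Response`) follow the scenario above; the effect sizes, standard deviations, and the independence of intercept and slope deviations are assumptions chosen purely for illustration.

```r
set.seed(2024)

n_patients <- 50
weeks      <- c(0, 2, 4, 6)

# One row per patient: drug assignment plus patient-specific deviations.
patients <- data.frame(
  PatientID = factor(sprintf("P%02d", seq_len(n_patients))),
  Drug      = factor(rep(c("A", "B"), each = n_patients / 2)),
  b0        = rnorm(n_patients, mean = 0, sd = 3),  # deviation in baseline
  b1        = rnorm(n_patients, mean = 0, sd = 1)   # deviation in slope
)

# Expand to long format (cross join): one row per patient per time point.
sim <- merge(patients, data.frame(Time = weeks))
sim <- sim[order(sim$PatientID, sim$Time), ]

# Population trend + patient-specific deviations + within-patient noise.
sim$Response <- 10 + 2.5 * (sim$Drug == "B") + 0.8 * sim$Time +
  sim$b0 + sim$b1 * sim$Time + rnorm(nrow(sim), sd = 1.5)

# Keep only the columns an analyst would observe.
sim <- sim[, c("PatientID", "Drug", "Time", "Response")]
rownames(sim) <- NULL

str(sim)
head(sim, 8)
```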

Step-by-Step Modeling Protocol

Step 1: Model Specification and Fitting

Identify your outcome variable, fixed effects, and random effects structure. The random effects structure is defined by (1 + Time | PatientID), indicating random intercepts (1) and random slopes for Time (Time) that are correlated, grouped by PatientID.

Step 2: Model Summary and Interpretation

The critical output includes:

- Fixed effects estimates: The coefficients (β) for `DrugB`, `Time`, and their interaction (`DrugB:Time`). These are population-level averages.

- Random effects variances: The `(Intercept)` variance represents among-individual variation in baseline response. The `Time` variance represents among-individual variation in the rate of change over time. Their correlation indicates whether individuals with higher baselines change faster or slower.

- Residual variance: The remaining within-individual, unexplained variation.

Step 3: Hypothesis Testing for Fixed Effects

Use anova() from the lmerTest package to get p-values based on Satterthwaite's approximation for degrees of freedom.

Step 4: Variance Component Analysis

Extract and interpret the random effects.

A high ICC for the intercept indicates substantial among-individual variation relative to within-individual variation.
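As a rough sketch of Steps 1 and 4, the block below fits the random intercept and slope model to compact stand-in data and pulls out the variance components. The data frame `d` and every simulated value are assumptions; the ICC shown is the baseline (Time = 0) version, where only the intercept variance contributes to the among-individual part.

```r
library(lme4)

set.seed(1)
# Compact stand-in data: 50 patients x 4 time points (all values assumed).
d <- expand.grid(Time = c(0, 2, 4, 6), PatientID = factor(1:50))
id <- as.integer(d$PatientID)
d$Drug <- factor(ifelse(id <= 25, "A", "B"))
b0 <- rnorm(50, sd = 3)[id]   # patient-specific intercept deviations
b1 <- rnorm(50, sd = 1)[id]   # patient-specific slope deviations
d$Response <- 10 + 2.5 * (d$Drug == "B") + (0.8 + b1) * d$Time + b0 +
  rnorm(nrow(d), sd = 1.5)

# Correlated random intercepts and slopes for Time, grouped by patient.
fit <- lmer(Response ~ Drug * Time + (1 + Time | PatientID), data = d)

# 2 x 2 covariance matrix of the random effects for PatientID.
Sigma     <- VarCorr(fit)$PatientID
var_int   <- Sigma["(Intercept)", "(Intercept)"]
var_slope <- Sigma["Time", "Time"]
corr_is   <- attr(Sigma, "correlation")["(Intercept)", "Time"]
var_res   <- sigma(fit)^2

# ICC at Time = 0: among-individual share of the variance at baseline.
icc_baseline <- var_int / (var_int + var_res)

print(round(c(var_int = var_int, var_slope = var_slope,
              corr = corr_is, var_res = var_res, icc = icc_baseline), 3))
```

With random slopes the among-individual variance changes with `Time`, so an ICC computed this way describes baseline only; `performance::icc()` offers an alternative that averages over the observed predictor values.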

Step 5: Model Diagnostics

Validate model assumptions.

Diagram Title: LMM Analysis Workflow from Data to Report

Table 1: Fixed Effects Estimates from Example LMM

| Term | Estimate (β) | Standard Error | Degrees of Freedom | t-value | p-value |

|---|---|---|---|---|---|

| (Intercept) | 10.24 | 0.53 | 48.5 | 19.32 | <0.001 |

| DrugB | 2.42 | 0.75 | 48.0 | 3.23 | 0.002 |

| Time | 0.81 | 0.08 | 49.0 | 10.12 | <0.001 |

| DrugB:Time | 0.05 | 0.11 | 49.0 | 0.45 | 0.652 |

Table 2: Variance Components (Random Effects & Residual)

| Variance Component | Standard Deviation | Variance (σ²) | Interpretation |

|---|---|---|---|

| PatientID (Intercept) | 2.95 | 8.70 | Among-individual variance in baseline response. |

| PatientID (Time) | 0.98 | 0.96 | Among-individual variance in response to Time. |

| Corr(Intercept, Time) | -0.32 | - | Moderate negative correlation between baseline and slope. |

| Residual | 1.49 | 2.22 | Within-individual, unexplained variance. |

| Total Variance | - | 11.88 | Simple sum of the three variance components above (8.70 + 0.96 + 2.22). |

| ICC (Intercept) | - | 0.73 | 8.70 / 11.88: about 73% of the summed variance is attributable to among-individual differences in baseline. |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Research Reagent Solutions for LMM Analysis

| Item/Category | Primary Function in LMM Analysis | Example/Notes |

|---|---|---|

| `lme4` R Package | Core engine for fitting LMMs using maximum likelihood (ML) or restricted ML (REML) estimation. | Provides the `lmer()` function. The gold standard for flexible LMM specification. |

| `lmerTest` R Package | Provides p-values for fixed effects using Satterthwaite or Kenward-Roger approximations for degrees of freedom. | Essential for null hypothesis testing in a frequentist framework. |

| `performance` R Package | Suite of functions for model diagnostics, comparison, and calculation of performance metrics. | Used for calculating ICC, R², checking convergence, and assumption checks. |

| `ggplot2` R Package | Creates publication-quality diagnostic and results visualization plots. | Critical for exploring random effects distributions and residual plots. |

| Simulated Datasets | Tool for understanding model behavior, testing code, and conducting power analysis before real data collection. | Generated using `rnorm()`, `simulate()`, or packages like `faux`. |

| High-Performance Computing (HPC) Cluster | Enables fitting of complex models with large datasets or high-dimensional random effects. | Necessary for genomic or large-scale longitudinal studies. |

| Model Formula Cheatsheet | Quick reference for correct `lmer()` formula syntax for different experimental designs. | E.g., `(1 | Group)` for random intercepts; `(1 + Time | Subject)` for random intercepts & slopes. |

Within the broader thesis on R tutorials for among-individual variation analysis, understanding random effects specification is fundamental. Mixed-effects models (hierarchical models) allow researchers to partition variance into within-individual and among-individual components. This protocol details the syntax and best practices for specifying random intercepts and slopes, which are critical for analyzing repeated measures data common in longitudinal clinical studies, behavioral ecology, and pharmacology.

Core Concepts & Model Specification

A linear mixed model with random intercepts and slopes can be expressed as:

- Level 1 (Within-individual): \( y_{ij} = \beta_{0j} + \beta_{1j}X_{ij} + \epsilon_{ij} \)

- Level 2 (Among-individual): \( \beta_{0j} = \gamma_{00} + u_{0j} \), \( \beta_{1j} = \gamma_{10} + u_{1j} \)

- Combined: \( y_{ij} = \gamma_{00} + \gamma_{10}X_{ij} + u_{0j} + u_{1j}X_{ij} + \epsilon_{ij} \)

Where:

- \( y_{ij} \) is the outcome for individual j at time i.

- \( \gamma_{00}, \gamma_{10} \) are fixed intercept and slope (population averages).

- \( u_{0j}, u_{1j} \) are the random deviations for individual j from the population intercept and slope.

- \( \epsilon_{ij} \) is the within-individual residual error.

The random effects are assumed to follow a multivariate normal distribution: \( \mathbf{u}_j \sim MVN(\mathbf{0}, \mathbf{\Sigma}) \). The structure of the variance-covariance matrix \( \mathbf{\Sigma} \) is central to model specification.
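For the two-term case above, the covariance matrix can be written out explicitly. The symbols \( \sigma^2_{u_0} \), \( \sigma^2_{u_1} \), \( \sigma_{u_0 u_1} \), \( \rho_{u_0 u_1} \), and \( \sigma^2_{\epsilon} \) are notation introduced here for illustration; they do not appear elsewhere in this tutorial.

```latex
\mathbf{u}_j =
\begin{pmatrix} u_{0j} \\ u_{1j} \end{pmatrix},
\qquad
\mathbf{\Sigma} =
\begin{pmatrix}
\sigma^2_{u_0} & \sigma_{u_0 u_1} \\
\sigma_{u_0 u_1} & \sigma^2_{u_1}
\end{pmatrix},
\qquad
\sigma_{u_0 u_1} = \rho_{u_0 u_1}\,\sigma_{u_0}\,\sigma_{u_1}

% Marginal variance of one observation, assuming u_j and epsilon_ij are independent:
\operatorname{Var}(y_{ij}) =
\sigma^2_{u_0} + 2X_{ij}\,\sigma_{u_0 u_1} + X_{ij}^2\,\sigma^2_{u_1} + \sigma^2_{\epsilon}
```

The second expression follows from the combined equation and shows why, once random slopes are included, the among-individual share of the variance depends on the value of \( X_{ij} \). Setting \( \sigma_{u_0 u_1} = 0 \) gives the uncorrelated structure, and dropping \( u_{1j} \) altogether gives the random-intercept-only model.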

Comparative Syntax Across R Packages

Table 1: Syntax for random intercepts and slopes in popular R packages.

| Package (lme4, nlme, glmmTMB) | Model Formula (Example: `y ~ time + (1 + time | subject)`) | Key Arguments & Notes |

|---|---|---|

| lme4 (`lmer()`) | `lmer(y ~ time + (1 + time | subject), data = df)` | Default optimizer: nloptwrap. Use `control = lmerControl(...)` for tuning. Most common for standard LM/GLMM. |

| nlme (`lme()`) | `lme(y ~ time, random = ~ 1 + time | subject, data = df)` | Requires explicit `random` argument. Offers more flexibility in correlation (`cor*`) and variance (`var*`) structures for the residuals. |

| glmmTMB (`glmmTMB()`) | `glmmTMB(y ~ time + (1 + time | subject), data = df)` | Syntax similar to lme4. Handles zero-inflation and a wide range of dispersion families. Uses `control = glmmTMBControl(...)`. |

Protocol: Implementing a Random Intercept & Slope Model

Experimental Workflow for Model Fitting

Title: Workflow for Fitting a Mixed Model with Random Effects

Detailed Protocol Steps

Step 1: Data Preparation & Exploratory Analysis

- Objective: Ensure data is in long format (one row per observation). Visually assess between- and within-subject variation.

- Protocol:

  - Load data (e.g., `df <- read.csv("longitudinal_data.csv")`).

  - Use `library(ggplot2)` to create spaghetti plots: `ggplot(df, aes(x=time, y=outcome, group=subject, color=subject)) + geom_line(alpha=0.6) + geom_smooth(aes(group=1), method='lm', se=FALSE, color='black')`.

  - Check for missing data and outliers.

Step 2: Model Specification

- Objective: Define the fixed and random effects structure based on the research hypothesis.

- Protocol:

  - Random Intercept Only: Assumes individuals differ in baseline level but have identical responses to predictors. Syntax: `(1 | subject)`.

  - Random Intercept & Slope (Uncorrelated): Assumes individuals differ in baseline and in their response to `time`, but these deviations are independent. Syntax: `(1 | subject) + (0 + time | subject)` or `(1 | subject) + (time - 1 | subject)`.

  - Random Intercept & Slope (Correlated): The default and most common. Allows correlation between the individual's baseline deviation and their slope deviation. Syntax: `(1 + time | subject)`.

Step 3: Model Fitting with lme4

- Objective: Estimate model parameters.

- Protocol:

  - `library(lme4)`

  - `model_full <- lmer(outcome ~ treatment * time + (1 + time | subject), data = df)`

  - Best Practice: Always center (and often scale) continuous predictors (`scale()`) to improve convergence and interpretability of the intercept.

Step 4: Convergence Checking

- Objective: Verify the model has found a reliable solution.

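- Protocol:

  - Check warnings: lme4 prints convergence warnings when the model is fitted; the stored messages can be retrieved afterwards with `model_full@optinfo$conv$lme4$messages`.

  - Examine convergence codes: `model_full@optinfo$conv$lme4` (an empty list means no problems were flagged). Check for a singular fit with `isSingular(model_full)`.

  - Troubleshooting: If convergence fails: a) Re-scale predictors. b) Try a different optimizer, e.g. `control = lmerControl(optimizer = "bobyqa")`, or compare all available optimizers with `allFit(model_full)`. Note that `calc.derivs = FALSE` only skips the post-fit derivative check and does not change the fit itself. c) Simplify the random effects structure.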
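A minimal sketch of these checks, using the `sleepstudy` data that ships with lme4 so that the block is self-contained. The object names `fit` and `fit_bobyqa` are placeholders rather than names from the protocol.

```r
library(lme4)

# sleepstudy: reaction time over days of sleep restriction, 18 subjects.
fit <- lmer(Reaction ~ Days + (1 + Days | Subject), data = sleepstudy)

# Messages stored by lme4's post-fit convergence checks (NULL when none).
conv_msgs <- fit@optinfo$conv$lme4$messages
print(conv_msgs)

# Optimizer return code (0 indicates normal termination).
print(fit@optinfo$conv$opt)

# A singular fit (a variance estimated at 0 or a correlation at +/-1)
# suggests the random-effects structure is too complex for the data.
singular <- isSingular(fit)
print(singular)

# Refit with a different optimizer; closely matching estimates suggest
# that a warning, if any, was a false positive.
fit_bobyqa <- lmer(Reaction ~ Days + (1 + Days | Subject), data = sleepstudy,
                   control = lmerControl(optimizer = "bobyqa"))
max_diff <- max(abs(fixef(fit) - fixef(fit_bobyqa)))
print(max_diff)
```

When several optimizers agree to within a small tolerance, the estimates are usually trustworthy even if one of them raised a warning; `allFit()` automates this comparison across all installed optimizers.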

Step 5: Diagnose Random Effects

- Objective: Validate model assumptions regarding the random effects.

- Protocol:

  - Normality: `qqnorm(ranef(model_full)$subject[,1])` and `qqnorm(ranef(model_full)$subject[,2])`.

  - Homoscedasticity: Plot residuals vs. fitted values: `plot(fitted(model_full), residuals(model_full))`.

  - Extract and visualize the variance-covariance matrix of the random effects: `VarCorr(model_full)`.

Step 6: Model Comparison

- Objective: Justify the chosen random effects structure using information criteria.

- Protocol:

  - Fit a simpler model (e.g., random intercept only): `model_ri <- lmer(outcome ~ treatment * time + (1 | subject), data = df)`.

  - Compare models with `anova(model_ri, model_full)`. A lower AIC/BIC or a significant likelihood ratio test (LRT) justifies the more complex model. By default `anova()` refits both models with ML; because the two models differ only in their random effects, `refit = FALSE` keeps the REML fits for the comparison.
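The comparison can be sketched on lme4's built-in `sleepstudy` data; the model names `m_ri` and `m_rs` are placeholders standing in for `model_ri` and `model_full`.

```r
library(lme4)

# Random intercept only vs. correlated random intercept and slope.
m_ri <- lmer(Reaction ~ Days + (1 | Subject), data = sleepstudy)
m_rs <- lmer(Reaction ~ Days + (1 + Days | Subject), data = sleepstudy)

# Both models share the same fixed effects, so the REML fits can be
# compared directly instead of being refitted with ML.
cmp <- anova(m_ri, m_rs, refit = FALSE)
print(cmp)

# p-value of the likelihood ratio test (second row = more complex model).
p_lrt <- cmp[["Pr(>Chisq)"]][2]
print(p_lrt)
```

Because a variance cannot be negative, the null hypothesis sits on the boundary of the parameter space and this LRT p-value tends to be conservative, so a result that is only marginally non-significant should not be read as strong evidence against the random slope.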

Step 7: Interpretation & Reporting

- Objective: Communicate results effectively.

- Protocol:

  - Report fixed effects with `summary(model_full)`.

  - Report variance components: Use `as.data.frame(VarCorr(model_full))`.

  - Calculate the Intraclass Correlation Coefficient (ICC) for the intercept: \( ICC = \frac{\sigma^2_{subject}}{\sigma^2_{subject} + \sigma^2_{residual}} \).

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential software tools and packages for random effects modeling.

| Item/Package | Function/Application | Key Notes |

|---|---|---|

| R Statistical Software | Core computing environment for statistical modeling and graphics. | Foundation for all analyses. Use current version (≥4.3.0). |

| `lme4` Package (`lmer`, `glmer`) | Fits linear and generalized linear mixed-effects models. | Workhorse for standard models. Excellent for hierarchical and crossed designs. |

| `nlme` Package (`lme`, `gls`) | Fits linear and nonlinear mixed-effects models. | Provides more flexible covariance structures for residuals than lme4. |

| `glmmTMB` Package | Fits generalized linear mixed models with Template Model Builder. | Superior for complex dispersion, zero-inflated, and count models. |

| `performance` Package | Computes indices of model quality and goodness-of-fit (ICC, R², AIC, checks). | Essential for model diagnosis, comparison, and reporting. |

| `ggplot2` & `lattice` | Creates publication-quality visualizations of data and model predictions. | `lattice` is particularly useful for paneled spaghetti plots. |

| `emmeans` Package | Computes estimated marginal means (least-squares means) and contrasts. | Crucial for post-hoc testing and interpreting fixed effects from complex models. |

| `MuMIn` Package | Conducts model selection and averaging based on AICc. | Useful when multiple plausible models exist. |

Advanced Considerations & Visualizing Random Effects

Random Effects Correlation Structure

Title: Variance-Covariance Matrix for Random Intercept & Slope

- Start Simple: Begin with a random intercept model, then add slopes.

- Center Predictors: Always center (and often scale) continuous predictors involved in random slopes to reduce correlation between random intercept and slope and aid convergence.

- Maximal Structure: Fit the maximal random effects structure justified by the experimental design (Barr et al., 2013), but simplify if convergence fails or models are singular.

- Test Significance: Use likelihood ratio tests (`anova()`) to compare nested models, not p-values from `summary()` for random effects.

- Report Fully: Always report the estimated variances (and correlation) of the random effects, not just fixed effects.

Application Notes

This application note details a protocol for analyzing among-individual (i.e., between-subject) variation in longitudinal pharmacological response data using R. This is critical in drug development to identify responders vs. non-responders, understand variable kinetics, and personalize dosing regimens. The core statistical approach leverages linear mixed-effects models (LMMs) to partition variance into within-individual (residual) and among-individual components, providing quantifiable estimates of heterogeneity in drug response over time.

Key Quantitative Findings from Recent Literature

Table 1: Summary of Studies on Among-Individual Variation in Pharmacokinetic/Pharmacodynamic (PK/PD) Parameters

| Drug Class & Example | Primary PK/PD Parameter Measured | Reported Coefficient of Variation (CV%) Among Individuals | Key Source of Variation Identified | Reference Year |

|---|---|---|---|---|

| SSRI (Sertraline) | Steady-state Plasma Concentration | 45-60% | CYP2C19 Genetic Polymorphism | 2023 |

| Anticoagulant (Warfarin) | Maintenance Dose Required | 35-50% | VKORC1 & CYP2C9 Genotype | 2022 |

| Immunotherapy (Anti-PD1) | Progression-Free Survival Time | 40-55% | Tumor Mutational Burden & Microbiome | 2023 |

| Statin (Atorvastatin) | LDL-C Reduction (%) | 25-40% | SLCO1B1 Genotype & Compliance | 2024 |

| Analgesic (Oxycodone) | AUC (Area Under the Curve) | 30-50% | CYP3A4/5 and CYP2D6 Activity | 2023 |

Table 2: Variance Components from a Simulated LMM of Drug Response Over Time (Model: Response ~ Time + (1 + Time \| Subject_ID))

| Variance Component | Estimate | % of Total Variance | Interpretation |

|---|---|---|---|

| Among-Individual Intercept | 12.5 | 52% | Variance in baseline starting response. |

| Among-Individual Slope (Time) | 3.1 | 13% | Variance in response trajectory over time. |

| Residual (Within-Individual) | 8.4 | 35% | Unexplained, measurement, or visit-to-visit variance. |

| Total Variance | 24.0 | 100% | Sum of the three components above. |

Experimental Protocols

Protocol 1: Longitudinal PK/PD Study for Variance Component Analysis

Objective: To quantify among-individual variation in drug exposure (PK) and effect (PD) over a 4-week treatment period.

Materials: See "Research Reagent Solutions" below.

Methodology:

- Cohort Design: Enroll N=60 participants meeting inclusion/exclusion criteria. Obtain informed consent.

- Dosing: Administer fixed daily dose of the investigational drug. Use placebo arm if applicable.

- Sampling Schedule:

- PK: Collect plasma samples at pre-dose, and at 0.5, 1, 2, 4, 8, 12, and 24 hours post-dose on Day 1 and Day 28.

- PD: Measure relevant biomarker (e.g., enzyme activity, receptor occupancy) at pre-dose, Week 1, Week 2, Week 3, and Week 4.

- Covariates: Collect genomic DNA (for pharmacogenomic panel), baseline demographics, and liver/kidney function tests.

- Bioanalysis: Quantify drug concentration in plasma using validated LC-MS/MS. Measure biomarker using validated ELISA.

- Data Analysis in R:

  - Calculate primary PK parameters (AUC, Cmax) using non-compartmental analysis (`PKNCA` package).

  - For longitudinal PD biomarker data, fit a linear mixed-effects model with random intercepts and slopes per subject, e.g. `Response ~ Time + (1 + Time | Subject_ID)`, the model summarized in Table 2.
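The PD model in the last step might look like the following in `nlme`, whose `random = ~ 1 + Time | Subject_ID` is the counterpart of lme4's `(1 + Time | Subject_ID)`. The data frame `pd` is simulated here: the variance values are set to those listed in Table 2, while the fixed intercept and slope are arbitrary assumptions.

```r
library(nlme)

set.seed(7)
n_subj <- 60
pd <- expand.grid(Time = 0:4, Subject_ID = factor(seq_len(n_subj)))
id <- as.integer(pd$Subject_ID)

# Variances taken from Table 2: intercept 12.5, slope 3.1, residual 8.4.
b0 <- rnorm(n_subj, sd = sqrt(12.5))[id]
b1 <- rnorm(n_subj, sd = sqrt(3.1))[id]
pd$Response <- 50 + b0 + (-2 + b1) * pd$Time +
  rnorm(nrow(pd), sd = sqrt(8.4))

# Random intercept and slope for Time, grouped by subject.
fit_pd <- lme(Response ~ Time, random = ~ 1 + Time | Subject_ID,
              data = pd, method = "REML")

# nlme returns a character matrix; convert the entries of interest.
vc_pd      <- VarCorr(fit_pd)
var_int    <- as.numeric(vc_pd["(Intercept)", "Variance"])
var_slope  <- as.numeric(vc_pd["Time", "Variance"])
var_res    <- as.numeric(vc_pd["Residual", "Variance"])
pop_slope  <- unname(fixef(fit_pd)["Time"])

print(vc_pd)
print(round(c(var_int = var_int, var_slope = var_slope,
              var_res = var_res, pop_slope = pop_slope), 2))
```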

Protocol 2: In Vitro Hepatocyte Assay for Metabolic Phenotyping

Objective: To determine among-donor variation in intrinsic drug clearance using primary human hepatocytes.

Methodology:

- Hepatocyte Sourcing: Acquire cryopreserved primary human hepatocytes from ≥10 different donors.

- Thawing & Plating: Rapidly thaw cells and plate in collagen-coated 96-well plates at viable density.

- Dosing & Incubation: After 24h recovery, incubate hepatocytes with test compound (1µM) in serum-free medium. Include positive control (e.g., testosterone for CYP3A4).

- Time Course Sampling: Collect supernatant aliquots at 0, 15, 30, 60, 120 minutes.

- Analysis: Quantify parent compound depletion by LC-MS/MS.

- Data Analysis:

- Calculate in vitro intrinsic clearance (CLint) using the depletion half-life method for each donor.

- Compute CV% across donors to quantify among-individual variability.

- Correlate CLint with donor genotype for specific CYP enzymes.
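The data-analysis steps can be sketched in base R as below. Everything numeric is an assumption made for illustration: the incubation volume (`incub_ul`), the cell number per well (`cells_mill`), the simulated donor rate constants, and the assay noise. CLint is computed with the usual depletion relationship, rate constant times incubation volume divided by cell number.

```r
set.seed(11)
times_min  <- c(0, 15, 30, 60, 120)   # sampling times from the protocol
n_donors   <- 10
incub_ul   <- 100                     # incubation volume per well (uL), assumed
cells_mill <- 0.05                    # cells per well in units of 10^6, assumed

# Hypothetical donor-specific first-order depletion rate constants (1/min).
k_true <- rlnorm(n_donors, meanlog = log(0.01), sdlog = 0.4)

# Simulated percent parent compound remaining, with multiplicative noise.
dep <- do.call(rbind, lapply(seq_len(n_donors), function(d) {
  data.frame(
    donor         = d,
    time          = times_min,
    pct_remaining = 100 * exp(-k_true[d] * times_min) *
      exp(rnorm(length(times_min), sd = 0.05))
  )
}))

# Per-donor log-linear regression: the slope of log(% remaining) is -k.
k_hat <- sapply(split(dep, dep$donor), function(x) {
  -unname(coef(lm(log(pct_remaining) ~ time, data = x))["time"])
})

half_life <- log(2) / k_hat                 # depletion half-life (min)
clint     <- k_hat * incub_ul / cells_mill  # uL/min/10^6 cells

# Among-donor variability expressed as a coefficient of variation.
cv_pct <- 100 * sd(clint) / mean(clint)

print(round(data.frame(donor = seq_len(n_donors), half_life, clint), 2))
print(round(cv_pct, 1))
```

With real genotype data, a per-donor `clint` vector like this one could then be related to genotype using, for example, `lm(log(clint) ~ genotype)`.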

The Scientist's Toolkit

Table 3: Research Reagent Solutions for Individual Difference Studies

| Item/Category | Specific Example/Supplier | Function in Experiment |

|---|---|---|

| Primary Human Hepatocytes | Thermo Fisher Scientific (Gibco), BioIVT | Gold-standard in vitro model for assessing donor-to-donor variation in hepatic drug metabolism and toxicity. |

| Pharmacogenomic Panel | Illumina PharmacoFocus, Affymetrix Drug Metabolizing Enzyme & Transporter Panel | Targeted genotyping of key variants in genes like CYP2D6, CYP2C19, VKORC1, TPMT to explain PK/PD variability. |

| LC-MS/MS System | SCIEX Triple Quad, Agilent 6470 | High-sensitivity, specific quantification of drugs and metabolites in complex biological matrices (plasma, supernatant). |

| Multiplex Immunoassay | Luminex xMAP, Meso Scale Discovery (MSD) U-PLEX | Simultaneous measurement of multiple PD biomarkers (cytokines, phosphoproteins) from limited sample volumes. |

| R Packages for Mixed Models | `lme4`, `nlme` | Fit linear and nonlinear mixed-effects models to partition variance and model individual trajectories. |

| R Packages for PK/PD Analysis | `PKNCA`, `mrgsolve`, `nlmixr2` | Perform non-compartmental analysis, simulate PK using physiological models, and fit complex PK/PD models. |

| Bioanalyzer for DNA/RNA QC | Agilent 2100 Bioanalyzer | Assess quality and integrity of genomic DNA or RNA extracted for biomarker or transcriptomic analysis. |

| Cryopreserved Whole Blood/Plasma | Discovery Life Sciences, ZenBio | Matched biospecimens from diverse donors for in vitro assays correlating genetic makeup with ex vivo drug response. |

This application note is a component of a broader thesis on using R for among-individual variation analysis in biomedical research. It provides a practical protocol for quantifying and interpreting patient-specific variability in longitudinal biomarker data, a critical task in precision medicine and stratified drug development.

Table 1: Simulated Cohort Biomarker (e.g., Serum Protein X) Summary at Baseline

| Patient Stratification | N | Mean Concentration (pg/mL) | SD (pg/mL) | Coefficient of Variation (%) |

|---|---|---|---|---|

| Disease Subtype A | 50 | 125.6 | 28.3 | 22.5 |

| Disease Subtype B | 50 | 98.4 | 35.7 | 36.3 |

| Healthy Reference | 30 | 45.2 | 9.1 | 20.1 |

Table 2: Model Output for Trajectory Variance Components (Mixed-Effects Model)

| Variance Component | Estimate (pg²/mL²) | % of Total Variance | Interpretation |

|---|---|---|---|

| Among-Individuals (Intercept) | 415.2 | 78% | Baseline level variability between patients. |

| Among-Individuals (Slope) | 12.8 | 2% | Variability in rate of biomarker change over time. |

| Residual (Within-Individual) | 105.6 | 20% | Unexplained, measurement-level variability. |

Experimental Protocols

Protocol 3.1: Longitudinal Biomarker Sample Collection & Assay

Objective: To collect and process serial blood samples for biomarker quantification.

- Patient Scheduling: Enroll patients and schedule visits at T=0 (baseline), 4, 12, 24, and 52 weeks.

- Sample Collection: Draw 10 mL venous blood into serum separator tubes.

- Processing: Allow clotting for 30 min at RT. Centrifuge at 1500 × g for 15 min. Aliquot serum into 500 µL cryovials.

- Storage: Store at -80°C until batch analysis. Avoid freeze-thaw cycles.

- Quantification: Use a validated, high-sensitivity multiplex immunoassay (e.g., Luminex or MSD U-PLEX). Run all samples from a single patient on the same plate to reduce inter-plate variability. Include triplicate technical replicates and standard curves.

Protocol 3.2: Data Preprocessing & Quality Control in R

Objective: To prepare raw assay data for trajectory modeling.

- Data Import: Load raw fluorescence or OD data, standard curves, and patient metadata into R using `readxl` or `data.table`.

- Curve Fitting: Fit a 5-parameter logistic (5PL) model to standard curves using the `drc` package to convert signals to concentrations.

- QC Filtering: Exclude replicates with CV > 20%. Impute missing values using last observation carried forward (LOCF) only if <10% of data are missing for a patient.

- Normalization: Apply batch correction using the `sva` package if a plate effect is detected.
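The QC filtering step could be implemented along these lines in base R. The data frame `qc`, its column names, and the concentrations are hypothetical; the last sample is made deliberately noisy so that the 20% threshold has something to exclude.

```r
set.seed(3)
# Hypothetical triplicate concentrations (pg/mL) for six samples.
qc <- data.frame(
  sample_id = rep(sprintf("S%02d", 1:6), each = 3),
  conc      = c(rnorm(15, mean = 100, sd = 5),  # S01-S05: tight replicates
                100, 160, 60)                   # S06: deliberately noisy
)

# Coefficient of variation (%) across the technical replicates of each sample.
cv_by_sample <- aggregate(conc ~ sample_id, data = qc,
                          FUN = function(x) 100 * sd(x) / mean(x))
names(cv_by_sample)[2] <- "cv_pct"
print(cv_by_sample)

# Keep only samples whose replicate CV is at or below 20%.
keep_ids <- cv_by_sample$sample_id[cv_by_sample$cv_pct <= 20]
qc_pass  <- qc[qc$sample_id %in% keep_ids, ]

# Collapse the passing replicates to one mean concentration per sample.
sample_means <- aggregate(conc ~ sample_id, data = qc_pass, FUN = mean)
print(sample_means)
```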

Protocol 3.3: Fitting a Linear Mixed-Effects Model for Trajectories

Objective: To quantify among-individual variance in biomarker slopes and intercepts.

- Model Specification: Use the `lme4` package. A basic model: `lmer(Biomarker ~ Time + (Time | Patient_ID), data = df)`.

- Model Fitting: Check convergence. Scale `Time` variable if needed.

- Variance Extraction: Use `VarCorr(model)` to extract the covariance components for intercept and slope.

- Visualization: Extract random effects with `ranef(model)` and plot individual predicted trajectories using `ggplot2`.

Mandatory Visualizations

Diagram Title: Workflow for Biomarker Variability Analysis

Diagram Title: Biological Pathway & Variability Sources for a Serum Biomarker

The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function & Application |

|---|---|

| MSD U-PLEX Assay Kits | Multiplex electrochemiluminescence immunoassay plates for simultaneous, high-sensitivity quantification of up to 10 biomarkers from a single 50 µL serum sample. |

| Luminex xMAP Magnetic Beads | Bead-based multiplex immunoassay system for medium-to-high throughput screening of biomarker panels. |

| Roche cOmplete Protease Inhibitor Cocktail | Added during serum processing to prevent proteolytic degradation of protein biomarkers. |

| R Package `lme4` | Primary tool for fitting linear and generalized linear mixed-effects models to partition variance components. |

| R Package `ggplot2` | Creates publication-quality graphics for visualizing individual trajectories and model predictions. |

| R Package `shiny` | Used to build interactive web applications for clinical researchers to explore patient-specific trajectories. |

| Biorepository-grade Cryovials | For secure long-term storage of serum aliquots at -80°C with barcoding for sample tracking. |

| Hamilton STARlet Liquid Handler | Automates sample and reagent pipetting for immunoassays, significantly reducing technical variability. |

Within the broader thesis on R tutorials for among-individual variation analysis, understanding variance components is paramount. Mixed-effects models partition observed variability into distinct sources (e.g., individual-level random effects, residual error). The VarCorr() and sigma() functions in R are essential tools for extracting these components, enabling researchers to quantify biological heterogeneity, assay repeatability, and other key parameters in pharmacological and biomedical studies.

Core Functions: Protocol & Application Notes

Function Syntax and Output

VarCorr() Function Protocol

- Package: `lme4` or `nlme` (must be loaded).

- Syntax: `VarCorr(fitted_model_object)`

- Input: A fitted model object from `lmer()` (lme4) or `lme()` (nlme).

- Primary Output: Standard deviations and correlations for the random effects. Variances are the squared standard deviations, and each covariance is the correlation multiplied by the two corresponding standard deviations.

sigma() Function Protocol

- Package: Base R `stats` generic (methods are available for models from `lme4`, `nlme`, `glm`).

- Syntax: `sigma(fitted_model_object)`

- Input: A fitted model object.

- Primary Output: The residual standard deviation (sigma). The residual variance is `sigma(model)^2`.

Detailed Experimental Workflow

Protocol: Extracting Variance Components from a Longitudinal Pharmacokinetic Study

- Objective: Quantify inter-individual variation in drug clearance and residual unexplained variability.

- Model: `lmer(plasma_concentration ~ time + dose + (1 | subject_id), data = pk_data)`

- Step-by-Step:

  - Fit the linear mixed model using `lmer()`.

  - Execute `vc <- VarCorr(model)` to store variance components.

  - Execute `print(vc, comp = c("Variance", "Std.Dev."))` to view standard deviations and variances (the default print method shows standard deviations only).

  - Execute `sigma_est <- sigma(model)` to obtain residual SD.

  - Calculate Intra-class Correlation Coefficient (ICC): `ICC = (Subject Variance) / (Subject Variance + Residual Variance)`.
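The workflow above could run as follows. The `pk_data` frame is simulated, since no dataset accompanies the protocol: the sampling times, dose levels, coefficients, and standard deviations are all assumptions.

```r
library(lme4)

set.seed(99)
n_subj  <- 40
pk_data <- expand.grid(time = c(1, 2, 4, 8),
                       subject_id = factor(seq_len(n_subj)))
id <- as.integer(pk_data$subject_id)
pk_data$dose <- rep(c(10, 20), length.out = n_subj)[id]  # one dose per subject
pk_data$plasma_concentration <- 30 - 1.5 * pk_data$time + 0.4 * pk_data$dose +
  rnorm(n_subj, sd = 5)[id] +        # among-individual deviations (SD = 5)
  rnorm(nrow(pk_data), sd = 2)       # within-individual residual (SD = 2)

# Step 1: fit the random-intercept model.
model <- lmer(plasma_concentration ~ time + dose + (1 | subject_id),
              data = pk_data)

# Steps 2-3: store and print variances alongside standard deviations.
vc <- VarCorr(model)
print(vc, comp = c("Variance", "Std.Dev."))

# Step 4: residual standard deviation.
sigma_est <- sigma(model)

# Step 5: ICC from the subject and residual variances.
subject_var  <- as.numeric(vc$subject_id["(Intercept)", "(Intercept)"])
residual_var <- sigma_est^2
icc <- subject_var / (subject_var + residual_var)
print(round(c(subject_var = subject_var, residual_var = residual_var,
              icc = icc), 3))
```

The simulated SDs of 5 and 2 imply a true ICC of 25 / 29, or about 0.86, so the estimate printed at the end should land in that neighbourhood.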

Table 1: Example Output from VarCorr() and sigma() for a Hypothetical Drug Response Model

| Variance Component | Symbol | Standard Deviation (SD) | Variance (SD²) | Interpretation |

|---|---|---|---|---|

| Intercept (Individual) | σ_α | 5.12 units | 26.21 units² | Among-individual variation in baseline response. |

| Residual (Within) | σ_ε | 2.05 units | 4.20 units² | Unexplained, within-individual measurement variation. |

| Calculated ICC | ρ | - | 0.86 | 86% of total variance is due to stable individual differences. |

Table 2: Comparison of VarCorr() Output Across Common R Packages

| Package | Function | Model Fitting Function | Key Output Format | Accessing Subject Variance |

|---|---|---|---|---|

| `lme4` | `VarCorr()` | `lmer()`, `glmer()` | `VarCorr.merMod` list (one covariance matrix per grouping factor) | `as.data.frame(VarCorr(model))$vcov[1]` |

| `nlme` | `VarCorr()` | `lme()` | Character matrix (printed as a table) | `as.numeric(VarCorr(model)[1, 1])` |

| `glmmTMB` | `VarCorr()` | `glmmTMB()` | List | `VarCorr(model)$cond$subject_id` |

Visualizing the Workflow and Variance Partitioning

Variance Extraction Workflow

Partitioning of Total Variance

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Example in R | Function in Variance Analysis |

|---|---|---|

| Core Modeling Package | `lme4` (v1.1-35+) | Provides `lmer()` for fitting linear mixed models and the primary `VarCorr()` function. |

| Alternative/Extended Package | `nlme` (v3.1-164+) | Provides `lme()` and a different `VarCorr()` implementation; useful for correlated structures. |

| Variance Extractor Function | `VarCorr()` | Extracts standard deviations, variances, and correlations of random effects from fitted models. |

| Residual SD Function | `sigma()` | Extracts the residual standard deviation (sigma) from fitted models. |

| Result Tidying Tool | `broom.mixed::tidy(model, effects = "ran_pars")` | Returns the random-effect standard deviations and correlations as a tidy data frame for reporting and plotting. |

| ICC Calculation Package | `performance::icc()` | Automatically calculates Intra-class Correlation Coefficient from model objects. |

| Visualization Suite | `ggplot2` | Used to create plots (e.g., caterpillar plots of random effects) based on extracted variances. |

Generalized Linear Mixed Models (GLMMs) extend GLMs by incorporating both fixed effects (population-level) and random effects (group-level, e.g., patient, site) to handle correlated, non-normal data common in biomedical research.

Table 1: Common Non-Normal Data Distributions and Corresponding GLMM Link Functions

| Data Type / Outcome | Example in Biomedical Research | Recommended Distribution | Canonical Link Function | Typical Variance Function |

|---|---|---|---|---|

| Binary | Disease Yes/No, Survival Status | Binomial | Logit | µ(1-µ) |

| Count | Number of Tumor Lesions, Cell Counts | Poisson | Log | µ |

| Overdispersed Count | Pathogen Load (variance > mean) | Negative Binomial | Log | µ + µ²/k |

| Proportional | Tumor Volume Reduction, % Inhibition | Beta | Logit | µ(1-µ)/(1+φ) |

| Ordinal | Disease Severity Stage (I-IV) | Cumulative Binomial | Logit, Probit | - |

Table 2: Software Packages for GLMM Implementation in R

| Package | Primary Function | Key Features for Among-Individual Variation | Latest Version (as of 2024) |

|---|---|---|---|

| `lme4` | `glmer()` | Flexible random effects structures; well-documented. | 1.1-35.1 |

| `glmmTMB` | `glmmTMB()` | Handles zero-inflation, negative binomial, complex random effects. | 1.1.9 |

| `MASS` | `glmmPQL()` | Penalized quasi-likelihood; useful for non-canonical links. | 7.3-60.2 |

| `brms` | `brm()` | Bayesian framework; robust for complex hierarchical models. | 2.20.4 |

| `GLMMadaptive` | `mixed_model()` | Handles non-standard distributions and random effects. | 0.9-4 |

Experimental Protocols

Protocol 2.1: Analyzing Repeated Binary Outcomes (Longitudinal Clinical Trial)

Objective: Model the binary response (response/no response) of patients over time, accounting for repeated measures within patients and variability across trial sites.

Materials: R software (v4.3.0+), packages lme4, emmeans, ggplot2.

Procedure:

- Data Preparation: Structure data in long format with columns: `PatientID`, `SiteID`, `Time` (numeric or factor), `Treatment` (factor), `Response` (0/1), and covariates (e.g., `Age`, `BaselineScore`).

- Model Specification: Use a binomial GLMM with a logit link. The primary model includes fixed effects for `Time`, `Treatment`, their interaction, and `Age`. Include random intercepts for `PatientID` (to account for repeated measures) and `SiteID` (to account for variability across clinics).

- Model Checking:

  - Overdispersion: Check for binomial models using `DHARMa` package simulation residuals.

  - Convergence: Verify no warnings; check gradient and Hessian.

  - Random Effects: Use `ranef(model_binomial)` to inspect deviations.

- Inference & Interpretation:

  - Use `summary(model_binomial)` for fixed effects coefficients (log-odds).

  - Calculate odds ratios via `exp(fixef(model_binomial))`.

  - Obtain predicted marginal means for significant interactions using `emmeans`.

- Visualization: Plot predicted probabilities of response over `Time` for each `Treatment` group, with confidence intervals.
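A minimal sketch of the model specification and odds-ratio steps of this procedure with `lme4::glmer()`. The trial data are simulated: the number of sites and patients, the effect sizes, and the centered covariate `Age_c` are assumptions, and the `bobyqa` optimizer is simply one common choice for GLMMs rather than something the protocol prescribes.

```r
library(lme4)

set.seed(123)
n_site     <- 8
n_per_site <- 15
n_pat      <- n_site * n_per_site

# One row per patient; PatientID is unique across sites, so
# (1 | SiteID) + (1 | PatientID) encodes patients nested within sites.
pat <- data.frame(
  PatientID = factor(seq_len(n_pat)),
  SiteID    = factor(rep(seq_len(n_site), each = n_per_site)),
  Treatment = factor(rep(c("Control", "Active"), length.out = n_pat),
                     levels = c("Control", "Active")),
  Age       = round(rnorm(n_pat, mean = 55, sd = 10))
)
pat$u_pat  <- rnorm(n_pat, sd = 1)                            # patient effect
pat$u_site <- rnorm(n_site, sd = 0.5)[as.integer(pat$SiteID)] # site effect

# Long format: four visits per patient (cross join).
trial <- merge(pat, data.frame(Time = 0:3))
trial$Age_c <- (trial$Age - 55) / 10   # centered, in decades

# Linear predictor on the log-odds scale, then Bernoulli outcomes.
eta <- -1 + 0.3 * trial$Time +
  0.5 * (trial$Treatment == "Active") * trial$Time +
  0.2 * trial$Age_c + trial$u_pat + trial$u_site
trial$Response <- rbinom(nrow(trial), size = 1, prob = plogis(eta))

model_binomial <- glmer(
  Response ~ Time * Treatment + Age_c + (1 | SiteID) + (1 | PatientID),
  data = trial, family = binomial(link = "logit"),
  control = glmerControl(optimizer = "bobyqa")
)

# Fixed effects are log-odds; exponentiate to obtain odds ratios.
odds_ratios <- exp(fixef(model_binomial))
print(round(odds_ratios, 2))
print(VarCorr(model_binomial))
```

With only eight sites the site-level variance is estimated imprecisely and may occasionally be reported as a singular fit; that message flags limited information about that component rather than a coding error.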

Protocol 2.2: Modeling Overdispersed Count Data (Tumor Lesion Counts)

Objective: Analyze the count of new tumor lesions post-treatment, where variance exceeds the mean (overdispersion), with random effects for subject and lab technician.

Materials: R software, packages glmmTMB, DHARMa, performance.

Procedure:

- Data Preparation: Ensure data includes: `SubjectID`, `TechnicianID`, `Treatment`, `Week`, `LesionCount`, `Offset` (e.g., log(area scanned)).

- Model Specification: Fit a Negative Binomial GLMM (type 2) to handle overdispersion. Include fixed effects for `Treatment`, `Week`, and their interaction. Include a random intercept for `SubjectID` and `TechnicianID`. Use an offset if counts are from differently sized areas.

- Model Diagnostics:

  - Simulate residuals with `DHARMa::simulateResiduals(model_count)` and test for uniformity, dispersion, and outliers.

  - Check for zero-inflation using `performance::check_zeroinflation()`. If present, consider a zero-inflated model (in `glmmTMB`, add `ziformula = ~ 1` while keeping `family = nbinom2`).

- Interpretation: Report incidence rate ratios (IRR) by exponentiating fixed effects. Use `emmeans` for post-hoc pairwise comparisons between treatments at specific weeks.

Visualizations

Title: GLMM Analysis Workflow for Biomedical Data

Title: Nested Random Effects: Patients within Clinical Sites

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential R Packages for GLMM Analysis in Biomedical Research

| Package Name | Category | Primary Function in Analysis | Installation Command |
|---|---|---|---|
| `lme4` / `glmmTMB` | Core Modeling | Fit the GLMMs with flexible formulas for fixed and random effects. | `install.packages("lme4")` |
| `DHARMa` | Diagnostics | Create readily interpretable scaled residuals for any GLMM to detect misspecification. | `install.packages("DHARMa")` |
| `performance` | Model Evaluation | Comprehensive check of model assumptions (ICC, R², overdispersion, zero-inflation). | `install.packages("performance")` |
| `emmeans` | Post-hoc Analysis | Calculate estimated marginal means, contrasts, and pairwise comparisons from fitted models. | `install.packages("emmeans")` |
| `effects` / `ggeffects` | Visualization | Plot model predictions (conditional and marginal effects) for interpretation. | `install.packages("ggeffects")` |
| `tidyverse` | Data Wrangling | Suite of packages (dplyr, tidyr, ggplot2) for efficient data manipulation and plotting. | `install.packages("tidyverse")` |
| `knitr` / `rmarkdown` | Reporting | Generate reproducible analysis reports integrating R code, results, and tables/figures. | `install.packages("rmarkdown")` |

Solving Common Problems: Convergence Issues, Model Selection, and Assumption Checks

Diagnosing and Fixing Convergence Warnings in lme4 Models

Within the broader thesis on using R for among-individual variation analysis, linear mixed-effects models (LMEMs) fitted with lme4 are fundamental. Convergence warnings indicate that the nonlinear optimizer may not have found a reliable solution, which can bias parameter estimates and undermine inferences about individual variation. Some warnings turn out to be false positives, so each one should be investigated rather than ignored or taken at face value. This protocol details a systematic diagnostic and remediation workflow.

Understanding Convergence Warnings

Convergence warnings in lme4 typically manifest as:

- `Model failed to converge with max|grad| ...`: The gradient at the reported solution is not close enough to zero.
- `Model is nearly unidentifiable: very large eigenvalue`: Scale differences between predictors or a singular fit.
- `Model failed to converge: degenerate Hessian`: The model is overfitted or ill-specified.

Table 1: Common lme4 Convergence Warnings and Their Immediate Implications

| Warning Type | Key Phrase | Likely Cause | Parameter Reliability |
|---|---|---|---|
| Gradient | `max\|grad\|` | Flat or steep likelihood surface | Low |
| Eigenvalue | `very large eigenvalue` | Predictor scaling issue or singularity | Very Low |
| Hessian | `degenerate Hessian` | Overparameterization | None |

Diagnostic Protocol

Protocol 3.1: Initial Model Interrogation

Objective: Gather detailed information about the failed model. Steps:

- Fit the model with `lmer(..., control = lmerControl(calc.derivs = FALSE))` to suppress derivative warnings temporarily.
- Run `summary(model)` and note fixed effects with unusually large SEs or p-values ~1.
- Extract the variance-covariance matrix of random effects using `VarCorr(model)`. Look for correlations at ±1 or variances near zero.
- Check model dimensionality with `rePCA(model)` to assess random effect complexity.
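The four interrogation steps might look like the sketch below. The `sleepstudy` data shipped with lme4 stands in for a problematic dataset here (an assumption for illustration; it fits without warnings), so the point is the sequence of calls rather than the output.

```r
library(lme4)

# Step 1: fit without the derivative-based convergence checks
model <- lmer(Reaction ~ Days + (Days | Subject), data = sleepstudy,
              control = lmerControl(calc.derivs = FALSE))

# Step 2: fixed-effect table (estimates, standard errors, t values)
fixed_tab <- coef(summary(model))

# Step 3: random-effects variances and correlations
vc <- VarCorr(model)
re_corr <- attr(vc$Subject, "correlation")  # correlation matrix for Subject terms

# Step 4: principal components of the random-effects covariance;
# components with (near-)zero standard deviation suggest excess complexity
re_pca <- rePCA(model)
summary(re_pca)
```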

Protocol 3.2: Singularity and Scalability Check

Objective: Diagnose scale and overfitting issues. Steps:

- Center & Scale: Numeric predictors should be centered and scaled using `scale()`.
- Check Singularity: Use `lme4::isSingular(model)`. If `TRUE`, the model is overfitted.
- Examine Eigenvalues: Calculate eigenvalues of the random effects covariance matrix. A near-zero eigenvalue indicates a singularity.
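A short sketch of these three checks, again using lme4's built-in `sleepstudy` data as a stand-in (the column name `Days_z` is introduced here for illustration):

```r
library(lme4)

dat <- sleepstudy
dat$Days_z <- as.numeric(scale(dat$Days))  # centered, unit standard deviation

model <- lmer(Reaction ~ Days_z + (Days_z | Subject), data = dat)

# Singularity check (tol is the tolerance for "effectively zero")
singular <- isSingular(model, tol = 1e-4)

# Eigenvalues of the estimated random-effects covariance matrix for Subject
re_cov <- VarCorr(model)$Subject
re_cov <- matrix(re_cov, nrow = nrow(re_cov))  # drop extra attributes
eig <- eigen(re_cov, symmetric = TRUE, only.values = TRUE)$values

# A smallest eigenvalue near zero relative to the largest points to a singularity
eig_ratio <- min(eig) / max(eig)
```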

Table 2: Diagnostic Output Interpretation Guide

| Diagnostic Test | Function/Tool | Problem Indicator | Threshold |
|---|---|---|---|
| Gradient Magnitude | `model@optinfo$derivs` (scaled gradient) | Absolute value > 0.001 | `max\|grad\| > 0.001` |
| Singular Fit | `lme4::isSingular(model)` | Output = `TRUE` | N/A |
| Random Effect Correlation | `VarCorr(model)` | Correlation = ±1.0 | \|corr\| >= 0.99 |
| Predictor Scale | `sd(x)` | Large ratio between predictors | Ratio > 10 |
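The gradient and predictor-scale rows of the table can be checked roughly as follows. The scaled-gradient calculation follows the approach in lme4's convergence help page. The `predictors` data frame is hypothetical, and the thresholds are the ones from the table, not universal cut-offs.

```r
library(lme4)

model <- lmer(Reaction ~ Days + (Days | Subject), data = sleepstudy)

# Scaled gradient at the solution: solve(Hessian, gradient)
derivs <- model@optinfo$derivs
scaled_grad <- with(derivs, solve(Hessian, gradient))
max_abs_grad <- max(abs(scaled_grad))
grad_problem <- max_abs_grad > 0.001

# Predictor scale: ratio of largest to smallest standard deviation
set.seed(5)
predictors <- data.frame(age = rnorm(200, 55, 12),       # hypothetical covariate
                         dose_mg = rnorm(200, 500, 300)) # hypothetical covariate
sds <- sapply(predictors, sd)
scale_problem <- max(sds) / min(sds) > 10
```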

Remediation Protocols

Protocol 4.1: Optimizer Control & Selection

Objective: Use a more robust optimization algorithm. Steps:

- Increase iterations: `lmerControl(optCtrl = list(maxfun = 2e5))`.
- Switch optimizers, e.g. `lmerControl(optimizer = "bobyqa")`.
- Use `allFit()` to test all available optimizers and compare estimates.
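As a sketch of these steps, the example below refits a model with a different optimizer and a higher evaluation limit, then runs `allFit()`. It uses lme4's `sleepstudy` data for illustration; which optimizers `allFit()` tries depends on the optional packages installed (for example `optimx` and `dfoptim`).

```r
library(lme4)

model <- lmer(Reaction ~ Days + (Days | Subject), data = sleepstudy)

# Refit with more function evaluations and the bobyqa optimizer
model_bobyqa <- update(
  model,
  control = lmerControl(optimizer = "bobyqa",
                        optCtrl = list(maxfun = 2e5))
)

# Refit with every available optimizer and compare
fits <- allFit(model, verbose = FALSE)
fit_summary <- summary(fits)
fit_summary$fixef  # fixed effects, one row per optimizer
fit_summary$llik   # log-likelihoods, one per optimizer
```

If the estimates and log-likelihoods agree closely across optimizers, the original warning is more likely a false positive; large discrepancies suggest a genuinely unstable fit.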

Protocol 4.2: Model Simplification

Objective: Resolve overparameterization (singularity). Steps:

- Remove random effects with near-zero variance, starting with the highest-level correlations.
- Simplify the random-effects structure (e.g., from `(1 + x | group)` to `(1 | group) + (0 + x | group)`).
- If simplification is not theoretically justified, consider a Bayesian approach with regularizing priors (e.g., `blme` or `brms`).

Protocol 4.3: Re-scaling and Re-parameterization

Objective: Improve optimizer numerical stability. Steps:

- Center and scale all continuous predictors: `(x - mean(x)) / (2*sd(x))`.
- For models with categorical predictors with many levels, check for complete separation.
- For time-series, consider using an alternative parameterization (e.g., AR1 models in `nlme`).
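The rescaling formula in the first step can be wrapped in a small helper. The function name `rescale_2sd` and the example columns are hypothetical, chosen only for illustration.

```r
# Center and divide by two standard deviations
rescale_2sd <- function(x) {
  (x - mean(x, na.rm = TRUE)) / (2 * sd(x, na.rm = TRUE))
}

set.seed(7)
dat <- data.frame(age = rnorm(100, 55, 12),        # hypothetical covariate
                  dose_mg = rnorm(100, 500, 150))  # hypothetical covariate

dat_scaled <- as.data.frame(lapply(dat, rescale_2sd))

sapply(dat_scaled, mean)  # approximately 0 for each column
sapply(dat_scaled, sd)    # 0.5 for each column by construction
```

After this transformation every continuous predictor has the same spread, which removes the scale differences that commonly trigger the eigenvalue warning.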

Validation Workflow Diagram

Title: Convergence Warning Diagnostic and Fix Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Packages for Mixed Model Diagnostics

| Package/Function | Category | Primary Function | Use Case |
|---|---|---|---|
| `lme4::lmer()` | Core Modeling | Fits LMEMs via REML/ML | Primary model fitting. |
| `lme4::allFit()` | Diagnostics | Fits model with all optimizers | Identifies optimizer-sensitive parameters. |
| `performance::check_convergence()` | Diagnostics | Comprehensive convergence check | Single-function summary of issues. |
| `optimx` | Optimization | Advanced optimization algorithms | Provides robust alternative optimizers. |
| `dfoptim::nmk()` | Optimization | Nelder-Mead optimizer | A fallback optimizer for difficult problems. |
| `blme` | Regularization | Bayesian penalized LMEMs | Adds weak priors to prevent singularity. |
| `sjPlot::plot_model()` | Visualization | Visualizes random effects | Diagnoses random effect distributions. |
| `RevoScaleR::rxGlm()` (Microsoft R) | Alternative Fitting | Scalable, robust GLMs | For very large datasets. |

Within the broader thesis on using R for among-individual variation analysis, a primary obstacle is the "singular fit" warning when fitting linear mixed-effects models (LMMs). This indicates overfitted random effects structures where the model estimates a variance-covariance parameter as zero or correlations as +/-1. This Application Note details the causes, diagnostic protocols, and solutions for researchers and drug development professionals.

Core Causes of Singular Fits

Singular fits arise when the estimated random effects structure is too complex for the data. The primary causes are:

- Insufficient Data: Too few groups (e.g., subjects, batches) or too few observations per group to estimate random variation reliably.
- Over-parameterization: A random effects formula (e.g., `(1 + time | subject)`) that includes slopes and correlations which the data cannot support.
- Near-Zero Variance: A random factor demonstrates practically no variation across groups.
- Perfect Correlation: Random slopes for different predictors are estimated as perfectly correlated (|r| = 1).

Table 1: Quantitative Summary of Common Singular Fit Scenarios

| Scenario | Typical Data Characteristic | Estimated Parameter Causing Singularity | Common in Research Context |

|---|---|---|---|

| Minimal Grouping | N groups < 5 | Random intercept variance (~0) | Pilot studies, rare populations |

| Sparse Repeated Measures | Observations per group < 3 | Random slope variance or correlation (±1) | Longitudinal studies with missed timepoints |

| Redundant Predictor | Between-group predictor variation ~0 | Random slope variance for that predictor (~0) | Drug studies where dose is fixed per cohort |

| Collinear Slopes | Time & Time^2 slopes vary identically across subjects | Correlation between random slopes (±1) | Nonlinear growth modeling |

Diagnostic Protocol

Follow this step-by-step workflow to diagnose the source of a singular fit.

Diagram Title: Workflow for Diagnosing a Singular Model Fit

Detailed Protocol Steps

Protocol 1: Data Structure Interrogation

- Code: `table(data$grouping_variable)`
- Output Analysis: Check if any grouping level has fewer than 3 observations. Fewer than 5 groups often causes intercept variance issues.
- Code: `ggplot(data, aes(x = factor(group), y = response)) + geom_boxplot()`
- Visual Analysis: Look for boxes with no spread (near-zero variance).
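The tabulation checks can also be done programmatically. The sketch below uses a small made-up data frame; the column names `group` and `response` and the group sizes are assumptions for illustration.

```r
set.seed(3)
# Hypothetical data: four groups with unequal numbers of observations
data <- data.frame(
  group = rep(c("A", "B", "C", "D"), times = c(6, 5, 2, 6)),
  response = rnorm(19, mean = 10, sd = 2)
)

obs_per_group <- table(data$group)

# Groups with fewer than 3 observations
sparse_groups <- names(obs_per_group)[obs_per_group < 3]

# Fewer than 5 groups in total?
few_groups <- length(obs_per_group) < 5

# Within-group spread, the numeric counterpart of the boxplot check
group_sd <- tapply(data$response, data$group, sd)
```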

Protocol 2: Variance-Covariance Examination

- Fit the suspect model using `lmer()` from `lme4`.
- Code: `vc <- VarCorr(fitted_model); print(vc, comp = c("Variance", "Std.Dev."))` (correlations are printed alongside the standard deviations).
- Analysis: Scrutinize the output. A variance of `0.0000` or a correlation of `1.000` or `-1.000` indicates the problem term.
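For models with many random-effect terms, scanning the printed output by eye is error-prone. One possible way to flag suspicious terms automatically is sketched below, using `sleepstudy` as a stand-in dataset. The cut-offs (variance below `1e-8`, |correlation| at or above 0.99) are assumptions and the variance cut-off in particular depends on the scale of the response.

```r
library(lme4)

fitted_model <- lmer(Reaction ~ Days + (Days | Subject), data = sleepstudy)

vc <- VarCorr(fitted_model)
print(vc, comp = c("Variance", "Std.Dev."))

# Long format: one row per variance, covariance/correlation, and residual
vc_df <- as.data.frame(vc)  # columns: grp, var1, var2, vcov, sdcor

# Rows with a non-missing var2 describe correlations (sdcor holds the correlation)
is_cor <- !is.na(vc_df$var2)
vc_df$flag <- ifelse(is_cor,
                     abs(vc_df$sdcor) >= 0.99,  # near-perfect correlation
                     vc_df$vcov < 1e-8)         # near-zero variance

vc_df[vc_df$flag, ]  # terms that would warrant a closer look
```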

Protocol 3: Likelihood Ratio Test (LRT) for Nested Models

- Fit a reduced model (e.g., `(1 | group)`).
- Fit the full, singular model (e.g., `(1 + time | group)`).
- Code: `anova(reduced_model, full_model)`
- Interpretation: A non-significant p-value suggests the reduced, non-singular model is sufficient.
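A minimal version of this comparison on the `sleepstudy` data is shown below. Setting `refit = FALSE` keeps the REML fits, a common choice when the two models differ only in their random effects; this is an assumption of the sketch, not something the protocol prescribes. Because variances are tested at the boundary of their parameter space, the reported p-value tends to be conservative.

```r
library(lme4)

reduced_model <- lmer(Reaction ~ Days + (1 | Subject), data = sleepstudy)
full_model    <- lmer(Reaction ~ Days + (1 + Days | Subject), data = sleepstudy)

# Likelihood ratio test on the REML fits (no refitting with ML)
lrt <- anova(reduced_model, full_model, refit = FALSE)
print(lrt)

p_value <- lrt[["Pr(>Chisq)"]][2]
```

In this dataset the random slope is well supported, so the test favors the full model; with a singular full model the p-value would typically be large.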

Solution Pathways & Experimental Application

Choosing a solution depends on the diagnosed cause and the research question.

Diagram Title: Mapping Causes of Singular Fits to Practical Solutions

Protocol for Implementing Solutions

Protocol 4: Simplifying Random Effects Structure

Goal: Reduce complexity while retaining necessary variation.

- Remove Correlations: Change `(1 + time | subject)` to `(1 + time || subject)` or `(1 | subject) + (0 + time | subject)`.
- Remove Random Slopes: If time slope is consistent, use only random intercepts: `(1 | subject)`.
- Code Comparison:
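The three candidate structures can be fitted side by side, as in this sketch on the `sleepstudy` data (used here for illustration; `Days` and `Subject` play the roles of `time` and `subject`). Note that in lme4 the `||` shorthand works as expected for numeric predictors but not for factors.

```r
library(lme4)

m_full   <- lmer(Reaction ~ Days + (1 + Days | Subject),  data = sleepstudy)
m_uncorr <- lmer(Reaction ~ Days + (1 + Days || Subject), data = sleepstudy)
m_int    <- lmer(Reaction ~ Days + (1 | Subject),         data = sleepstudy)

models <- list(full = m_full, uncorrelated = m_uncorr, intercept_only = m_int)

comparison <- data.frame(
  model    = names(models),
  n_theta  = sapply(models, function(m) length(getME(m, "theta"))),  # covariance parameters
  singular = sapply(models, isSingular),
  AIC      = sapply(models, AIC),
  row.names = NULL
)
comparison
```

The `n_theta` column shows how each simplification removes one covariance parameter, which is what makes the simpler structures easier to estimate from limited data.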

Protocol 5: Applying Bayesian Regularization with blme

Goal: Use weak priors to stabilize variance estimates without changing the model's intended structure.

- Install & Load: `install.packages("blme"); library(blme)`
- Apply Custom Prior: Use `cov.prior` to add a weak Wishart prior to the random effects covariance matrix.
- Code:
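A minimal sketch of such a fit is given below, assuming `blme` is installed. It uses the `sleepstudy` data from lme4 (attached when `blme` is loaded) and the package's built-in `wishart` prior with its default settings; the appropriate prior for a real study is a modeling decision this example does not address.

```r
library(blme)  # also attaches lme4

# Same formula as the lmer() model, with a Wishart prior on the
# random-effects covariance matrix to pull estimates off the boundary
model_blme <- blmer(Reaction ~ Days + (1 + Days | Subject),
                    data = sleepstudy,
                    cov.prior = wishart)

VarCorr(model_blme)     # regularized variance and correlation estimates
isSingular(model_blme)  # singularity check on the penalized fit
```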

Protocol 6: Falling Back to Fixed Effects Modeling

Goal: When random variance is truly zero, a fixed effects model is statistically correct.

- Verify: Ensure `VarCorr()` shows random intercept variance is `0`.
- Switch to LM: Use `lm()` (or `aov()` for grouped data), or include the groups as fixed effects.
- Code:
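The two fallback options can be sketched as follows on made-up data (the variable names `group`, `dose`, and `response` and all simulated values are assumptions). The data are generated with no group effect, which mimics the zero-variance situation.

```r
set.seed(11)
# Hypothetical data with no true between-group variation
data <- data.frame(
  group = factor(rep(c("A", "B", "C"), each = 10)),
  dose  = rep(1:10, times = 3)
)
data$response <- 5 + 0.8 * data$dose + rnorm(nrow(data))

# Option 1: drop the grouping factor entirely
lm_pooled <- lm(response ~ dose, data = data)

# Option 2: keep the groups as fixed effects
lm_group <- lm(response ~ dose + group, data = data)

# F-test: do the group terms improve the fit?
group_test <- anova(lm_pooled, lm_group)
group_test
```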

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Software & Packages for Mixed Models Diagnostics

| Reagent/Package | Function | Application Context |
|---|---|---|
| `lme4` (v 1.1-35.1) | Fits LMMs/GLMMs via maximum likelihood. Core engine for model fitting. | Primary tool for `lmer()` and `glmer()` calls. |
| `nlme` (v 3.1-164) | Alternative for LMMs, allows for more complex variance structures. | Useful when `lme4` fails or for specific correlation structures. |
| `blme` (v 1.0-5) | Adds Bayesian priors to `lme4` models, regularizing estimates. | Solution for singular fits when model structure must be preserved. |
| `performance` (v 0.13.0) | Provides `check_singularity()` for automated singularity detection. | Rapid diagnostic step in model checking workflow. |
| `ggplot2` (v 3.5.1) | Creates data visualization for exploratory data analysis (EDA). | Visualizing group-level variation and model residuals. |
| `sjPlot` (v 2.8.16) | Generates tabular and graphical summaries of `lme4` model outputs. | Communicating final model results in reports/publications. |