DeepLabCut vs. Manual Scoring: A Researcher's Guide to Automated Behavior Analysis

This article provides a comprehensive comparison of DeepLabCut and manual behavior scoring for researchers and drug development professionals.


Abstract

This article provides a comprehensive comparison of DeepLabCut and manual behavior scoring for researchers and drug development professionals. It explores the foundational principles of each method, details the practical workflow for implementing DeepLabCut, addresses common challenges and optimization strategies, and presents a rigorous, evidence-based comparison of accuracy, efficiency, and scalability. The analysis concludes with actionable insights for selecting the appropriate method and discusses future implications for high-throughput phenotypic screening and translational research.

From Observer's Eye to AI: Defining Manual and Automated Behavior Analysis

Within the accelerating field of behavioral neuroscience and psychopharmacology, the quantification of behavior remains a cornerstone of empirical research. The emergence of powerful, automated pose-estimation tools like DeepLabCut has prompted a critical re-evaluation of methodological foundations. This whitepaper details the principles and procedural rigor of manual behavior scoring, which serves as the essential ground truth against which all automated systems, including DeepLabCut, must be validated. Manual scoring is not merely a legacy technique but the definitive benchmark for accuracy, nuance, and construct validity in behavioral analysis.

Core Principles of Manual Scoring

Manual behavior scoring is governed by non-negotiable principles that ensure data integrity and reliability:

- Operational Definition Fidelity: Every scored behavior must be defined with explicit, unambiguous criteria detailing onset, offset, and morphological characteristics.

- Blinded Scoring: To eliminate confirmation bias, scorers must be blinded to experimental group allocation (e.g., treatment vs. control).

- Intra- and Inter-Rater Reliability: The consistency of a single scorer over time (intra-rater) and the agreement between multiple independent scorers (inter-rater) must be quantified and kept above a pre-defined threshold (typically above 0.8 for Cohen's kappa or the intraclass correlation coefficient).

- Contextual Interpretation: The scorer integrates subtle, often non-discrete, contextual cues (e.g., intention movements, environmental interactions) that are frequently elusive to algorithmic models.

The Manual Scoring Workflow

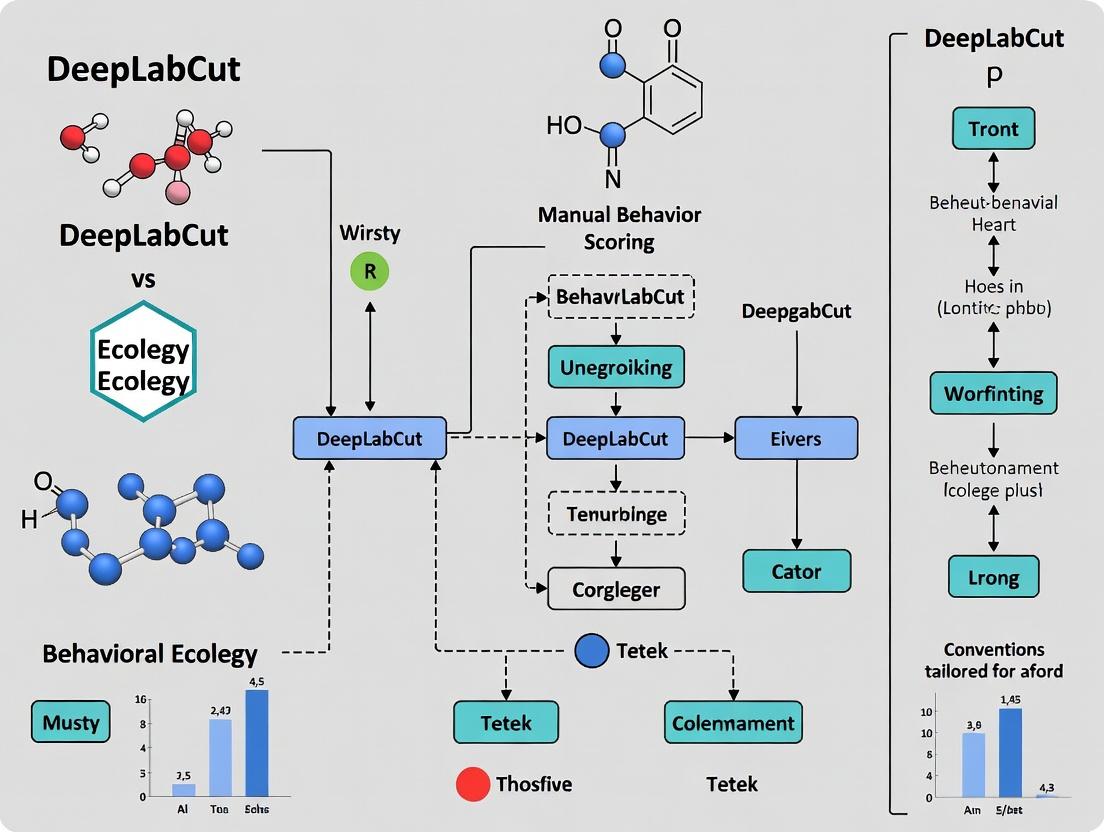

The diagram above illustrates the standardized, iterative workflow for generating high-fidelity manual behavioral data.

Detailed Methodologies for Key Steps

Step 1 & 2: Ethogram Development and Rater Training

- Protocol: Researchers compile a comprehensive ethogram listing all behaviors of interest. Each behavior is defined using precise, observable criteria. Raters undergo systematic training using a library of reference videos not included in the main study. Training continues until raters achieve >90% agreement with a master coder on the training set.

Step 3: Primary Scoring

- Protocol: Using software platforms (e.g., BORIS, Solomon Coder), blinded raters annotate video files. Continuous sampling or instantaneous time-sampling methods are selected based on the research question. Annotations record behavior onset, offset, and often intensity.
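Onset/offset annotations are usually converted into a per-frame state vector before durations are summarized or reliability is computed. The sketch below makes several assumptions. Annotations are taken to be exported as (onset, offset) pairs in seconds for one state behavior; BORIS and Solomon Coder exports use their own layouts and would need parsing first. The function names are hypothetical.

```python
import math


def intervals_to_frames(intervals, n_frames, fps):
    """Convert (onset_s, offset_s) bouts into a per-frame 0/1 state vector.

    Frame i (time i / fps) is marked 1 when onset <= i / fps < offset.
    """
    states = [0] * n_frames
    for onset, offset in intervals:
        # round() guards against float noise such as 0.3 * 10 = 3.0000000000000004
        first = max(0, math.ceil(round(onset * fps, 9)))
        last = min(n_frames, math.ceil(round(offset * fps, 9)))  # exclusive bound
        for i in range(first, last):
            states[i] = 1
    return states


def summarize_bouts(intervals):
    """Bout count, total duration (s), and latency to the first bout (s)."""
    if not intervals:
        return {"bouts": 0, "duration_s": 0.0, "latency_s": None}
    ordered = sorted(intervals)
    return {
        "bouts": len(ordered),
        "duration_s": sum(off - on for on, off in ordered),
        "latency_s": ordered[0][0],
    }
```

For example, bouts at 1.0-2.5 s and 4.0-4.5 s in a 2 fps clip mark frames 2-4 and 8. Point events (zero-duration behaviors) would instead be counted rather than expanded into frames.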

Step 4: Reliability Assessment

- Protocol: A randomly selected subset of videos (typically 15-20%) is scored independently by all raters. Reliability is calculated using appropriate statistical measures for the data type:

- Cohen's Kappa (κ): For categorical (present/absent) behavior states.

- Intraclass Correlation Coefficient (ICC): For continuous measures like duration or frequency.

- Pearson's r: For interval-based frequency counts.

If agreement falls below the pre-defined threshold, the process returns to rater training.
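For frame-by-frame categorical codes (for example, "grooming" vs. "not grooming" per frame), percent agreement and Cohen's kappa can be computed as in the minimal pure-Python sketch below. It assumes both raters coded the same frames in the same order. Kappa corrects raw agreement for the agreement expected by chance from each rater's label frequencies.

```python
from collections import Counter


def percent_agreement(a, b):
    """Fraction of items (e.g., video frames) on which two raters assign the same label."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    p_o = percent_agreement(a, b)
    n = len(a)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: sum over categories of the product of each rater's marginal proportions
    p_e = sum(count_a[c] * count_b[c] for c in set(a) | set(b)) / (n * n)
    if p_e == 1.0:
        return float("nan")  # undefined: both raters used the same single category throughout
    return (p_o - p_e) / (1 - p_e)


rater_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
rater_2 = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]
print(percent_agreement(rater_1, rater_2), cohens_kappa(rater_1, rater_2))
```

Here the raters agree on 80% of frames, but each labels half the frames as "1", so chance agreement is 0.5 and kappa is about 0.6, below the 0.8 threshold. `sklearn.metrics.cohen_kappa_score` should return the same value.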

Quantitative Comparison: Manual Scoring vs. DeepLabCut

The following table summarizes the core comparative metrics between manual scoring and a typical DeepLabCut pipeline, based on recent validation studies.

Table 1: Comparative Analysis of Manual Scoring and DeepLabCut

| Metric | Manual Behavior Scoring | DeepLabCut (Typical Pipeline) | Implication for Research |
|---|---|---|---|
| Accuracy (Ground Truth) | Definitive (the standard) | High (low pixel error for well-trained models), but requires validation | Manual scoring sets the benchmark; DLC accuracy is contingent on training data quality. |
| Throughput | Low (real-time or slower) | Very high (once trained) | Manual scoring is a bottleneck for large-N studies; DLC enables high-volume analysis. |
| Objectivity | Subject to human bias | Algorithmically consistent | Blinding and reliability checks are critical for manual scoring to mitigate bias. |
| Nuance & Context | Excellent; can score complex, holistic behaviors. | Limited to predefined body parts; struggles with amorphous states (e.g., "freezing"). | Manual scoring is superior for ethologically complex constructs not reducible to posture. |
| Start-up Cost | Low (software, training time) | High (GPU hardware, technical expertise) | DLC has a steeper initial barrier to implementation. |
| Operational Flexibility | High; definitions can be adjusted post hoc. | Low; the model must be retrained for new features. | Manual scoring allows iterative refinement of hypotheses during analysis. |

The Scientist's Toolkit: Essential Reagents & Materials

Table 2: Key Research Reagent Solutions for Manual Behavioral Scoring

| Item | Function/Description | Example Product/Software |
|---|---|---|
| Behavioral Annotation Software | Primary tool for manually logging behavior onset/offset from video files. | BORIS, Solomon Coder, Noldus Observer XT |
| High-Definition Video Recording System | Captures high-quality, multi-angle video for precise behavioral discrimination. | Cameras with ≥1080p resolution, infrared for dark cycles, synchronized systems. |
| Reliability Analysis Software | Calculates inter- and intra-rater agreement statistics (Kappa, ICC). | IBM SPSS, R (irr package), Python (sklearn). |
| Standardized Testing Arenas | Provides consistent environmental context to reduce external variability. | Open Field, Elevated Plus Maze, Morris Water Maze apparatus. |
| Reference Ethogram Library | A curated collection of published, rigorously defined ethograms for the model organism. | Essential for operational definition development and field standardization. |

Manual behavior scoring represents the epistemological foundation of behavioral science. Its rigorous principles—blinded assessment, operational definition, and reliability quantification—establish the valid ground truth against which automated tools like DeepLabCut are measured. While DeepLabCut offers transformative scalability for posture tracking, the interpretation of complex behavioral states and the validation of any automated system ultimately depend on the irreplaceable nuance and discernment of the trained human observer. The future of robust behavioral phenotyping lies not in choosing one method over the other, but in their synergistic integration, where manual scoring defines the truth that guides algorithmic innovation.

This technical guide details the core operational principles of DeepLabCut, a leading markerless pose estimation tool. This analysis is situated within a broader research thesis comparing the efficacy, efficiency, and scalability of automated tools like DeepLabCut against traditional manual behavior scoring in biomedical research. The shift from manual annotation to computer vision-based automation represents a paradigm change for behavioral phenotyping in neuroscience, psychopharmacology, and drug development.

Core Architectural Principles

DeepLabCut leverages Deep Neural Networks (DNNs), specifically an adaptation of the ResNet (Residual Network) and MobileNet architectures, for part detection. It employs a transfer learning approach, where a network pre-trained on a massive image dataset (e.g., ImageNet) is fine-tuned on a much smaller, user-labeled dataset of the experimental subject.

Pose estimation is based on per-part confidence maps; multi-animal projects additionally use part affinity fields. The network learns to:

- Generate a confidence map (score map) for each defined body part (e.g., snout, paw, tail base), representing the probability that a given pixel contains that part. Location-refinement (offset) fields correct the coarse peak position for the network's downsampled output.

- Generate part affinity fields (PAFs) that encode the direction and location of limbs (the "limb vector") between parts. In multi-animal DeepLabCut, PAFs allow individual detections to be assembled into a coherent pose for each animal.
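Reading a keypoint off a confidence map amounts to finding the map's peak and mapping that grid cell back to image pixels. The numpy sketch below illustrates this step only. It assumes a single 2-D map per body part and a hypothetical output stride of 8. It omits DeepLabCut's learned location-refinement offsets and the PAF-based assembly step.

```python
import numpy as np


def keypoint_from_confidence_map(conf_map, stride=8):
    """Return (x, y, confidence) for the peak of one body part's confidence map.

    conf_map: 2-D array on the network's downsampled grid (rows = y, cols = x).
    stride: assumed downsampling factor between the input image and the map.
    """
    row, col = np.unravel_index(np.argmax(conf_map), conf_map.shape)
    # Map the centre of the winning grid cell back to input-image pixel coordinates
    x = col * stride + 0.5 * stride
    y = row * stride + 0.5 * stride
    return float(x), float(y), float(conf_map[row, col])


# Synthetic Gaussian "confidence map" peaking at grid row 3, column 5
yy, xx = np.mgrid[0:10, 0:12]
demo = np.exp(-((xx - 5) ** 2 + (yy - 3) ** 2) / 2.0)
print(keypoint_from_confidence_map(demo, stride=8))  # (44.0, 28.0, 1.0)
```

The peak value plays the role of the per-frame likelihood that DeepLabCut reports next to each x, y coordinate.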

Experimental Protocols & Methodologies

Standard DeepLabCut Experimental Pipeline

A. Data Acquisition & Preparation:

- Video Recording: Record high-speed videos (e.g., 60-200 fps) of the behaving animal under consistent lighting. Multiple camera views may be used for 3D reconstruction.

- Frame Extraction: Extract representative frames (~100-1000) spanning the full behavioral repertoire and variability in animal postures.

- Labeling: Manually annotate (label) the user-defined body parts on the extracted frames using the DeepLabCut GUI. This creates the ground truth training dataset.
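A common way to obtain frames "spanning the full behavioral repertoire" is to cluster frames by appearance and take one representative per cluster. DeepLabCut's `extract_frames(..., algo="kmeans")` performs its own clustering. The numpy function below is an independent illustration of the idea, not DLC's implementation. It assumes frames are already loaded as small grayscale arrays. It uses a deterministic farthest-point initialization so the result is reproducible.

```python
import numpy as np


def kmeans_select_frames(frames, n_select, n_iter=50):
    """Return up to n_select diverse frame indices using k-means on pixel intensities.

    frames: array (n_frames, H, W), e.g. heavily downsampled grayscale frames
    (pairwise distances are held in memory, so keep H * W small).
    """
    X = frames.reshape(len(frames), -1).astype(float)
    # Deterministic farthest-point initialization: start at frame 0, then
    # repeatedly add the frame farthest from all centroids chosen so far.
    idx = [0]
    d_min = ((X - X[0]) ** 2).sum(axis=1)
    for _ in range(1, n_select):
        nxt = int(d_min.argmax())
        idx.append(nxt)
        d_min = np.minimum(d_min, ((X - X[nxt]) ** 2).sum(axis=1))
    centroids = X[idx].copy()
    for _ in range(n_iter):
        # Assign every frame to its nearest centroid (squared Euclidean distance)
        dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # Recompute centroids; an empty cluster keeps its previous centroid
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
                        for k in range(n_select)])
        if np.allclose(new, centroids):
            break
        centroids = new
    dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    # One representative per cluster: the frame nearest to each centroid
    return sorted({int(i) for i in dist.argmin(axis=0)})
```

Clustering on raw intensities captures coarse appearance (position, posture, lighting). Frames from several videos and animals should be pooled before selection so that the labeled set covers between-subject variability.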

B. Model Training:

- Network Configuration: Select a base model (e.g., ResNet-50, ResNet-101, MobileNet-v2).

- Fine-tuning: The pre-trained network weights are fine-tuned on the labeled frames. Data augmentation (rotation, scaling, cropping) is applied to improve generalizability.

- Iterative Refinement (Optional): The trained model is used to label new frames. Poorly predicted frames are added to the training set, and the model is re-trained to improve performance.

C. Analysis & Inference:

- Video Processing: The trained model processes all video frames to predict body part locations.

- Post-processing: Predictions are refined using temporal filters (e.g., median filtering, spline interpolation) to smooth trajectories and handle occlusions.

- Downstream Analysis: x,y coordinate time series are used for kinematic analysis (speed, acceleration, joint angles), behavior classification, or dynamics modeling.
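The post-processing step can be sketched for a single coordinate of a single body part as follows. Low-likelihood predictions are treated as missing, the gaps are linearly interpolated, and a running median is applied. The cutoff and window values are illustrative assumptions. DeepLabCut also provides built-in filtering through `deeplabcut.filterpredictions`.

```python
import numpy as np


def clean_trajectory(x, likelihood, p_cutoff=0.9, window=5):
    """Mask low-confidence points, interpolate the gaps, then median-filter.

    x: per-frame values of one coordinate (x or y) of one body part.
    likelihood: per-frame confidence for that body part.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    x = np.asarray(x, dtype=float).copy()
    good = np.asarray(likelihood) >= p_cutoff
    if not good.any():
        raise ValueError("no frames above the likelihood cutoff")
    frames = np.arange(len(x))
    # Linear interpolation across low-confidence frames (e.g., occlusions);
    # gaps at either end are filled with the nearest valid value
    x[~good] = np.interp(frames[~good], frames[good], x[good])
    # Centered running median; edges padded by repeating the end values
    half = window // 2
    padded = np.pad(x, half, mode="edge")
    return np.array([np.median(padded[i:i + window]) for i in range(len(x))])
```

For example, a spurious jump to 50 px in a frame with likelihood 0.1 is replaced by the interpolated value. A single-frame spike with high likelihood is removed by the median filter instead.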

Comparative Validation Protocol (DeepLabCut vs. Manual Scoring)

To empirically support the thesis, a standard validation experiment is conducted.

Objective: Quantify the agreement, time investment, and reproducibility between DeepLabCut and expert human scorers.

Protocol:

- Subject & Behavior: Select n video clips of mice (e.g., 10 clips, 1-minute each) exhibiting complex behaviors (e.g., social interaction, grooming, gait).

- Manual Scoring Cohort: m trained researchers (e.g., m=3) manually annotate the position of k body parts (e.g., snout, left/right forepaw) on every f-th frame (e.g., every 10th frame) using annotation software. The time taken is recorded.

- DeepLabCut Pipeline:

- A separate set of labeled frames is used to train a DeepLabCut model.

- The trained model analyzes the same n video clips.

- The computer's processing time (GPU/CPU) is recorded.

- Comparison Metric: Calculate the Root Mean Square Error (RMSE) in pixels between the DeepLabCut predictions and the manual scorer consensus (ground truth) for each body part. Calculate the inter-rater reliability (e.g., Intraclass Correlation Coefficient, ICC) among human scorers and between humans and DeepLabCut.
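The protocol does not specify an ICC form. The sketch below assumes ICC(2,1) from Shrout and Fleiss: two-way random effects, absolute agreement, single rater. That form is a common choice when the same raters, here the human scorers plus DLC as an extra "rater", score every target. Rows are targets (for example, one body-part coordinate per sampled frame, or one derived measure per clip) and columns are raters. Packages such as R's `irr` or Python's `pingouin` provide tested implementations.

```python
import numpy as np


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: array (n_targets, k_raters); each column is one scorer (or DLC).
    """
    Y = np.asarray(ratings, dtype=float)
    n, k = Y.shape
    if n < 2 or k < 2:
        raise ValueError("need at least two targets and two raters")
    grand = Y.mean()
    ss_rows = k * ((Y.mean(axis=1) - grand) ** 2).sum()   # between-target variation
    ss_cols = n * ((Y.mean(axis=0) - grand) ** 2).sum()   # systematic rater offsets
    ss_err = ((Y - grand) ** 2).sum() - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
```

Because this form penalizes systematic offsets between raters (the `msc` term), a DLC model that is consistently a few pixels off will score lower than under a consistency-type ICC.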

Data Presentation: Quantitative Comparisons

Table 1: Performance Benchmark: DeepLabCut vs. Manual Scoring

| Metric | Manual Scoring (Human Experts) | DeepLabCut (ResNet-50) | Notes |
|---|---|---|---|
| Annotation Speed | 2-10 sec/frame | ~0.005-0.05 sec/frame (after training) | Per frame, DLC inference is roughly 40-2000x faster, depending on which ends of the two ranges are compared. |
| Inter-Rater Reliability (ICC) | 0.85-0.95 (high variability) | >0.99 vs. ground truth (consistently high) | DLC eliminates human fatigue/drift. |
| Typical Error (RMSE) | N/A (defines ground truth) | 1-5 pixels (for well-trained models) | Small relative to the animal's size in pixels at typical video resolutions. |
| Throughput | Limited by human stamina | High-throughput, 24/7 operation | Enables large-scale phenotyping studies. |
| Scalability | Poor; linear increase with videos/subjects | Excellent; minimal marginal cost per new video | Major advantage for drug screening. |

Table 2: Key Research Reagent Solutions & Essential Materials

| Item | Function in DeepLabCut Research |
|---|---|
| High-Speed Camera (e.g., >60 fps) | Captures rapid motion with high temporal resolution, essential for gait analysis and fine kinematics. |
| Uniform Backdrop & Lighting | Maximizes contrast, minimizes shadows, and ensures consistent video quality for robust model performance. |
| Calibration Object (e.g., checkerboard) | Enables conversion from pixels to real-world measurements (mm) and facilitates 3D reconstruction. |
| GPU Workstation (NVIDIA CUDA-capable) | Dramatically accelerates model training (fine-tuning) and video analysis (inference). |
| Labeling Software (DeepLabCut GUI) | Provides the interface for creating the ground truth dataset by manually annotating body parts on frames. |
| Behavioral Arena | Standardized experimental environment (e.g., open field, plus maze) for reproducible video recording. |

Visualizing the Workflow and Architecture

DeepLabCut End-to-End Workflow

DeepLabCut Model Architecture

Thesis Context: Automation vs. Manual Methods

This technical guide explores the core concepts of modern, markerless pose estimation, contextualized within a broader research thesis comparing DeepLabCut (DLC) to traditional manual behavior scoring in neuroscience and pharmacology.

Core Terminology in Pose Estimation

- Frame: A single, static image extracted from a video sequence. It is the fundamental unit of analysis. The frame rate (frames per second, fps) determines temporal resolution.

- Keypoint: A predefined, anatomically relevant point of interest on an animal's body (e.g., snout tip, paw, joint center). The model's goal is to predict the 2D (or 3D) pixel coordinates of each keypoint in every frame.

- Tracking Accuracy: How closely the system's predicted keypoint locations match the ground truth across frames. It is typically quantified by:

- Mean Pixel Error (MPE): The average Euclidean distance (in pixels) between the predicted keypoint location and the ground-truth location.

- Percentage of Correct Keypoints (PCK): The fraction of predictions within a normalized threshold distance (e.g., 0.2 times the head-body length) of the ground truth.

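- RMSE (Root Mean Square Error): The square root of the mean squared distance between predicted and ground-truth locations. Because errors are squared before averaging, RMSE weights large errors more heavily than MPE and is always at least as large.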
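The three accuracy metrics can be computed from the same per-keypoint Euclidean distances, as in the numpy sketch below. It assumes predictions and ground truth are aligned arrays of shape (frames, keypoints, 2). It also assumes the PCK threshold has already been converted to pixels (for example, 0.2 times the head-body length). Unlabeled ground-truth keypoints are marked with NaN and excluded.

```python
import numpy as np


def keypoint_errors(pred, truth, pck_threshold_px):
    """Mean pixel error, RMSE, and PCK between predicted and ground-truth keypoints.

    pred, truth: arrays (n_frames, n_keypoints, 2) in pixels; NaN marks keypoints
    that were not labeled (e.g., occluded) and are ignored.
    """
    dist = np.linalg.norm(np.asarray(pred, float) - np.asarray(truth, float), axis=-1)
    d = dist[~np.isnan(dist)]  # Euclidean distance for each valid keypoint
    mpe = d.mean()
    rmse = np.sqrt((d ** 2).mean())  # squaring weights large errors more heavily
    pck = (d <= pck_threshold_px).mean()
    return float(mpe), float(rmse), float(pck)
```

With distances of 5, 0, 0 and 5 px, MPE is 2.5 px but RMSE is about 3.54 px, illustrating RMSE's sensitivity to the larger errors. With a 4 px threshold, PCK is 0.5.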

Quantitative Comparison: DeepLabCut vs. Manual Scoring

Table 1: Comparative Analysis of Scoring Methods

| Metric | DeepLabCut (DLC) | Manual Scoring |
|---|---|---|
| Throughput | High (minutes to hours for long videos post-training) | Very Low (real-time or slower) |
| Objectivity | High (consistent algorithm application) | Low (prone to intra-/inter-rater variability) |
| Temporal Resolution | Full frame rate (e.g., 30-100+ Hz) | Often lower (limited by human observation) |
| Keypoint Accuracy (MPE) | Typically 2-10 pixels (depends on model and labeling quality) | Defines the ground truth, but varies within and between annotators |
| Scalability | Excellent (parallel processing possible) | Poor (linear increase with workload) |
| Fatigue Factor | None | High (leads to drift in criteria over time) |

Experimental Protocol for Validation

A standard protocol to benchmark DLC against manual scoring involves:

- Video Acquisition: Record high-quality, high-frame-rate video of the behavior of interest (e.g., rodent open field, gait analysis).

- Ground Truth Labeling: An expert human scorer manually annotates a random subset of frames (typically 100-1000) with keypoint locations, creating the ground truth dataset.

- DLC Model Training:

- Split labeled frames into training (e.g., 90%) and test (e.g., 10%) sets.

- Configure a neural network (e.g., ResNet-50) as the feature extractor within DLC.

- Train the network until loss metrics plateau on the training set.

- Evaluation:

- Use the trained DLC model to predict keypoints on the held-out test set.

- Calculate MPE, PCK, and RMSE between DLC predictions and manual ground truth.

- Perform statistical analysis (e.g., Bland-Altman plots, correlation coefficients) on derived behavioral measures (e.g., velocity, distance traveled).
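A Bland-Altman analysis of a derived measure reduces to the mean difference between methods (bias) and the 95% limits of agreement (bias ± 1.96 SD of the differences). The numpy sketch below also returns Pearson's r. It assumes one paired value per animal or video from each method.

```python
import numpy as np


def bland_altman(manual, dlc):
    """Bias, 95% limits of agreement, and Pearson's r for paired measurements."""
    manual = np.asarray(manual, float)
    dlc = np.asarray(dlc, float)
    diff = dlc - manual                     # positive bias means DLC reads higher
    bias = diff.mean()
    sd = diff.std(ddof=1)
    return {
        "means": (manual + dlc) / 2,        # x-axis of the Bland-Altman plot
        "diffs": diff,                      # y-axis of the Bland-Altman plot
        "bias": float(bias),
        "loa_low": float(bias - 1.96 * sd),
        "loa_high": float(bias + 1.96 * sd),
        "pearson_r": float(np.corrcoef(manual, dlc)[0, 1]),
    }
```

Reporting both is useful because correlation alone does not show agreement. A method that reads a constant 10% higher still correlates perfectly, but the offset appears as a nonzero bias.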

Workflow Diagram

Workflow: DLC Validation vs Manual Scoring

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Markerless Pose Estimation Experiments

| Item / Solution | Function & Importance |
|---|---|
| High-Speed Camera | Captures high-frame-rate video to resolve rapid movements (e.g., paw strikes, whisking). Minimum 60-100 fps is often required. |
| Uniform, High-Contrast Background | Maximizes contrast between animal and environment, simplifying keypoint detection and improving model accuracy. |
| DeepLabCut Software Suite | Open-source toolbox for creating and training custom deep learning models for markerless pose estimation. |
| GPU (e.g., NVIDIA RTX Series) | Accelerates neural network training and inference, reducing processing time from days to hours. |
| Labeling Interface (DLC GUI) | Software tool for efficient manual annotation of ground truth keypoints on training image frames. |
| Behavioral Arena | Standardized testing apparatus (e.g., open field, elevated plus maze) to ensure experimental consistency and reproducibility. |
| Annotation Guidelines | Detailed, written protocol for human labelers to ensure consistency in ground truth keypoint placement (critical for reliability). |
| Compute Cluster or Cloud Instance | For large-scale analysis, enabling parallel processing of multiple video files and hyperparameter tuning. |

Within the context of a comparative research thesis on DeepLabCut (DLC) versus manual behavior scoring, understanding the traditional applications of each methodology is paramount for experimental design and data integrity. This guide provides an in-depth technical analysis of when and why researchers, scientists, and drug development professionals traditionally select one approach over the other, supported by current data and explicit protocols.

Methodological Foundations and Traditional Applications

Manual Behavior Scoring

Manual scoring, the historical gold standard, involves a human observer directly annotating behaviors from video or live observation using an ethogram. Its applications are traditionally defined by specific research needs.

Primary Traditional Applications:

- Pilot Studies & Ethogram Development: Initial, small-scale studies to define and refine behavioral categories before automation.

- Low-Throughput, High-Complexity Behaviors: Scoring of subtle, context-dependent, or novel behaviors not yet defined for automated systems (e.g., specific social interactions, nuanced facial expressions).

- Studies with Limited Computational Resources: Where budget or infrastructure for computational analysis is unavailable.

- Validation of Automated Tools: Serving as the ground-truth benchmark for training and validating tools like DeepLabCut.

DeepLabCut (DLC)

DeepLabCut is a deep learning-based toolbox for markerless pose estimation. It allows for the tracking of user-defined body parts across video frames. Its adoption is driven by scalability and objectivity.

Primary Traditional Applications:

- High-Throughput Phenotyping: Large-scale studies, such as in genetics or drug screening, where hundreds of animals and hours of video must be analyzed.

- Quantitative Kinematic Analysis: Precise measurement of movement dynamics (gait, velocity, acceleration, joint angles) that are infeasible for a human to measure consistently.

- Long-Duration Recording Analysis: Continuous tracking over days or weeks, impractical for manual scoring due to fatigue.

- Experiments Requiring Minimal Human Intervention: Studies where observer bias must be minimized or where the animal cannot be easily observed (e.g., in darkness using IR video).
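The kinematic measures mentioned above follow directly from the tracked coordinates. The numpy helpers below compute a body centroid, total path length, frame-to-frame speed, and a joint angle. They assume coordinates have already been cleaned of low-confidence points. They also assume a pixels-per-millimetre scale obtained from a calibration object. The function names are illustrative.

```python
import numpy as np


def centroid(keypoints):
    """Mean position over keypoints: (n_frames, n_keypoints, 2) -> (n_frames, 2)."""
    return np.nanmean(np.asarray(keypoints, float), axis=1)


def path_length(xy, px_per_mm=1.0):
    """Total distance traveled along an (n_frames, 2) trajectory, in mm."""
    steps = np.diff(np.asarray(xy, float), axis=0)
    return float(np.linalg.norm(steps, axis=1).sum() / px_per_mm)


def speed(xy, fps, px_per_mm=1.0):
    """Frame-to-frame speed in mm/s (n_frames - 1 values)."""
    steps = np.diff(np.asarray(xy, float), axis=0)
    return np.linalg.norm(steps, axis=1) * fps / px_per_mm


def joint_angle(a, b, c):
    """Angle (degrees) at keypoint b between segments b->a and b->c; works per frame."""
    v1 = np.asarray(a, float) - np.asarray(b, float)
    v2 = np.asarray(c, float) - np.asarray(b, float)
    cos = (v1 * v2).sum(axis=-1) / (np.linalg.norm(v1, axis=-1) * np.linalg.norm(v2, axis=-1))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))
```

Frame-to-frame differences amplify residual tracking jitter. Smoothing the trajectory before computing speed or acceleration therefore usually gives more stable estimates.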

Quantitative Comparison of Applications

Table 1: Comparative Analysis of Methodological Applications

| Application Characteristic | Manual Scoring | DeepLabCut (DLC) | Rationale for Traditional Use |
|---|---|---|---|
| Throughput | Low to medium (scoring takes roughly 1-10x the video's duration) | High (roughly 100-1000x faster than manual scoring after training) | DLC automates frame-by-frame analysis. |
| Objectivity & Bias | Prone to intra-/inter-observer bias | High objectivity once trained; model is consistent. | DLC removes subjective human judgment from tracking. |
| Initial Setup Time | Low (ethogram only) | High (labeling frames, training network) | Manual scoring requires no model training. |
| Cost per Experiment | High (personnel time) | Low (computational cost) | DLC amortizes initial cost over many videos. |
| Behavioral Complexity | Excellent for novel, complex sequences | Limited to predefined points/actions; requires training data. | Human cognition excels at parsing unstructured behavior. |
| Data Output | Categorical counts, latencies, durations | Quantitative coordinates (x,y), probabilities, derived metrics. | DLC outputs continuous numerical data suitable for kinematics. |
| Protocol Flexibility | High (ethogram can be adjusted on-the-fly) | Low (requires retraining for new body parts/views) | Changing a manual scoring sheet is faster than retraining a network. |

Experimental Protocols for Key Comparative Studies

Protocol: Validating DeepLabCut Against Manual Scoring

Aim: To establish DLC as a valid replacement for manual scoring in a specific task (e.g., measuring rearing duration in an open field test).

Materials: Video recordings (N=50, 5-min each), DLC software, manual scoring software (e.g., BORIS, Solomon Coder), statistical software.

Procedure:

- Manual Ground Truth Generation:

- Two blinded, trained observers score all videos using a predefined ethogram (e.g., "rear": both forepaws lifted from ground).

- Calculate inter-rater reliability (ICC or Cohen's Kappa >0.8 is acceptable).

- Resolve discrepancies via consensus to create a single ground-truth dataset.

- DLC Model Training & Inference:

- Extract 100-200 frames from a separate training video set.

- Manually label body parts (snout, left/right forepaw, tail base) on these frames.

- Train a ResNet-50-based DLC network until train/test error plateaus (<5-10 pixels).

- Apply the trained model to analyze the 50 test videos.

- Use DLC output to calculate rearing (e.g., snout coordinate rising above a threshold for >0.5s).

- Statistical Validation:

- Perform correlation analysis (Pearson's r) between manual and DLC-derived rearing durations.

- Use Bland-Altman plots to assess agreement and systematic bias.

- A high correlation (r > 0.95) and low bias indicate successful validation.
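The DLC-side rearing rule ("snout above a threshold for >0.5 s") can be implemented as thresholding followed by a minimum-bout-length filter. The numpy sketch below assumes a per-frame snout height in which larger values mean higher. With image coordinates, where y points down, that could be `floor_y - snout_y` from a side view. The threshold itself must be chosen and validated against the manual ground truth.

```python
import numpy as np


def rearing_bouts(height, fps, threshold, min_duration_s=0.5):
    """(start_frame, end_frame_exclusive) bouts with height > threshold lasting >= min_duration_s."""
    above = np.asarray(height, float) > threshold
    # Pad with zeros so bouts touching the first or last frame still produce edges
    edges = np.diff(np.concatenate(([0], above.astype(int), [0])))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    min_frames = int(np.ceil(min_duration_s * fps))
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_frames]


def rearing_duration_s(height, fps, threshold, min_duration_s=0.5):
    """Total rearing time in seconds, for comparison with manually scored durations."""
    bouts = rearing_bouts(height, fps, threshold, min_duration_s)
    return sum(e - s for s, e in bouts) / fps
```

At 10 fps, an 8-frame excursion above threshold counts as a 0.8 s rear, while a 3-frame excursion is discarded as shorter than 0.5 s.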

Protocol: Throughput and Sensitivity Comparison in a Drug Study

Aim: To compare the ability of each method to detect a dose-dependent drug effect on locomotion.

Materials: Rodent videos from a saline control group and two drug-dose groups (n=12/group), DLC, manual scoring setup.

Procedure:

- Blinded Analysis: Ensure all videos are coded and analyzed blind to treatment.

- Parallel Processing:

- Manual: A trained scorer quantifies locomotion in each video by counting line crossings on a 5x5 grid overlay (a proxy for distance traveled). Record time taken.

- DLC: A pre-trained DLC model (tracking 4 paws and tail base) analyzes videos. Compute total distance from the centroid track.

- Data Comparison:

- Time Efficiency: Record total personnel hours for manual scoring vs. compute + minimal human time for DLC.

- Statistical Sensitivity: Conduct a one-way ANOVA on the locomotion data from each method separately and compare the F-statistics, p-values, and effect sizes (e.g., eta-squared). The method that yields a lower p-value and higher F-statistic for the treatment effect is considered more sensitive in this context.

- Output: Report whether each method detected the effect and how much analyst time each required. For example, both methods may detect the effect while DLC requires 90% less human analyst time and additionally provides kinematic data (e.g., stride length).
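The F-statistic and eta-squared used in the sensitivity comparison can be computed from between- and within-group sums of squares. The numpy sketch below assumes one value per animal for each treatment group. For the p-value, `scipy.stats.f_oneway` returns both the F statistic and the p-value.

```python
import numpy as np


def one_way_anova(*groups):
    """F statistic and eta-squared for a one-way between-groups ANOVA.

    groups: one array of per-animal values (e.g., distance traveled) per treatment group.
    """
    groups = [np.asarray(g, float) for g in groups]
    values = np.concatenate(groups)
    grand_mean = values.mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_sq = ss_between / (ss_between + ss_within)  # share of variance explained by treatment
    return float(f_stat), float(eta_sq)
```

Running this separately on the manual grid-crossing counts and on the DLC-derived distances for the same animals gives directly comparable effect sizes. Eta-squared is dimensionless, so it can be compared even though the two measures have different units.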

Visualization of Method Selection Workflow

Diagram 1: Method Selection Logic for Behavior Analysis

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials for Comparative DLC vs. Manual Scoring Research

| Item | Function/Description | Example Vendor/Product |
|---|---|---|
| High-Speed Camera | Captures clear, high-resolution video essential for both manual scoring and training DLC models. Frame rate >30fps for rodent behavior. | FLIR, Basler |
| Ethogram Software | Enables systematic manual scoring with timestamped annotations, crucial for creating ground truth data. | BORIS, Solomon Coder, Noldus Observer XT |
| DeepLabCut Software | Open-source toolbox for markerless pose estimation. Core platform for automated tracking. | DeepLabCut (Mathis et al., Nature Neuroscience, 2018) |
| Labeling Tool (DLC) | Integrated within DLC for manually labeling body parts on extracted frames to generate training datasets. | DeepLabCut Labeling GUI |
| GPU Workstation | Accelerates the training of DeepLabCut models, reducing training time from days to hours. | NVIDIA RTX series with CUDA support |
| Statistical Software | For analysis of both manual and DLC-derived data, including reliability tests and effect size comparisons. | R, Python (SciPy/Statsmodels), GraphPad Prism |
| Behavioral Arena | Standardized testing environment (Open Field, Elevated Plus Maze) to ensure consistent video input for both methods. | Custom acrylic boxes, San Diego Instruments, Noldus |
| IR Illumination & Camera | For studies in dark/dim conditions, allows video capture without disturbing animal, usable by both methods. | Mightex Systems, FLOYD |

Implementing DeepLabCut: A Step-by-Step Guide for Your Lab

The quantification of animal behavior is a cornerstone of neuroscience and psychopharmacology research. The traditional method, manual scoring by trained observers, is time-consuming, prone to subjective bias, and has low throughput. The emergence of deep learning-based pose estimation tools like DeepLabCut (DLC) offers an automated, high-throughput, and objective alternative. This guide details the critical initial phase common to both methodologies: robust project setup, from video acquisition to body part definition. The foundational decisions made during this stage directly impact the validity and reproducibility of subsequent analysis, whether for training a DLC model or for establishing a reliable manual scoring protocol.

Video Data Acquisition: Principles and Protocols

High-quality video data is the essential raw material. The acquisition protocol must minimize variability unrelated to the behavior of interest.

Experimental Setup & Hardware

Research Reagent Solutions & Essential Materials

| Item | Function & Specification |
|---|---|
| High-Speed Camera | Captures motion with sufficient temporal resolution (e.g., 30-100+ fps). A global shutter is preferred to avoid rolling-shutter distortion of fast movements; short exposure times minimize motion blur. |
| Consistent Lighting | LED panels provide stable, flicker-free illumination to minimize shadows and ensure consistent contrast across sessions. |
| Behavioral Arena | Environment with high-contrast, non-reflective, and uniform backdrop (e.g., matte white/black acrylic) to separate animal from background. |
| Synchronization Hardware | For multi-camera 3D reconstruction, use TTL pulses or GPIO triggers to synchronize camera frames precisely. |
| Calibration Object | Checkerboard or ChArUco board of known dimensions for camera calibration and scaling pixels to real-world units (mm). |

Acquisition Protocol

- Camera Calibration: Record the calibration object from multiple angles. For 3D, capture it simultaneously with all synchronized cameras.

- Subject Preparation: DeepLabCut is markerless, so ensure the natural anatomical features to be labeled are clearly visible. Apply unique, high-contrast markers only when they are needed, e.g., to identify individual animals during manual tracking.

- Session Recording: Record all trials/sessions under identical lighting and camera settings. Include a period of no subject movement for background estimation if needed.

- Metadata Logging: Document all parameters: fps, resolution, date, subject ID, treatment, and any technical anomalies.
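For a single top-down camera, the simplest use of the calibration recording is a pixels-per-millimetre scale. It is derived from the spacing of detected checkerboard corners, whose square size is known. The sketch below assumes the corner coordinates are already available (OpenCV's `cv2.findChessboardCorners` can detect them). It also assumes the board lies in the arena floor plane and that lens distortion is negligible. Full intrinsic calibration (`cv2.calibrateCamera`) or DeepLabCut's 3D calibration tools are needed when those assumptions fail.

```python
import numpy as np


def px_per_mm_from_corners(corners_px, square_mm):
    """Pixels per millimetre from consecutive checkerboard corners along one row.

    corners_px: (n, 2) pixel coordinates of adjacent inner corners in one row.
    square_mm: known edge length of one checkerboard square in mm.
    """
    steps = np.diff(np.asarray(corners_px, float), axis=0)
    return float(np.linalg.norm(steps, axis=1).mean() / square_mm)


def px_to_mm(distance_px, px_per_mm):
    """Convert a pixel distance to millimetres using the calibration scale."""
    return distance_px / px_per_mm
```

For instance, corners spaced 20 px apart on a board with 5 mm squares give 4 px/mm, so a 100 px displacement corresponds to 25 mm.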

Defining Body Parts and Creating a Labeled Dataset

The definition of biologically relevant keypoints is a conceptual bridge between raw video and quantifiable behavior.

Anatomical Keypoint Selection

Select keypoints that are:

- Anatomically Meaningful: Correspond to well-defined joints or anatomical landmarks (e.g., snout, base of tail, wrist, ankle).

- Consistently Visible: Should not be occluded for long periods under normal conditions.

- Relevant to Behavior: Chosen based on the specific behavioral phenotypes under investigation (e.g., digit joints for fine motor control, ear position for affective state).

Comparative Framework: Manual vs. DLC Workflow

The process diverges significantly after keypoint definition.

Table 1: Workflow Comparison After Project Setup

| Phase | Manual Scoring (e.g., BORIS, Noldus Observer XT) | DeepLabCut-Based Workflow |
|---|---|---|
| Annotation | Observer manually scores discrete behavioral states (e.g., "rearing," "grooming") based on visual assessment of posture and movement, live or from video. | Human annotators manually label (x, y) coordinates of defined keypoints on a subset of video frames (the "training set"). |
| Core Task | Pattern recognition and classification by a human expert. | Generation of a ground truth dataset to train a convolutional neural network. |
| Output | Time-series of behavioral events and states. | A trained model that can predict keypoint locations on novel, unseen videos automatically. |
| Throughput | Low. Scoring is real-time or slower. | Very high after training. Inference can run faster than real-time on batches of videos. |
| Scalability | Poor, limited by human hours. | Excellent, easily applied to large-scale studies. |

Protocol for Creating a DLC Training Dataset

- Extract Frames: From multiple videos representing different subjects, lighting, and behaviors, extract a diverse set of frames (~100-1000).

- Label Frames: Using the DLC GUI, annotate each defined body part on every extracted frame. Multiple annotators can be used to compute inter-rater reliability.

- Create Training Dataset: The labeled frames are split into training (~90%) and test (~10%) sets. The test set is held out to evaluate model performance later.
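In DeepLabCut's Python API, the steps above map onto a short sequence of calls. The function below is a sketch of a single-animal project using deeplabcut 2.x function names. The argument values are illustrative, and the wrapper `run_dlc_pipeline` is hypothetical. In practice these steps are usually run one at a time (for example, in a notebook), because `config.yaml` must be edited to list body parts after project creation and labeling is interactive. Check the calls against the documentation for your installed version.

```python
def run_dlc_pipeline(project, experimenter, videos, videotype=".mp4", working_directory=None):
    """Single-animal DeepLabCut workflow from project creation to filtered predictions."""
    import deeplabcut  # imported inside the function so this file loads without DLC installed

    config = deeplabcut.create_new_project(
        project, experimenter, videos, working_directory=working_directory, copy_videos=False
    )
    # Pause here in practice: list the body parts under `bodyparts` in config.yaml.
    deeplabcut.extract_frames(config, mode="automatic", algo="kmeans", userfeedback=False)
    deeplabcut.label_frames(config)  # opens the interactive labeling GUI
    # The train/test split follows TrainingFraction in config.yaml
    deeplabcut.create_training_dataset(config, net_type="resnet_50")
    deeplabcut.train_network(config, shuffle=1)
    deeplabcut.evaluate_network(config, plotting=True)  # reports train and test pixel error
    deeplabcut.analyze_videos(config, videos, videotype=videotype, save_as_csv=True)
    deeplabcut.filterpredictions(config, videos, videotype=videotype, filtertype="median")
    return config
```

For the optional iterative refinement step, `deeplabcut.extract_outlier_frames`, `deeplabcut.refine_labels` and `deeplabcut.merge_datasets` are followed by re-creating the training dataset and retraining.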

The diagram below outlines the high-level logical pathway from experimental design to data analysis, highlighting the divergence point between manual and automated approaches.

Diagram: Workflow from Video Acquisition to Behavioral Analysis

Quantitative Considerations for Project Setup

Table 2: Key Quantitative Parameters for Project Setup

| Parameter | Typical Range / Consideration | Impact on Analysis |
|---|---|---|
| Frame Rate | 30-120 Hz (or higher for fast movements like rodent whisking or Drosophila wingbeats). | Determines temporal resolution of tracked motion. Must satisfy the Nyquist criterion: the sampling rate must exceed twice the highest frequency present in the behavior. |
| Spatial Resolution | Sufficient pixels per subject body length (e.g., >50 pixels for rodent snout-to-tail base). | Higher resolution improves annotation accuracy and model precision for small keypoints. |
| Number of Keypoints | 5-20 for rodent whole-body; can be >50 for detailed facial or limb analysis. | Increases annotation time for the DLC training set. More keypoints enable richer behavioral description but may require more training data. |
| Training Frames for DLC | 100-1000 frames, drawn from multiple videos and animals. | More diverse frames generally lead to a more robust and generalizable model. |
| Inter-Rater Reliability (Manual) | Cohen's Kappa > 0.8 is considered excellent agreement. | Essential for validating manual scoring protocols and creating consensus ground truth for DLC. |
| DLC Model Performance | Train error < 5 pixels; test error < 10 pixels. A small gap between the two indicates the model is not memorizing its training frames. Absolute thresholds scale with image resolution. | Low error on held-out test frames indicates a reliable model for inference on new data. |
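Cohen's kappa corrects raw percent agreement for the agreement two raters would reach by chance. This is a minimal NumPy sketch for two raters' frame-by-frame categorical labels. The behavior codes in the example are hypothetical.

```python
import numpy as np

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical codes to the same items."""
    a = np.asarray(rater_a)
    b = np.asarray(rater_b)
    if a.shape != b.shape:
        raise ValueError("both raters must score the same items")
    categories = np.union1d(a, b)
    p_observed = np.mean(a == b)
    # Chance agreement: product of the two raters' marginal frequencies per category.
    p_expected = sum(np.mean(a == c) * np.mean(b == c) for c in categories)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return float((p_observed - p_expected) / (1.0 - p_expected))

a = ["groom", "groom", "rear", "rest", "rest", "rest"]
b = ["groom", "rear", "rear", "rest", "rest", "rest"]
print(round(cohens_kappa(a, b), 3))  # 0.739
```

The raters agree on 5 of 6 frames (83%), but kappa is only about 0.74 after the chance correction. That falls short of the > 0.8 criterion in the table.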

A meticulous project setup—encompassing standardized video acquisition and the careful definition of anatomically grounded body parts—is the non-negotiable foundation for both manual and automated behavior analysis. While the workflows diverge dramatically after this phase, the quality and consistency of this initial stage dictate the scientific rigor of all downstream results. In the context of DeepLabCut vs. manual scoring research, a well-executed setup allows for a fair, head-to-head comparison of accuracy, efficiency, and throughput, ultimately guiding researchers toward the most appropriate tool for their specific behavioral phenotyping question.

This technical guide details the core pipeline for training deep learning models for pose estimation, specifically within the context of comparative research between DeepLabCut (DLC) and traditional manual behavior scoring. For researchers and drug development professionals, automating behavior analysis offers transformative potential for high-throughput, objective, and reproducible quantification of phenotypes in preclinical studies. This document provides an in-depth examination of the labeling, training, and evaluation stages, supported by current experimental data and protocols.

The Labeling Phase: Foundational Data Curation

Objective: To generate high-quality, annotated training datasets from raw video frames.

Experimental Protocol (DLC Labeling):

- Frame Selection: Extract frames from videos that maximally represent the behavioral repertoire and anatomical variance. Current best practice utilizes k-means clustering on frame pixel intensities (Mathis et al., 2018) or posture-based clustering (Lauer et al., 2022) for efficient selection.

- Manual Annotation: A researcher uses the DLC GUI to place n anatomical keypoints (e.g., snout, left paw, tail base) on each selected frame. This creates a set of (x, y) coordinates per frame.

- Multi-Annotator Labeling: To estimate human annotation error (a critical baseline), multiple experimenters label the same set of frames. The variance between annotators serves as the benchmark for model performance.

- Dataset Assembly: Annotated frames are split into training (~90-95%) and test (~5-10%) sets, ensuring distinct videos or time segments are in each set to prevent data leakage.
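One way to keep distinct videos in the training and test sets is to split at the level of video IDs instead of individual frames. The NumPy sketch below is independent of DLC. It assumes each labeled frame carries the ID of its source video. The function name and the 10% default are illustrative.

```python
import numpy as np

def split_by_video(video_ids, test_fraction=0.1, seed=0):
    """Return boolean (train, test) masks such that no video contributes to both sets."""
    video_ids = np.asarray(video_ids)
    videos = np.unique(video_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(videos)
    # The fraction applies to videos, so the frame-level split is only approximate.
    n_test = max(1, int(round(test_fraction * len(videos))))
    test_mask = np.isin(video_ids, videos[:n_test])
    return ~test_mask, test_mask
```

Because frames from the same video are highly correlated, a random frame-level split can make test error look better than the model's true performance on unseen recordings.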

Key Reagent Solutions & Materials:

| Item | Function |
|---|---|
| DeepLabCut (v2.3+) | Open-source software toolkit for markerless pose estimation. Provides GUI for labeling and API for training. |
| High-Speed Camera | Captures motion at sufficient frame rate (e.g., 100-500 fps) to resolve rapid behavioral kinematics. |
| Consistent Lighting | Controlled illumination to minimize shadows and ensure consistent video quality across sessions. |
| Labeling GUI (DLC) | Interactive tool for precise placement of keypoints on extracted video frames. |

Diagram: Workflow for creating a labeled pose estimation dataset.

Network Training: Model Optimization

Objective: To train a convolutional neural network (CNN) to predict keypoint locations from new, unlabeled frames.

Experimental Protocol (DLC Training):

- Network Selection & Initialization: DLC typically employs a pre-trained ResNet-50 or EfficientNet backbone for feature extraction, with deconvolution layers for upsampling. Weights are initialized from models pre-trained on ImageNet.

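- Configuration: Define project parameters in the project's config.yaml file, including the list of body parts, which sets the number of keypoints. In DeepLabCut 2.x, training hyperparameters are set in the model's pose_cfg.yaml. These include batch size, optimizer (e.g., Adam) and learning-rate schedule. The number of training iterations is chosen when training is launched.

- Training Loop: For each iteration, the network: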

- Takes a batch of augmented training images (random rotations, cropping, lighting changes).

- Predicts n heatmaps (one per keypoint), where each heatmap's peak corresponds to the predicted location.

- Calculates a loss between predicted and ground-truth heatmaps (e.g., a per-pixel cross-entropy or mean squared error).

- Updates weights via backpropagation.

- Checkpointing: Model weights are saved periodically for evaluation.
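The heatmap idea in the training loop can be made concrete. Each keypoint's training target is a 2D Gaussian centered on the human label, and the predicted location is read off as the heatmap's peak. The NumPy sketch below illustrates this with an MSE loss. It is not DLC's implementation, which predicts heatmaps at reduced resolution (a stride) and adds location-refinement offsets to recover sub-stride precision.

```python
import numpy as np

def gaussian_heatmap(height, width, x, y, sigma=2.0):
    """Target heatmap for one keypoint: a 2D Gaussian centered on the label (x, y)."""
    ys, xs = np.mgrid[0:height, 0:width]
    return np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))

def decode_peak(heatmap):
    """Predicted keypoint location: the (x, y) position of the heatmap maximum."""
    row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    return int(col), int(row)

def mse_loss(predicted, target):
    """Mean squared error between predicted and target heatmaps."""
    return float(np.mean((np.asarray(predicted) - np.asarray(target)) ** 2))

target = gaussian_heatmap(64, 48, x=10, y=30)
print(decode_peak(target))  # (10, 30)
```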

Training Data (Quantitative Summary):

| Parameter | Typical Value/Range | Purpose |
|---|---|---|
| Training Frames | 100 - 500 | Balances model generalization and labeling effort. |
| Training Iterations | 50,000 - 200,000 | Steps until loss convergence. |
| Batch Size | 1 - 16 | Limited by GPU memory. |
| Initial Learning Rate | 0.0001 - 0.001 | Controls step size of weight updates. |
| Human Error Benchmark | ~2-5 pixels (varies by keypoint) | Target for model performance. |

Diagram: Simplified architecture of a DeepLabCut pose estimation network.

Evaluation: Benchmarking Against Manual Scoring

Objective: To rigorously assess model accuracy, reliability, and practical utility compared to manual scoring.

Experimental Protocol (Model Evaluation):

- Inference on Test Set: Use the trained model to predict keypoints on the held-out test set of labeled frames.

- Quantitative Accuracy Metrics:

- Mean Pixel Error: Euclidean distance (in pixels) between predicted and human-labeled keypoints.

- RMSE (Root Mean Square Error): Aggregated error across all keypoints and test frames.

- Comparison to Human Error: Model error is compared to the inter-annotator disagreement (the standard deviation among multiple human labelers). A well-trained model performs at or below human error.

- Qualitative & Behavioral Metrics:

- Trajectory Smoothness: Visual inspection of keypoint paths over time for physiological plausibility.

- Downstream Task Performance: The ultimate test. Use DLC-derived features (e.g., velocity, distance) to classify behaviors (e.g., "rearing", "grooming") and compare the classification accuracy and inter-rater reliability to that achieved using manually scored features.
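The accuracy metrics above reduce to a few lines of NumPy. The sketch below assumes predictions and labels are arrays of shape (frames, keypoints, 2). As a simple proxy for human error, it uses the mean distance between two annotators' labels of the same frames. The standard deviation across several annotators, as described above, is an equally valid choice.

```python
import numpy as np

def keypoint_errors(pred, truth):
    """Euclidean error (pixels) per frame and keypoint; inputs are (frames, keypoints, 2)."""
    diff = np.asarray(pred, dtype=float) - np.asarray(truth, dtype=float)
    return np.linalg.norm(diff, axis=-1)

def summarize_errors(pred, truth):
    """Mean pixel error and RMSE over all keypoints and frames (NaN labels ignored)."""
    err = keypoint_errors(pred, truth)
    return {
        "mean_pixel_error": float(np.nanmean(err)),
        "rmse": float(np.sqrt(np.nanmean(err ** 2))),
    }

def human_error(annotator_a, annotator_b):
    """Inter-annotator disagreement in the same units (proxy benchmark for the model)."""
    return float(np.nanmean(keypoint_errors(annotator_a, annotator_b)))
```

A model meets the benchmark when `summarize_errors(model_pred, labels)["mean_pixel_error"]` is at or below `human_error(labels_a, labels_b)`.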

Comparative Performance Data:

| Metric | DeepLabCut (Typical Result) | Manual Scoring (Typical Characteristic) | Implication for Research |
|---|---|---|---|
| Keypoint Error | 2-10 pixels (often ≤ human error) | Subjective; inter-rater SD 2-5+ pixels | DLC provides objective, replicable measurements. |
| Throughput | High (minutes for 1 h video post-training) | Very Low (hours-days for 1 h video) | Enables large-N studies & high-content screening. |
| Fatigue Effect | None | Significant (scoring drift over time) | Eliminates a major source of experimental bias. |
| Feature Richness | High (full kinematic time series) | Low (often binary or count-based) | Uncovers subtle, continuous behavioral phenotypes. |

Diagram: The evaluation pipeline for a trained pose estimation model.

The training pipeline forms the cornerstone of reliable, automated behavior analysis. It runs from meticulous labeling and iterative network optimization to rigorous, multi-faceted evaluation. Within the DeepLabCut vs. manual scoring thesis, this pipeline shows that a well-trained model can match human labeling accuracy. It also surpasses manual scoring in throughput, consistency and analytical depth. For drug development, this translates to a scalable tool for discovering subtle behavioral biomarkers and assessing therapeutic efficacy with greater objectivity.

The quantification of animal behavior is a cornerstone of neuroscience and psychopharmacology research. Historically, manual annotation by trained observers has been the gold standard. However, this approach is low-throughput, subjective, and suffers from inter-rater variability. The advent of deep learning-based markerless pose estimation tools, such as DeepLabCut (DLC), has revolutionized the field by enabling automated, high-precision tracking of animal body parts. The core thesis of our broader research is to critically evaluate the performance, efficiency, and translational utility of DLC against traditional manual scoring in the context of preclinical drug development. This guide focuses on the critical next step: transforming raw coordinate outputs from tools like DLC into meaningful, interpretable kinematic features and metrics that reliably quantify behavior and its modulation by pharmacological agents.

From Raw Poses to Kinematic Features: Processing Pipelines

Raw DLC output provides (x, y) coordinates and a likelihood estimate for each tracked body part per video frame. These data require rigorous processing before feature extraction.

Key Processing Steps:

- Likelihood Filtering: Coordinates whose likelihood falls below a confidence threshold (e.g., p < 0.9) are discarded. The resulting gaps are filled by interpolation or smoothing.

- Smoothing: A low-pass filter (e.g., Savitzky-Golay) is applied to suppress high-frequency jitter in the frame-by-frame keypoint estimates.

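- Centering and Alignment: Coordinates are often re-expressed relative to a reference point and rotated to a reference direction, removing the influence of arena position and heading. The reference point can be the arena center, or the animal's own body center for egocentric posture measures.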

- Derivative Calculation: Velocity and acceleration are computed through finite differencing of smoothed position data.

Experimental Protocol for Pose Processing:

- Input: CSV or HDF5 files from DeepLabCut analysis.

- Tools: Custom Python scripts (using NumPy, SciPy, pandas) or specialized packages (e.g., SimBA, DeepEthogram).

- Procedure:

- Load pose data for all subjects and trials.

- Apply likelihood filter: set coordinates with p < threshold to NaN.

- Interpolate missing values using linear or spline interpolation.

- Apply a Savitzky-Golay filter (window length=5-21 frames, polynomial order=2-3) to smooth x and y trajectories independently.

- Calculate speed for each body point: $\text{speed}(t) = \sqrt{(dx/dt)^2 + (dy/dt)^2}$.

- Output a cleaned, derived data structure for downstream feature extraction.
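The procedure above can be sketched for a single body part with NumPy and SciPy. DLC's CSV/HDF5 output holds one (x, y, likelihood) column triplet per body part. Reading those columns into arrays, for example with pandas, is omitted here. The defaults `p_cutoff=0.9`, `window=11` and `polyorder=3` are assumptions chosen from the ranges quoted above.

```python
import numpy as np
from scipy.signal import savgol_filter

def clean_trajectory(x, y, likelihood, p_cutoff=0.9, window=11, polyorder=3):
    """Likelihood-filter, interpolate, and smooth one body part's trajectory."""
    x = np.asarray(x, dtype=float).copy()
    y = np.asarray(y, dtype=float).copy()
    bad = np.asarray(likelihood) < p_cutoff
    x[bad] = np.nan  # low-confidence coordinates are treated as missing
    y[bad] = np.nan
    good = ~bad
    if good.sum() < 2:
        raise ValueError("too few confident frames to interpolate")
    frames = np.arange(len(x))
    # Linear interpolation across gaps; gaps at the edges take the nearest valid value.
    x = np.interp(frames, frames[good], x[good])
    y = np.interp(frames, frames[good], y[good])
    # Savitzky-Golay smoothing; window_length must be odd and greater than polyorder.
    x = savgol_filter(x, window_length=window, polyorder=polyorder)
    y = savgol_filter(y, window_length=window, polyorder=polyorder)
    return x, y

def speed(x, y, fps):
    """Instantaneous speed (pixels/s) from central finite differences."""
    dt = 1.0 / fps
    return np.sqrt(np.gradient(x, dt) ** 2 + np.gradient(y, dt) ** 2)
```

Interpolating before smoothing matters. The Savitzky-Golay filter cannot handle NaNs, and an unfiltered low-likelihood outlier would be smeared into neighboring frames.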

Pose Data Preprocessing Workflow

Core Kinematic Feature Taxonomies and Their Metrics

Kinematic features can be categorized into several classes. The table below summarizes key metrics pertinent to rodent behavioral studies.

Table 1: Taxonomy of Key Kinematic Features and Metrics

| Feature Category | Example Metrics | Description & Calculation | Relevance in Drug Studies |
|---|---|---|---|
| Locomotion | Total Distance Travelled | Sum of Euclidean distances between successive body center locations. | General activity, sedative or stimulant effects. |
| | Average Speed | Mean of the instantaneous speed of the body center. | Motor coordination, fatigue. |
| | Mobility/Bout Analysis | Number, mean duration, and distance of movement bouts (speed > threshold). | Fragmentation of behavior, motivational state. |
| Posture & Shape | Body Length | Distance between snout and tail base. | Stretch/contraction, defensive flattening. |
| | Body Curvature | Angular deviation along the spine (e.g., snout, mid-back, tail base). | Anxiety (curved spine), approach behavior. |
| | Paw Spread | Distance between left and right fore/hind paws. | Ataxia, gait abnormalities. |
| Gait & Dynamics | Stride Length | Distance between successive paw placements of the same limb. | Motor control, Parkinsonian models. |
| | Swing/Stance Phase | Duration paw is in air vs. on ground during a step cycle. | Neuromuscular integrity. |
| | Base of Support | Area of the polygon formed by all four paws. | Balance and stability. |
| Exploration & Orienting | Head Direction | Angle of a head vector ending at the snout, relative to a reference direction. | Attentional focus. |
| | Rearing Height | Vertical position of the snout or head. Requires a side view; in image coordinates, y increases downward. | Exploratory drive. |
| | Micro-movements | Variance of paw or snout position during immobility. | Tremor, parkinsonism. |
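Several metrics in Table 1 follow directly from cleaned coordinates. This NumPy sketch assumes (frames, 2) arrays per body part. The spine angle is one possible operationalization of body curvature, measured at the mid-back between snout and tail base.

```python
import numpy as np

def total_distance(cx, cy):
    """Sum of Euclidean steps between successive body-center positions (pixels)."""
    return float(np.sum(np.hypot(np.diff(cx), np.diff(cy))))

def body_length(snout, tail_base):
    """Frame-wise snout-to-tail-base distance."""
    return np.linalg.norm(np.asarray(snout, float) - np.asarray(tail_base, float), axis=-1)

def spine_angle_deg(snout, mid_back, tail_base):
    """Angle at the mid-back between snout and tail base; 180 degrees = straight spine."""
    v1 = np.asarray(snout, float) - np.asarray(mid_back, float)
    v2 = np.asarray(tail_base, float) - np.asarray(mid_back, float)
    cos = np.sum(v1 * v2, axis=-1) / (np.linalg.norm(v1, axis=-1) * np.linalg.norm(v2, axis=-1))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

def movement_bouts(speed, threshold):
    """(start, end) frame indices of runs with speed > threshold; end is exclusive."""
    moving = np.concatenate(([False], np.asarray(speed) > threshold, [False]))
    edges = np.flatnonzero(np.diff(moving.astype(int)))
    return [(int(s), int(e)) for s, e in zip(edges[0::2], edges[1::2])]
```

Bout number, mean bout duration (`end - start` divided by the frame rate) and distance per bout all follow from the list returned by `movement_bouts`.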

Experimental Protocol for Gait Analysis:

- Objective: Quantify stride length and swing/stance phases from paw tracking data.

- Procedure:

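- Identify Stride Cycles: For each paw, detect successive "stance onsets". These are the frames where the paw is confidently tracked (high likelihood) and its speed drops to near zero as it is placed on the ground. In a side view, the equivalent criterion is vertical velocity nearing zero.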

- Stride Length: Calculate the 2D distance the body center travels between two stance onsets of the same paw.

- Swing/Stance Segmentation: Within a cycle, stance phase is the period the paw is stationary on the ground (low speed); swing phase is the period of movement between stance periods.

- Aggregate Metrics: Compute mean stride length and mean swing/stance ratio across all cycles for a subject/trial.
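The gait protocol can be sketched in NumPy. The stance criterion here is paw speed below a threshold, an assumption that suits top- or bottom-view recordings. The likelihood check is left out for brevity. `stance_threshold` is a hypothetical parameter that would be tuned per setup.

```python
import numpy as np

def stance_onsets(paw_speed, stance_threshold):
    """Frames where a paw switches from moving (swing) to stationary (stance)."""
    stationary = np.asarray(paw_speed) < stance_threshold
    return np.flatnonzero(stationary[1:] & ~stationary[:-1]) + 1

def gait_metrics(paw_speed, center_xy, stance_threshold):
    """Mean stride length and mean swing/stance duration ratio over complete cycles.

    Stride length is the body-center travel between successive stance onsets of the
    same paw. Returns NaN values (with a warning) if fewer than two onsets are found.
    """
    paw_speed = np.asarray(paw_speed, dtype=float)
    center_xy = np.asarray(center_xy, dtype=float)
    onsets = stance_onsets(paw_speed, stance_threshold)
    strides, ratios = [], []
    for start, end in zip(onsets[:-1], onsets[1:]):
        strides.append(np.linalg.norm(center_xy[end] - center_xy[start]))
        stance = np.sum(paw_speed[start:end] < stance_threshold)  # >= 1 by construction
        swing = (end - start) - stance
        ratios.append(swing / stance)
    return float(np.mean(strides)), float(np.mean(ratios))
```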

DLC vs. Manual Scoring: A Quantitative Comparison

Our thesis research directly compares kinematic features derived from DLC with manual scoring outcomes. The following table synthesizes findings from recent literature and our internal validation studies.

Table 2: Comparative Analysis: DLC vs. Manual Behavior Scoring

| Parameter | DeepLabCut (DLC) | Manual Scoring | Implications for Research |
|---|---|---|---|
| Throughput | High (Batch processing of 100s of videos) | Very Low (Real-time or slowed playback) | DLC enables large-N studies and high-content phenotyping. |
| Inter-Rater Reliability | Deterministic (a trained model returns identical output when re-run on the same video) | Variable (ICC typically 0.7-0.9) | DLC reduces a source of experimental noise and bias. |
| Temporal Resolution | Frame-level (e.g., 30 Hz) | Limited by human perception (~1-4 Hz) | DLC captures micro-kinematics and sub-second behavioral dynamics. |
| Feature Richness | High (Full kinematic space) | Low (Limited to predefined ethograms) | DLC allows discovery of novel, quantifiable behavioral dimensions. |
| Initial Setup Cost | High (Labeling, training, compute) | Low (Protocol definition only) | DLC requires upfront investment, amortized over many experiments. |
| Objective Ground Truth | No (Dependent on human-labeled training frames) | Yes (Human observation is the traditional standard) | Careful training set construction is critical for DLC validity. |
| Sensitivity to Change | High (Can detect subtle kinematic shifts) | Moderate (May miss subtlety) | DLC may increase sensitivity to partial efficacy in drug screens. |

Comparative Research Workflow: DLC vs Manual

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Kinematic Feature Analysis

| Item / Reagent | Function in Analysis | Example Product / Software |
|---|---|---|
| DeepLabCut | Open-source toolbox for markerless pose estimation. Provides the foundational (x,y) data. | DeepLabCut 2.x (Mathis et al., Nature Neuroscience, 2018) |
| Behavioral Arena | Standardized experimental field for video recording. Minimizes environmental variance. | Noldus PhenoTyper, Med-Associates Open Field |
| High-Speed Camera | Captures video at sufficient frame rate (≥30 fps) and resolution for limb tracking. | Basler acA series, FLIR Blackfly S |
| Data Processing Suite | Libraries for smoothing, filtering, and calculating derivatives from pose data. | SciPy, NumPy (Python) |
| Specialized Analysis Package | Software offering pre-built pipelines for rodent kinematic feature extraction. | SimBA, DeepEthogram, MARS |
| Statistical Software | For analyzing extracted kinematic metrics (ANOVA, clustering, machine learning). | R, Python (scikit-learn, statsmodels), GraphPad Prism |

This guide details the technical integration of markerless pose estimation into behavioral neuroscience and psychopharmacology workflows. It is framed within a broader research thesis comparing DeepLabCut (DLC)—a leading open-source tool for markerless pose estimation—against traditional manual behavior scoring. The core thesis investigates whether the increased throughput and objectivity of DLC-based pipelines justify their computational and initial labeling overhead, especially for complex, ethologically relevant behavioral classification beyond basic posture tracking.

Foundational Workflow: From Video to Pose Keypoints

The initial integration phase involves transforming raw video data into quantified posture data.

Experimental Protocol 1: DeepLabCut Model Creation & Training

- Frame Selection: Extract frames (~200-1000) to represent the full behavioral repertoire and animal poses from all videos.

- Labeling: Manually annotate user-defined body parts (keypoints) on the extracted frames using the DLC GUI. This creates the "ground truth" dataset.

- Training: Configure a neural network (e.g., ResNet-50) as the feature extractor. The model is trained to predict the labeled keypoint locations, learning robust feature representations.

- Evaluation: The model is evaluated on a held-out set of labeled frames. The primary metric is the Root Mean Square Error (pixels) between the predicted and human-labeled keypoint locations.
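Continuing from a project whose training dataset already exists, steps 3 and 4 and subsequent inference map onto three DeepLabCut calls. The sketch assumes the DeepLabCut 2.x (TensorFlow) API, where `train_network` accepts `maxiters`. The value 200,000 is taken from the upper end of the iteration range quoted earlier. As before, the `dlc` parameter is a hypothetical testing hook.

```python
def train_evaluate_analyze(config_path, videos, dlc=None, maxiters=200_000):
    """Train, evaluate, and run inference for a DLC project (assumes the 2.x API)."""
    if dlc is None:
        import deeplabcut as dlc  # imported lazily; requires a DeepLabCut install

    # Train the network (ImageNet-pretrained backbone by default).
    dlc.train_network(config_path, maxiters=maxiters)

    # Report train/test pixel error on the held-out labeled frames.
    dlc.evaluate_network(config_path, plotting=True)

    # Inference: per-frame (x, y, likelihood) for each body part, also saved as CSV.
    dlc.analyze_videos(config_path, videos, save_as_csv=True)
```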

Table 1: Quantitative Comparison of Labeling Effort (Manual vs. DLC)

| Metric | Traditional Manual Scoring | DeepLabCut-Based Pipeline |
|---|---|---|
| Initial Time Investment | Low | High (Frame extraction & labeling) |
| Scoring Time per Video | Very High (Real-time or slower) | Very Low (Seconds to minutes for inference) |
| Inter-Rater Variability | High (Subject to human drift/fatigue) | Low (Model is consistent once trained) |
| Output Granularity | Often categorical/ethogram-based | High-resolution, continuous (X,Y) coordinates |
| Scalability for Long Durations | Poor | Excellent |

Title: DeepLabCut Training and Inference Workflow

Advanced Integration: From Pose to Behavioral Classification

The critical workflow extension uses pose data to classify discrete behavioral states (e.g., grooming, rearing, social interaction).

Experimental Protocol 2: Building a Supervised Behavioral Classifier

- Pose Data Preprocessing: Smooth keypoints (e.g., using a median filter). Calculate "features" such as distances between body parts, angles of joints, velocities, and accelerations.

- Ground Truth Labeling for Behavior: Manually score segments of video (e.g., using BORIS or Solomon Coder) to identify the onset/offset of target behaviors. This creates a time-series of behavioral labels.

- Feature-Alignment: Temporally align the calculated pose features with the behavioral labels.

- Classifier Training: Use the aligned data to train a supervised machine learning classifier (e.g., Random Forest, Gradient Boosting Machine, or simple neural network). The model learns the mapping between pose features and behavioral labels.

- Validation: Evaluate classifier performance using metrics like F1-score or accuracy on a held-out test set. Compare its agreement with human scorers against inter-human agreement.
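Protocol 2 is sketched below with scikit-learn. Manually scored events, given as (start seconds, stop seconds, behavior), are expanded to one label per video frame so they align with the per-frame pose features. A Random Forest is then trained on the early part of the session and scored by macro F1 on the held-out remainder. The temporal split (rather than a random one), the "other" background label and `n_estimators=100` are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score

def events_to_frame_labels(events, n_frames, fps, background="other"):
    """Expand manually scored (start_s, stop_s, behavior) events into per-frame labels."""
    labels = np.full(n_frames, background, dtype=object)
    for start_s, stop_s, behavior in events:
        start = int(round(start_s * fps))
        stop = min(int(round(stop_s * fps)), n_frames)
        labels[start:stop] = behavior
    return labels

def train_and_score(features, labels, test_fraction=0.25, seed=0):
    """Train on the first part of the session; return (classifier, macro F1 on the rest)."""
    n_train = int(len(labels) * (1 - test_fraction))
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(features[:n_train], labels[:n_train])
    predicted = clf.predict(features[n_train:])
    return clf, f1_score(labels[n_train:], predicted, average="macro")
```

Holding out a contiguous block of time avoids scoring the classifier on frames nearly identical to neighbors it was trained on. The same F1 computation can be applied to two human scorers to obtain the inter-human baseline.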

Table 2: Performance Metrics of DLC-Driven Classifiers vs. Manual Scoring (Representative Studies)

| Study Focus | Classifier Used | Behavioral States | Key Performance Metric (vs. Human) | Comparative Advantage |
|---|---|---|---|---|
| Mouse Social Behavior | Random Forest | Investigation, Close Contact, Fighting | F1-score > 0.95 | Detects subtle, fast transitions missed by manual scoring. |
| Rat Anxiety (EPM) | Gradient Boosting | Head Dip, Closed Arm Rearing | Agreement > 97% | Eliminates experimenter subjectivity, enables continuous measurement in home cage. |
| Marmoset Vocal & Motor | Neural Network | Grooming, Foraging, Resting | Accuracy ~ 92% | Enables simultaneous analysis of pose & audio, correlating motor and vocal states. |

Title: From Pose Features to Behavioral Classification

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for DLC-Based Behavioral Analysis

| Item | Function in Workflow | Key Considerations |
|---|---|---|
| High-Speed Camera | Captures motion with sufficient temporal resolution to avoid motion blur. | Frame rate (e.g., 30-100+ fps) must match behavior speed. Global shutter preferred. |
| Uniform Illumination | Provides consistent lighting to minimize video artifacts and shadows. | Crucial for robust model performance; IR lighting for dark cycle recording. |
| DeepLabCut Software Suite | Open-source platform for creating and training pose estimation models. | Choice of backbone network (e.g., ResNet, EfficientNet) balances speed/accuracy. |
| Labeling Tool (DLC GUI) | Enables efficient manual annotation of keypoints on training frames. | Multi-user labeling features can speed up ground truth creation. |
| Feature Calculation Library (e.g., scikit-learn, NumPy) | Transforms keypoints into derived features for behavioral classification. | Custom feature design is critical for capturing ethological relevance. |
| Behavioral Classifier Library (e.g., scikit-learn, TensorFlow) | Provides algorithms for supervised classification of behavioral states. | Random Forests often perform well with limited training data. |
| Manual Scoring Software (e.g., BORIS, Solomon Coder) | Creates the essential ground truth ethograms for classifier training/validation. | Must support precise frame-level or event-based logging for alignment. |
| High-Performance Workstation/GPU | Accelerates model training and inference on large video datasets. | GPU (NVIDIA) is essential for practical training times. |

Overcoming Hurdles: Optimizing DeepLabCut Performance and Reliability

In comparative studies between DeepLabCut (DLC) and manual behavior scoring, three persistent pitfalls—poor lighting, occlusions, and similar-appearing animals—critically influence data fidelity and methodological validity. These challenges are not merely operational nuisances but represent fundamental sources of systematic error that can bias experimental outcomes, compromise reproducibility, and lead to erroneous conclusions in behavioral neuroscience and psychopharmacology. This technical guide dissects these pitfalls, providing current data, mitigation protocols, and visualization tools essential for rigorous research.

Quantitative Analysis of Pitfall Impact

Recent studies (2023-2024) have systematically quantified the error introduced by these common pitfalls in markerless pose estimation. The data below summarizes key findings.

Table 1: Impact of Common Pitfalls on DeepLabCut Performance vs. Manual Scoring

| Pitfall | Scenario | DLC Error (pixels, Mean ± SD) | Manual Scoring Reliability (Inter-rater ICC) | Key Metric Affected | Reference (Year) |
|---|---|---|---|---|---|
| Poor Lighting | Low contrast (< 50 lux) | 15.2 ± 4.7 | 0.65 [0.51, 0.77] | Root Mean Square Error (RMSE) | Lauer et al. (2023) |
| Poor Lighting | Dynamic shadows | 22.1 ± 8.3 | 0.58 [0.42, 0.71] | Confidence Score (Likelihood) | Mathis Lab (2024) |
| Occlusions | Partial body (e.g., by object) | 18.9 ± 6.5 | 0.72 [0.63, 0.80] | Reprojection Error | Pereira et al. (2023) |
| Occlusions | Full social occlusion (mouse) | 35.4 ± 12.1 | 0.41 [0.30, 0.55] | Track Fragmentation | INSERM Study (2024) |
| Similar Animals | Identically colored mice | 12.8 ± 5.2* | 0.89 [0.85, 0.93] | Identity Swap Rate | Nath et al. (2023) |
| Similar Animals | Monomorphic fish schools | N/A (Track loss) | 0.95 [0.91, 0.98] | Tracklet Count | Sridhar et al. (2024) |

*Error increases during close contact. ICC = Intraclass Correlation Coefficient.

Experimental Protocols for Mitigation

Protocol A: Standardized Lighting Calibration for Behavioral Arenas

Objective: To minimize lighting-induced errors in both DLC training and manual scoring.

- Equipment: Digital lux meter, standardized grayscale and color checker chart (e.g., X-Rite ColorChecker), diffuse LED panels.

- Procedure:

a. Mount lighting panels at 45° angles to the arena plane to reduce glare and direct shadows.

b. Measure illuminance at 9 grid points within the empty arena. Adjust lighting until all points are within 50-100 lux and vary by <10%.

c. Place the calibration chart in the arena. Record a 30-second video.

d. For DLC: Extract frames and calculate the variance of histogram of oriented gradients (HOG) features. Accept if variance > threshold (e.g., 0.5).

e. For manual scoring: Ensure all raters pass a color discrimination test using the chart under the calibrated light.
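Step b's acceptance criterion can be checked automatically from the nine lux-meter readings. The protocol does not define "vary by <10%". The sketch below assumes (max - min) / mean. Other definitions, such as maximum deviation from the grid mean, are equally plausible and would need to be fixed in the lab's protocol.

```python
def lighting_acceptable(lux_readings, low=50.0, high=100.0, max_variation=0.10):
    """Check a 3x3 grid of illuminance readings against the Protocol A criteria.

    Returns (accepted, variation), where variation = (max - min) / mean is an
    assumed interpretation of "vary by <10%".
    """
    readings = [float(v) for v in lux_readings]
    if len(readings) != 9:
        raise ValueError("expected 9 grid readings")
    in_range = all(low <= v <= high for v in readings)
    mean = sum(readings) / len(readings)
    variation = (max(readings) - min(readings)) / mean
    return in_range and variation < max_variation, variation
```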

Protocol B: Multi-Camera Setup for Occlusion Resolution

Objective: To recover 3D pose and identity during object or social occlusions.

- Equipment: ≥2 synchronized high-speed cameras, calibration wand, DLC 3D project.

- Procedure:

a. Position cameras at orthogonal views (e.g., side and bottom) or at least 60° apart.

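b. Perform stereo calibration using a wand with two markers 100 mm apart. Achieve reprojection error < 2 pixels. DLC's built-in 3D calibration (calibrate_cameras) works from checkerboard images, so wand-based calibration requires a separate tool.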

c. Record behavior. Train separate DLC networks for each 2D view.

d. Use the triangulate function in DLC to compute 3D pose.

e. During occlusion in one view, use the 2D data from the clear view to inform and constrain the 3D reconstruction.
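DLC's triangulation runs on the camera calibration it stores. To show what the computation does, here is the standard linear (DLT) triangulation of one point from two calibrated views, plus the reprojection error used as an acceptance criterion in step b. This is a generic textbook method in NumPy, not DLC's internal code. The projection matrices are assumed to come from stereo calibration.

```python
import numpy as np

def triangulate_dlt(P1, P2, xy1, xy2):
    """Linear (DLT) triangulation of one point from two views.

    P1, P2: 3x4 camera projection matrices; xy1, xy2: the point's pixel coordinates.
    """
    P1 = np.asarray(P1, dtype=float)
    P2 = np.asarray(P2, dtype=float)
    x1, y1 = xy1
    x2, y2 = xy2
    A = np.array([
        x1 * P1[2] - P1[0],
        y1 * P1[2] - P1[1],
        x2 * P2[2] - P2[0],
        y2 * P2[2] - P2[1],
    ])
    # Homogeneous solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = np.asarray(P, dtype=float) @ np.append(X, 1.0)
    return x[:2] / x[2]

def reprojection_error(P, X, xy):
    """Pixel distance between the reprojected 3D point and the observed 2D point."""
    return float(np.linalg.norm(project(P, X) - np.asarray(xy, dtype=float)))
```

Two views are the minimum for triangulation. If a keypoint is occluded in one of only two cameras, that frame cannot be triangulated, which is an argument for adding a third camera where occlusions are frequent.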

Protocol C: Identity Tagging for Similar-Appearing Animals

Objective: To maintain individual animal identity across sessions and social interactions.

- Equipment: Subcutaneous RFID microchips (for rodents), unique visual markers (for non-mammals), multi-animal DLC (maDLC).

- Procedure:

a. Pre-marking: If possible, use gentle, non-toxic fur dyes (rodents) or elastomer tags (fish) to create unique visual identifiers.

b. Data Collection: Record video with RFID readers or under UV light if using fluorescent tags.

c. DLC Training: Label body parts for all animals in the frame. Use the maDLC pipeline, which incorporates an identity graph.

d. Post-processing: Apply tracking algorithms like Tracklets or use RFID timestamps to correct identity swaps in the DLC output.
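Identity swaps arise when frame-to-frame assignment of detections to animals goes wrong. The simplest form of that assignment matches each animal's previous centroid to the nearest current detection while minimizing total displacement, which is the Hungarian algorithm via SciPy. This is an illustrative baseline, not maDLC's tracker. It fails in exactly the close-contact case in Table 1, which is why RFID or visual markers are needed as identity ground truth.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def match_identities(prev_centroids, curr_centroids):
    """Assign current detections to previous identities by minimal total displacement.

    Returns `order` where curr_centroids[order[i]] continues identity i,
    or -1 if identity i has no matching detection in the current frame.
    """
    prev = np.asarray(prev_centroids, dtype=float)
    curr = np.asarray(curr_centroids, dtype=float)
    cost = np.linalg.norm(prev[:, None, :] - curr[None, :, :], axis=-1)
    rows, cols = linear_sum_assignment(cost)
    order = np.full(len(prev), -1, dtype=int)
    order[rows] = cols
    return order
```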

Visualizing Workflows and Relationships

Diagram 1: Pathway from Pitfall to Research Bias

Diagram 2: Identity Resolution Technical Pathways

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Mitigating Common Behavioral Analysis Pitfalls

| Item/Category | Specific Product/Technique | Function in Mitigation | Key Consideration |
|---|---|---|---|
| Calibrated Lighting | Programmable LED Panels (e.g., GVL224) | Provides uniform, flicker-free illumination adjustable for contrast optimization. | Must have high CRI (>90) and dimmable driver to maintain constant color temperature. |
| Spectral Markers | Fluorescent Elastomer Tags (Northwest Marine Tech) | Creates permanent, unique visual IDs for similar-appearing animals (fish, rodents). | Requires matching video filter; must be non-invasive and approved by IACUC. |
| Identification System | RFID Microchip & Reader (e.g., BioTherm) | Provides unambiguous identity ground truth for social occlusion scenarios. | Chip implantation is invasive; reader must be synchronized with video acquisition. |
| Multi-View Sync | Synchronization Hub (e.g., Neurotar SyncHub) | Precisely aligns frames from multiple cameras for 3D reconstruction to tackle occlusions. | Latency must be sub-millisecond; uses TTL or ethernet protocols. |
| Software Plugin | DeepLabCut-Live! & DLC 3D | Enables real-time pose estimation and 3D triangulation for immediate feedback on pitfall severity. | Requires significant GPU resources for low-latency processing. |
| Validation Standard | Anipose (3D calibration software) | Open-source tool for robust multi-camera calibration, critical for accurate 3D work. | More flexible than built-in DLC calibrator for complex camera arrangements. |

The pursuit of robust, generalizable machine learning models is a cornerstone of modern computational ethology. This quest is critically framed by the broader research thesis comparing automated pose estimation tools like DeepLabCut (DLC) against traditional manual behavior scoring. While DLC offers unprecedented throughput, its ultimate value in scientific and preclinical research hinges on the generalizability of its models—their ability to perform accurately across varied experimental conditions, animal strains, lighting, and hardware. This guide details technical strategies to build models that generalize beyond the specifics of their training data, thereby strengthening the validity of conclusions drawn in comparative studies with manual methods.

Core Challenges to Generalizability

A model trained on data from a single lab condition often fails when applied to new data due to:

- Covariate Shift: Changes in input data distribution (e.g., lighting, camera angle, background).

- Contextual Bias: Overfitting to lab-specific environmental cues or hardware artifacts.

- Subject Variability: Differences in animal morphology, coat color, or strain not represented in the training set.

Strategic Frameworks and Methodologies

Data-Centric Strategies

The foundation of generalizability is diverse, representative data.

Protocol 1: Multi-Condition Data Acquisition

- Objective: Capture a training dataset that inherently encompasses experimental variability.

- Methodology:

- Record videos across multiple sessions, days, and cohorts.

- Systematically vary non-experimental factors: lighting intensity/angle, camera positions (e.g., 45°, 90°), cage backgrounds.

- Include animals from different litters, and if applicable, both sexes and relevant genetic strains.

- Annotate frames (using DLC’s labeling interface) sampled proportionally from all varied conditions. A minimum of 200-500 annotated frames per condition is a common starting point.

Protocol 2: Strategic Data Augmentation

- Objective: Artificially increase data diversity during training to simulate unseen conditions.

- Methodology: Implement a real-time augmentation pipeline. Standard transforms include:

- Spatial: Rotation (±15°), Scaling (±10%), Horizontal Flip, Shear, Translation.

- Photometric: Adjustments to Brightness (±20%), Contrast, Hue, and Saturation.

- Advanced: Simulated motion blur, noise injection, and random occlusion (e.g., with synthetic objects).
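Spatial augmentations must move the labels together with the image. A horizontal flip also swaps left/right body-part names, otherwise "left paw" labels land on the right paw. This minimal NumPy sketch shows that bookkeeping plus one photometric transform. In practice, a library such as Albumentations (listed in this section's toolkit table) or DLC's built-in augmentation would handle both. The coordinate convention (pixel indices, flip matching `np.fliplr`) is an assumption to check against the image library in use.

```python
import numpy as np

def augment_keypoints(kp, image_size, angle_deg=0.0, scale=1.0, hflip=False, flip_pairs=()):
    """Apply to (n_keypoints, 2) labels the geometric transform applied to the image.

    Coordinates are pixel indices (x = column, y = row); rotation and scaling act
    about the image center, and the rotation sign must match the image library used.
    flip_pairs lists index pairs (e.g., left/right paw) whose names swap after a flip.
    """
    width, height = image_size
    center = np.array([(width - 1) / 2.0, (height - 1) / 2.0])
    theta = np.deg2rad(angle_deg)
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    out = (np.asarray(kp, dtype=float) - center) @ rot.T * scale + center
    if hflip:
        out[:, 0] = (width - 1) - out[:, 0]  # matches np.fliplr applied to the image
        for i, j in flip_pairs:
            out[[i, j]] = out[[j, i]]  # swap left/right labels
    return out

def adjust_brightness(image, factor):
    """Photometric augmentation: scale intensities and clip to the 8-bit range."""
    return np.clip(np.asarray(image, dtype=float) * factor, 0, 255).astype(np.uint8)
```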

Model-Centric Strategies

Protocol 3: Domain Randomization

- Objective: Force the model to learn invariant features by presenting wildly randomized training samples.

- Methodology:

- During training, apply extreme, non-physiological augmentations to the background (random color textures, patterns).

- Randomize lighting color temperature and direction aggressively.

- The model, unable to rely on consistent environmental features, learns to focus solely on the invariant geometry of the animal.
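Background randomization can be sketched in NumPy if a per-frame mask of the animal is available. How that mask is obtained, for example by background subtraction or segmentation, is an assumption outside this sketch. Every non-animal pixel is replaced with a random-color noise texture, and a random per-channel color cast mimics changes in lighting color temperature. Keypoint labels remain valid because the animal's geometry is untouched.

```python
import numpy as np

def randomize_background(image, animal_mask, rng):
    """Replace non-animal pixels with random texture and apply a random color cast.

    image: (H, W, 3) uint8 frame; animal_mask: (H, W) bool, True on the animal.
    """
    h, w, _ = image.shape
    base = rng.integers(0, 256, size=3)                # random base color
    noise = rng.integers(-60, 61, size=(h, w, 3))      # per-pixel texture
    texture = np.clip(base + noise, 0, 255).astype(np.uint8)
    out = image.copy()
    out[~animal_mask] = texture[~animal_mask]
    cast = rng.uniform(0.7, 1.3, size=3)               # global per-channel color cast
    return np.clip(out * cast, 0, 255).astype(np.uint8)
```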

Protocol 4: Test-Time Augmentation (TTA)

- Objective: Improve prediction stability on a single novel frame.

- Methodology:

- For a given test frame, generate multiple augmented versions (e.g., original, flipped, minor rotations).

- Pass each augmented version through the trained network.

- Average or take the median of the predicted keypoint locations across all augmentations to produce the final, more robust prediction.
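The three TTA steps are shown below for the simplest augmentation, a horizontal flip. `predict` stands for any function that maps an image to (n_keypoints, 2) pixel coordinates. It is a hypothetical placeholder, not a DLC function. Each augmented prediction must be mapped back through the inverse transform before aggregation. With only two versions the median equals the mean. Small rotations would be added the same way, each paired with its inverse.

```python
import numpy as np

def tta_predict(predict, image, flip_pairs=()):
    """Median keypoint estimate over the original and horizontally flipped image."""
    width = image.shape[1]
    preds = [np.asarray(predict(image), dtype=float)]
    flipped = np.asarray(predict(np.fliplr(image)), dtype=float).copy()
    flipped[:, 0] = (width - 1) - flipped[:, 0]  # map x back to the original frame
    for i, j in flip_pairs:                       # undo the left/right name swap
        flipped[[i, j]] = flipped[[j, i]]
    preds.append(flipped)
    return np.median(np.stack(preds), axis=0)
```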

Protocol 5: Leveraging Pretrained Models & Transfer Learning

- Objective: Utilize features learned from large, diverse image datasets (e.g., ImageNet).

- Methodology:

- Initialize the DLC network backbone (e.g., ResNet-50) with weights pretrained on ImageNet.

- Optionally freeze early layers that capture general features (edges, textures).

- Finetune only the later, task-specific layers on your ethological data. This is often more effective than training from scratch with limited data.

Quantitative Comparison of Strategies

Table 1: Impact of Generalization Strategies on Model Performance in a Cross-Condition Validation Study

| Strategy | Training Data Diversity | Mean Pixel Error (Train Set) | Mean Pixel Error (Held-Out Condition) | Relative Improvement vs. Baseline |
|---|---|---|---|---|
| Baseline (Single Condition) | Low | 4.2 px | 22.5 px | 0% |
| Multi-Condition Acquisition | High | 5.1 px | 8.7 px | 61% |
| Aggressive Augmentation | Medium (Synthetic) | 4.8 px | 12.4 px | 45% |
| Domain Randomization | Very High (Synthetic) | 6.3 px | 7.9 px | 65% |
| TTA (at inference) | N/A | 4.2 px | 18.1 px* | 20% |
| Pretrained Init. + Finetune | Medium (External) | 3.9 px | 10.2 px | 55% |

*Error reduced from 22.5px to 18.1px using TTA on the baseline model.
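The last column of Table 1 is the percentage reduction in held-out error relative to the 22.5 px baseline. Recomputing it from the table's own numbers shows how the column is derived:

```python
def relative_improvement(baseline_error, strategy_error):
    """Percent reduction in held-out error relative to the baseline model."""
    return 100.0 * (baseline_error - strategy_error) / baseline_error

held_out_error = {
    "Multi-Condition Acquisition": 8.7,
    "Aggressive Augmentation": 12.4,
    "Domain Randomization": 7.9,
    "TTA (at inference)": 18.1,
    "Pretrained Init. + Finetune": 10.2,
}
for name, err in held_out_error.items():
    print(f"{name}: {relative_improvement(22.5, err):.0f}%")
# Multi-Condition Acquisition: 61%
# Aggressive Augmentation: 45%
# Domain Randomization: 65%
# TTA (at inference): 20%
# Pretrained Init. + Finetune: 55%
```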

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Robust Pose Estimation Model Development

| Item / Solution | Function / Purpose |
|---|---|
| DeepLabCut (v2.3+) | Core open-source platform for markerless pose estimation. |
| TensorFlow / PyTorch | Backend deep learning frameworks for model training and inference. |
| DLC Model Zoo | Repository of pretrained models for transfer learning and benchmarking. |
| Labelbox / CVAT | Alternative annotation tools for scalable, collaborative frame labeling. |
| Albumentations Library | Advanced, optimized library for implementing complex data augmentations. |
| Synthetic Data Generators (e.g., Deep Fake Lab, Unity Perception) | Tools to generate photorealistic, annotated training data for domain randomization. |
| CalMS21 (Caltech Mouse Social Interactions dataset) | Benchmark dataset of tracked mouse poses with frame-level behavior annotations, useful for testing how well behavior models generalize. |

Visualizing the Workflow and Concept

Workflow for Building a Generalizable Pose Estimation Model

The Challenge of Domain Shift in Behavioral Analysis

Within the context of a broader thesis comparing DeepLabCut (DLC) to manual behavior scoring in biomedical research, understanding the computational infrastructure is critical for practical implementation, scalability, and reproducibility. This guide provides an in-depth technical analysis of the hardware requirements and processing speeds for DLC, a leading markerless pose estimation tool, and contrasts these with the implicit "hardware" of manual scoring.

Hardware Requirements: DeepLabCut vs. Manual Scoring

Quantitative hardware requirements for DLC are driven by the stages of its workflow: data labeling, model training, and inference (video analysis). Manual scoring primarily demands ergonomic setups for human observers. The following table summarizes key requirements.

Table 1: Comparative Hardware Requirements

| Component | DeepLabCut (Recommended) | Manual Scoring (Typical) | Function/Rationale |
|---|---|---|---|
| CPU | High-core count (e.g., Intel i9/AMD Ryzen 9/Xeon) | Standard multi-core | Parallel processing during training and data augmentation. |
| GPU | Critical: NVIDIA GPU with ≥8GB VRAM (RTX 3080/4090, A100, V100) | Not required | Accelerates deep learning model training and inference via CUDA cores. |
| RAM | 32GB - 64GB+ | 8GB - 16GB | Handles large video datasets in memory during processing and labeling. |
| Storage | High-speed NVMe SSD (1TB+) | Standard SSD/HDD | Fast read/write for large video files and model checkpoints. |
| Display | High-resolution monitor | Multiple monitors preferred | For precise labeling of keypoints; for viewing ethograms/scoring sheets simultaneously. |
| Peripherals | N/A | Ergonomic mouse, foot pedals | Reduces repetitive strain during long scoring sessions. |

Processing Speed: Quantitative Benchmarks

Processing speed is a decisive factor for project timelines. For DLC, speed varies dramatically across workflow phases and hardware. Manual scoring throughput is roughly constant because it is limited by observer time rather than hardware, and it is typically much slower than automated inference.

Table 2: Processing Speed Benchmarks for DeepLabCut*

| Workflow Phase | Hardware Configuration (Example) | Approximate Time | Notes |
|---|---|---|---|
| Labeling (1000 frames) | CPU + manual effort | 60-90 minutes | Human-dependent; can be distributed across lab members. |
| Training (ResNet-50) | Single GPU (RTX 3080, 10GB) | 4-12 hours | Depends on network size, image resolution, batch size, and number of training iterations. |
| Training (ResNet-50) | Cloud GPU (Tesla V100, 16GB) | 2-8 hours | Reduced time due to higher memory bandwidth and more cores. |
| Inference (per 1000 frames) | Single GPU (RTX 3080) | ~20-60 seconds | Batch processing substantially speeds analysis. |
| Inference (per 1000 frames) | CPU only (modern i7) | ~5-15 minutes | Not recommended for full datasets. |

*Benchmarks synthesized from DLC documentation, community benchmarks, and recent publications (2023-2024). Times are illustrative and depend on specific parameters.

Experimental Protocols for Benchmarking DLC Performance

To generate comparable speed benchmarks, researchers should follow a standardized protocol.

Protocol 1: Benchmarking DLC Training Speed

- Dataset Preparation: Use a standard public dataset (e.g., from DLC Model Zoo) or create a labeled set of 500 training frames with 10 keypoints.

- Hardware Isolation: Ensure no other major processes are running on the test system (GPU/CPU).

- Model Configuration: Train a ResNet-50-based network using the default DLC configuration. Set `batch_size` to the maximum permitted by GPU memory (e.g., 8 or 16), and fix the number of training iterations (e.g., 103,000) so that runs are directly comparable.

- Measurement: Use the system's `time` command or Python's `time` module to record the wall-clock time from training initiation to completion. Log GPU utilization with `nvidia-smi -l 1`.

- Repetition: Run the training three times from scratch and calculate the mean and standard deviation.
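The measurement and repetition steps can be wrapped in a small harness. This is a sketch under stated assumptions: `benchmark` is a hypothetical helper, and the `run` callable stands in for the actual training call. In practice that call would be a DeepLabCut training invocation, and its exact arguments depend on your DLC version and project. A placeholder workload is used so the sketch runs without a GPU or a DLC project.

```python
import statistics
import time

def benchmark(run, repeats=3):
    """Call `run()` `repeats` times and return (mean_s, stdev_s, times).

    `run` is a zero-argument callable that performs one complete job,
    started from scratch each time (hypothetically, a DLC training run
    with a fixed iteration count).
    """
    if repeats < 2:
        raise ValueError("need at least 2 repeats to estimate a standard deviation")
    times = []
    for _ in range(repeats):
        start = time.perf_counter()           # high-resolution wall-clock timer
        run()
        times.append(time.perf_counter() - start)
    return statistics.mean(times), statistics.stdev(times), times

# Placeholder workload standing in for a training job.
mean_s, sd_s, times = benchmark(lambda: sum(range(1_000_000)), repeats=3)
print(f"{mean_s:.3f} s ± {sd_s:.3f} s over {len(times)} runs")
```

`time.perf_counter` measures elapsed wall-clock time, which matches the protocol's intent, rather than CPU time. GPU utilization logging with `nvidia-smi` runs separately in another terminal.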

Protocol 2: Benchmarking DLC Inference Speed

- Model: Use a pre-trained model from Protocol 1.

- Input Video: Use a standardized 2-minute, 1080p (30 fps) video clip.

- Analysis: Run inference using DLC's `analyze_videos` function with `videotype='.mp4'`.

- Measurement: Record the total inference time. Calculate frames per second (FPS) as total frames divided by elapsed time; the 2-minute, 30 fps clip contains 3,600 frames.

- Variable Testing: Repeat inference under different conditions: on GPU, on CPU, and with varying batch sizes.
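Converting a measured inference time into throughput is simple arithmetic. The sketch below uses a hypothetical helper that also reports seconds per 1,000 frames, so that results can be compared with the units used in Table 2. The 90-second elapsed time is an illustrative placeholder, not a measurement. For real videos, the frame count can be read from container metadata instead, for example through OpenCV's `cv2.CAP_PROP_FRAME_COUNT`.

```python
def inference_throughput(duration_s, video_fps, elapsed_s):
    """Return (total frames, frames per second, seconds per 1,000 frames)."""
    total_frames = round(duration_s * video_fps)   # e.g. 120 s x 30 fps = 3600
    fps = total_frames / elapsed_s
    sec_per_1000 = 1000.0 * elapsed_s / total_frames
    return total_frames, fps, sec_per_1000

# Illustrative only: 90 s is a placeholder, not a measured value.
frames, fps, per_1000 = inference_throughput(duration_s=120, video_fps=30, elapsed_s=90)
print(frames, round(fps, 1), round(per_1000, 1))   # 3600 40.0 25.0
```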

Visualization of Computational Workflows

Diagram 1: Workflow & Hardware Dependencies

Diagram 2: DLC Hardware-Process Interaction

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Computational & Experimental Materials

| Item | Function in DLC/Behavior Research | Specification Notes |
|---|---|---|
| NVIDIA GPU with CUDA Cores | Enables parallel tensor operations for neural network training and inference. | Minimum 8GB VRAM (e.g., RTX 3070/4080). For large datasets or 3D, ≥16GB (e.g., RTX 4090, A100). |
| CUDA & cuDNN Libraries | GPU-accelerated libraries for deep learning; required by the TensorFlow/PyTorch backend. | Versions must be compatible with the installed DLC release and its backend. |
| DeepLabCut Software Suite | Open-source toolbox for markerless pose estimation. | Includes a GUI for labeling and an API for scripting. Install via `pip install deeplabcut`. |
| Labeled Training Dataset | Curated set of image frames with annotated keypoints. | Typically 100-1000 frames, representing diverse postures and behaviors. |
| High-Speed Video Camera | Captures source behavioral data. | A high frame rate (>60 fps) with short exposure times reduces motion blur; 1080p+ resolution improves keypoint precision. |
| Ethogram Scoring Software | For manual scoring comparison (e.g., BORIS, Solomon Coder). | Provides the ground truth for validating behavioral classifications derived from DLC pose data. |
| Cloud Compute Credits | (Alternative to local GPU) Provides access to high-end hardware. | Services like Google Cloud Platform (GCP), Amazon Web Services (AWS), or Lambda Labs. |

The transition from manual scoring to DeepLabCut is a shift from human-intensive effort to a computationally intensive, hardware-dependent pipeline. The upfront investment in GPU infrastructure is substantial, but it is offset by much higher processing speed and throughput during the analysis phase. For scalable, reproducible research in drug development, characterizing these computational requirements is as essential as the biological experimental design itself. The choice between manual and automated approaches should therefore be informed by both the available computational resources and the required speed and scale of analysis.

Within the context of research comparing DeepLabCut (DLC) to manual behavior scoring, establishing robust quality control (QC) protocols is paramount. This guide details the technical framework for validating automated pose estimation outputs against manually annotated ground truth, a critical step for ensuring reliability in neuroscience and pre-clinical drug development.

The Validation Imperative in DLC Research

Automated tools like DLC offer scalability but require rigorous validation to ensure their outputs are biologically accurate. The core thesis is that without systematic QC, conclusions drawn from DLC may be flawed, directly impacting research reproducibility and translational drug development.

Core Validation Metrics and Data Presentation

Validation hinges on quantifying the agreement between DLC-predicted keypoints and manual annotations. The following table summarizes the standard metrics used.

Table 1: Key Metrics for Validating DLC Output Against Ground Truth

| Metric | Formula/Description | Interpretation in Behavioral Context |
|---|---|---|
| Mean Pixel Error | $\frac{1}{n}\sum_{i=1}^{n} \lVert p_i^{\text{pred}} - p_i^{\text{true}} \rVert_2$ | Average Euclidean distance (in pixels) between predicted and true keypoint locations. The primary measure of localization accuracy. |
| RMSE (Root Mean Square Error) | $\sqrt{\frac{1}{n}\sum_{i=1}^{n} \lVert p_i^{\text{pred}} - p_i^{\text{true}} \rVert_2^2}$ | Weights large errors more heavily; useful for identifying systematic failures on specific frames or keypoints. |
| PCK@Threshold | Percentage of predictions within a threshold distance of the true location (e.g., 5% of body length) | Reports reliability as a percentage; thresholds scaled to animal size are more biologically meaningful than fixed pixel values. |
| Linear Mixed Models (LMM) | Statistical model assessing error by fixed (e.g., drug dose) and random (e.g., animal ID) effects | Isolates sources of variation in DLC performance, crucial for controlled experimental designs. |
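The first three metrics can be computed directly from arrays of predicted and ground-truth coordinates. The following minimal NumPy sketch assumes that both arrays have shape (n_frames, n_keypoints, 2) and that missing manual labels are stored as NaN. `validation_metrics` is a hypothetical helper. The PCK threshold is given in pixels here; in practice it would be derived from body length, for example 0.05 times a measured nose-to-tail-base distance. The LMM row requires a statistics package (e.g., statsmodels' `mixedlm`) and is not shown.

```python
import numpy as np

def validation_metrics(pred, true, threshold_px):
    """Compare predicted vs. ground-truth keypoints.

    pred, true: arrays of shape (n_frames, n_keypoints, 2) holding (x, y) pixels.
    Keypoints missing in the ground truth should be NaN and are ignored.
    """
    err = np.linalg.norm(np.asarray(pred, float) - np.asarray(true, float), axis=-1)
    e = err[~np.isnan(err)]                          # drop unlabeled keypoints
    return {
        "mean_px_error": float(e.mean()),
        "rmse_px": float(np.sqrt(np.mean(e ** 2))),
        "pck": float(np.mean(e <= threshold_px)),    # fraction within threshold
        "per_keypoint_mean": np.nanmean(err, axis=0),  # aggregate per keypoint
    }

# Toy example: two frames, two keypoints, ground truth at the origin.
true = np.zeros((2, 2, 2))
pred = np.zeros((2, 2, 2))
pred[0, 0] = [3, 4]      # 5 px error
pred[1, 1] = [6, 8]      # 10 px error
print(validation_metrics(pred, true, threshold_px=5.0))
```

Because RMSE squares each error before averaging, the single 10 px miss in the toy example raises RMSE above the mean pixel error. This is the sensitivity to outliers described in the table.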

Experimental Protocols for Validation

Protocol 1: Creation of High-Quality Ground Truth Datasets

- Video Selection: Extract a representative subset of frames from the experimental videos (typically 100-800 frames), ensuring coverage of all behavioral states, lighting conditions, and animal orientations.

- Manual Annotation: Use multiple trained human annotators to label the same set of frames independently. Utilize annotation software (e.g., Labelbox, CVAT) that exports coordinates.

- Consensus & Reconciliation: Compute inter-rater reliability (e.g., intraclass correlation coefficient). Reconcile disagreements through adjudication by a senior scorer to create a single, high-confidence ground truth set.
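Inter-rater reliability for keypoint annotations can be assessed one coordinate at a time, treating frames as targets and annotators as raters. The sketch below implements the Shrout and Fleiss ICC(2,1), which models two-way random effects with absolute agreement for a single rater. Choosing that form is an assumption, and `icc_2_1` is a hypothetical helper. Packages such as pingouin (`intraclass_corr`) report the full family of ICC forms.

```python
import numpy as np

def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: (n_targets, k_raters) array with no missing values,
    e.g. the x-coordinate of one keypoint in n frames from k annotators.
    """
    y = np.asarray(ratings, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * np.sum((y.mean(axis=1) - grand) ** 2)   # between targets
    ss_cols = n * np.sum((y.mean(axis=0) - grand) ** 2)   # between raters
    ss_total = np.sum((y - grand) ** 2)
    ss_err = ss_total - ss_rows - ss_cols                 # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )

# Hypothetical x-coordinates (px) of one keypoint in 5 frames from 3 annotators.
x = [[101, 102, 100], [150, 149, 151], [220, 221, 219], [180, 182, 181], [130, 129, 131]]
print(round(icc_2_1(x), 3))
```

Because this form measures absolute agreement, a constant offset between annotators lowers the ICC. That behavior is appropriate for keypoints, where a systematic shift in where one annotator places a label is itself a disagreement.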

Protocol 2: Systematic Comparison and Error Analysis

- DLC Inference: Run the trained DLC model on the ground truth video subset to generate predicted keypoints.

- Metric Calculation: For each frame and keypoint, compute the metrics in Table 1. Aggregate results per keypoint, per animal, and per experimental condition.

- Error Visualization: Generate plots of error distributions, spatial maps of error vectors, and examples of frames with high vs. low error to diagnose failure modes (e.g., occlusion, limb crossing).
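The aggregation and failure-mode steps can be sketched as follows. The sketch assumes a per-frame, per-keypoint matrix of Euclidean distances between predicted and manual keypoints, with NaN for unlabeled points, plus one condition label per frame. `summarize_errors` is a hypothetical helper. Ranking frames by mean error produces a shortlist of frames to inspect visually for occlusion or limb crossing.

```python
import numpy as np

def summarize_errors(err, conditions, k_worst=5):
    """Aggregate keypoint errors and flag the worst frames.

    err: (n_frames, n_keypoints) Euclidean errors in pixels (NaN = unlabeled).
    conditions: length-n_frames sequence of labels (e.g. treatment group).
    """
    err = np.asarray(err, float)
    conditions = np.asarray(conditions)
    per_keypoint = np.nanmean(err, axis=0)
    per_condition = {
        str(c): float(np.nanmean(err[conditions == c])) for c in np.unique(conditions)
    }
    frame_err = np.nanmean(err, axis=1)
    frame_err = np.where(np.isnan(frame_err), -np.inf, frame_err)  # all-NaN frames last
    worst_frames = np.argsort(frame_err)[::-1][:k_worst]           # highest error first
    return per_keypoint, per_condition, worst_frames

# Toy data: 4 frames x 2 keypoints, two hypothetical conditions.
err = np.array([[1.0, 1.0], [5.0, 3.0], [2.0, 2.0], [10.0, 0.0]])
print(summarize_errors(err, ["vehicle", "vehicle", "drug", "drug"], k_worst=2))
```

A per-condition difference in error, as in the toy output, is exactly the kind of confound the LMM in Table 1 is meant to isolate. Treatment-dependent postures can make a model less accurate in one group than in another.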

Protocol 3: Downstream Behavioral Metric Validation

- Derived Metric Calculation: From both DLC and manual trajectories, compute downstream behavioral metrics (e.g., velocity, social proximity, rearing frequency).

- Correlation Analysis: Compute Pearson or Spearman correlations between the automated and manually derived time series for each metric.

- Statistical Equivalence Testing: Use methods like Two One-Sided Tests (TOST) to demonstrate that the mean difference between automated and manual scores falls within a pre-defined equivalence bound (e.g., <10% of the manual mean).
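A paired TOST on a downstream metric can be sketched with SciPy's one-sample t-test applied to the per-animal (or per-session) differences between methods. Assumptions: the equivalence bound is ±10% of the manual mean, as in the example above, and SciPy ≥1.6 is available for the `alternative` argument. `paired_tost` is a hypothetical helper. statsmodels' `ttost_paired` offers a ready-made alternative. The input values are illustrative, not real data.

```python
import numpy as np
from scipy import stats

def paired_tost(auto, manual, rel_bound=0.10, alpha=0.05):
    """Paired two one-sided tests (TOST) for equivalence of two methods.

    The equivalence margin is +/- rel_bound * |mean(manual)|.
    """
    auto = np.asarray(auto, dtype=float)
    manual = np.asarray(manual, dtype=float)
    d = auto - manual                                   # paired differences
    delta = rel_bound * abs(manual.mean())
    # Test 1: H0 mean(d) <= -delta  vs  H1 mean(d) > -delta
    p_lower = stats.ttest_1samp(d, -delta, alternative="greater").pvalue
    # Test 2: H0 mean(d) >= +delta  vs  H1 mean(d) < +delta
    p_upper = stats.ttest_1samp(d, delta, alternative="less").pvalue
    p_tost = max(p_lower, p_upper)                      # both tests must reject
    return {"delta": float(delta), "p_tost": float(p_tost),
            "equivalent": bool(p_tost < alpha)}

# Illustrative values only (e.g. rearing counts per animal), not real data.
manual = [10, 12, 11, 13, 12, 11, 10, 12]
automated = [10.1, 11.9, 11.2, 12.8, 12.0, 11.1, 9.9, 12.0]
print(paired_tost(automated, manual))
```

Unlike a correlation, which can be high even when one method is consistently biased, TOST fails if the mean difference sits outside the bound. Adding a constant 2-count offset to `automated` would leave the correlation unchanged but make the test report non-equivalence.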

Visualization of Validation Workflows

Diagram 1: DLC Validation Workflow

Diagram 2: Error Source Diagnostic Tree

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions for DLC Validation

| Item | Function & Rationale |
|---|---|
| High-Resolution Cameras (e.g., Basler, FLIR) | Provide the raw video input. A high frame rate reduces motion blur, and high resolution improves annotation and DLC precision. |
| Consistent Illumination System (IR/Visible) | Minimizes shadows and ensures consistent animal contrast, a major variable affecting both manual and automated scoring accuracy. |
| Dedicated Annotation Software (e.g., Labelbox, CVAT) | Enables efficient, multi-rater manual labeling with audit trails. Critical for generating high-quality, reproducible ground truth. |
| Computational Environment (e.g., Python with DLC, scikit-learn, statsmodels) | Platform for running DLC inference, calculating validation metrics, and performing advanced statistical analyses (LMM, TOST). |
| Statistical Equivalence Testing Package (e.g., TOST in pingouin/statsmodels) | Provides a formal statistical framework to demonstrate that automated and manual methods are equivalent for practical purposes, beyond simple correlation. |
| Data Visualization Suite (e.g., matplotlib, seaborn) | Generates error distribution plots, spatial heatmaps, and behavioral metric correlations to visually communicate validation results. |

DeepLabCut vs. Manual Scoring: A Data-Driven Comparison for Rigorous Science