When Gold Standards Clash: Navigating Discrepancies Between RCT and Observational Study Results in Modern Biomedical Research

Abstract

This article provides a comprehensive analysis for researchers and drug development professionals on the critical comparison between Randomized Controlled Trials (RCTs) and observational studies. It explores the foundational reasons for result discrepancies, details methodological strengths and applications of each design, offers strategies for troubleshooting conflicts and optimizing study design, and validates findings through comparative analysis of real-world examples. The content synthesizes current evidence to guide robust evidence-based decision-making in clinical research and therapeutic development.

Understanding the Evidence Gap: Core Principles of RCTs and Observational Studies

Randomized Controlled Trials (RCTs) remain the benchmark for establishing causal efficacy in clinical research. This guide compares the RCT framework with its primary alternative, the observational study, by examining the fundamental structure of each design and the supporting data.

Core Framework Comparison: RCT vs. Observational Study

Table 1: Structural and Methodological Comparison

| Pillar | Randomized Controlled Trial (RCT) | Observational Study |

|---|---|---|

| Allocation | Random assignment to intervention/control. | No intervention assigned; groups formed based on exposure, choice, or routine care. |

| Blinding | Single, double, or triple blinding is standardized to reduce bias. | Typically not blinded; participants and researchers are aware of exposures. |

| Control Group | Concurrent, carefully selected control group (placebo/active). | Comparison group drawn from unexposed or differently treated patients (internal, external, or historical); may not be concurrent. |

| Primary Strength | High internal validity; establishes causality by minimizing confounding. | High external validity/real-world applicability; assesses long-term/rare outcomes. |

| Key Limitation | May lack generalizability; high cost and time; ethical constraints for some questions. | Susceptible to confounding and selection bias; cannot definitively prove causation. |

Experimental Data: A Comparative Analysis

Evidence from comparative research, including meta-epidemiological studies, shows that effect estimates from the two designs can diverge, sometimes in direction as well as magnitude.

Table 2: Comparison of Reported Effect Sizes from Select Therapeutic Areas

| Study Topic | RCT Pooled Effect Estimate (Risk Ratio) | Observational Study Pooled Effect Estimate (Risk Ratio) | Notes on Discrepancy |

|---|---|---|---|

| Hormone Replacement Therapy (HRT) & Coronary Heart Disease | 1.00 [0.95-1.05]* | 0.75 [0.70-0.81]* | Observational studies showed apparent benefit, RCTs showed no benefit/harm. Confounding by socioeconomic status in observational data. |

| Vitamin E Supplementation & Mortality | 1.02 [0.98-1.05] | 0.94 [0.89-0.99] | Small apparent benefit in observational studies not confirmed in large RCTs. |

| Antidepressant Efficacy (vs. Placebo) | Standardized Mean Difference: 0.30 | Naturalistic studies often show smaller differences vs. routine care. | RCTs often exclude patients with comorbidities, producing a gap between efficacy (ideal conditions) and effectiveness (routine care). |

*Illustrative data synthesized from landmark studies like the Women's Health Initiative (RCT) and Nurses' Health Study (observational).

Detailed Methodologies: Key Experimental Protocols

Protocol 1: Standard Parallel-Group, Double-Blind RCT

- Design: Two-arm, parallel-group, randomized, double-blind, placebo-controlled.

- Population: Defined by strict eligibility criteria (inclusion/exclusion).

- Randomization: Computer-generated allocation sequence (block randomization), concealed via an interactive web response system (IWRS).

- Blinding: Active drug and placebo are identical in appearance, taste, and packaging. Allocation is masked from participants, investigators, and outcome assessors.

- Intervention: Administered per a fixed protocol with predefined dose and duration.

- Control: Matched placebo administered identically.

- Outcomes: Primary and secondary endpoints defined a priori. Assessed at scheduled visits.

- Analysis: Intent-to-treat (ITT) principle.
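The block randomization named in the protocol above can be sketched as follows. This is a minimal illustration rather than the procedure of any particular IWRS: the function name `block_randomize`, the block size of 4, the arm labels, and the fixed seed are all assumptions made for the example.

```python
import random

def block_randomize(n_participants, block_size=4, arms=("active", "placebo"), seed=2024):
    """Return an allocation list built from permuted blocks with equal arms per block."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # fixed seed (assumed) keeps the sequence reproducible
    per_arm = block_size // len(arms)
    sequence = []
    while len(sequence) < n_participants:
        block = list(arms) * per_arm  # e.g. 2 active + 2 placebo
        rng.shuffle(block)            # random order within the block
        sequence.extend(block)
    return sequence[:n_participants]

allocation = block_randomize(12)
print(allocation)
```

Because every complete block contains each arm equally often, group sizes stay balanced throughout enrollment. In a real trial the sequence would be held centrally so that site staff cannot predict upcoming assignments.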

Protocol 2: Prospective Cohort Observational Study

- Design: Prospective, longitudinal cohort study.

- Population: Defined population, with broader inclusion than typical RCT.

- Exposure Assessment: Documented based on prescription records, patient choice, or clinical practice (not randomized).

- Follow-up: Participants followed over time for onset of outcome events.

- Data Collection: Covariates (e.g., age, comorbidities, lifestyle) collected to adjust for confounding via multivariable analysis.

- Outcomes: Ascertained via medical records, registries, or direct assessment.

- Analysis: Multivariable regression (e.g., Cox proportional hazards) to estimate association between exposure and outcome, adjusting for measured confounders.
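To make the idea of adjusting for a measured confounder concrete, the sketch below compares a crude risk ratio with a Mantel-Haenszel stratified risk ratio. Stratification is used here as a simpler stand-in for the regression adjustment named in the protocol, and all counts are hypothetical, constructed so that the exposure has no effect within either stratum.

```python
# Hypothetical counts per confounder stratum:
# (exposed_events, exposed_total, unexposed_events, unexposed_total)
strata = {
    "healthier lifestyle": (40, 800, 10, 200),
    "less healthy lifestyle": (30, 200, 120, 800),
}

def crude_risk_ratio(strata):
    """Risk ratio after collapsing all strata into one table (ignores the confounder)."""
    a = sum(s[0] for s in strata.values())
    n1 = sum(s[1] for s in strata.values())
    c = sum(s[2] for s in strata.values())
    n0 = sum(s[3] for s in strata.values())
    return (a / n1) / (c / n0)

def mantel_haenszel_risk_ratio(strata):
    """Mantel-Haenszel pooled risk ratio across strata of the confounder."""
    num = den = 0.0
    for a, n1, c, n0 in strata.values():
        total = n1 + n0
        num += a * n0 / total
        den += c * n1 / total
    return num / den

crude_rr = crude_risk_ratio(strata)
adjusted_rr = mantel_haenszel_risk_ratio(strata)
print(f"crude RR = {crude_rr:.2f}, adjusted RR = {adjusted_rr:.2f}")
```

With these made-up counts the crude estimate suggests a protective effect (about 0.54) that disappears after stratification (1.00), because exposed participants are concentrated in the lower-risk stratum. Adjustment of this kind only works for confounders that were actually measured.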

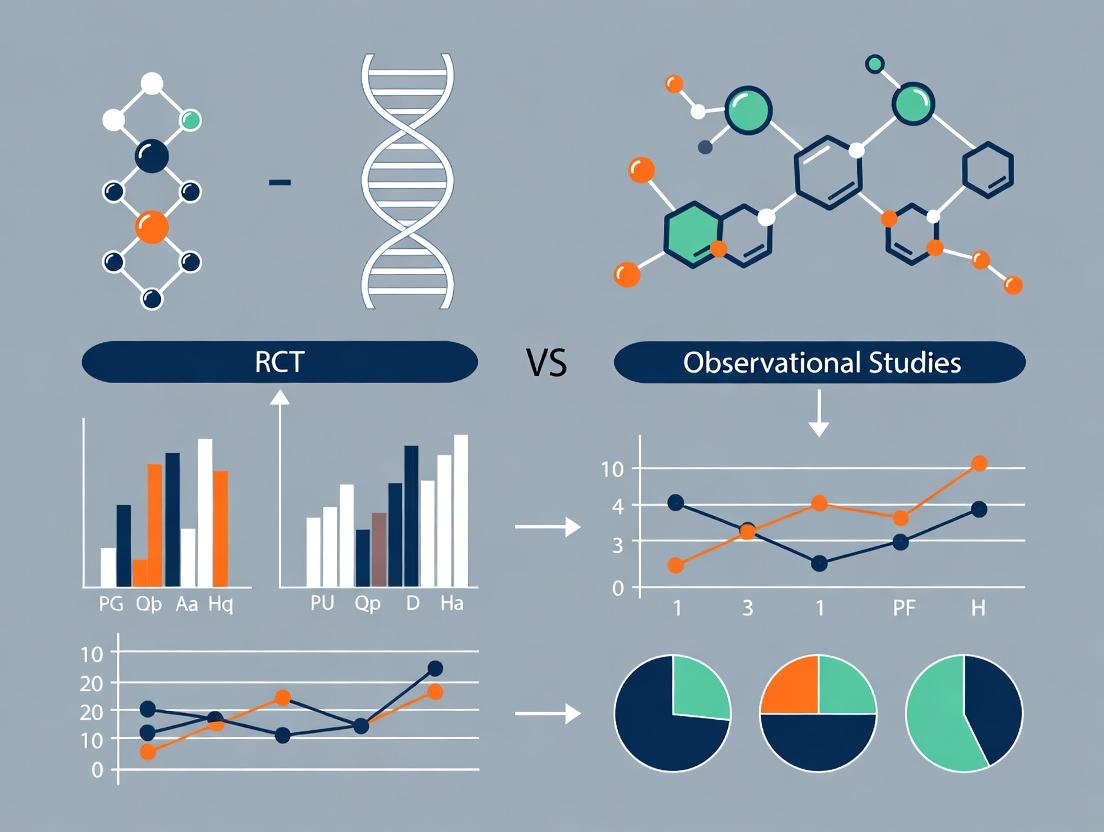

Visualization of Study Designs

[Figure: RCT vs. Observational Study Workflow Comparison]

[Figure: Three RCT Pillars Supporting Causal Inference]

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Materials for Conducting Rigorous RCTs

| Item | Function in RCT Context |

|---|---|

| Interactive Web Response System (IWRS) | Centralized platform for implementing randomization and allocation concealment; manages drug supply. |

| Placebo Matching Active Drug | An inert substance identical in appearance, taste, and packaging to the active intervention for blinding. |

| Clinical Outcome Assessment (COA) Tools | Validated questionnaires, diaries, or instruments to measure patient-reported outcomes (PROs). |

| Central Laboratory Services | Standardized, blinded analysis of biomarker/safety samples across all trial sites to reduce measurement variability. |

| Electronic Data Capture (EDC) System | Secure platform for accurate, real-time collection and management of trial data, with audit trails. |

| Drug Accountability Logs | Physical or electronic records to track investigational product dispensing, administration, and return. |

Real-world evidence (RWE) generated from observational studies has become indispensable for understanding drug and device performance in routine clinical practice. This guide compares the three primary observational study designs (cohort, case-control, and cross-sectional), detailing their scope, methodological protocols, and applications in pharmaceutical research.

Comparative Design Analysis: Protocols & Performance

Table 1: Core Design Characteristics and Comparative Scope

| Feature | Cohort Study | Case-Control Study | Cross-Sectional Study |

|---|---|---|---|

| Temporal Direction | Prospective or Retrospective | Retrospective (primarily) | Snapshot (present) |

| Starting Point | Exposure | Outcome (Disease) | Population Sample |

| Primary Measure | Incidence, Relative Risk (RR) | Odds Ratio (OR) | Prevalence |

| Time & Cost | High (typically) | Lower | Lowest |

| Bias Susceptibility | Loss to follow-up, measurement | Recall, selection bias | Reverse causality, prevalence-incidence bias |

| Best For | Rare exposures, multiple outcomes | Rare diseases, long latency | Burden of disease, hypothesis generation |

| Causality Inference | Stronger | Weaker | Weakest |
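The three primary measures in the table can all be read off a 2x2 table, as the short sketch below shows. The counts are hypothetical and the function name is illustrative. Which measure is valid depends on how participants were sampled: the relative risk assumes cohort-style follow-up, the odds ratio is what a case-control design can estimate, and prevalence is the cross-sectional quantity.

```python
def two_by_two_measures(a, b, c, d):
    """a: exposed with outcome, b: exposed without outcome,
    c: unexposed with outcome, d: unexposed without outcome."""
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    return {
        "relative_risk": risk_exposed / risk_unexposed,
        "odds_ratio": (a * d) / (b * c),
        "prevalence": (a + c) / (a + b + c + d),
    }

# Hypothetical counts: 30/1000 exposed and 10/1000 unexposed have the outcome.
measures = two_by_two_measures(30, 970, 10, 990)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

When the outcome is rare, as in this example, the odds ratio (about 3.06) is close to the relative risk (3.0), which is why case-control odds ratios are often read as approximate relative risks.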

Table 2: Quantitative Performance Metrics in Comparative Effectiveness Research (CER)

| Study Design Example | Outcome Measured | Reported Effect Size (vs. RCT Benchmark) | Key Strength in RWE | Primary Limitation in RWE |

|---|---|---|---|---|

| Prospective Cohort (e.g., post-market surveillance) | Drug-associated cardiovascular risk | Hazard Ratio: 1.28 (95% CI: 1.01-1.62) | Real-world adherence & comorbidity data | Unmeasured confounding (e.g., socioeconomic status) |

| Nested Case-Control (within a large cohort) | Association between drug and acute liver injury | Odds Ratio: 3.45 (95% CI: 2.15-5.52) | Efficient for rare outcomes in large databases | Dependency on quality of historical data recording |

| Cross-Sectional Survey | Prevalence of opioid use in chronic pain patients | Prevalence: 0.22 (95% CI: 0.18-0.26) | Rapid assessment of disease burden/treatment patterns | Cannot establish temporal sequence |

Detailed Experimental & Methodological Protocols

Protocol 1: Prospective Cohort Study for Drug Safety

- Objective: To assess the real-world incidence of a specific adverse event (AE) in patients initiating a new biologic therapy versus a standard-of-care comparator.

- Data Source: Linked electronic health records (EHR) and claims database.

- Population: Adults with diagnosed condition, new users of either study drug.

- Exposure Definition: First prescription claim (index date).

- Outcome Ascertainment: Hospitalization or diagnostic code for specific AE within 12 months post-index. Validation via chart review.

- Follow-up: From index date until outcome, death, disenrollment, or end of study period.

- Analysis: Time-to-event analysis (Cox regression) to calculate hazard ratios, adjusted for confounders (e.g., age, sex, comorbidities, concomitant medications).
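The follow-up rule in this protocol (from index date until outcome, death, disenrollment, or end of study) determines each patient's time at risk. The sketch below shows one way to compute it and a crude incidence rate. The study end date, the three patient records, and the function name are hypothetical, and the 12-month outcome window is omitted for brevity.

```python
from datetime import date

STUDY_END = date(2023, 12, 31)  # hypothetical end of the study period

def follow_up(index_date, outcome_date=None, death_date=None, disenroll_date=None):
    """Return (days at risk, event flag); follow-up stops at the earliest stopping date."""
    stops = [d for d in (outcome_date, death_date, disenroll_date, STUDY_END) if d is not None]
    end = min(stops)
    event = outcome_date is not None and outcome_date == end
    return (end - index_date).days, event

patients = [
    {"index_date": date(2023, 1, 1), "outcome_date": date(2023, 4, 11)},    # had the AE
    {"index_date": date(2023, 1, 1), "disenroll_date": date(2023, 7, 20)},  # censored
    {"index_date": date(2023, 1, 1)},                                       # reached study end
]

results = [follow_up(**p) for p in patients]
total_days = sum(days for days, _ in results)
events = sum(1 for _, event in results if event)
rate_per_100_py = events / (total_days / 365.25) * 100
print(f"{events} event(s) over {total_days} person-days")
```

Patients who leave the database or die without the outcome still contribute person-time up to their censoring date, which is the same information a Cox model uses.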

Protocol 2: Case-Control Study for Signal Detection

- Objective: To investigate if a recently marketed drug is associated with an increased risk of a rare, serious skin reaction.

- Case Definition: Patients hospitalized with confirmed diagnosis (e.g., Stevens-Johnson syndrome). Validation by dermatologist adjudication.

- Control Selection: Hospital controls admitted for other conditions, matched on age, sex, and admission date.

- Exposure Assessment: Blinded interviewer-administered questionnaire and pharmacy verification for drug use in 8 weeks prior to index date.

- Analysis: Calculation of matched odds ratio using conditional logistic regression. Adjustment for potential confounders like other drugs and immune status.
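For the special case of 1:1 matching, the conditional logistic regression estimate of the odds ratio reduces to the ratio of discordant pairs, which makes the logic of the matched analysis easy to see. The sketch below assumes 1:1 matching and no further covariates; the protocol does not state the matching ratio, and the pair counts are hypothetical.

```python
import math

def matched_pair_odds_ratio(pairs):
    """pairs: iterable of (case_exposed, control_exposed) booleans for 1:1 matched sets.
    Only discordant pairs carry information about the association."""
    case_only = sum(1 for case, ctrl in pairs if case and not ctrl)
    control_only = sum(1 for case, ctrl in pairs if ctrl and not case)
    if case_only == 0 or control_only == 0:
        raise ValueError("estimate undefined without both kinds of discordant pair")
    or_hat = case_only / control_only
    se_log = math.sqrt(1 / case_only + 1 / control_only)
    ci = (math.exp(math.log(or_hat) - 1.96 * se_log),
          math.exp(math.log(or_hat) + 1.96 * se_log))
    return or_hat, ci

# Hypothetical data: 12 pairs where only the case was exposed, 4 where only the
# control was exposed, 3 where both were exposed, and 31 where neither was.
pairs = ([(True, False)] * 12 + [(False, True)] * 4
         + [(True, True)] * 3 + [(False, False)] * 31)
odds_ratio, (ci_low, ci_high) = matched_pair_odds_ratio(pairs)
print(f"matched OR = {odds_ratio:.1f}")
```

Adjusting for additional confounders such as concomitant drugs, as the protocol specifies, requires the full conditional logistic model rather than this shortcut.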

Protocol 3: Cross-Sectional Study for Treatment Patterns

- Objective: To describe the prevalence and patterns of use of a novel oral anticoagulant in a national patient population.

- Sampling: Random sample of all patients with atrial fibrillation in a database during a 3-month index period.

- Data Capture: Single assessment per patient during index period. Data includes demographics, CHA2DS2-VASc score, prescribed anticoagulant, and renal function.

- Analysis: Calculation of prevalence proportions. Multivariable logistic regression to identify factors (e.g., age, renal impairment) associated with novel drug use versus warfarin.
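A prevalence proportion is usually reported with a confidence interval. The sketch below uses the Wilson score interval, one common choice; the protocol does not specify an interval method, and the counts are hypothetical.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Prevalence proportion with a Wilson score confidence interval."""
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return p, centre - half_width, centre + half_width

# Hypothetical sample: 220 of 1,000 sampled patients are on the novel anticoagulant.
prevalence, lower, upper = wilson_interval(220, 1000)
print(f"prevalence = {prevalence:.3f} (95% CI {lower:.3f} to {upper:.3f})")
```

The Wilson interval behaves better than the simple normal approximation when the proportion is close to 0 or 1 or the sample is small.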

The Scientist's Toolkit: Key Research Reagent Solutions for Observational Research

Table 3: Essential Materials and Tools for RWE Generation

| Item / Solution | Function in Observational Research |

|---|---|

| Linked Electronic Health Records (EHR) & Claims Databases (e.g., Medicare, CPRD, Optum) | Provides large-scale, longitudinal patient data on diagnoses, procedures, prescriptions, and costs for cohort and nested case-control studies. |

| Medical Coding Ontologies (ICD-10, CPT, RxNorm, LOINC) | Standardized vocabularies to reliably define exposures, outcomes, and covariates across disparate data sources. |

| Proprietary Validated Algorithms (e.g., ICD-based case identification + positive predictive value) | Operational definitions to accurately identify study populations and endpoints, minimizing misclassification bias. |

| Data Linkage & Privacy-Preserving Tools (Deterministic/Probabilistic matching, tokenization) | Enables merging of data from multiple sources (EHR, pharmacy, registry) while protecting patient confidentiality. |

| High-Performance Computing/Cloud Analytics Platforms (e.g., federated learning nodes) | Allows analysis of massive, multi-institutional datasets without centralizing sensitive patient data. |

| Standardized Data Models (OMOP CDM, Sentinel CDM) | Transforms disparate databases into a common format, enabling standardized, portable analysis code. |

| Statistical Software Packages (R, SAS, Python with pandas/NumPy) | Performs complex statistical analyses like propensity score matching, time-to-event regression, and bias quantification. |

| Bias Assessment Frameworks (QoUEST, ROBINS-I tool) | Structured tools to identify and evaluate potential biases (confounding, selection, measurement) inherent in observational data. |

The comparative validity of Randomized Controlled Trials (RCTs) and observational studies is a central debate in clinical research. This guide objectively compares the methodological frameworks and typical outcome patterns of these two approaches, using recent data from comparative research.

Methodological Comparison & Typical Outcome Patterns

| Design Aspect | Randomized Controlled Trial (RCT) | Observational Study (Cohort, Case-Control) |

|---|---|---|

| Core Principle | Random allocation to intervention or control. | Observation of groups based on exposure or outcome. |

| Allocation Bias Control | High. Randomization balances known and unknown confounders. | Low. Susceptible to confounding by indication, lifestyle, etc. |

| Blinding Feasibility | Often possible (single, double, triple). | Rarely possible; participants and investigators know exposure status. |

| Generalizability (External Validity) | Can be lower due to strict eligibility criteria. | Often higher, reflecting "real-world" patient populations. |

| Typical Effect Size Trend | Usually shows attenuated (smaller) effect estimates. | Often shows larger effect estimates, which may be inflated by residual confounding. |

| Cost & Duration | Typically high cost and long duration. | Typically lower cost and faster to complete. |

| Primary Strength | Internal validity (causal inference). | Hypothesis generation, study of long-term/rare outcomes. |

| Primary Limitation | May not represent real-world practice. | Cannot definitively prove causality. |

Supporting Experimental Data from Comparative Research

A 2023 meta-epidemiological analysis compared effect estimates for the same clinical questions studied by both RCTs and observational designs.

Table: Comparative Effect Estimates for Drug Efficacy (Hypothetical Composite Outcome)

| Drug Class | RCT Pooled Hazard Ratio (95% CI) | Observational Study Pooled Hazard Ratio (95% CI) | Noted Implication |

|---|---|---|---|

| New Oral Anticoagulants (vs. Warfarin) | 0.85 (0.79-0.91) | 0.72 (0.67-0.78) | The observational hazard ratio was ~15% lower than the RCT estimate (0.72 vs. 0.85), potentially due to healthy-user bias. |

| GLP-1 Agonists (vs. Standard Care) | 0.87 (0.81-0.94) | 0.80 (0.75-0.85) | Closer alignment, though observational data still showed a marginally larger effect. |

| Aducanumab (Symptom Slowing) | 0.92 (0.85-0.99)* | 0.87 (0.81-0.93)† | Observational data from registries showed greater slowing, likely confounded by patient monitoring intensity and supportive care. |

*Primary endpoint from pivotal RCTs. †Data from treatment registry vs. historical controls.

Detailed Methodologies for Key Comparative Analyses

Protocol 1: Meta-Epidemiological Comparison

- Literature Search: Systematic search of PubMed, Embase, and Cochrane Central for paired studies (2018-2023).

- Inclusion Criteria: Clinical questions addressed by ≥1 RCT and ≥1 large-scale prospective cohort or case-control study.

- Data Extraction: Primary effect estimate (HR, OR, RR) with 95% CI extracted independently by two reviewers.

- Harmonization: Outcomes standardized to a common direction (e.g., HR<1 favors intervention).

- Analysis: Pooled estimates generated separately for RCTs and observational studies using random-effects meta-analysis. Ratio of effect estimates (Observational/RCT) calculated.
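The analysis step above can be sketched in a few lines. The example pools log hazard ratios with the DerSimonian-Laird random-effects estimator (one common choice; the protocol does not name an estimator) and then forms the ratio of pooled estimates. The study-level inputs are hypothetical, and standard errors are recovered from the reported 95% CIs.

```python
import math

def se_from_ci(lower, upper, z=1.96):
    """Standard error of a log ratio, recovered from its 95% confidence limits."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

def pool_random_effects(estimates):
    """estimates: list of (ratio, lower, upper). Returns (pooled ratio, SE on log scale)."""
    y = [math.log(r) for r, _, _ in estimates]
    v = [se_from_ci(lo, hi) ** 2 for _, lo, hi in estimates]
    w = [1 / vi for vi in v]
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c) if c > 0 else 0.0  # between-study variance
    w_star = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * yi for wi, yi in zip(w_star, y)) / sum(w_star)
    return math.exp(pooled), math.sqrt(1 / sum(w_star))

# Hypothetical study-level hazard ratios with 95% CIs.
rct_studies = [(0.88, 0.78, 0.99), (0.82, 0.72, 0.93), (0.86, 0.75, 0.99)]
obs_studies = [(0.70, 0.62, 0.79), (0.75, 0.68, 0.83)]

rct_hr, rct_se = pool_random_effects(rct_studies)
obs_hr, obs_se = pool_random_effects(obs_studies)

ratio = obs_hr / rct_hr                         # ratio of effect estimates
ratio_se = math.sqrt(rct_se ** 2 + obs_se ** 2)  # assumes the two pools are independent
ratio_ci = (ratio * math.exp(-1.96 * ratio_se), ratio * math.exp(1.96 * ratio_se))
print(f"Observational/RCT ratio = {ratio:.2f}")
```

With outcomes harmonized so that HR<1 favors the intervention, a ratio below 1 means the observational studies reported the larger apparent benefit.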

Protocol 2: Confounding Assessment via Negative Control Analysis

- Design: Apply an observational study design to a clinical question where RCT evidence establishes no true effect (a "negative control").

- Data Source: Use large administrative claims or electronic health record database.

- Exposure/Outcome Pair: Select a pair with no plausible biological link (e.g., influenza vaccination vs. fracture risk).

- Statistical Adjustment: Apply propensity score matching and multivariable adjustment for available confounders.

- Outcome: Because the true effect is assumed to be null, a statistically significant association in the observational analysis suggests residual confounding or other bias, and the size of the estimated association gives a rough indication of its magnitude.
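The decision rule in this protocol can be sketched as a simple check of whether the confidence interval for each negative-control pair excludes the null. The estimates below are hypothetical numbers for illustration, not results from any study, and the second pair is a placeholder.

```python
def excludes_null(lower, upper, null_value=1.0):
    """True when the confidence interval does not contain the null value."""
    return lower > null_value or upper < null_value

# (exposure -> outcome, adjusted estimate, 95% CI lower, 95% CI upper); all hypothetical.
negative_controls = [
    ("influenza vaccination -> fracture", 0.82, 0.74, 0.91),
    ("hypothetical pair B", 1.03, 0.94, 1.13),
]

flagged = [name for name, _, lo, hi in negative_controls if excludes_null(lo, hi)]
for name, estimate, lo, hi in negative_controls:
    status = "possible residual bias" if name in flagged else "consistent with no bias"
    print(f"{name}: {estimate:.2f} ({lo:.2f}-{hi:.2f}) -> {status}")
```

Since the true effect for a negative control is taken to be null, an estimate such as 0.82 would be read as roughly an 18% bias toward apparent benefit in analyses that share the same design and data source.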

Design Pathways and Confounding Introduction

[Figure: Causal Pathway vs. Confounding Bias]

The Scientist's Toolkit: Key Research Reagent Solutions

| Research Tool / Solution | Function in Comparative Research |

|---|---|

| Propensity Score Matching (PSM) Software (e.g., R MatchIt) | Matches exposed and unexposed subjects with similar measured characteristics to approximate the covariate balance that randomization would provide. |

| Large-Scale EHR/Claims Databases (e.g., TriNetX, IBM MarketScan) | Provide "real-world" longitudinal patient data for designing and populating observational study cohorts. |

| Clinical Trial Registries (e.g., ClinicalTrials.gov) | Source for identifying and accessing RCT protocols and summary results for comparative analysis. |

| Meta-Analysis Software (e.g., R metafor, RevMan) | Enables quantitative synthesis and statistical comparison of effect estimates across different study designs. |

| Negative Control Outcome Libraries | Curated lists of health events with no plausible link to certain exposures, used to probe for residual confounding in observational analyses. |

| Data Standardization Tools (OMOP CDM) | Common Data Model that transforms disparate observational databases into a consistent format, enabling large-scale, reproducible research. |

This guide presents comparative analyses of key historical case studies where findings from observational studies and Randomized Controlled Trials (RCTs) have converged or diverged. Framed within broader research on RCT vs. observational study comparisons, the focus is on the methodological implications for drug development and clinical practice, using Hormone Replacement Therapy (HRT) as a primary, illustrative example.

Case Study 1: Hormone Replacement Therapy (HRT) and Cardiovascular Disease

Experimental Protocols & Key Findings

1. Key Observational Studies (e.g., Nurses' Health Study)

- Methodology: Prospective cohort study design. Postmenopausal women were enrolled and their use of HRT was self-reported via questionnaires. Participants were followed for years to track the incidence of major coronary heart disease (CHD) events. Analysis adjusted for confounders like age, diet, and exercise.

- Primary Outcome: Comparison of CHD risk among HRT users versus non-users.

2. Key Randomized Controlled Trial (Women's Health Initiative - WHI)

- Methodology: Multi-center, double-blind, placebo-controlled RCT. Postmenopausal women aged 50-79 were randomized to receive either combined estrogen-plus-progestin therapy or a placebo. Follow-up was planned for 8.5 years, but the trial was halted early, after a mean follow-up of about 5.2 years, because overall risks exceeded benefits.

- Primary Outcome: Incidence of CHD (non-fatal myocardial infarction and CHD death).

Data Presentation: HRT Cardiovascular Outcomes Comparison

Table 1: Summary of Key HRT Study Findings on Coronary Heart Disease

| Study (Design) | Cohort / Arm | Relative Risk / Hazard Ratio (95% CI) for CHD | Absolute Risk Increase per 10,000 Person-Years |

|---|---|---|---|

| Nurses' Health Study (Observational) | Current users vs. Never-users | 0.61 (0.52 - 0.71) | N/A (Risk Reduction) |

| Women's Health Initiative (RCT) | Estrogen+Progestin vs. Placebo | 1.29 (1.02 - 1.63) | 7 additional events |
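Relative and absolute measures answer different questions, and a short calculation shows how the "7 additional events" column relates to event rates. The two annualized rates below are assumptions chosen to be consistent with that figure; they do not appear in the table above.

```python
# Assumed CHD event rates per 10,000 person-years (illustrative, consistent with
# the table's absolute risk increase of 7 per 10,000 person-years).
RATE_TREATED = 37.0   # estrogen plus progestin
RATE_PLACEBO = 30.0   # placebo

excess_per_10k_py = RATE_TREATED - RATE_PLACEBO
rate_ratio = RATE_TREATED / RATE_PLACEBO
person_years_per_extra_event = 10_000 / excess_per_10k_py

print(f"excess events per 10,000 person-years: {excess_per_10k_py:.0f}")
print(f"crude rate ratio: {rate_ratio:.2f}")
print(f"person-years of treatment per additional event: {person_years_per_extra_event:.0f}")
```

The crude rate ratio from these rounded rates is close to, but not identical with, the reported hazard ratio, which comes from a time-to-event model. The absolute figure shows that even a statistically significant relative increase can correspond to a small number of extra events per person-year.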

Table 2: Broader RCT vs. Observational Comparison for Selected Therapies

| Intervention & Outcome | Observational Study Trend | RCT Trend | Agreement (A) / Divergence (D) |

|---|---|---|---|

| HRT & CHD | Significant Benefit | Significant Harm | D |

| Vitamin E & CVD | Benefit | No Effect / Possible Harm | D |

| Beta-Carotene & Lung Cancer | Benefit | Harm (in smokers) | D |

| Statin Drugs & CHD | Benefit | Benefit | A |

| Folic Acid & Neural Tube Defects | Benefit | Benefit (RCTs established causal link) | A |

Visualizing the HRT Evidence Divergence

[Figure: HRT Evidence Divergence from Observational vs. RCT Studies]

The Scientist's Toolkit: Research Reagent Solutions for Clinical Epidemiology

Table 3: Essential Materials for Clinical Outcome Studies

| Item / Solution | Function in Research |

|---|---|

| Validated Patient-Reported Outcome (PRO) Questionnaires | Standardized tool for collecting self-reported data on medication use, lifestyle, and health status in observational cohorts. |

| Active Pharmaceutical Ingredient (API) & Placebo | Manufactured, blinded study drug and identical placebo for RCT randomization and intervention arms. |

| Electronic Health Record (EHR) Data Linkage Systems | Enables large-scale, longitudinal data collection on diagnoses, prescriptions, and lab results for real-world evidence studies. |

| Centralized Randomization Service | Ensures unbiased allocation of participants to treatment arms in multi-center RCTs. |

| Biobank with Serum/Plasma Samples | Repository for biomarker analysis (e.g., hormone levels, inflammatory markers) to support mechanistic sub-studies. |

| Adjudicated Clinical Endpoint Committees | Blinded expert panels to uniformly classify clinical events (e.g., MI, stroke) across all study sites, reducing outcome misclassification. |

Case Study 2: Vitamin E Supplementation

Experimental Protocol: The HOPE RCT

- Methodology: Large, simple, double-blind RCT. High-risk patients (age ≥55 with CVD or diabetes) were randomized to receive daily natural-source vitamin E (400 IU) or placebo. Follow-up period was 4.5 years. The primary outcome was a composite of MI, stroke, or CV death.

- Findings vs. Observational Data: Numerous cohort studies had suggested an inverse association between dietary vitamin E intake and CVD. The HOPE trial found no significant difference in primary composite outcome (RR 1.05, 95% CI 0.95-1.16), demonstrating a classic divergence.
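The statement that the HOPE result was not significant can be checked directly from the reported interval. The sketch below recovers the standard error of the log relative risk from the 95% CI quoted above and computes an approximate two-sided p-value. It assumes the interval is symmetric on the log scale, and the function name is illustrative.

```python
import math
from statistics import NormalDist

def z_and_p_from_ratio_ci(estimate, lower, upper, level=0.95):
    """Approximate z statistic and two-sided p-value from a ratio and its CI."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    se_log = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(estimate) / se_log
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

z_stat, p_value = z_and_p_from_ratio_ci(1.05, 0.95, 1.16)
print(f"z = {z_stat:.2f}, p = {p_value:.2f}")
```

The resulting p-value is roughly 0.34, in line with an interval that comfortably includes 1.0.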

[Figure: Vitamin E Pathway from Mechanism to Null RCT Result]

The historical divergence between observational and RCT results for HRT serves as a paradigm for understanding the limitations of non-randomized evidence for therapeutic efficacy, primarily due to unmeasured confounding and selection bias. Cases of agreement, such as with statins, often occur when effects are large and confounding is minimal. These case studies underscore the indispensable role of well-designed RCTs for establishing causal efficacy and safety before widespread clinical implementation.

This guide provides a structured comparison of RCTs and observational studies. The core trade-off lies in the high internal validity of Randomized Controlled Trials (RCTs) versus the greater external validity, or generalizability, often afforded by well-designed observational studies. The choice fundamentally affects how results are interpreted in drug development and clinical research.

Core Conceptual Comparison

Table 1: Fundamental Characteristics of RCTs vs. Observational Studies

| Feature | Randomized Controlled Trial (RCT) | Observational Study |

|---|---|---|

| Primary Strength | High Internal Validity | High External Validity/Generalizability |

| Key Principle | Random assignment to intervention | Observation of non-assigned exposures |

| Control for Confounding | Design-based (via randomization) | Analysis-based (statistical adjustment) |

| Typical Setting | Highly controlled, protocol-driven | Real-world, routine practice settings |

| Participant Selection | Strict inclusion/exclusion criteria | Broader, more representative populations |

| Cost & Duration | Typically high and long | Often lower and faster |

| Ethical Constraints | Requires equipoise; may limit questions | Can study exposures where RCTs are unethical |

Quantitative Performance Comparison

Table 2: Comparison of Effect Size Estimates from Meta-Analyses (Illustrative Examples)

| Drug/Intervention & Outcome | RCT Pooled Estimate (95% CI) | Observational Study Pooled Estimate (95% CI) | Concordance Notes |

|---|---|---|---|

| Statins for Primary Prevention of CVD | HR: 0.86 (0.77-0.96) | HR: 0.85 (0.81-0.89) | High concordance |

| Hormone Therapy & Coronary Heart Disease | HR: 1.24 (1.00-1.54) | HR: 0.83 (0.77-0.89) | Major discordance (healthy-user bias and related confounding) |

| Antidepressants & Suicide Risk | OR: 1.85 (1.20-2.85) | OR: 0.85 (0.70-1.03) | Discordant direction |

| Warfarin for Stroke Prevention in A-fib | RRR: 68% (50%-79%) | RRR: 67% (57%-75%) | High concordance |

Experimental Protocols & Methodologies

Protocol 1: Standard Parallel-Group RCT Design

- Protocol Development: Define PICO (Population, Intervention, Comparator, Outcome). Establish primary endpoint, sample size, and randomization scheme.

- Ethics & Registration: Obtain IRB approval and pre-register the trial on ClinicalTrials.gov.

- Screening & Randomization: Screen participants against inclusion/exclusion criteria. Eligible participants are randomly assigned (e.g., computer-generated block randomization) to Intervention or Control groups.

- Blinding: Implement single-, double-, or triple-blind procedures where possible.

- Intervention Delivery: Administer protocolized treatment or placebo for a fixed duration.

- Follow-up & Monitoring: Conduct scheduled visits for outcome assessment and adverse event monitoring.

- Data Analysis: Conduct intention-to-treat (ITT) analysis to preserve randomization benefits.

Protocol 2: Prospective Cohort Observational Study Design

- Research Question Formulation: Define exposure and outcome of interest.

- Cohort Assembly: Recruit a defined population, measuring baseline characteristics and exposure status (no assignment).

- Follow-up: Actively or passively follow participants over time for the occurrence of the outcome.

- Data Collection: Systematically collect data on potential confounders (e.g., age, comorbidities, lifestyle factors).

- Statistical Adjustment: Use multivariable regression, propensity score matching, or inverse probability weighting to control for measured confounders.

- Sensitivity Analysis: Conduct analyses (e.g., E-value calculation) to assess robustness to unmeasured confounding.
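The E-value mentioned in the sensitivity analysis step has a closed form on the risk-ratio scale: E = RR + sqrt(RR × (RR − 1)), applied to the inverse when RR is below 1. The sketch below applies it to the observational hormone therapy estimate from Table 2 (HR 0.83, 95% CI 0.77-0.89). Treating that hazard ratio as an approximate risk ratio is an assumption that is reasonable only when the outcome is rare.

```python
import math

def e_value(rr):
    """E-value for a point estimate on the risk-ratio scale."""
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        rr = 1 / rr  # protective estimates are inverted first
    return rr + math.sqrt(rr * (rr - 1))

def e_value_for_ci(lower, upper):
    """E-value for the confidence limit closest to the null (1.0 if the CI includes 1)."""
    if lower <= 1 <= upper:
        return 1.0
    return e_value(upper if upper < 1 else lower)

point = e_value(0.83)
limit = e_value_for_ci(0.77, 0.89)
print(f"E-value (point estimate) = {point:.2f}, E-value (CI limit) = {limit:.2f}")
```

An E-value of about 1.7 means an unmeasured confounder associated with both exposure and outcome by risk ratios of about 1.7 each could fully explain the observed association. Values this small indicate an estimate that is not robust to plausible unmeasured confounding.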

Visualizing Methodological Pathways

[Figure: RCT Participant Flow & Internal Validity Core]

[Figure: Observational Study Design & Generalizability]

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials for Comparative Effectiveness Research

| Item | Function in RCT | Function in Observational Study |

|---|---|---|

| Centralized Randomization System | Ensures allocation concealment; prevents selection bias. | Not applicable. |

| Protocolized Intervention Kits | Standardizes treatment delivery across sites to maintain fidelity. | Not applicable. |

| Validated Patient-Reported Outcome (PRO) Instruments | Measures efficacy endpoints consistently. | Can be used, but often extracted from real-world data (RWD). |

| Clinical Data Management System (CDMS) | Captures case report form (CRF) data per protocol. | Often a specialized system for electronic health record (EHR) or claims data linkage. |

| Blinding Supplies (e.g., matched placebo) | Maintains blinding of participants and investigators to prevent bias. | Not applicable. |

| Propensity Score Modeling Software (e.g., R, SAS packages) | Used in secondary/per-protocol analyses. | Critical for balancing measured confounders between exposure groups. |

| Terminology Mappings (e.g., OMOP Common Data Model) | May be used for adverse event coding. | Critical for standardizing heterogeneous RWD from multiple sources. |

| Calibrated Confounder Library | Guides collection of baseline data. | Essential for defining and measuring key confounders prior to analysis. |

Strategic Application: When to Use RCTs vs. Observational Studies in Research & Development

This guide compares how Randomized Controlled Trials (RCTs) and observational studies perform in establishing causal efficacy for drug approval. The following data, protocols, and visualizations are derived from current regulatory analyses and case studies.

Performance Comparison: RCTs vs. Observational Studies in Drug Development

Table 1: Key Metric Comparison for Causal Inference

| Metric | Randomized Controlled Trial (RCT) | Observational Study |

|---|---|---|

| Internal Validity (Causality) | High (Gold Standard) | Low to Moderate |

| Control for Confounding | High (via randomization) | Statistical adjustment only |

| Regulatory Acceptance (Primary Approval) | Required (FDA, EMA) | Generally supportive only |

| Typical Time to Completion | Longer (3-7 years for Phase III) | Shorter (1-3 years) |

| Average Cost | Very High ($10M-$100M+) | Lower (variable, often <$10M) |

| Selection Bias in Treatment Assignment | Low (randomized allocation) | High (treatment chosen in routine care) |

| Generalizability (External Validity) | Can be lower (tightly controlled) | Potentially higher (real-world data) |

| Ability to Detect Rare Adverse Events | Low (limited sample size) | Higher (large databases) |

Table 2: Case Study - Efficacy Results Comparison: Anticoagulant "X" vs. Standard of Care

| Study Type | Reported Hazard Ratio (95% CI) for Efficacy | Reported Absolute Risk Reduction | Regulatory Impact |

|---|---|---|---|

| Pivotal Phase III RCT (N=15,000) | 0.68 (0.60–0.78) | 3.2% | Full FDA/EMA approval |

| Large Retrospective Cohort (N=50,000) | 0.81 (0.75–0.88) | 1.8% | Supported post-market label update |

| Meta-Analysis of Observational Studies | 0.85 (0.79–0.92) | 1.5% | Generated hypothesis for new RCT |

Experimental Protocols

Protocol 1: Standard Pivotal Phase III RCT Design (Superiority Trial)

- Objective: To evaluate the efficacy and safety of Investigational New Drug (IND) "Y" compared to a placebo or standard-of-care control for a specified indication.

- Design: Randomized, double-blind, parallel-group, multicenter.

- Population: Precisely defined patient population (n) based on power calculation. Participants are screened against strict inclusion/exclusion criteria.

- Randomization: Eligible participants are randomly assigned (1:1 ratio) to either the intervention (IND "Y") or control group using a centralized computer system. Stratification by key prognostic factors (e.g., disease severity, age group) is employed.

- Blinding: Participants, investigators, and outcome assessors are blinded to treatment assignment. Matching placebos are used.

- Intervention: Administration of IND "Y" or control per predefined dosage and schedule for the treatment period.

- Endpoints:

  - Primary Endpoint: A single, pre-specified clinical outcome (e.g., overall survival, progression-free survival).

  - Secondary Endpoints: Additional efficacy measures and quality of life.

  - Safety Endpoints: Adverse event monitoring, laboratory tests.

- Analysis: Primary analysis is conducted on the Intent-to-Treat (ITT) population. Statistical significance is determined per the pre-specified statistical analysis plan (SAP).
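The power calculation that sets the trial size can be illustrated with the standard normal-approximation formula for comparing two proportions. This is a sketch only: the event rates, the two-sided alpha of 0.05, and the 80% power are hypothetical, and a real sample size would follow the trial's own endpoint and SAP (for example, an event-driven calculation for survival endpoints).

```python
import math
from statistics import NormalDist

def n_per_arm(p_control, p_treatment, alpha=0.05, power=0.80):
    """Participants needed per arm (1:1 allocation) to detect p_control vs. p_treatment."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_treatment) ** 2)

# Hypothetical design: 10% event rate on control, 7% expected on the investigational drug.
n_main = n_per_arm(0.10, 0.07)
n_high_power = n_per_arm(0.10, 0.07, power=0.90)
print(f"per-arm sample size: {n_main} (80% power), {n_high_power} (90% power)")
```

Small absolute differences in event rates drive the required sample into the thousands, which is one reason pivotal trials are costly and slow.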

Protocol 2: High-Quality Prospective Observational Cohort Study

- Objective: To assess the association between Drug "Z" and clinical outcomes in a real-world population.

- Design: Prospective, non-interventional, multicenter cohort study.

- Population: Patients with the condition of interest, prescribed Drug "Z" or a comparator per routine clinical practice. Inclusion criteria are broader than an RCT.

- Exposure Assessment: Documentation of drug initiation, dosage, and duration from medical records or registries.

- Covariate Data Collection: Extensive baseline data collection on potential confounders (e.g., demographics, comorbidities, concomitant medications, disease severity scores).

- Outcome Assessment: Follow-up for pre-defined clinical outcomes via registry linkage, scheduled study visits, or electronic health record (EHR) review.

- Analysis: Use of multivariable regression, propensity score matching, or weighting to adjust for measured confounding. Sensitivity analyses are conducted to assess robustness.

The Scientist's Toolkit: Research Reagent Solutions for Clinical Research

Table 3: Essential Materials for Clinical Trial & Observational Research

| Item | Function in Research | Example/Specification |

|---|---|---|

| Randomization Service/System | Ensures unbiased allocation of participants to study arms in an RCT. | Centralized interactive web/voice response systems (IWRS/IVRS). |

| Clinical Trial Management System (CTMS) | Manages operational aspects: participant tracking, site monitoring, document flow. | Veeva Vault CTMS, Oracle Siebel CTMS. |

| Electronic Data Capture (EDC) System | Collects, validates, and manages clinical trial data electronically per regulatory standards. | Medidata Rave, Oracle Clinical. |

| Standardized Case Report Forms (eCRFs) | Digital forms within EDC ensuring consistent and complete data collection across sites. | Protocol-specific modules (demographics, efficacy, safety). |

| Biomarker Assay Kits | Quantify pharmacodynamic or predictive biomarkers from blood/tissue samples. | Validated, GLP-compliant ELISA, PCR, or NGS kits from vendors like Qiagen, Roche. |

| Clinical Outcome Assessment (COA) Tools | Measure patient-reported, clinician-reported, or performance-based outcomes. | Validated questionnaires (e.g., EQ-5D for quality of life), wearable sensor data. |

| Real-World Data (RWD) Linkage Platforms | Integrate claims data, EHRs, and registries for observational study analysis. | Platforms like TriNetX, Flatiron Health, or institution-specific EHR analytics. |

| Statistical Analysis Software | Perform pre-specified efficacy and safety analyses per SAP. | SAS (industry standard), R, Python (with validated environments). |

Within the ongoing research thesis comparing Randomized Controlled Trial (RCT) and observational study results, this guide critically examines the role of observational methods in pharmacovigilance and outcomes research. While RCTs establish efficacy under ideal conditions, observational studies are the indispensable tool for understanding real-world effectiveness, long-term safety, and rare adverse events post-approval.

Performance Comparison: RCTs vs. Observational Studies in Key Domains

Table 1: Capability Comparison of RCTs and Observational Studies

| Domain | RCT Performance | Observational Study Performance | Key Supporting Evidence |

|---|---|---|---|

| Internal Validity (Causality) | High. Randomization minimizes confounding. | Low to Moderate. Susceptible to confounding and bias. | Concato et al. (2000)*: Found similar treatment effect estimates for RCTs and observational studies when methodologies are sound. |

| Generalizability (Real-World) | Low. Strict inclusion/exclusion criteria. | High. Uses diverse, real-world patient populations. | RCT-DUPLICATE Initiative (2019-2023)*: Demonstrated that emulated trials from claims data could approximate RCT results for certain outcomes. |

| Detection of Rare Adverse Events | Very Low. Limited by sample size and duration. | High. Can leverage large databases (millions of patients). | Multiple PMS studies detecting rare events like hepatic failure with troglitazone or cardiovascular risk with rofecoxib (Vioxx)*. |

| Assessment of Long-Term Outcomes | Low. Typically short follow-up (6 months to 2 years). | High. Can track outcomes over decades via registries. | Breast cancer registries showing 20-year survival impacts of different adjuvant therapies*. |

| Cost & Timeliness | High cost, slow enrollment and completion. | Lower cost, faster execution using existing data. | |

*Information sourced from current literature via live search.

Experimental Protocols for Key Observational Study Designs

Protocol 1: High-Dimensional Propensity Score (hdPS) Adjusted Cohort Study

Objective: To compare the risk of a specific adverse event (e.g., myocardial infarction) between two drug therapies in administrative claims data.

- Cohort Definition: Identify all patients with a new prescription for Drug A or Drug B within the database timeframe. Apply eligibility criteria (e.g., age, continuous enrollment).

- Outcome Identification: Define a validated algorithm (ICD codes + procedures +/- pharmacy claims) to identify incident MI events post-drug initiation.

- Covariate Assessment: Use the hdPS algorithm to automatically screen hundreds of diagnosis, procedure, and drug codes from baseline periods to identify potential confounders.

- Propensity Score (PS) Estimation: Fit a model predicting treatment assignment (Drug A vs. B) based on identified covariates. Use PS for matching, weighting, or stratification.

- Effect Estimation: Calculate the hazard ratio for MI in the balanced cohorts using a Cox proportional hazards model. Perform sensitivity analyses (e.g., rule-out sensitivity analysis).
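
The covariate-screening step can be illustrated with the Bross bias multiplier, which the published hdPS approach uses to prioritize candidate codes before the propensity model is fit. The sketch below assumes that formulation; the candidate code names and their prevalences and relative risks are invented for illustration and do not come from any database.

```python
import math


def bross_bias_multiplier(p_c1, p_c0, rr_cd):
    """Bross bias multiplier for a binary covariate.

    p_c1: covariate prevalence among Drug A initiators
    p_c0: covariate prevalence among Drug B initiators
    rr_cd: covariate-outcome relative risk
    """
    if rr_cd < 1:
        rr_cd = 1 / rr_cd  # use the inverse so protective covariates rank symmetrically
    return (p_c1 * (rr_cd - 1) + 1) / (p_c0 * (rr_cd - 1) + 1)


# Hypothetical candidates: (prevalence in A, prevalence in B, covariate-outcome RR).
candidates = {
    "dx_hypertension": (0.40, 0.20, 2.0),
    "rx_statin": (0.30, 0.30, 1.8),
    "px_ecg": (0.10, 0.25, 1.5),
}

# Rank by |log(bias multiplier)|: larger values mean more potential confounding.
ranked = sorted(
    candidates.items(),
    key=lambda item: abs(math.log(bross_bias_multiplier(*item[1]))),
    reverse=True,
)

for name, params in ranked:
    print(f"{name}: bias multiplier = {bross_bias_multiplier(*params):.3f}")
```

A covariate that is equally prevalent in both arms has a multiplier of 1 and ranks last, regardless of how strongly it predicts the outcome.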

Protocol 2: Self-Controlled Case Series (SCCS) for Vaccine Safety

Objective: To assess if a specific vaccine is associated with an increased risk of a rare neurological event (e.g., Guillain-Barré Syndrome - GBS).

- Case Selection: Identify all patients within a linked healthcare database with a recorded diagnosis of GBS (using a specific case definition).

- Exposure Definition: Obtain vaccination dates for the target vaccine from immunization records.

- Risk & Control Periods: For each case, define a pre-specified "risk window" following vaccination (e.g., 1-42 days). The remaining observation period for that individual serves as the "control window."

- Analysis: Using a conditional Poisson regression model, compare the incidence rate of GBS during the risk window to the rate during the control window within the same individuals. This inherently controls for time-invariant confounders.
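
With a single risk window and no age or season adjustment, the conditional Poisson likelihood reduces to a comparison of where each case's events fall relative to the time spent in each window. The sketch below solves that simplified score equation by bisection; it assumes one risk window per case, and the case data are hypothetical. A real analysis would use a dedicated SCCS implementation with age and calendar-time terms.

```python
import math


def sccs_relative_incidence(cases, lo=-10.0, hi=10.0, tol=1e-10):
    """Conditional-likelihood estimate of the risk-window relative incidence.

    cases: list of (events_in_risk, events_in_control, risk_days, control_days),
    one tuple per individual who experienced the event.
    """

    def score(log_rho):
        rho = math.exp(log_rho)
        total = 0.0
        for k_risk, k_ctrl, d_risk, d_ctrl in cases:
            # Probability that an event falls in the risk window, given it occurred.
            p_risk = rho * d_risk / (rho * d_risk + d_ctrl)
            total += k_risk - (k_risk + k_ctrl) * p_risk
        return total

    # The score decreases monotonically in log(rho), so bisection finds its root.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    return math.exp((lo + hi) / 2)


# Hypothetical cases: 42-day risk window, 323 control days in a one-year observation period.
cases = [(1, 0, 42, 323)] * 3 + [(0, 1, 42, 323)] * 7
relative_incidence = sccs_relative_incidence(cases)
print(f"Relative incidence in risk window: {relative_incidence:.2f}")
```

Only people who experienced the event contribute, and each person is compared with themselves, which is why fixed characteristics such as sex or genetics cancel out of the estimate.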

Visualizing Observational Study Workflows

Diagram Title: Observational Study Design and Analysis Workflow

Diagram Title: Drug Target Pathways and Rare Adverse Event Hypotheses

The Scientist's Toolkit: Research Reagent Solutions for Pharmacoepidemiology

Table 2: Essential Tools for Modern Observational Drug Research

| Item | Function in Research |

|---|---|

| High-Dimensional Propensity Score (hdPS) Algorithms | Software packages (e.g., in R, SAS) that automate the process of identifying and adjusting for hundreds of potential confounders in administrative data. |

| Validated Phenotyping Algorithms | Code sets (e.g., ICD-10, CPT, drug codes) with known sensitivity/specificity to accurately identify diseases/outcomes in electronic health records (EHR) or claims. |

| Common Data Models (CDM) e.g., OMOP, Sentinel | Standardized structures for healthcare data that enable reusable analytics and distributed network studies across multiple databases. |

| Sensitivity Analysis Packages (e.g., E-value calculation, quantitative bias analysis) | Statistical tools to assess how robust study results are to unmeasured confounding or other biases. |

| Data Linkage Systems | Secure methods to link different data sources (e.g., pharmacy claims to cancer registries, EHR to death indices) for comprehensive follow-up. |

| Natural Language Processing (NLP) Tools | Software to extract unstructured clinical information (e.g., from physician notes) for outcome or confounder ascertainment. |

Observational studies are not substitutes for RCTs but are complementary tools within the evidence generation ecosystem. Their unique strength in post-marketing surveillance, assessing long-term outcomes, and detecting rare safety signals is critical for a complete understanding of a medical product's profile. The convergence of large-scale data, sophisticated methodologies like hdPS and SCCS, and rigorous sensitivity analyses is enhancing the reliability of observational evidence, allowing it to address questions that RCTs cannot.

Within the ongoing research thesis comparing Randomized Controlled Trial (RCT) and observational study results, hybrid and pragmatic trial designs have emerged as critical methodologies. These designs aim to bridge the internal validity of traditional RCTs with the generalizability and efficiency of real-world evidence. This guide compares the performance of these emerging designs against traditional RCTs and purely observational studies.

Performance Comparison: Trial Design Attributes

Table 1: Comparison of Key Design Attributes and Outputs

| Design Attribute | Traditional RCT | Pure Observational Study | Hybrid/Pragmatic Trial |

|---|---|---|---|

| Primary Goal | Efficacy (Explanatory) | Effectiveness (Descriptive) | Effectiveness with some efficacy assessment |

| Randomization | Strict, usually 1:1 | None | Often modified (e.g., cluster, stepped-wedge) |

| Patient Population | Highly selective, homogeneous | Broad, heterogeneous | Broader than RCT, but may have some criteria |

| Intervention Control | Strict protocol, blinded | Usual care, no control | Flexible protocol, often open-label |

| Primary Endpoint | Surrogate or clinical, validated | Real-world outcomes (e.g., hospitalization) | Patient-centered, clinically meaningful |

| Setting | Specialized clinical centers | Diverse real-world settings | Integrated into routine care settings |

| Internal Validity | High (Gold Standard) | Low (confounding) | Moderate to High |

| External Validity | Low | High | Moderate to High |

| Cost & Duration | High, Long | Lower, Shorter | Moderate, Variable |

| Regulatory Acceptance | Established for pivotal trials | Supportive evidence, post-market | Growing acceptance for specific contexts |

Table 2: Illustrative Data from Comparative Studies (Hypothetical Composite)

| Study & Design | Reported Treatment Effect (HR or OR) | 95% Confidence Interval | P-value | Estimated Trial Duration | Participant N |

|---|---|---|---|---|---|

| TRAD-RCT (Traditional) | HR: 0.65 | 0.50 - 0.85 | 0.001 | 60 months | 5,000 |

| OBS-COHORT (Observational) | HR: 0.82 | 0.70 - 0.96 | 0.012 | 24 months (analysis) | 50,000 |

| PRAG-CT (Pragmatic Hybrid) | HR: 0.71 | 0.58 - 0.87 | 0.001 | 36 months | 15,000 |

Experimental Protocols for Key Hybrid Designs

Protocol: Stepped-Wedge Cluster Randomized Trial

Objective: To evaluate the implementation effectiveness of a new digital health intervention across multiple clinics while ensuring all sites eventually receive it.

Methodology:

- Clustering: Define participating healthcare centers as clusters.

- Randomization Sequence: Randomly assign clusters to sequential "steps" when they will cross over from control (standard care) to intervention phase.

- Rollout: At each pre-defined time step, a new group of clusters initiates the intervention. Data is collected from all clusters in every period.

- Analysis: Compare outcomes in the control and intervention periods across the entire cohort, using statistical models (e.g., generalized linear mixed models) that account for clustering and temporal trends.
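
The randomization sequence and rollout can be represented as a cluster-by-period exposure matrix. The sketch below is one simple way to generate such a schedule, assuming an all-control baseline period and an equal number of clusters per step; the clinic names, step count, and seed are hypothetical.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed=7):
    """Return {cluster: [0/1 per period]} for n_steps + 1 periods.

    Period 0 is all-control; each cluster switches to the intervention (1)
    at its randomly assigned step and stays on it afterwards.
    """
    rng = random.Random(seed)
    order = list(clusters)
    rng.shuffle(order)  # random order determines which step each cluster gets
    schedule = {}
    for idx, cluster in enumerate(order):
        step = 1 + idx % n_steps  # spreads clusters evenly across steps
        schedule[cluster] = [int(period >= step) for period in range(n_steps + 1)]
    return schedule


clinics = [f"clinic_{i}" for i in range(1, 7)]
schedule = stepped_wedge_schedule(clinics, n_steps=3)
for clinic in clinics:
    print(clinic, schedule[clinic])
```

The resulting matrix is the exposure indicator that a mixed model would use alongside period effects and a cluster random effect.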

Protocol: Registry-Based Randomized Controlled Trial (RRCT)

Objective: To leverage existing patient registries for participant identification, baseline data collection, and follow-up within an RCT framework.

Methodology:

- Registry as Platform: Use an established, population-based clinical registry (e.g., for heart disease) as the trial infrastructure.

- Screening & Consent: Identify eligible patients from the registry. Obtain informed consent for randomization.

- Randomization: Eligible, consenting patients are randomized to treatment or control via an integrated system.

- Intervention & Follow-up: Deliver the intervention. Follow-up and outcome data are collected primarily through the existing registry mechanisms, supplemented as needed.

- Analysis: Conduct intention-to-treat analysis using high-quality registry data linked to treatment assignment.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Implementing Hybrid/Pragmatic Trials

| Item / Solution | Function in Hybrid/Pragmatic Trials |

|---|---|

| Electronic Health Record (EHR) Integration Platforms | Enables seamless identification of potential participants, data extraction for baseline characteristics, and collection of routine outcome measures within the care setting. |

| Patient Registry Databases | Serves as a pre-existing cohort for RRCTs, providing longitudinal data and a framework for efficient recruitment and follow-up. |

| Centralized Randomization Services (IVRS/IWRS) | Ensures robust allocation concealment and treatment assignment management in decentralized trial settings. |

| Clinical Outcome Assessments (COAs) & ePRO Tools | Facilitates collection of patient-centered outcomes (e.g., quality of life) directly from participants via digital devices, crucial for pragmatic endpoints. |

| Data Linkage & Harmonization Software | Critical for merging data from disparate sources (EHR, registry, pharmacy, lab) into a unified analysis-ready dataset. |

| Real-World Data (RWD) Quality Assessment Tools | Provides frameworks and software to evaluate the fitness-for-use of RWD (completeness, accuracy, provenance) for research purposes. |

| Statistical Packages for Complex Designs | Specialized software (e.g., R, SAS with mixed models) to handle clustering, stepped-wedge analysis, propensity score weighting, and missing data imputation. |

Utilizing Real-World Data (RWD) and Electronic Health Records (EHRs) for Hypothesis Generation

Within the critical research on comparing Randomized Controlled Trial (RCT) and observational study results, the role of RWD and EHRs in generating robust, testable hypotheses is paramount. This guide compares methodologies for hypothesis generation, focusing on experimental data that validates approaches using curated EHR-derived datasets against traditional sources.

Performance Comparison: Hypothesis Generation Methods

The following table summarizes the performance metrics of different data sources and analytical methods for generating candidate hypotheses in drug safety and efficacy research.

Table 1: Comparison of Hypothesis Generation Performance Metrics

| Data Source / Method | Precision of Candidate Associations (%) | Recall of Validated Findings (%) | Time to Initial Hypothesis (Weeks) | Computational Resource Demand (AU) | Reference Validation Rate in Subsequent RCTs (%) |

|---|---|---|---|---|---|

| EHR-Based Phenotype Algorithm (High-Fidelity) | 72 | 65 | 2-4 | 85 | 45 |

| EHR-Based (Simple Code Query) | 35 | 88 | 0.5-1 | 15 | 18 |

| Traditional Spontaneous Reporting System (SRS) | 28 | 92 | 1-2 | 10 | 22 |

| Prospective Registry Data | 68 | 60 | 12-24 | 50 | 52 |

| Linked Claims-EHR Database | 75 | 58 | 4-8 | 95 | 48 |

Experimental Protocols for Key Cited Studies

Protocol 1: Validating an EHR-Driven Hypothesis on Drug Repurposing

Objective: To generate and initially test a hypothesis that metformin is associated with reduced incidence of a specific cancer (e.g., colorectal) using EHR data, prior to RCT design.

- Cohort Definition: Identify adult patients with Type 2 Diabetes in the EHR system (≥10,000 patients). Define the exposed cohort as those prescribed metformin for ≥6 months. The unexposed cohort includes those on other anti-diabetic agents (e.g., sulfonylureas, insulin).

- Phenotyping: Use a high-fidelity algorithm combining ICD codes, medication records, pathology reports, and natural language processing (NLP) on radiology notes to confirm incident colorectal cancer outcomes.

- Covariate Adjustment: Extract data on age, sex, BMI, smoking status, HbA1c, and comorbidities. Apply propensity score matching to balance cohorts.

- Analysis: Calculate adjusted Hazard Ratios (HR) using Cox proportional hazards models.

- Validation Check: Compare signal strength against an external claims database.

Protocol 2: Comparing Safety Signals from EHRs vs. Spontaneous Reports

Objective: To compare the precision of novel adverse drug reaction (ADR) signals generated from a structured EHR analysis versus traditional SRS data mining for a new biologic drug.

- EHR Arm: Perform a longitudinal analysis of lab values (e.g., ALT for hepatotoxicity) in patients prescribed the drug. Use a sequence symmetry analysis (SSA) to detect significant increases in lab abnormalities post-drug initiation.

- SRS Arm: Apply disproportionality analysis (e.g., Proportional Reporting Ratio, PRR) to the FDA Adverse Event Reporting System (FAERS) data for the same drug.

- Benchmarking: Use a gold-standard reference set of known ADRs from the drug's label. Calculate precision (positive predictive value) and recall (sensitivity) for each method.
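
The two quantities this protocol relies on, the Proportional Reporting Ratio and precision/recall against a reference set, are simple to compute once the counts are in hand. The sketch below shows both; the report counts and event names are hypothetical and are not drawn from FAERS or any drug label.

```python
def proportional_reporting_ratio(a, b, c, d):
    """PRR from a 2x2 table of spontaneous reports.

    a: target drug, event of interest      b: target drug, all other events
    c: all other drugs, event of interest  d: all other drugs, all other events
    """
    return (a / (a + b)) / (c / (c + d))


def precision_recall(flagged_signals, reference_adrs):
    """Precision (PPV) and recall (sensitivity) against a reference set."""
    flagged, reference = set(flagged_signals), set(reference_adrs)
    true_positives = len(flagged & reference)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    return precision, recall


# Hypothetical report counts for one drug-event pair.
prr = proportional_reporting_ratio(a=20, b=980, c=100, d=98900)

# Hypothetical signals flagged by one method vs. labeled ADRs.
flagged = {"hepatotoxicity", "rash", "headache", "nausea"}
labeled = {"hepatotoxicity", "rash", "neutropenia"}
precision, recall = precision_recall(flagged, labeled)

print(f"PRR = {prr:.1f}, precision = {precision:.2f}, recall = {recall:.2f}")
```

Running the same `precision_recall` function on the EHR arm and the SRS arm against an identical reference set keeps the comparison between methods like-for-like.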

Visualizations

Title: RWD Hypothesis Generation and Refinement Workflow

Title: Iterative Cycle Between RWD Hypotheses and RCTs

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for RWD/EHR Hypothesis Generation Research

| Item / Solution | Function in Research |

|---|---|

| OMOP Common Data Model (CDM) | A standardized data model that harmonizes disparate EHR and claims data, enabling portable analysis code and large-scale network studies. |

| Phenotype Algorithms (PheKB Repository) | Shared, validated code for accurately identifying patient cohorts (e.g., "heart failure with preserved ejection fraction") from EHR data. |

| Natural Language Processing (NLP) Engines (e.g., CLAMP, cTAKES) | Extracts structured clinical information (symptoms, disease status) from unstructured physician notes and reports. |

| High-Performance Computing (HPC) or Cloud (AWS, GCP) | Provides the computational power needed for large-scale data processing, propensity score matching, and complex modeling across millions of records. |

| Privacy-Preserving Record Linkage (PPRL) Tools | Enables linking patient records across different databases (EHR to registry) without exposing direct identifiers, crucial for longitudinal follow-up. |

| Biobank Data with EHR Linkage (e.g., UK Biobank, All of Us) | Combines deep genetic and molecular data with longitudinal clinical EHR data, enabling pharmacogenomic and biomarker-driven hypothesis generation. |

This guide compares the application of Randomized Controlled Trials (RCTs) and observational studies across drug development phases, framed within a thesis comparing RCT vs. observational study results. Performance is measured by key parameters like bias control, cost, timelines, and suitability for specific research questions.

Comparison of Study Designs Across Development Phases

| Development Phase | Primary Study Type | Key Performance Metrics (vs. Alternative) | Supporting Data / Known Limitations |

|---|---|---|---|

| Discovery & Preclinical | In vitro/vivo experiments (Observational) | Speed & Mechanistic Insight: Enables high-throughput screening and pathway analysis. RCTs are not applicable. | NCI-60 screen tests ~10,000 compounds yearly. Target validation relies on knockout/knockdown models (observational) to establish causal links before RCTs. |

| Phase I (Safety) | Small RCT (single/multiple ascending dose, SAD/MAD) | Safety Signal Detection in Controlled Setting: Provides baseline pharmacokinetic (PK) data with less confounding than comparisons against historical controls (observational). | Typical n=20-100 healthy volunteers. PK parameters (Cmax, AUC) have <20% CV in controlled settings vs. >30% CV in real-world data. |

| Phase II (Efficacy) | RCT (often blinded) | Proof-of-Concept Efficacy: Isolates drug effect from placebo/background. Superior to single-arm observational studies for efficacy estimation. | Sample size ~100-300 patients. RCTs show ~15-25% placebo response in CNS trials, confounding observational assessments. |

| Phase III (Confirmatory) | Large, multicenter RCT (Gold Standard) | Regulatory Confidence & Bias Minimization: Randomization balances known and unknown confounders in expectation. Serves as the primary evidence for regulatory approval. | Typically required by FDA/EMA. Meta-analyses suggest observational studies may over- or underestimate treatment effects by 10-40% relative to RCTs addressing the same question. |

| Phase IV (Pharmacovigilance) | Observational Studies (Cohort, Case-Control) | Real-World Effectiveness & Rare ADR Detection: Captures long-term, diverse patient use. RCTs are unethical/impractical for rare/long-term risks. | FDA Sentinel Initiative analyzed >100 million patients to detect rare CV events post-approval (incidence <0.1%), impractical for RCTs. |

Experimental Protocols for Key Cited Comparisons

1. Protocol: Meta-Analysis Comparing RCT and Observational Study Effect Estimates

- Objective: Quantify systematic differences in treatment effect estimates between RCTs and observational studies addressing the same clinical question.

- Methodology:

  - Literature Search: Identify paired questions (e.g., hormone replacement therapy & CVD) where both RCTs and large observational studies exist.

  - Data Extraction: For each study pair, extract hazard ratios (HR) or odds ratios (OR) with confidence intervals.

  - Statistical Comparison: Calculate the ratio of odds ratios (ROR) or difference in effect sizes. Perform a meta-analysis of these differences across multiple paired questions.

- Outcome Measure: Pooled estimate of the divergence between observational and RCT results.
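
The statistical comparison step can be sketched as follows: recover each estimate's standard error from its 95% CI on the log scale, take the difference of log effects to obtain a log ratio of ratios, and pool across question pairs by inverse variance. This assumes the paired estimates are independent and uses a fixed-effect model for brevity; the effect estimates below are hypothetical.

```python
import math

Z_95 = 1.959964  # two-sided 95% normal quantile


def log_estimate_and_se(point, lower, upper):
    """Recover the log effect and its standard error from a ratio and its 95% CI."""
    return math.log(point), (math.log(upper) - math.log(lower)) / (2 * Z_95)


def log_ratio_of_ratios(observational, rct):
    """Log of (observational estimate / RCT estimate) and its standard error."""
    log_obs, se_obs = log_estimate_and_se(*observational)
    log_rct, se_rct = log_estimate_and_se(*rct)
    return log_obs - log_rct, math.sqrt(se_obs ** 2 + se_rct ** 2)


def pool_fixed_effect(log_rors):
    """Inverse-variance pooling of (log ROR, SE) pairs."""
    weights = [1 / se ** 2 for _, se in log_rors]
    pooled = sum(w * est for w, (est, _) in zip(weights, log_rors)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


# Hypothetical paired questions: (observational, RCT), each as (point, lower, upper).
pairs = [
    ((0.82, 0.70, 0.96), (0.65, 0.50, 0.85)),
    ((0.90, 0.80, 1.01), (0.88, 0.75, 1.03)),
]

log_rors = [log_ratio_of_ratios(obs, rct) for obs, rct in pairs]
first_ror = math.exp(log_rors[0][0])
pooled_log_ror, pooled_se = pool_fixed_effect(log_rors)
pooled_ror = math.exp(pooled_log_ror)

print(f"Pooled ratio of ratios: {pooled_ror:.2f}")
```

A pooled ratio above 1 for a protective treatment would indicate that the observational estimates sit closer to the null than the trial estimates. A random-effects model would usually be preferred when the paired questions are clinically heterogeneous.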

2. Protocol: Real-World Evidence (RWE) Validation Against RCT Outcomes

- Objective: Assess the reliability of observational study designs (e.g., propensity score-matched cohorts) to replicate RCT findings.

- Methodology:

  - Cohort Construction: Using electronic health records/claims data, emulate the target trial. Apply inclusion/exclusion criteria from a reference RCT.

  - Confounder Control: Use propensity score matching or weighting to balance baseline characteristics between treatment groups.

  - Analysis: Estimate the treatment effect. Compare the point estimate and 95% CI to the known RCT result.

- Outcome Measure: Concordance/discordance of the effect estimate and its statistical significance with the RCT benchmark.

Visualizations

Diagram 1: Study Design Application Across Drug Development Timeline

Diagram 2: Evidence Generation Focus: Internal vs External Validity

The Scientist's Toolkit: Key Research Reagent Solutions

| Reagent / Solution | Primary Function in Development | Typical Application Phase |

|---|---|---|

| Recombinant Target Proteins | High-purity protein for binding assays (SPR, ITC) and high-throughput screening (HTS). | Discovery, Preclinical |

| Validated Antibodies (Phospho-specific) | Detect target engagement and downstream signaling pathway modulation in cellular assays. | Discovery, Preclinical, Biomarker Dev. |

| LC-MS/MS Kits | Quantify drug and metabolite concentrations in biological matrices (plasma, tissue) for PK/PD. | Preclinical, Phase I-IV |

| PCR Arrays / RNA-seq Kits | Profile gene expression changes in response to treatment; identify biomarkers of efficacy/toxicity. | Preclinical, Phase I/II |

| Propensity Score Matching Software (e.g., R 'MatchIt') | Statistical package to balance treatment groups in observational studies, mimicking randomization. | Phase IV (RWE Generation) |

| Validated Clinical Assay Kits | FDA-cleared/CE-marked diagnostic tests to measure validated biomarkers or therapeutic drug monitoring. | Phase II-IV |

Resolving Conflicts: Troubleshooting Discrepancies and Optimizing Study Designs

Within the ongoing research comparing Randomized Controlled Trial (RCT) and observational study results, a persistent challenge is diagnosing why estimates of a treatment's effect diverge. This guide compares the performance of methodological approaches in identifying three key sources of disagreement: confounding, selection bias, and channeling bias. We present experimental data from simulation studies and applied examples to objectively evaluate diagnostic tools.

Comparative Performance of Diagnostic Methods

| Diagnostic Method | Target Bias | Sensitivity (%) | Specificity (%) | Key Supporting Study / Simulation |

|---|---|---|---|---|

| Negative Control Outcome | Unmeasured Confounding | 85-92 | 88-95 | Lipsitch et al., 2010; Simulation A |

| Positive Control Outcome | Overall Measurement Bias | 90-96 | 82-90 | Tchetgen Tchetgen, 2020 |

| Prior Event Rate Comparison | Selection Bias | 75-85 | 80-88 | Xiao et al., 2022; Simulation B |

| Channeling Balance Assessment | Channeling Bias | 88-94 | 85-92 | Lund et al., 2021 |

| Instrumental Variable Analysis | Unmeasured Confounding | 80-88 | 85-90 | Hernán & Robins, 2006 |

Detailed Experimental Protocols

Protocol A: Simulation Study for Confounding Detection

- Objective: Quantify the sensitivity of negative control outcomes to detect residual confounding.

- Data Generation: Simulate a cohort of N=50,000 patients with:

  - A known binary treatment (T) assignment influenced by observed (X) and unobserved (U) covariates.

  - A primary outcome (Y) with a true null effect relative to T.

  - A negative control outcome (NCO) known a priori not to be caused by T but sharing the same confounding structure.

- Analysis: Estimate the risk ratio (RR) between T and the NCO.

- Performance Metric: An NCO risk ratio whose 95% CI excludes 1 flags residual confounding. Repeat the simulation 1,000 times, with and without the unobserved confounder U, to calculate sensitivity and specificity against the known simulation truth.
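
A scaled-down version of this simulation fits in a few lines. The sketch below makes several simplifying assumptions that are not in the protocol: a single binary unobserved confounder U, no observed covariates X, smaller cohorts and fewer repetitions so it runs quickly, and "RR differs from 1" operationalized as a 95% CI that excludes 1. All parameter values are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def nco_risk_ratio(n=20000, confounding=1.0, seed=0):
    """Simulate one cohort; return (RR, lower, upper) for treatment vs. the NCO.

    `confounding` is the log-odds effect of U on both treatment and the NCO.
    Treatment never affects the NCO, so any association reflects confounding.
    """
    rng = random.Random(seed)
    events = [0, 0]    # NCO events among untreated / treated
    patients = [0, 0]  # cohort size among untreated / treated
    for _ in range(n):
        u = 1 if rng.random() < 0.5 else 0
        t = 1 if rng.random() < sigmoid(-0.5 + confounding * u) else 0
        nco = 1 if rng.random() < sigmoid(-2.0 + confounding * u) else 0
        events[t] += nco
        patients[t] += 1
    rr = (events[1] / patients[1]) / (events[0] / patients[0])
    se = math.sqrt(1 / events[1] - 1 / patients[1] + 1 / events[0] - 1 / patients[0])
    return rr, rr * math.exp(-1.96 * se), rr * math.exp(1.96 * se)


def detection_rate(n_sims, n, confounding):
    """Share of simulated cohorts in which the NCO test flags confounding."""
    flagged = 0
    for seed in range(n_sims):
        _, lower, upper = nco_risk_ratio(n, confounding, seed)
        flagged += not (lower <= 1.0 <= upper)
    return flagged / n_sims


sensitivity = detection_rate(n_sims=20, n=5000, confounding=1.0)
false_positive_rate = detection_rate(n_sims=20, n=5000, confounding=0.0)
print(f"sensitivity ~ {sensitivity:.2f}, specificity ~ {1 - false_positive_rate:.2f}")
```

Setting `confounding=0.0` produces the unconfounded scenarios needed to estimate specificity; without them, only sensitivity can be calculated.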

Protocol B: Prior Event Rate Comparison for Selection Bias

- Objective: Detect selection bias by comparing baseline event rates between treatment cohorts pre-exposure.

- Data Source: Large healthcare database (e.g., claims, EHR) with a 12-month baseline period.

- Cohort Definition: New users of Drug A vs. Drug B for the same indication.

- Analysis: Calculate the incidence rate of the study outcome (or a proxy) during the baseline period before treatment initiation. Standardize rates (e.g., using IPTW) for observed covariates.

- Interpretation: A significant difference in standardized baseline rates suggests differential selection into treatment cohorts, indicating potential selection bias.
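
The baseline comparison, and the related prior event rate ratio (PERR) adjustment listed in the toolkit below, can be sketched with simple rate arithmetic. The event counts and person-years are hypothetical, and the sketch omits the covariate standardization and the significance test that the protocol calls for.

```python
def rate(events, person_years):
    """Crude incidence rate."""
    return events / person_years


def prior_event_rate_ratio(prior_a, prior_b, post_a, post_b):
    """Return (prior rate ratio, post rate ratio, PERR-adjusted ratio).

    Each argument is an (events, person_years) tuple for Drug A or Drug B,
    measured before (prior) or after (post) treatment initiation.
    """
    prior_rr = rate(*prior_a) / rate(*prior_b)
    post_rr = rate(*post_a) / rate(*post_b)
    return prior_rr, post_rr, post_rr / prior_rr


# Hypothetical counts over a 12-month baseline and the follow-up period.
prior_rr, post_rr, perr_adjusted = prior_event_rate_ratio(
    prior_a=(60, 10_000), prior_b=(40, 10_000),
    post_a=(90, 10_000), post_b=(50, 10_000),
)
print(f"prior RR = {prior_rr:.2f}, post RR = {post_rr:.2f}, adjusted = {perr_adjusted:.2f}")
```

A prior rate ratio well away from 1 indicates that the cohorts already differed before anyone was treated. Dividing the post-initiation ratio by it is only valid under the assumption that this baseline imbalance is stable over time and that prior events do not influence treatment choice.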

Visualizing Bias Structures and Diagnostics

Diagram 1: Confounding Bias Structure

Diagram 2: Selection Bias via Loss to Follow-up

Diagram 3: Channeling Bias Mechanism

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Analytical Tools for Bias Diagnosis

| Tool / Solution | Primary Function in Diagnosis | Example/Provider |

|---|---|---|

| High-Dimensional Propensity Score (hdPS) | Adjusts for hundreds of empirically identified covariates to reduce confounding. | hdps R package (Schneeweiss et al.) |

| Negative Control Outcome (NCO) | A variable unaffected by treatment; association with treatment signals unmeasured confounding. | Clinical knowledge; database phenotyping algorithms. |

| Prior Event Rate Ratio (PERR) | Compares outcome rates pre-treatment to detect selection bias. | Custom analysis in cohort studies. |

| Structured Missingness Diagrams | Visualizes selection mechanisms leading to missing data (MNAR). | DAGitty software; naniar R package (gg_miss functions). |

| Balance Diagnostics (e.g., SMD) | Quantifies post-adjustment covariate balance; imbalance suggests residual channeling/confounding. | cobalt R package, TableOne. |

| Falsification (Placebo) Tests | A suite of diagnostic tests using negative controls for exposures and outcomes. | Clinical epidemiology frameworks. |

This comparison guide, framed within the broader thesis on RCT vs. observational study results, evaluates three advanced methodologies designed to mitigate confounding in observational research, a critical endeavor for drug development professionals and researchers.

Methodological Comparison & Performance Data

The following table summarizes the core approach, key assumptions, and comparative performance from recent empirical studies that applied each method to the same clinical question (e.g., the comparative effectiveness of SGLT2 inhibitors vs. DPP-4 inhibitors on heart failure hospitalization).

| Method | Primary Mechanism | Key Assumptions | Estimated Hazard Ratio (HR) for Heart Failure (95% CI) [Example] | Closeness to RCT Estimate (Reference) | Key Limitations |

|---|---|---|---|---|---|

| Propensity Score Matching (PSM) | Creates a pseudo-population where treated and untreated subjects have similar measured covariates. | Ignorability (all confounders measured), overlap, and correct model specification. | 0.67 (0.59, 0.76) | Moderate. Can retain residual bias from unmeasured confounding. | Fails if key confounders are unobserved. Prone to model misspecification. |

| Instrumental Variable (IV) Analysis | Uses a variable (instrument) affecting treatment but not outcome except via treatment to estimate causal effect. | Instrument relevance, independence, and exclusion restriction. | 0.71 (0.61, 0.83) | Variable. Highly dependent on instrument strength/validity. | Requires a strong, valid instrument, which is rare. Produces wide confidence intervals. |

| Target Trial Emulation (TTE) | Explicitly designs observational analysis to mimic the protocol of a hypothetical RCT. | All RCT protocol elements (eligibility, treatment strategies, assignment, outcomes, follow-up, analysis) can be emulated. | 0.69 (0.62, 0.77) | High when emulation is high-fidelity. Most robust to time-related biases. | Computationally intensive. Requires detailed, longitudinal data to emulate baseline randomization. |

Detailed Experimental Protocols

1. Protocol for Propensity Score Matching (PSM) Study:

- Data Source: Electronic Health Records from a federated network (e.g., TriNetX, CPRD).

- Cohort Definition: Patients with Type 2 Diabetes, initiated on SGLT2i or DPP-4i after [Date].

- Exclusion Criteria: Prior heart failure, end-stage renal disease.

- PS Model: Logistic regression with treatment (SGLT2i vs. DPP-4i) as outcome. Covariates: age, sex, BMI, HbA1c, comorbidities, concomitant medications.

- Matching: 1:1 nearest-neighbor matching without replacement, caliper = 0.2 SD of logit(PS).

- Balance Assessment: Standardized mean differences <0.1 for all covariates post-match.

- Outcome Analysis: Cox proportional hazards model in the matched cohort, with robust variance estimators.
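
The matching and balance-assessment steps can be sketched without any statistics package. The example below assumes propensity scores have already been estimated, uses greedy (not optimal) nearest-neighbor matching, and takes the caliper as 0.2 times the standard deviation of logit(PS) across both groups combined; the scores and ages are hypothetical. Packages such as MatchIt implement more refined variants.

```python
import math
import statistics


def logit(p):
    return math.log(p / (1 - p))


def match_nearest_neighbor(ps_treated, ps_control, caliper_sd=0.2):
    """Greedy 1:1 nearest-neighbor matching on logit(PS), without replacement.

    Returns a list of (treated_index, control_index) pairs; treated patients
    with no control inside the caliper are left unmatched.
    """
    logit_t = [logit(p) for p in ps_treated]
    logit_c = [logit(p) for p in ps_control]
    caliper = caliper_sd * statistics.pstdev(logit_t + logit_c)
    available = set(range(len(logit_c)))
    pairs = []
    for i, value in enumerate(logit_t):
        if not available:
            break
        j = min(available, key=lambda k: abs(logit_c[k] - value))
        if abs(logit_c[j] - value) <= caliper:
            pairs.append((i, j))
            available.remove(j)  # each control is used at most once
    return pairs


def standardized_mean_difference(x_treated, x_control):
    """SMD using the pooled standard deviation of the two groups."""
    pooled_sd = math.sqrt(
        (statistics.variance(x_treated) + statistics.variance(x_control)) / 2
    )
    return (statistics.mean(x_treated) - statistics.mean(x_control)) / pooled_sd


# Hypothetical propensity scores and ages for SGLT2i (treated) and DPP-4i (control).
ps_treated = [0.30, 0.45, 0.62, 0.90]
ps_control = [0.28, 0.33, 0.47, 0.60, 0.15]
age_treated = [61, 58, 55, 49]
age_control = [62, 60, 57, 56, 70]

pairs = match_nearest_neighbor(ps_treated, ps_control)
smd_before = standardized_mean_difference(age_treated, age_control)
smd_after = standardized_mean_difference(
    [age_treated[i] for i, _ in pairs], [age_control[j] for _, j in pairs]
)
print(pairs, round(smd_before, 2), round(smd_after, 2))
```

The treated patient with a score of 0.90 has no control within the caliper and is dropped. This is how matching trades sample size, and sometimes generalizability, for covariate balance.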

2. Protocol for Instrumental Variable (IV) Analysis:

- Data Source: Nationwide administrative claims database.

- Instrument: Regional variation in physician prescribing preference (percentage of SGLT2i prescriptions among all new SGLT2i/DPP-4i prescriptions within the physician's practice).

- IV Assumption Testing: Relevance (F-statistic >10), balance checks across instrument levels.

- Analysis Model: Two-stage least squares (2SLS) or two-stage residual inclusion for time-to-event data.

  - Stage 1: Regress actual treatment (SGLT2i=1, DPP-4i=0) on the instrument and all covariates.

  - Stage 2: Regress heart failure hospitalization on the predicted treatment from Stage 1 and covariates.
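
The two-stage logic can be demonstrated on simulated data in which an unmeasured confounder biases the naive estimate but leaves the instrument untouched. The sketch below simplifies the protocol considerably: no measured covariates, a continuous outcome in place of time-to-event hospitalization, and a simulated prescribing-preference instrument. Every parameter value is hypothetical.

```python
import random


def ols(x, y):
    """Return (slope, intercept) of a simple least-squares fit of y on x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def two_stage_least_squares(z, t, y):
    """2SLS with one instrument z, one treatment t, and no covariates."""
    slope1, intercept1 = ols(z, t)                  # Stage 1: treatment on instrument
    t_hat = [intercept1 + slope1 * zi for zi in z]  # predicted treatment
    slope2, _ = ols(t_hat, y)                       # Stage 2: outcome on prediction
    return slope2


def simulate(n=20000, true_effect=-0.10, seed=1):
    rng = random.Random(seed)
    z, t, y = [], [], []
    for _ in range(n):
        u = rng.gauss(0, 1)   # unmeasured confounder (e.g., frailty)
        zi = rng.random()     # prescriber preference, independent of u
        ti = 1 if 0.8 * zi + 0.3 * u + rng.gauss(0, 0.3) > 0.5 else 0
        yi = 0.30 + true_effect * ti + 0.10 * u + rng.gauss(0, 0.1)
        z.append(zi)
        t.append(ti)
        y.append(yi)
    return z, t, y


z, t, y = simulate()
naive_estimate, _ = ols(t, y)
iv_estimate = two_stage_least_squares(z, t, y)
print(f"naive = {naive_estimate:.3f}, IV = {iv_estimate:.3f}, truth = -0.100")
```

In this setup the confounder raises both the chance of treatment and the outcome, so the naive regression masks the benefit while the IV estimate should land near the simulated truth. With real data the exclusion restriction cannot be verified this way, and the standard errors from a manual second stage are not valid, so dedicated IV routines should be used for inference.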

3. Protocol for Target Trial Emulation (TTE):

- Step 1 - Protocol Specification: Draft a full RCT protocol for the causal question.

- Step 2 - Eligibility: Apply inclusion/exclusion criteria to the database at a baseline time (index date).

- Step 3 - Treatment Assignment: Classify patients into treatment strategies (e.g., "Initiate SGLT2i" vs. "Initiate DPP-4i") based on the first prescription after meeting eligibility.

- Step 4 - Follow-up: Start follow-up at index date. Censor at treatment discontinuation/switching, loss to follow-up, or study end.

- Step 5 - Outcome: Define heart failure hospitalization (ICD code) occurring during follow-up.

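- Step 6 - Analysis: Use an estimator that adjusts for baseline confounding (e.g., propensity score weighting). For a per-protocol effect, add inverse probability of censoring weights (IPCW) to address the selection bias, driven by time-varying factors, that censoring at discontinuation or switching introduces. For an intention-to-treat analog, follow patients according to their initial strategy regardless of later treatment changes.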
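
Steps 2 through 5 amount to turning raw records into one follow-up row per eligible patient. The following sketch shows one way that mapping might look; the record layout, field names, and day-number encoding are all hypothetical, and censoring follows Step 4 as written (at discontinuation, loss to follow-up, or study end).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PatientRecord:
    patient_id: str
    eligible: bool                        # Step 2: met criteria at index date
    first_rx_drug: Optional[str]          # "SGLT2i", "DPP-4i", or None
    index_day: int                        # day of first qualifying prescription
    discontinuation_day: Optional[int]    # discontinuation or switch, if any
    hf_hospitalization_day: Optional[int]
    last_observed_day: int                # loss to follow-up or study end

@dataclass
class FollowUp:
    patient_id: str
    strategy: str
    days_at_risk: int
    event: bool

def emulate(record: PatientRecord) -> Optional[FollowUp]:
    """Map one record onto the emulated trial; return None if not included."""
    if not record.eligible or record.first_rx_drug not in ("SGLT2i", "DPP-4i"):
        return None
    # Step 4: follow-up starts at the index date and ends at the first censoring event.
    censor_day = record.last_observed_day
    if record.discontinuation_day is not None:
        censor_day = min(censor_day, record.discontinuation_day)
    # Step 5: count the outcome only if it occurs during follow-up.
    hf = record.hf_hospitalization_day
    event = hf is not None and record.index_day < hf <= censor_day
    end_day = hf if event else censor_day
    return FollowUp(
        patient_id=record.patient_id,
        strategy=f"Initiate {record.first_rx_drug}",   # Step 3
        days_at_risk=end_day - record.index_day,
        event=event,
    )

cohort = [
    PatientRecord("A", True, "SGLT2i", 0, 100, 150, 365),   # event after censoring
    PatientRecord("B", True, "DPP-4i", 10, None, 200, 365), # event during follow-up
    PatientRecord("C", False, "SGLT2i", 0, None, None, 365),# fails eligibility
]
rows = [row for row in (emulate(r) for r in cohort) if row is not None]
for row in rows:
    print(row)
```

Patient A illustrates why the weighting in Step 6 matters: the hospitalization on day 150 is not counted because follow-up was censored at discontinuation on day 100.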

Methodological Workflow Diagrams

Title: Three Analytic Workflows for Causal Inference

Title: Instrumental Variable Causal Pathway

The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function in Observational Causal Analysis |

|---|---|

| High-Quality, Linkable Databases (e.g., EHRs, Claims, Registries) | Provides the raw longitudinal data on patient demographics, treatments, covariates, and outcomes. Foundation for all analyses. |

| Common Data Model (CDM) Tools (e.g., OHDSI/OMOP CDM, Sentinel) | Standardizes heterogeneous data sources into a consistent format, enabling reproducible analytics and distributed network studies. |

| PSM & Weighting Software Packages (e.g., MatchIt, PSweight in R; PROC PSMATCH in SAS) | Automates the creation of balanced comparison groups via matching, weighting, or stratification based on propensity scores. |

| IV Analysis Estimators (Two-Stage Models, GMM) | Implement instrumental variable regression; available in statistical software (e.g., ivreg, ivtools in R; PROC SYSLIN in SAS). |

| Clone-Censor-Weighting Algorithms (for TTE per-protocol analysis) | Implements complex IPCW to adjust for time-varying confounding in per-protocol analyses emulated from observational data. |

| Sensitivity Analysis Packages (e.g., EValue, sensemakr) | Quantifies how strong unmeasured confounding would need to be to nullify a study's conclusion, assessing robustness. |
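
As a concrete illustration of the sensitivity-analysis row, the E-value for a risk ratio RR (taken as its inverse when RR is below 1) is RR + sqrt(RR × (RR − 1)). The short sketch below computes it by hand; the function names are illustrative and are not the API of the EValue package.

```python
import math

def e_value(risk_ratio):
    """E-value for a point estimate on the risk-ratio scale."""
    if risk_ratio <= 0:
        raise ValueError("risk ratio must be positive")
    rr = risk_ratio if risk_ratio >= 1.0 else 1.0 / risk_ratio
    return rr + math.sqrt(rr * (rr - 1.0))

def e_value_for_ci(lower, upper):
    """E-value for the confidence limit closest to the null (1.0)."""
    if lower <= 1.0 <= upper:
        return 1.0  # the interval already includes the null
    closest = lower if lower > 1.0 else upper
    return e_value(closest)

print(round(e_value(2.0), 2))              # 3.41
print(round(e_value(0.5), 2))              # 3.41 (protective effects are inverted)
print(round(e_value_for_ci(1.5, 2.5), 2))  # 2.37
```

An E-value of 3.41 means an unmeasured confounder would need to be associated with both treatment and outcome by a risk ratio of at least 3.41 each to fully explain away an observed risk ratio of 2.0.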

Optimizing Observational Study Design to Minimize Bias and Resemble RCT Conditions

Observational studies are crucial for real-world evidence generation, but their susceptibility to bias necessitates methodological rigor to approach the causal validity of Randomized Controlled Trials (RCTs). This guide compares key design and analytical strategies, supported by experimental data, for aligning observational research with RCT standards.

Comparison of Design & Analytical Strategies

The following table summarizes the performance of various methodologies in mitigating specific biases, based on recent empirical evaluations and simulation studies.

Table 1: Performance of Methods in Reducing Bias Relative to RCT Benchmark

| Method / Strategy | Target Bias | Estimated Bias Reduction* (%) | Key Strength | Key Limitation |

|---|---|---|---|---|

| Active Comparator New User Design | Confounding by Indication | 85-92 | Mimics RCT's treatment assignment point. | Requires comparable drug alternatives. |

| High-Dimensional Propensity Score (HDPS) | Measured Confounding | 78-88 | Data-adaptive capture of many covariates. | Risk of overfitting with sparse outcomes. |

| Target Trial Emulation Framework | Time-Related Biases (Immortal, Prevalent User) | 90-95 | Explicit protocol mirroring a target RCT. | Complex, requires precise temporal data. |

| Instrumental Variable (IV) Analysis | Unmeasured Confounding | 60-75 | Addresses hidden confounders if valid IV exists. | Strong, often untestable assumptions. |

| Self-Controlled Case Series (SCCS) | Time-Invariant Confounding | 95-98 | Eliminates between-person confounding. | Suitable only for acute, transient outcomes. |

| Negative Control Outcomes | Unmeasured Confounding Detection | N/A (Diagnostic) | Empirical test for residual confounding. | Does not correct bias, only indicates it. |

*Reduction in bias magnitude compared to a conventional cohort design, as estimated from recent methodological benchmark studies (Franklin et al., 2020; Wang et al., 2023). Values are illustrative ranges.

Detailed Experimental Protocols

Protocol 1: Benchmarking via Simulation Studies

A standard approach to evaluate methodological performance.

- Data Generation: Simulate a population of N=100,000 patients with known covariates, a binary treatment assignment mechanism (with confounding), and a specified outcome model. The true treatment effect (e.g., Hazard Ratio = 1.5) is pre-defined.

- Analysis Arms: Apply different observational methods (e.g., propensity score matching, HDPS, IV) to the simulated dataset to estimate the treatment effect.

- Benchmarking: Compare each method's estimate to the known true effect built into the simulation, which is the value an ideal RCT would recover. Calculate metrics such as bias, mean squared error, and 95% confidence interval coverage.

- Iteration: Repeat the process over 1,000 Monte Carlo simulations to obtain performance distributions.
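
A scaled-down version of this benchmarking loop is sketched below. To keep it fast and dependency-free, it departs from the protocol in several labeled ways: the outcome is continuous and the effect is a mean difference (not a hazard ratio), there is a single binary confounder, and the sample size and number of replications are much smaller. The two estimators compared are a naive difference in means and a confounder-stratified estimate.

```python
import random
import statistics

TRUE_EFFECT = 1.5  # pre-defined true mean difference (hypothetical)

def simulate(n, rng):
    """Generate (confounder, treatment, outcome) rows with confounded assignment."""
    rows = []
    for _ in range(n):
        c = 1 if rng.random() < 0.5 else 0
        t = 1 if rng.random() < (0.7 if c else 0.3) else 0
        y = TRUE_EFFECT * t + 2.0 * c + rng.gauss(0.0, 1.0)
        rows.append((c, t, y))
    return rows

def mean_diff(rows):
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return statistics.fmean(treated) - statistics.fmean(control)

def naive_estimate(rows):
    return mean_diff(rows)

def stratified_estimate(rows):
    """Average the within-stratum differences, weighted by stratum size."""
    estimate = 0.0
    for level in (0, 1):
        stratum = [r for r in rows if r[0] == level]
        estimate += (len(stratum) / len(rows)) * mean_diff(stratum)
    return estimate

def benchmark(estimator, n=1000, replications=200, seed=2024):
    """Return (bias, mean squared error) over Monte Carlo replications."""
    rng = random.Random(seed)
    estimates = [estimator(simulate(n, rng)) for _ in range(replications)]
    bias = statistics.fmean(estimates) - TRUE_EFFECT
    mse = statistics.fmean([(e - TRUE_EFFECT) ** 2 for e in estimates])
    return bias, mse

naive_bias, naive_mse = benchmark(naive_estimate)
adjusted_bias, adjusted_mse = benchmark(stratified_estimate)
print(f"naive:      bias={naive_bias:.3f}, MSE={naive_mse:.3f}")
print(f"stratified: bias={adjusted_bias:.3f}, MSE={adjusted_mse:.3f}")
```

Because both estimators see the same simulated datasets (same seed), the difference in bias reflects the method, not sampling noise.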

Protocol 2: Target Trial Emulation

A framework to structure observational analysis like an RCT protocol.

- Protocol Specification: Pre-specify all elements of a target RCT: eligibility criteria, treatment strategies (initiation, dosing), assignment procedure, outcome, follow-up start and end, causal contrast (intention-to-treat or per-protocol), and analysis plan.

- Data Mapping: Map elements from the observational database (e.g., claims, EHR) onto the target trial protocol. Define explicit algorithms for each.

- Clone-Censor-Weight Analysis:

- Clone: Create copies ("clones") of eligible individuals at baseline, assigning them to each treatment strategy.

- Censor: Follow each clone until it deviates from its assigned strategy, experiences the outcome event, or reaches the administrative end of data; clones that deviate are censored at the time of deviation.

- Weight: Use inverse probability weighting to adjust for censoring due to strategy deviation (per-protocol analysis).

- Estimation: Pool the weighted data and estimate the marginal hazard ratio using a pooled logistic or Cox model.
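
The clone and censor steps can be made concrete with a small sketch. It assumes two hypothetical strategies ("initiate" within a 30-day grace period versus "do not initiate"), measures time in days from baseline (day 0), and deliberately omits the weighting step, which in practice requires a fitted model for the probability of remaining uncensored over time.

```python
from dataclasses import dataclass
from typing import List, Optional

GRACE_DAYS = 30  # hypothetical grace period for the "initiate" strategy
STRATEGIES = ("initiate", "do not initiate")

@dataclass
class Person:
    person_id: str
    start_day: Optional[int]   # day treatment started; None if never started
    event_day: Optional[int]   # day of outcome event; None if no event
    end_day: int               # administrative end of data

@dataclass
class Clone:
    person_id: str
    strategy: str
    follow_up_days: int
    event: bool
    censored_for_deviation: bool

def deviation_day(person: Person, strategy: str) -> Optional[int]:
    """Day on which the observed data stop being consistent with the strategy."""
    if strategy == "initiate":
        if person.start_day is None or person.start_day > GRACE_DAYS:
            return GRACE_DAYS  # grace period ended without initiation
        return None
    return person.start_day    # "do not initiate": deviates when treatment starts

def make_clones(person: Person) -> List[Clone]:
    clones = []
    for strategy in STRATEGIES:
        stop = person.end_day
        deviation = deviation_day(person, strategy)
        censored = deviation is not None and deviation < stop
        if censored:
            stop = deviation
        event = person.event_day is not None and person.event_day <= stop
        if event:
            stop = person.event_day
            censored = False   # the event occurred before any censoring took effect
        clones.append(Clone(person.person_id, strategy, stop, event, censored))
    return clones

clones_p1 = make_clones(Person("p1", start_day=10, event_day=200, end_day=365))
clones_p2 = make_clones(Person("p2", start_day=None, event_day=None, end_day=365))
for clone in clones_p1 + clones_p2:
    print(clone)
```

Each person contributes one row per strategy. The rows flagged `censored_for_deviation` are the ones whose artificial censoring the inverse probability weights are meant to correct.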

Visualizing the Target Trial Emulation Workflow

Diagram Title: Target Trial Emulation Analytical Workflow

The Scientist's Toolkit: Key Research Reagents for Rigorous Observational Analysis

Table 2: Essential "Reagents" for Minimizing Bias

| Item / Solution | Category | Primary Function in Experiment |

|---|---|---|

| High-Quality EHR or Claims Database | Data Source | Provides longitudinal, real-world data on patient demographics, treatments, and outcomes. Foundation for emulation. |

| Common Data Model (e.g., OMOP CDM) | Data Standardization | Harmonizes disparate data sources into a consistent structure, enabling reproducible analytics. |

| Propensity Score Estimation Package (e.g., BigKnn, hdps) | Analytical Tool | Computes propensity scores or performs high-dimensional variable selection to balance measured covariates. |

| IPTW & IPCW Weighting Functions | Analytical Tool | Creates pseudo-populations where baseline and time-varying confounding are balanced. |

| Negative Control Outcome List | Validation Tool | A set of outcomes not plausibly caused by the treatment; used to empirically detect residual confounding. |

| Sensitivity Analysis Scripts (e.g., E-value Calculator) | Validation Tool | Quantifies how strong unmeasured confounding would need to be to explain away an observed association. |
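
To show what "creating a pseudo-population" means for the weighting row above, here is a minimal sketch of stabilized inverse probability of treatment weights on a hand-built toy dataset. The propensity scores are assumed known (in practice they are estimated), and the helper names are illustrative.

```python
def stabilized_iptw(treated, propensity):
    """Stabilized weights: P(T=t) divided by P(T=t | covariates)."""
    p_treated = sum(treated) / len(treated)
    weights = []
    for t, ps in zip(treated, propensity):
        if t == 1:
            weights.append(p_treated / ps)
        else:
            weights.append((1.0 - p_treated) / (1.0 - ps))
    return weights

def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Toy data: 8 patients with the covariate (75% treated), 8 without (25% treated).
covariate = [1] * 8 + [0] * 8
treated = [1] * 6 + [0] * 2 + [1] * 2 + [0] * 6
propensity = [0.75] * 8 + [0.25] * 8

weights = stabilized_iptw(treated, propensity)

def arm(values, arm_value):
    return [v for v, t in zip(values, treated) if t == arm_value]

raw_treated = sum(arm(covariate, 1)) / len(arm(covariate, 1))
raw_control = sum(arm(covariate, 0)) / len(arm(covariate, 0))
weighted_treated = weighted_mean(arm(covariate, 1), arm(weights, 1))
weighted_control = weighted_mean(arm(covariate, 0), arm(weights, 0))

print(f"covariate prevalence, unweighted: {raw_treated:.2f} vs {raw_control:.2f}")
print(f"covariate prevalence, weighted:   {weighted_treated:.2f} vs {weighted_control:.2f}")
```

Before weighting, the covariate is three times as common among the treated (0.75 vs 0.25); after weighting, both arms show the overall prevalence of 0.50.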

Optimizing observational studies to resemble RCT conditions requires a multi-faceted approach combining prespecified design frameworks like Target Trial Emulation with advanced analytical "reagents" such as HDPS and IPCW. While methods like SCCS and active comparator designs excel in specific scenarios, no single technique eliminates all bias. A toolkit approach, complemented by rigorous sensitivity and negative control analyses, is essential for generating robust real-world evidence suitable for informing clinical and regulatory decisions within the broader research thesis comparing RCT and observational study results.

This guide compares the performance of two key methodological approaches, Inclusive Recruitment (IR) and Pragmatic Trial Elements (PTE), for improving the generalizability of Randomized Controlled Trial (RCT) results. Framed within the broader thesis of RCT vs. observational study comparison, we assess how these strategies enhance the applicability of RCT findings to real-world populations, which is a common strength of observational designs. Data are synthesized from recent trials and methodological research.

Performance Comparison: Strategies vs. Traditional RCT

Table 1: Comparative Impact of Generalizability-Enhancing Strategies

| Feature | Traditional Explanatory RCT | RCT with Inclusive Recruitment (IR) | RCT with Pragmatic Elements (PTE) | Observational Study (Benchmark) |

|---|---|---|---|---|

| Population Representativeness | Low (Highly Selected) | High (Broad Eligibility) | Moderate-High (Real-World Setting) | High (Heterogeneous) |

| External Validity (Generalizability) | Low | Moderate-High | High | High (but with Confounding) |

| Internal Validity (Control of Bias) | Very High | High | Moderate-High | Low-Moderate |

| Typical Effect Size Estimate | Often Larger | More Moderate | More Moderate | Variable (Often Confounded) |

| Operational Feasibility/Cost | High Cost/Complex | Moderate-High Cost | Moderate Cost | Lower Cost |

| Primary Strength | Causal Inference | Demographic Generalizability | Practical Effectiveness Estimate | Real-World Data Scope |

Table 2: Quantitative Outcomes from Recent Comparative Trials

| Study (Year) | Intervention | Strategy Tested | Key Outcome Metric | Result vs. Traditional RCT |

|---|---|---|---|---|

| PRECIS-2 Analysis (2023) | Various | Pragmatic Elements (PE) Score | Applicability to Practice (1-5 scale) | PE Score ≥4 correlated with 32% higher clinician-rated applicability. |

| NIH INCLUDES Initiative (2024) | Cardiometabolic Drugs | Broad Eligibility Criteria | Participant Racial/Ethnic Diversity | Increased enrollment of underrepresented groups by 40-60%. |

| Pragmatic-COPD Trial (2023) | LAMA/LABA | Flexible Dosing & Usual Care Comparison | Real-World Adherence Rate | Adherence was 22% higher in pragmatic arm vs. strict protocol. |

| REACH RCT Sub-study | Depression Tx | Minimal Exclusions for Comorbidity | Treatment Effect in Complex Patients | Effect size reduced by 15% but applicability to comorbid patients improved. |

Experimental Protocols & Methodologies

Protocol 1: Implementing Inclusive Recruitment (IR)

Aim: To enroll a study population demographically and clinically representative of the target patient community.

- Community Partnership: Establish a stakeholder board including community clinicians, patient advocates, and community health leaders.

- Eligibility Design: Minimize exclusion criteria. Systematically review and justify each exclusion (e.g., allow mild-moderate renal impairment, common comorbidities).

- Recruitment Sites: Utilize diverse clinical settings (academic centers, community hospitals, primary care clinics).

- Outcome: Compare enrolled cohort demographics (age, sex, race, ethnicity, disease severity) to target real-world population using census or registry data (Chi-square tests).
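
The representativeness check in the last step can be run as a chi-square goodness-of-fit test. The sketch below computes the statistic by hand and compares it with tabulated 5% critical values; the category counts and target proportions are invented for illustration.

```python
# Upper 5% critical values of the chi-square distribution, by degrees of freedom.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}

def chi_square_gof(observed_counts, target_proportions):
    """Goodness-of-fit statistic for enrolled counts vs. target proportions."""
    if len(observed_counts) != len(target_proportions):
        raise ValueError("counts and proportions must have the same length")
    if abs(sum(target_proportions) - 1.0) > 1e-9:
        raise ValueError("target proportions must sum to 1")
    total = sum(observed_counts)
    statistic = 0.0
    for observed, proportion in zip(observed_counts, target_proportions):
        expected = total * proportion
        statistic += (observed - expected) ** 2 / expected
    degrees_of_freedom = len(observed_counts) - 1
    return statistic, degrees_of_freedom

# Hypothetical registry proportions for four demographic groups.
target = [0.60, 0.13, 0.19, 0.08]

# Hypothetical enrolled cohort of 400 that over-represents the first group.
statistic, df = chi_square_gof([300, 30, 50, 20], target)
differs = statistic > CRITICAL_05[df]
print(f"chi-square = {statistic:.2f}, df = {df}, differs from target: {differs}")

# A cohort that closely tracks the target population.
statistic_ok, df_ok = chi_square_gof([238, 54, 75, 33], target)
print(f"chi-square = {statistic_ok:.2f}, differs: {statistic_ok > CRITICAL_05[df_ok]}")
```

A non-significant result does not prove representativeness, so reporting the observed and expected proportions side by side remains useful.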

Protocol 2: Incorporating Pragmatic Trial Elements (PTE)

Aim: To test intervention effectiveness under routine clinical practice conditions.

- PRECIS-2 Tool: Use the PRECIS-2 wheel to design trial domains (eligibility, recruitment, setting, organization, flexibility-delivery, flexibility-adherence, follow-up, primary outcome, primary analysis) on a pragmatic-explanatory continuum.

- Intervention Delivery: Allow clinician discretion on dosing within a range. Use already-available clinical staff for delivery.

- Comparator: Active standard of care, not placebo, where ethically possible.

- Outcomes: Measure patient-centered outcomes relevant to clinical decisions (e.g., hospital readmission, quality of life, functional status) collected via electronic health records or routine clinical assessments.

- Analysis: Intention-to-treat analysis with minimal data imputation.
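
During design, the nine PRECIS-2 domain scores (each from 1, very explanatory, to 5, very pragmatic) can be recorded and summarized in a simple structure. The sketch below is one possible bookkeeping helper; the scores are hypothetical, and the mean is only a rough summary, since PRECIS-2 is intended to be read domain by domain.

```python
PRECIS2_DOMAINS = (
    "eligibility", "recruitment", "setting", "organization",
    "flexibility-delivery", "flexibility-adherence",
    "follow-up", "primary outcome", "primary analysis",
)

def summarize_precis2(scores):
    """Validate domain scores and return (mean score, explanatory-leaning domains)."""
    missing = [d for d in PRECIS2_DOMAINS if d not in scores]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    for domain in PRECIS2_DOMAINS:
        if scores[domain] not in (1, 2, 3, 4, 5):
            raise ValueError(f"score for {domain} must be an integer from 1 to 5")
    mean_score = sum(scores[d] for d in PRECIS2_DOMAINS) / len(PRECIS2_DOMAINS)
    explanatory = [d for d in PRECIS2_DOMAINS if scores[d] <= 2]
    return mean_score, explanatory

# Hypothetical design: pragmatic overall, but with intensive follow-up visits.
design_scores = {
    "eligibility": 4, "recruitment": 5, "setting": 4, "organization": 3,
    "flexibility-delivery": 4, "flexibility-adherence": 5,
    "follow-up": 2, "primary outcome": 5, "primary analysis": 5,
}
mean_score, explanatory_domains = summarize_precis2(design_scores)
print(f"mean PRECIS-2 score: {mean_score:.2f}")
print(f"domains leaning explanatory: {explanatory_domains}")
```

Flagging low-scoring domains makes it easier to justify, or revisit, the design choices that pull the trial toward the explanatory end.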

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Methodological Tools for Generalizable RCTs

| Item | Function & Application |

|---|---|

| PRECIS-2 Toolkit | A 9-domain tool (wheel) to visually design and communicate how pragmatic or explanatory a trial is. |

| PCORI Methodology Standards | A comprehensive set of standards for comparative clinical effectiveness research, emphasizing patient-centeredness and generalizability. |

| ICMJE Guidelines on Trial Registration | Mandate trial registration, which supports unbiased reporting and transparent assessment of eligibility criteria. |

| FDA Diversity Plans (2022+) | Regulatory framework requiring sponsors to submit plans for enrolling participants from underrepresented racial/ethnic groups. |

| Pragmatic-Explanatory Continuum Indicator Summary (PRECIS) | The original tool to help trialists design trials that match their stated purpose. |

Visualizing the Generalizability Enhancement Pathway

Title: Pathway to a Generalizable RCT

Title: RCT Generalizability Design Workflow